Introduction

Artificial intelligence (AI) has had and will continue to have a central role in countless aspects of life, livelihood, and liberty. AI is bringing forth a sea change that is not limited to technical domains but is a truly sociotechnical phenomenon affecting healthcare, education, commerce, finance, and criminal justice, not to mention day-to-day life. AI offers both promise and perils. A report published by Martha Lane Fox’s Doteveryone think tank reveals that 59% of tech workers have worked on products they felt were harmful to society, and more than 25% of workers in AI who had such an experience quit their jobs as a result. This was particularly marked in relation to AI products. The rise of activism against the negative social impacts of big tech, which has been regarded as one of the few current mechanisms to keep big tech companies in check, has brought Social Responsibility of AI into the spotlight of the media, the general public, and AI technologists and researchers. Even researchers in universities and research institutes are trying hard to rectify the mistakes made by algorithms. Stanford’s COVID-19 vaccine allocation algorithm, for example, prioritized older employees over front-line workers, turning much of our attention again to the transparency and fairness of AI.

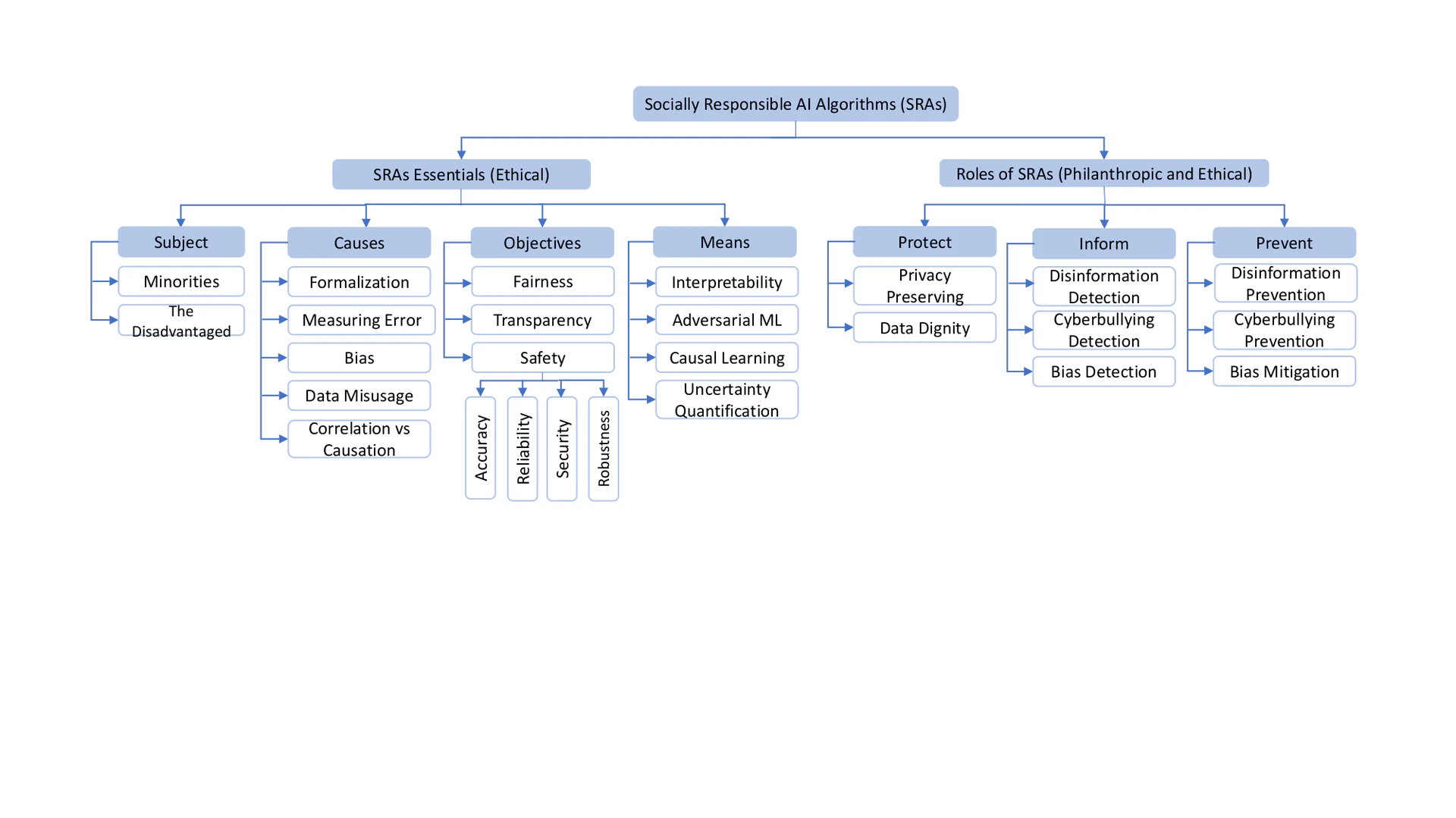

Research directed towards developing fair, transparent, accountable, and ethical AI algorithms has burgeoned, with a focus on decision-making algorithms such as scoring or classification to mitigate unwanted bias and achieve fairness. However, this narrow subset of research risks blinding us to the challenges and opportunities presented by the full scope of AI. To identify potential higher-order effects on safety, privacy, and society at large, it is critical to think beyond algorithmic bias and to capture all the connections among different aspects related to AI algorithms. Therefore, this survey complements prior work through a holistic understanding of the relations between AI systems and humans. In this work, we begin by introducing an inclusive definition of Social Responsibility of AI. Drawing on theories in business research, we then present a pyramid of Social Responsibility of AI that outlines four specific AI responsibilities in a hierarchy. This is adapted from the pyramid proposed for Corporate Social Responsibility (CSR) by Carroll (1991). In the second part of the survey, we review major aspects of AI algorithms and provide a systematic framework, Socially Responsible AI Algorithms (SRAs), that aims to understand the connections among these aspects. In particular, we examine the subjects and causes of socially indifferent AI algorithms (we define “indifferent” as the complement of responsible rather than “irresponsible”), define the objectives, and introduce the means by which we can achieve SRAs. We further discuss how to leverage SRAs to improve the daily life of human beings and address challenging societal issues through protecting, informing, and preventing/mitigating. We illustrate these ideas using recent studies on several emerging societal challenges. The survey concludes with open problems and challenges in SRAs.

Differences from Existing Surveys. Some recent surveys focus on specific topics such as bias and fairness, interpretability/explainability, and privacy-preservation. These surveys successfully draw great attention to the social responsibility of AI, leading to further developments in this important line of research. However, as indispensable components of socially responsible AI, these topics have been presented in their own self-contained ways. These works pave the way for looking at socially responsible AI holistically. Therefore, our survey aims to frame socially responsible AI with a more systematic view that goes beyond discussion of each independent line of research. We summarize our contributions as follows:

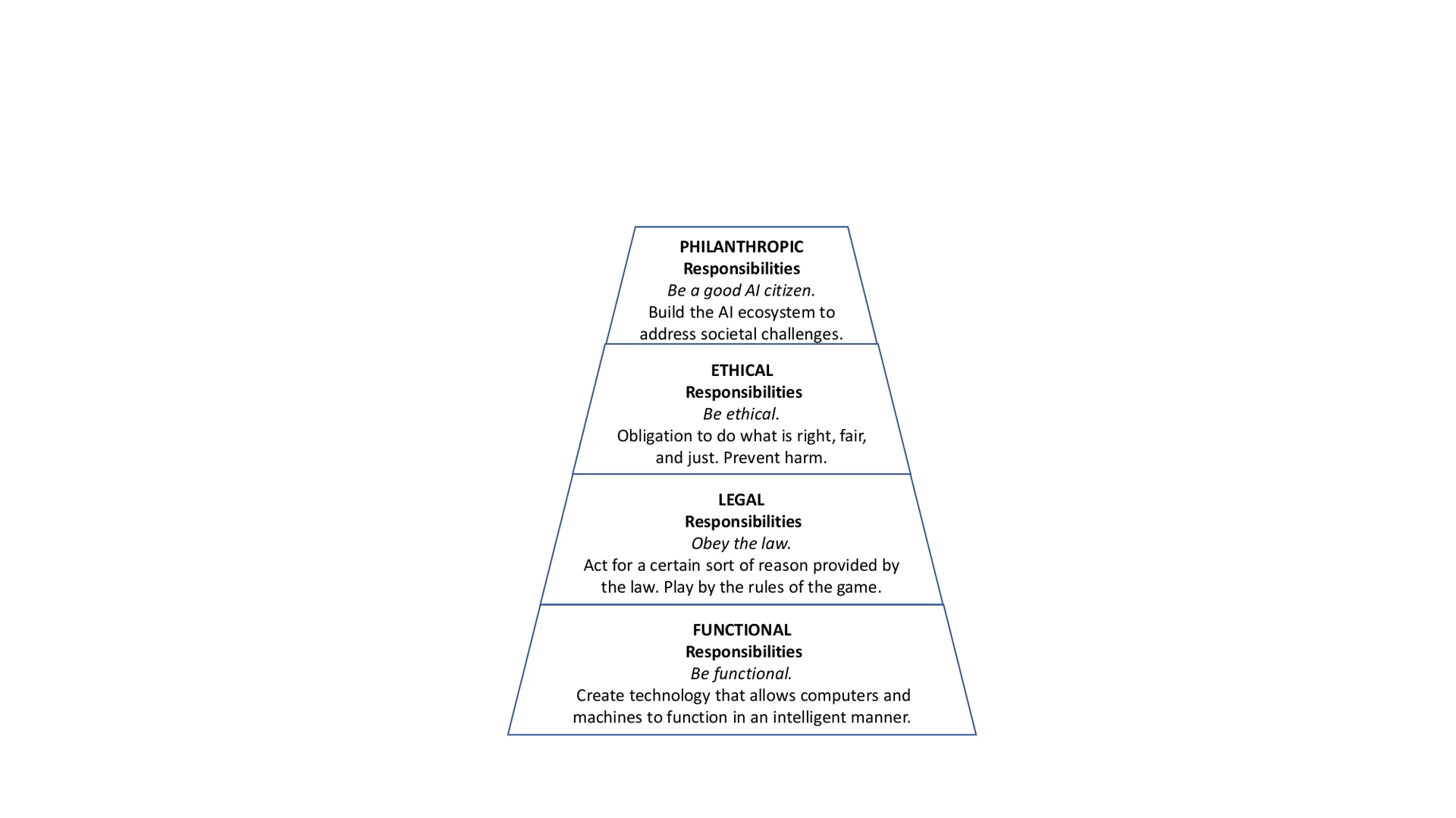

- We formally define social responsibility of AI with three specified dimensions: principles, means, and objectives. We then propose the pyramid of social responsibility of AI, describing its four fundamental responsibilities: functional, legal, ethical, and philanthropic responsibilities. The pyramid embraces the entire range of AI responsibilities involving efforts from various disciplines.

- We propose a systematic framework that discusses the essentials of socially responsible AI algorithms (SRAs), including their subjects, causes, means, and objectives, and the roles of SRAs in protecting users, informing them, and shielding them from the negative impact of AI. This framework subsumes existing topics such as fairness and interpretability.

- We look beyond prior research in socially responsible AI and identify an extensive list of open problems and challenges, ranging from understanding why we need AI systems to showing the need to define new AI ethics principles and policies. We hope our discussions can spark future research on SRAs.

Intended Audience and Paper Organization. This survey is intended for AI researchers, AI technologists, and researchers and practitioners from other disciplines who would like to contribute their expertise to making AI more socially responsible. The rest of the survey is organized as follows: Section 2 introduces the definition and the pyramid of social responsibility of AI, and compares definitions of similar concepts. Section 3 discusses the framework of socially responsible algorithms and its essentials, followed by Section 4, which illustrates the roles of SRAs using several emerging societal issues as examples. Section 5 details the open problems and challenges that socially responsible AI currently confronts. The last section concludes the survey.

Social Responsibility of AI

Social Responsibility of AI includes efforts devoted to addressing both technical and societal issues. While similar concepts (e.g., “Ethical AI”) repeatedly appear in the news, magazines, and scientific articles, “Social Responsibility of AI” has yet to be properly defined. In this section, we first attempt to provide an inclusive definition and then propose the Pyramid of Social Responsibility of AI to outline the various responsibilities of AI in a hierarchy: functional responsibilities, legal responsibilities, ethical responsibilities, and philanthropic responsibilities. Finally, we compare “Socially Responsible AI” with similar concepts.

What is Social Responsibility of AI?

Social Responsibility of AI refers to a human value-driven process where values such as Fairness, Transparency, Accountability, Reliability and Safety, Privacy and Security, and Inclusiveness are the principles; designing Socially Responsible AI Algorithms is the means; and addressing the social expectations of generating shared value – enhancing both AI’s ability and benefits to society – is the main objective.

Here, we define three dimensions of Social Responsibility of AI: the principles lay the foundations for ethical AI systems; the means to reach the overarching goal of Social Responsibility of AI is to develop Socially Responsible AI Algorithms; and the objective of Social Responsibility of AI is to improve both AI’s capability and humanity with the second being the proactive goal.

The Pyramid of Social Responsibility of AI

Social Responsibility of AI should be framed in such a way that the entire range of AI responsibilities is embraced. Adapting Carroll’s Pyramid of CSR to the AI context, we suggest four kinds of social responsibilities that constitute the Social Responsibility of AI: functional, legal, ethical, and philanthropic responsibilities, as shown in the pyramid figure. By modularizing AI responsibilities, we hope to help AI technologists and researchers reconcile these obligations and simultaneously fulfill all the components in the pyramid. All of these responsibilities have always existed, but functional responsibilities have been the main consideration until recently. Each type of responsibility requires close consideration.

The pyramid portrays the four components of Social Responsibility of AI, beginning with the basic building-block notion that the functional competence of AI undergirds all else. Functional responsibilities require AI systems to perform in a manner consistent with profit maximization, operating efficiency, and other key performance indicators. Meanwhile, AI is expected to obey the law, which codifies the acceptable and unacceptable behaviors in our society. That is, legal responsibilities require AI systems to perform in a manner consistent with the expectations of government and law. All AI systems should at least meet the minimal legal requirements. At their most fundamental level, ethical responsibilities are the obligation to do what is right, just, and fair, and to prevent or mitigate negative impact on stakeholders (e.g., users, the environment). To fulfill their ethical responsibilities, AI systems need to perform in a manner consistent with societal expectations and ethical norms, which cannot be compromised in order to achieve AI’s functional responsibilities. Finally, under philanthropic responsibilities, AI systems are expected to be good AI citizens and to contribute to tackling societal challenges such as cancer and climate change. In particular, it is important for AI systems to perform in a manner consistent with the philanthropic and charitable expectations of society to enhance people’s quality of life. The distinguishing feature between ethical and philanthropic responsibilities is that the latter are not expected in an ethical sense. For example, while communities desire AI systems to be applied to humanitarian projects or purposes, they do not regard the AI systems as unethical if they do not provide such services. We explore the nature of Social Responsibility of AI by focusing on its components to help AI technologists reconcile these obligations. Though these four components are depicted as separate concepts, they are not mutually exclusive. It is necessary for AI technologists and researchers to recognize that these obligations are in a constant but dynamic tension with one another.

Comparisons of Similar Concepts

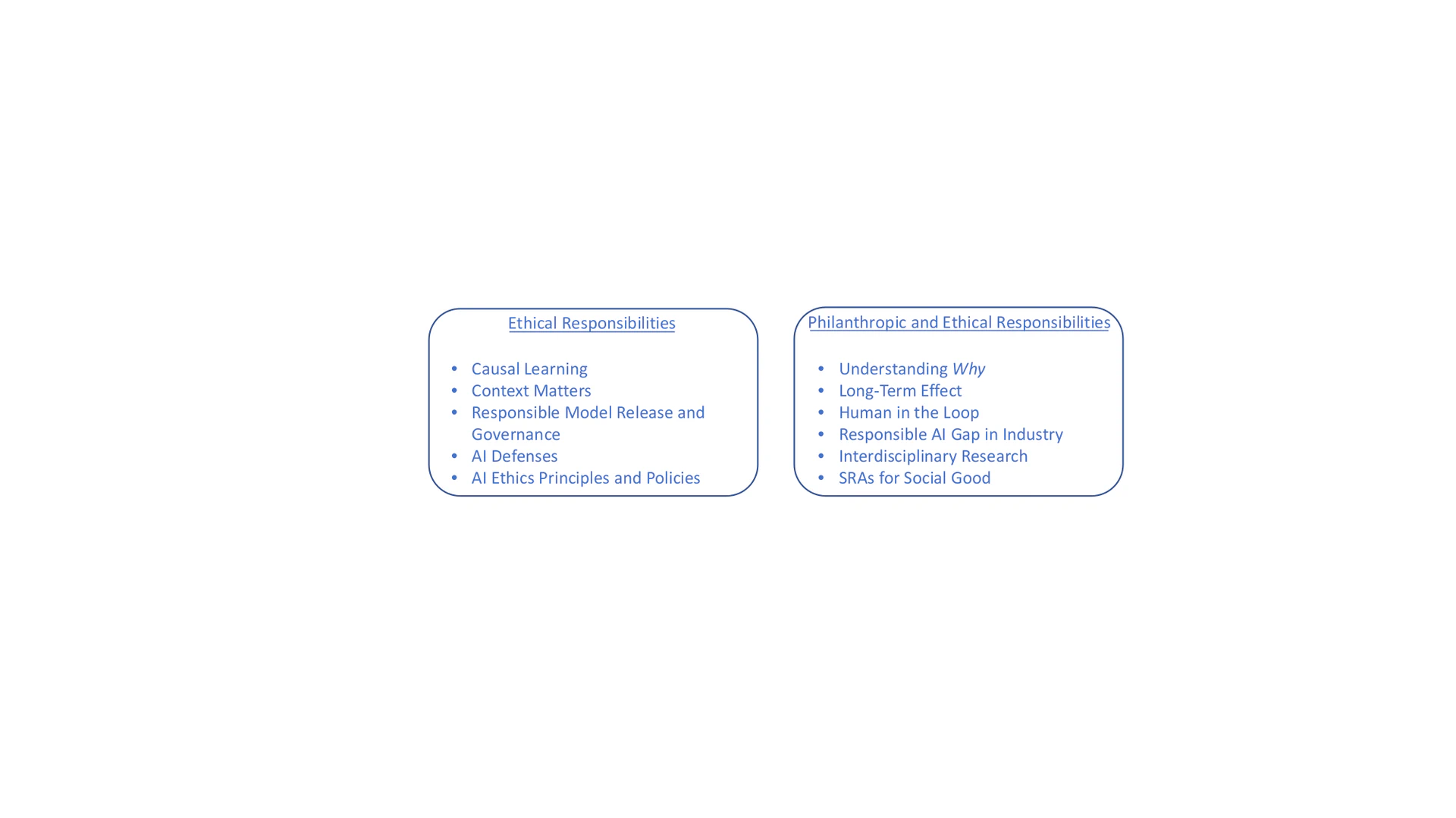

Based on the definition above and the pyramid of social responsibility of AI, we compare Socially Responsible AI with other similar concepts, as illustrated in the comparison table. The results show that Socially Responsible AI holds a systematic view that subsumes existing concepts and further considers the fundamental responsibilities of AI systems (to be functional and legal) as well as their philanthropic responsibilities (to improve the quality of life of human beings and address challenging societal issues). In the rest of this survey, we focus our discussions on the ethical (Section 3, essentials of SRAs) and philanthropic (Section 4, roles of SRAs) responsibilities of AI, given that the functional and legal responsibilities are the usual focus of AI research and development. An overview of SRAs research is illustrated in the outline figure, which we will refer back to throughout the remainder of the survey. Importantly, in our view, the essentials of SRAs work toward ethical responsibilities, and their roles in society encompass both ethical and philanthropic responsibilities.

Definitions of concepts similar to Socially Responsible AI.

The comparison table is available in the PDF version of the paper. Table footnote [2]: https://www.cognizant.com/glossary/human-centered-ai

Socially Responsible AI Algorithms (SRAs)

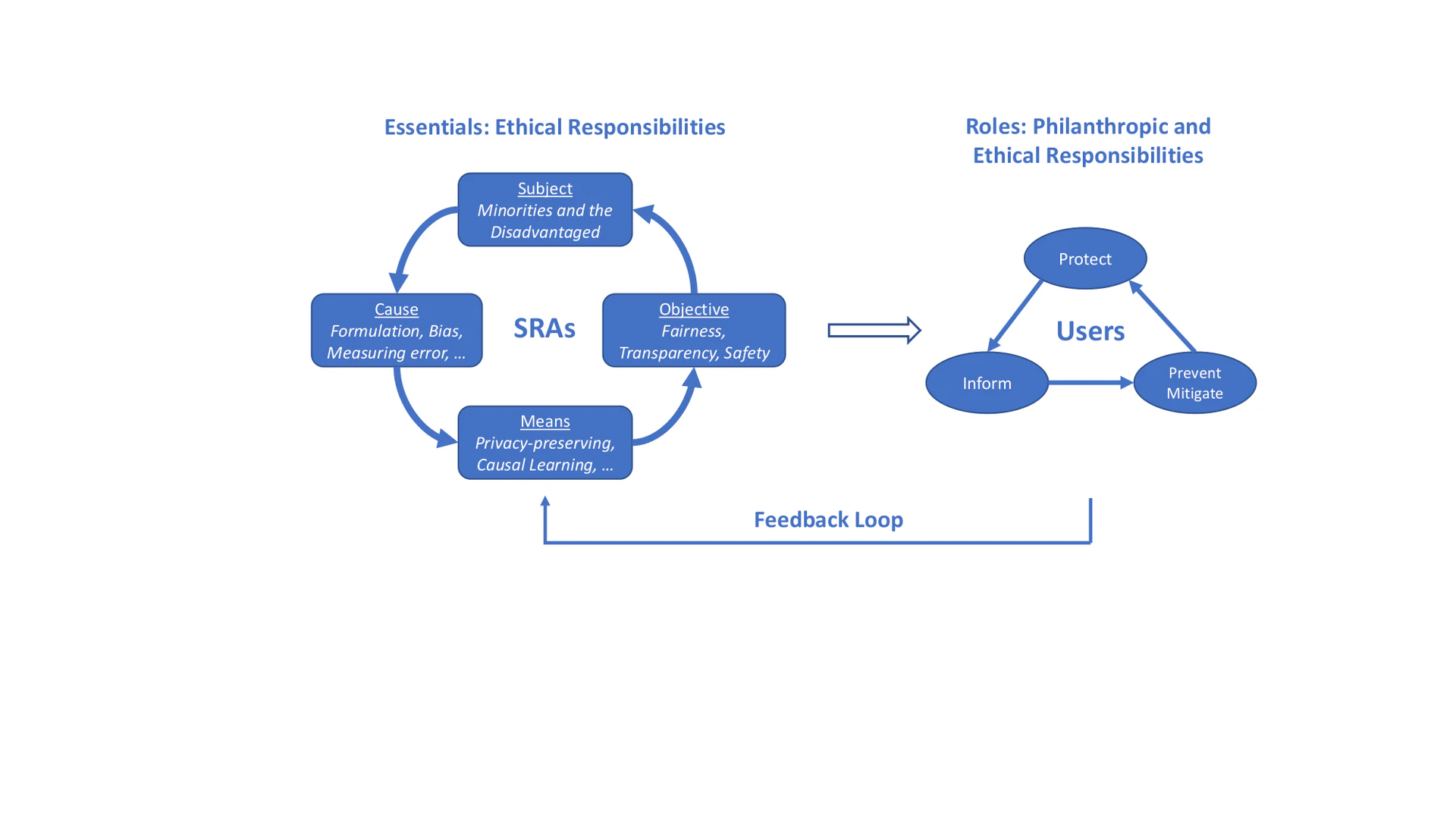

The role of AI technologists and researchers carries a number of responsibilities. The most obvious is developing accurate, reliable, and trustworthy algorithms that their users can depend on. Yet, this has never been a trivial task. For example, due to various types of human biases, e.g., confirmation bias, gender bias, and anchoring bias, AI technologists and researchers often inadvertently inject these same kinds of bias into the algorithms they develop, especially when using machine learning techniques. Consider supervised machine learning, a common technique for learning and validating algorithms through manually annotated data, loss functions, and related evaluation metrics. Numerous uncertainties (e.g., imbalanced data, ill-defined criteria for data annotation, over-simplified loss functions, and unexplainable results) potentially lurk in this “beautiful” pipeline and will eventually lead to negative outcomes such as bias and discrimination. With the growing reliance on AI in almost every field of our society, we must bring to the fore the vital question of how to develop Socially Responsible AI Algorithms. While conclusive answers are yet to be found, we attempt to provide a systematic framework of SRAs (illustrated in the SRAs framework figure) to discuss the components of AI’s ethical responsibilities, the roles of SRAs in terms of AI’s philanthropic and ethical responsibilities, and the feedback from users routed back as inputs to SRAs. We hope to broaden future discussions on this subject. In this regard, we define SRAs as follows:

Socially Responsible AI Algorithms are intelligent algorithms that treat the needs of all stakeholders, especially minoritized and disadvantaged users, as the highest priority in order to make just and trustworthy decisions. Their obligations include protecting and informing users; preventing and mitigating negative impact; and maximizing the long-term beneficial impact. Socially Responsible AI Algorithms constantly receive feedback from users to continually accomplish the expected social values.

In this definition, we highlight that the functional (e.g., maximizing profits) and societal (e.g., transparency) objectives are integral parts of AI algorithms. SRAs aim to be socially responsible while still meeting and exceeding business objectives.

Subjects of Socially Indifferent AI Algorithms

Every human being can be a potential victim of socially indifferent AI algorithms. Mirroring society, those who suffer the most, both in frequency and severity, are minorities and disadvantaged groups such as black, indigenous, and people of color (BIPOC), and females. For example, Google mislabeled an image of two black people as “gorillas” and more frequently showed ads for high-paying jobs to males than to females. Similar gender bias was also found in the Facebook algorithms behind job ads. In domains with high-stakes decisions, e.g., financial services, healthcare, and criminal justice, it is not uncommon to identify instances where socially indifferent AI algorithms favor privileged groups. For example, the algorithm used in Correctional Offender Management Profiling for Alternative Sanctions (COMPAS) was found to be almost twice as likely to mislabel a black defendant as a future risk than a white defendant. Identifying the subjects of socially indifferent AI algorithms depends on the context. In another study, the journalistic organization ProPublica (https://www.propublica.org/) investigated algorithms that determine online prices for Princeton Review’s tutoring classes. The results showed that people who lived in higher-income areas were charged twice as much as the general public and as people living in zip codes with high population density. Asians were 1.8 times more likely to pay a higher price, regardless of their income. Analogously, these AI algorithms might put poor people who cannot afford internet service at a disadvantage because the algorithms have simply never seen such data samples during training.

When it comes to purpose-driven collection and use of data, each individual can be the subject of socially indifferent AI algorithms. Users’ personal data are frequently collected and used without their consent. Such data include granular details such as contact information, online browsing and session records, social media consumption, location, and so on. While most of us are aware that our data is being used, few have control over where and how the data is used, and by whom. The misuse of data and the lack of knowledge cause users to become victims of privacy leakage and distrust.

Causes of Socially Indifferent AI Algorithms

There are many potential factors that can cause AI algorithms to be socially indifferent. Here, we list several causes that have been frequently discussed in the literature: formalization, measuring errors, bias, data misuse, and correlation versus causation.

Formalization

AI algorithms encompass data formalization, label formalization, and the formalization of loss functions and evaluation metrics. We unconsciously commit to some frame of reference in each of these formalizations. Firstly, the social and historical context is often left out when transforming raw data into numerical feature vectors. Therefore, AI algorithms are trained on pre-processed data with important contextual information missing. Secondly, data annotation can be problematic for a number of reasons. For example, what are the criteria? Who defines the criteria? Who are the annotators? How can it be ensured that they all follow the criteria? What we have for model training are only proxies of the true labels. Thirdly, ill-formulated loss functions can also result in socially indifferent AI algorithms. Many loss functions are over-simplified to focus solely on maximizing profits and minimizing losses. The concerns of unethical optimization were recently discussed by Beale et al. (2019). Unknown to AI systems, certain strategies in the optimization space that stakeholders consider unethical may be selected to satisfy the simplified task requirements. Lastly, the use of inappropriate benchmarks for evaluation may push algorithms away from the overarching goal of the task and fuel injustice.
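To make the point about over-simplified objectives concrete, the following is a minimal sketch, not taken from the cited work. It assumes a tiny, hypothetical strategy space in which each strategy has an expected profit and a flag saying whether stakeholders find it acceptable; all names and numbers are invented for illustration.

```python
# Hypothetical strategy space: (name, expected_profit, acceptable_to_stakeholders).
# All names and numbers are invented for illustration.
STRATEGIES = [
    ("transparent pricing", 100.0, True),
    ("exploit inattentive users", 130.0, False),
    ("loyalty discount", 90.0, True),
]


def pick_by_profit_only(strategies):
    """Over-simplified objective: maximize profit and nothing else."""
    return max(strategies, key=lambda s: s[1])


def pick_with_ethical_constraint(strategies):
    """Same objective, restricted to strategies that stakeholders accept."""
    acceptable = [s for s in strategies if s[2]]
    return max(acceptable, key=lambda s: s[1])


naive_choice = pick_by_profit_only(STRATEGIES)
constrained_choice = pick_with_ethical_constraint(STRATEGIES)

print(naive_choice[0])        # exploit inattentive users
print(constrained_choice[0])  # transparent pricing
```

The profit-only objective has no way to see the acceptability flag, so it selects the strategy that stakeholders would reject. In practice the hard part is obtaining that flag at all, which this sketch simply assumes is given.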

Measuring Errors

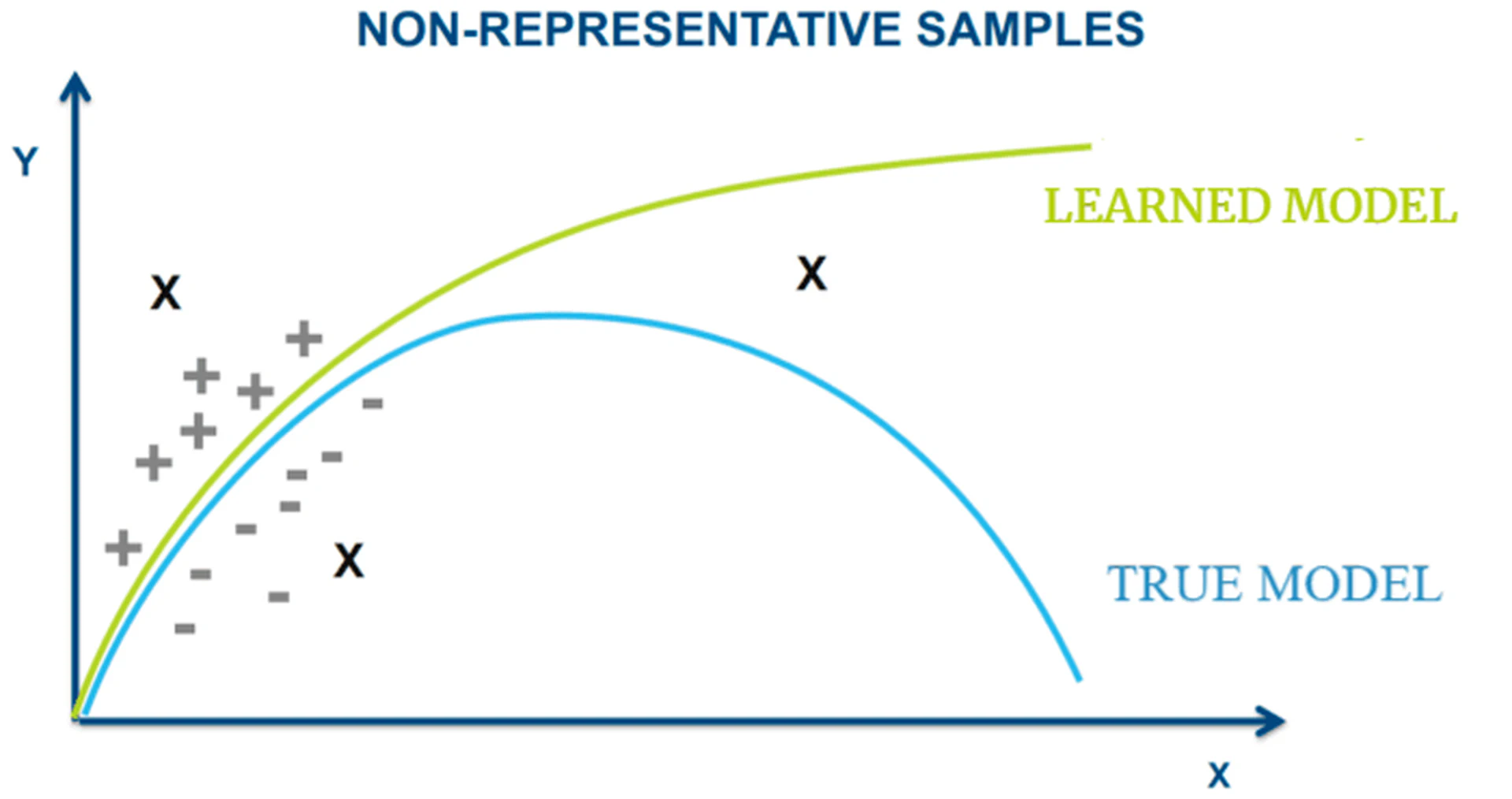

Another cause of socially indifferent AI algorithms is error in measuring algorithm performance. When reporting results, researchers typically claim that the proposed algorithms achieve a certain accuracy or F1 score. However, this rests on the assumptions that the training and test samples are representative of the target population and that their distributions are similar enough. Yet, how often do these assumptions hold in practice? As illustrated in the accompanying figure, with non-representative samples, the learned model can achieve zero training error and perform well on the test data at the initial stage. However, as more data is tested later, model performance deteriorates because the learned model does not represent the true model.
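The effect of a non-representative sample can be reproduced with a toy example. The sketch below is an illustration under stated assumptions, not the setup of the figure: the true decision boundary (`x > 4`), the one-dimensional threshold learner, and all data points are hypothetical.

```python
def true_label(x):
    """Assumed ground truth for this toy example: positive iff x > 4."""
    return int(x > 4.0)


def fit_threshold(samples):
    """Place the threshold midway between the largest negative and smallest positive."""
    largest_negative = max(x for x, y in samples if y == 0)
    smallest_positive = min(x for x, y in samples if y == 1)
    return (largest_negative + smallest_positive) / 2


def error_rate(threshold, samples):
    """Fraction of samples whose predicted label differs from the true label."""
    wrong = sum(int(x > threshold) != y for x, y in samples)
    return wrong / len(samples)


# Non-representative training sample: nothing observed between 3 and 8.
train = [(x, true_label(x)) for x in [0.0, 1.0, 2.0, 3.0, 8.0, 9.0, 10.0]]
# Representative population: evenly spaced points from 0 to 10.
population = [(i * 0.5, true_label(i * 0.5)) for i in range(21)]

threshold = fit_threshold(train)
train_error = error_rate(threshold, train)
population_error = error_rate(threshold, population)

print(threshold, train_error, round(population_error, 3))
```

The learned threshold (5.5) separates the training sample perfectly, yet it misclassifies every population point that falls between the true boundary and the learned one, a region the training sample never covered.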

Bias

Bias is one of the most discussed topics regarding responsible AI. Here we focus on data bias, automation bias, and algorithmic bias.

Data Bias. Data, especially big data, is often heterogeneous, i.e., it shows high variability in types and formats such as text, image, and video. The availability of multiple data sources brings unprecedented opportunities as well as unmistakable challenges. For instance, high-dimensional data such as text is infamous for the danger of overfitting and the curse of dimensionality. Additionally, it is rather challenging to find a subset of features that are predictive but uncorrelated. The number of samples required for generalization also grows proportionally with the feature dimension. One example is how the U.S. National Security Agency tried to use AI algorithms to identify potential terrorists. The Skynet project collected cellular network traffic in Pakistan and extracted 80 features for each cell phone user, with only 7 known terrorists. The algorithm ended up identifying an Al Jazeera reporter covering Al Qaeda as a potential terrorist. Data heterogeneity also violates the well-known i.i.d. (independent and identically distributed) assumption made by most learning algorithms. Therefore, training these algorithms on heterogeneous data can produce undesired results. Imbalanced subgroups are another source of data bias. As illustrated in prior work, regression analysis based on subgroups with balanced fitness levels suggests a positive correlation between BMI and daily pasta calorie intake, whereas analysis based on less balanced data shows almost no relationship.

Automation Bias. This type of bias refers to our preference for results suggested by automated decision-making systems while ignoring contradictory information. If we rely too heavily on automated systems without giving additional thought to the final decisions, we might end up abdicating decision responsibility to AI algorithms.

Algorithmic Bias. Algorithmic bias refers to biases added purely by the algorithm itself. Some algorithms are inadvertently taught prejudices and unethical biases by societal patterns hidden in the data. Typically, models fit better to features that frequently appear in the data. For example, an automatic AI recruiting tool will learn to make decisions about an applicant for a software engineer position using observed patterns such as “experience”, “programming skills”, “degree”, and “past projects”. For a position where gender disparity is large, the algorithms mistakenly interpret this collective imbalance as a useful pattern in the data rather than undesirable noise that should have been discarded. Algorithmic bias is a systematic and repeatable error in an AI system that creates discriminatory outcomes, e.g., privileging wealthy users over others. It can amplify, operationalize, and even legitimize institutional bias.

Data Misuse

Data is the fuel and new currency that has empowered tremendous progress in AI research. Search engines have to rely on data to craft precisely personalized recommendations that improve the online experience of consumers, including online shopping, book recommendation, entertainment, and so on. However, users’ data are frequently misused without their consent or awareness. One example is the Facebook-Cambridge Analytica scandal, where millions of Facebook users’ personal data was collected by Cambridge Analytica without their consent. In a more recent study, researchers show that Facebook allows advertisers to exploit its users’ sensitive information for tailored ad campaigns. To make things worse, users often have no clue about where, how, and why their data is being used, and by whom. The lack of knowledge and choice over their data causes users to undervalue their personal data, and further creates issues such as privacy loss and distrust.

Correlation vs Causation

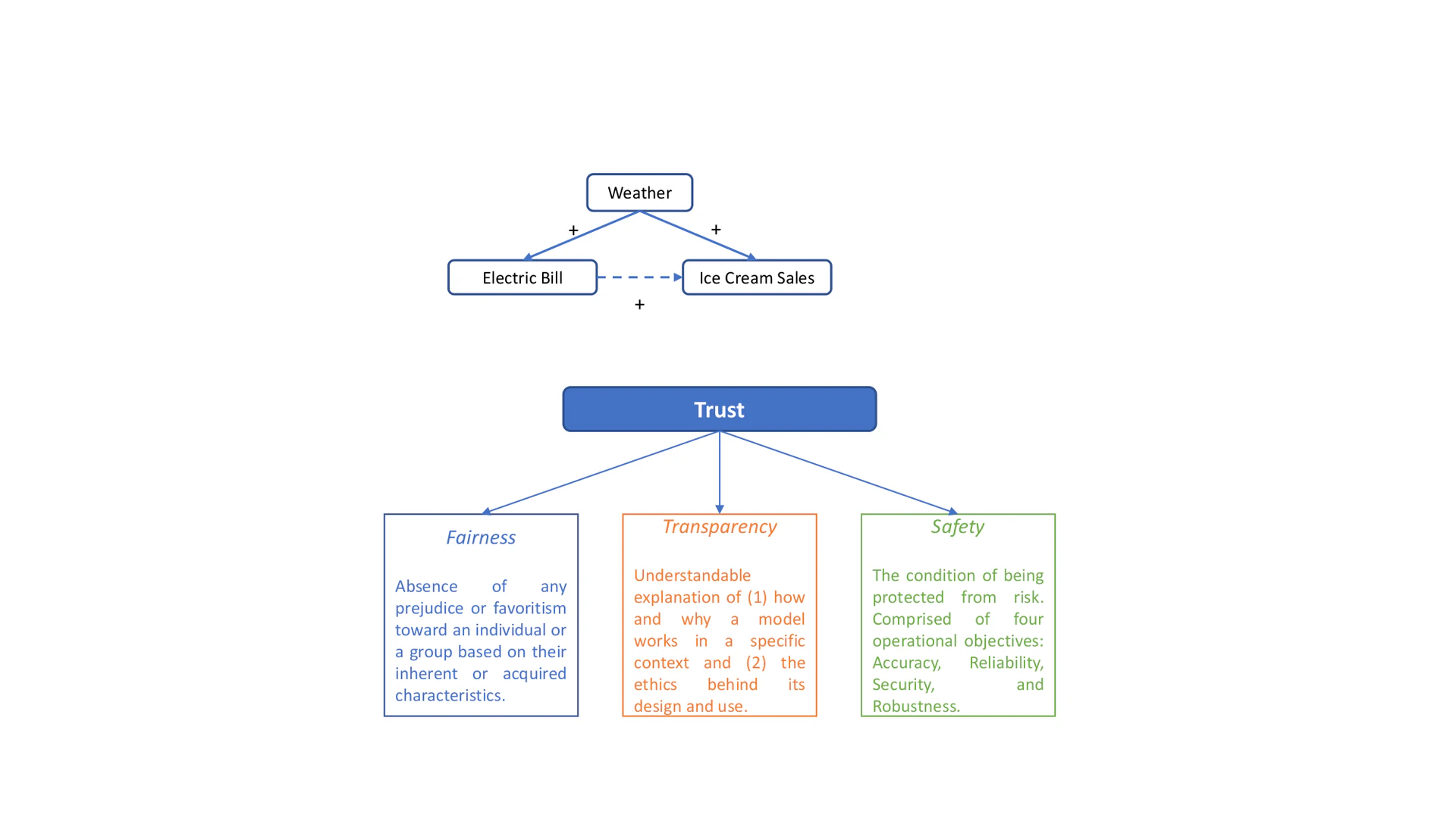

AI algorithms can become socially indifferent when correlation is misinterpreted as causation. For example, in the ice cream diagram, we observe a strong correlation between the electric bill of an ice cream shop and its ice cream sales. Clearly, a high electric bill cannot cause ice cream sales to increase. Rather, weather is the common cause of both the electric bill and the sales, i.e., high temperature causes both a high electric bill and increased ice cream sales. Weather, the confounder, creates a spurious correlation between the electric bill and ice cream sales. Causality is a generic relationship between the cause and the outcome. While correlation helps with prediction, causation is important for decision making. One typical example is Simpson’s Paradox. It describes a phenomenon where a trend or association observed in subgroups may be opposite to that observed when these subgroups are aggregated. For instance, in the study analyzing sex bias in graduate admissions at UC Berkeley, the admission rate was found to be higher for male applicants when using the entire data. However, when the admission data were separated and analyzed by department, female candidates had an equal or even higher admission rate than male candidates.
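Simpson’s Paradox is easy to reproduce numerically. The sketch below uses hypothetical applicant counts for two invented departments; these are not the UC Berkeley figures and were chosen only so that the reversal is visible.

```python
# Hypothetical (applicants, admitted) counts, invented for illustration only.
ADMISSIONS = {
    "dept_A": {"male": (100, 80), "female": (20, 18)},
    "dept_B": {"male": (20, 5), "female": (100, 30)},
}


def rate(applicants, admitted):
    """Admission rate for one group."""
    return admitted / applicants


def per_department_rates(data):
    """Admission rate of each sex within each department."""
    return {
        dept: {sex: rate(*counts) for sex, counts in groups.items()}
        for dept, groups in data.items()
    }


def aggregate_rates(data):
    """Admission rate of each sex after pooling all departments."""
    totals = {}
    for groups in data.values():
        for sex, (applicants, admitted) in groups.items():
            prev_applicants, prev_admitted = totals.get(sex, (0, 0))
            totals[sex] = (prev_applicants + applicants, prev_admitted + admitted)
    return {sex: rate(*counts) for sex, counts in totals.items()}


by_dept = per_department_rates(ADMISSIONS)
overall = aggregate_rates(ADMISSIONS)

print(by_dept)
print(overall)
```

In these invented numbers, female applicants have the higher admission rate in each department, yet the lower rate overall, because most of them applied to the department that admits fewer people. The department acts as the confounder here.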

Objectives of Socially Responsible AI Algorithms

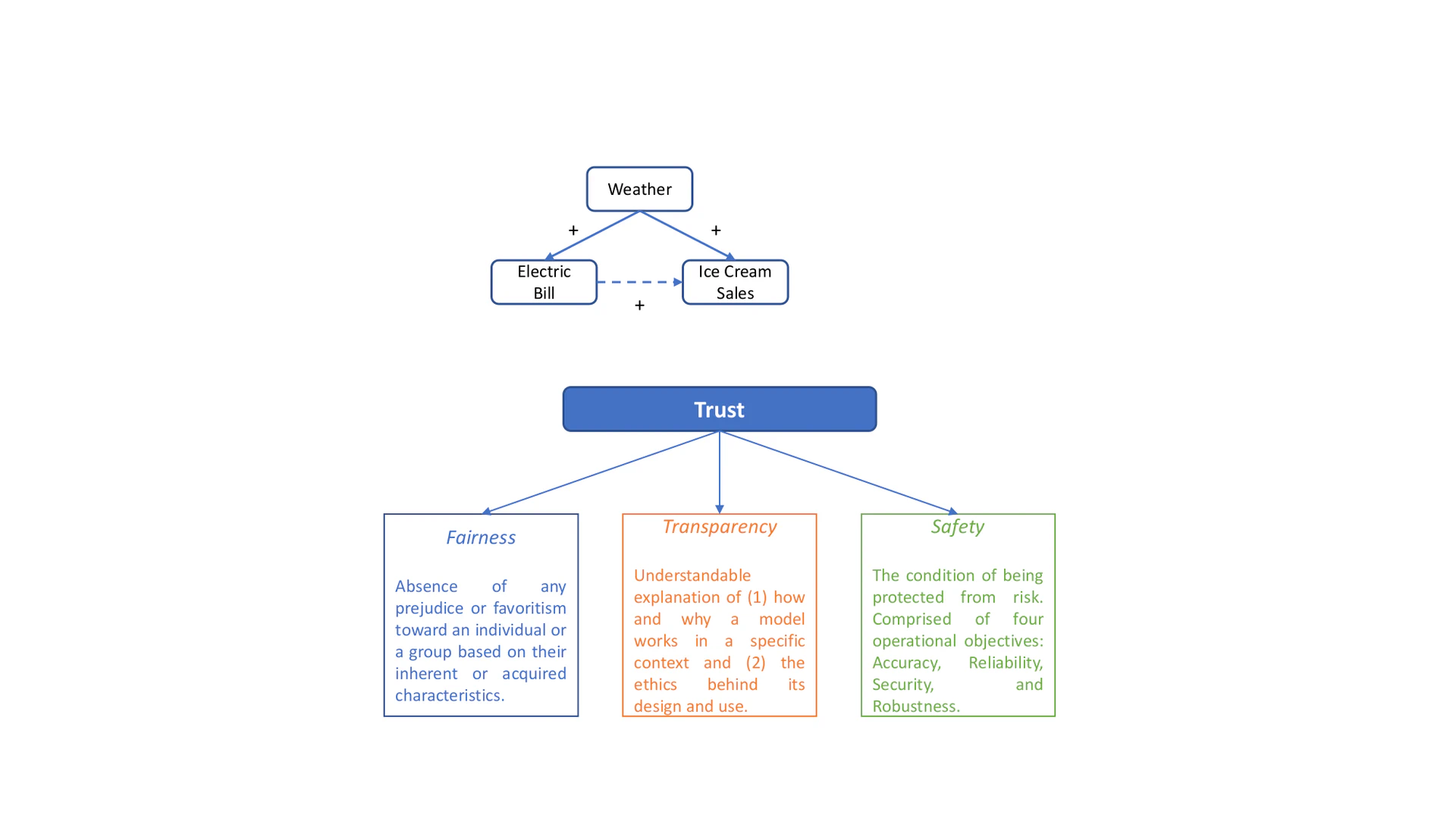

Essentially, the goal is to (re)build trust in AI. By definition, trust is the “firm belief in the reliability, truth or ability of someone or something” (definition from Oxford Languages). It is a high-level concept that needs to be specified by more concrete objectives. Here we discuss the SRAs objectives that appear comparatively frequently in the literature: fairness, transparency, and safety, as illustrated in the objectives figure.

Fairness

Fairness in AI has gained substantial attention in both research and industry since 2010. For decades, researchers have found it rather challenging to present a unified definition of fairness, in part because fairness is a societal and ethical concept. This concept is mostly subjective, changes with social context, and evolves over time, making fairness a rather challenging goal to achieve in practice. Because SRAs involve a decision-making process commensurate with social values, we here adopt a fairness definition in the context of decision-making:

“Fairness is the absence of any prejudice or favoritism toward an individual or a group based on their inherent or acquired characteristics”.

Note that even an ideally “fair” AI system defined in a specific context might still lead to biased decisions, as the entire decision-making process involves numerous elements such as policy makers and the environment. While the concept of fairness is difficult to pin down, unfairness/bias/discrimination might be easier to identify. There are six types of discrimination. Direct discrimination results from protected attributes of individuals, while indirect discrimination results from seemingly neutral and non-protected attributes. Systemic discrimination relates to policies that may show discrimination against subgroups of the population. Statistical discrimination occurs when decision makers use average statistics to represent individuals. Depending on whether the differences among groups can be justified or not, we further have explainable and unexplainable discrimination.
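Indirect discrimination can be illustrated with a small sketch. It assumes a hypothetical decision rule that looks only at a zip code (a non-protected attribute) and hypothetical applicants whose group membership happens to correlate with zip code; the group names, zip codes, and counts are all invented. The gap in approval rates is one common, but not the only, way to surface such an effect.

```python
# Hypothetical applicants as (group, zip_code). The decision rule never sees "group".
APPLICANTS = (
    [("g1", "zip_A")] * 8 + [("g1", "zip_B")] * 2
    + [("g2", "zip_A")] * 2 + [("g2", "zip_B")] * 8
)


def approve(zip_code):
    """Seemingly neutral rule that uses only a non-protected attribute."""
    return zip_code == "zip_A"


def approval_rate_by_group(applicants):
    """Share of approved applicants within each group."""
    counts = {}
    for group, zip_code in applicants:
        total, approved = counts.get(group, (0, 0))
        counts[group] = (total + 1, approved + int(approve(zip_code)))
    return {group: approved / total for group, (total, approved) in counts.items()}


rates = approval_rate_by_group(APPLICANTS)
gap = rates["g1"] - rates["g2"]

print(rates, round(gap, 2))
```

Even though the rule never reads the group attribute, the approval rates differ sharply between the two groups because zip code acts as a proxy for group membership.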

Transparency

Transparency is another important but quite ambiguous concept. This is partly because AI alone can be defined in more than 70 ways. When we seek a transparent algorithm, we are asking for an understandable explanation of how it works: What does the training set look like? Who collected the data? What is the algorithm doing? There are mainly three types of transparency with regard to human interpretability of AI algorithms. For a developer, the goal of transparency is to understand how the algorithm works and get a sense of why. For a deployer who owns and releases the algorithm to the public, the goal of transparency is to make consumers feel safe and comfortable using the system. For a user, transparency means understanding what the AI system is doing and why. We may further differentiate global transparency from local transparency: the former aims to explain the entire system, whereas the latter explains a decision within a particular context.

Yet, at the same time, disclosures about AI can pose potential risks: explanations can be hacked, and releasing additional information may make AI more vulnerable to attacks. It is becoming clear that transparency is often beneficial but not universally good. The AI “transparency paradox” encourages the different parties involved in AI systems to think more carefully about how to balance transparency against the risks it poses. Related discussions appear in recent work that studied how the widely recognized interpretability methods LIME and SHAP could be hacked. As the authors illustrated, explanations can be purposefully manipulated, leading to a loss of trust not only in the model but also in its explanations. Consequently, while working towards the goal of transparency, we must also recognize that privacy and security are indispensable conditions that we need to satisfy.

Safety

Because AI systems operate in a world with much uncertainty, volatility, and flux, another objective of SRAs is to be safe, accurate, and reliable. There are four operational objectives relevant to safety: accuracy, reliability, security, and robustness. In machine learning, accuracy is typically measured by the error rate, i.e., the fraction of instances for which the algorithm produces an incorrect output. As a standard performance metric, accuracy should be the fundamental component in establishing an approach to safe AI. It is necessary to specify a proper performance measure for evaluating any AI system. For instance, when data for classification tasks is extremely imbalanced, precision, recall, and F1 scores are more appropriate than accuracy. The objective of reliability is to ensure that AI systems behave as we anticipate. It is a measure of consistency and is important for establishing confidence in the safety of AI systems. Security encompasses the protection of information integrity, confidentiality, and continuous functionality for users. Robustness means that AI systems are expected to function reliably and accurately under harsh conditions (e.g., adversarial attacks, perturbations, and implementation errors).
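The remark about imbalanced data can be checked with a short sketch. It assumes a hypothetical test set with 10 positives and 990 negatives and a degenerate classifier that always predicts the negative class; undefined ratios (zero denominators) are reported as 0.0, which is a convention chosen here for illustration.

```python
def confusion_counts(y_true, y_pred):
    """Return (true positives, false positives, false negatives, true negatives)."""
    tp = sum(t == 1 and p == 1 for t, p in zip(y_true, y_pred))
    fp = sum(t == 0 and p == 1 for t, p in zip(y_true, y_pred))
    fn = sum(t == 1 and p == 0 for t, p in zip(y_true, y_pred))
    tn = sum(t == 0 and p == 0 for t, p in zip(y_true, y_pred))
    return tp, fp, fn, tn


def safe_div(numerator, denominator):
    """Assumed convention: report 0.0 when the ratio is undefined."""
    return numerator / denominator if denominator else 0.0


def metrics(y_true, y_pred):
    """Accuracy, precision, recall, and F1 for binary labels in {0, 1}."""
    tp, fp, fn, tn = confusion_counts(y_true, y_pred)
    precision = safe_div(tp, tp + fp)
    recall = safe_div(tp, tp + fn)
    return {
        "accuracy": (tp + tn) / len(y_true),
        "precision": precision,
        "recall": recall,
        "f1": safe_div(2 * precision * recall, precision + recall),
    }


# Hypothetical, extremely imbalanced test set and an "always negative" classifier.
y_true = [1] * 10 + [0] * 990
y_pred = [0] * 1000
scores = metrics(y_true, y_pred)

print(scores)
```

The classifier reaches 99% accuracy while finding none of the positive instances, which recall and F1 (both 0.0) make visible.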

Means Towards Socially Responsible AI Algorithms

In this section, we review four primary machine learning techniques and statistical methods for achieving the goals of SRAs: interpretability and explainability, adversarial machine learning, causal learning, and uncertainty quantification. Existing surveys have conducted comprehensive reviews of each of these techniques, e.g., interpretability, causal learning, adversarial machine learning, and uncertainty quantification. We therefore focus on the basics and the most frequently discussed methods for each means.

Interpretability and Explainability

Interpretability and explainability are the keys to increasing the transparency of AI algorithms. This is extremely important when we leverage these algorithms for high-stakes prediction applications, which deeply impact people’s lives. Existing work in machine learning interpretability can be categorized according to different criteria. Depending on when the interpretability methods are applicable (before, during, or after building the machine learning model), we have pre-model (before), in-model (during), and post-model (after) interpretability. Pre-model techniques are only applicable to the data itself. They require an in-depth understanding of the data before building the model, e.g., its sparsity and dimensionality. Therefore, pre-model interpretability is closely related to data interpretability, in which classic descriptive statistics and data visualization methods are often used, including Principal Component Analysis and t-SNE, as well as clustering methods such as $k$-means. In-model interpretability asks for intrinsically interpretable AI algorithms (e.g., Yang et al., 2016); we can also refer to it as intrinsic interpretability. It can be achieved by imposing constraints on the model such as causality, sparsity, or physical conditions from domain knowledge. In-model interpretability answers the question of how the model works. Decision trees, rule-based models, linear regression, attention networks, and disentangled representation learning are in-model interpretability techniques. Post-model interpretability, or post-hoc interpretability (e.g., Mordvintsev et al., 2015; Ribeiro et al., 2016), is applied after model training. It answers the question of what else the model can tell us. Post-model interpretability includes local explanations, saliency maps, example-based explanations, influence functions, feature visualization, and explaining by base interpretable models.

Another criterion for grouping current interpretability techniques is model-specific versus model-agnostic. Model-specific interpretation is based on the internals of a specific model. To illustrate, reading the coefficients of a linear regression model is a model-specific interpretation. Model-agnostic methods do not have access to the model’s inner workings; rather, they can be applied to any machine learning model after it has been trained. Essentially, the goal of interpretability is to help the user understand the decisions made by machine learning models by means of explanations. There are pragmatic and non-pragmatic theories of explanation. The former holds that an explanation should be a good answer that can be easily understood by the audience. The non-pragmatic theory emphasizes the correctness of the answer to the why-question. Both need to have the following properties: expressive power, translucency, portability, and algorithmic complexity.
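The contrast between the two families can be sketched in a few lines. The example below is an illustration under stated assumptions: `model` is a hypothetical black box (internally a linear function that ignores its second feature), and the model-agnostic probe is a simplified, permutation-style importance score in which a deterministic rotation of one feature column stands in for the usual random shuffle.

```python
def model(row):
    """Stand-in black box (hypothetical): depends on feature 0 only."""
    return 3.0 * row[0] + 0.0 * row[1]


def mse(predict, rows, targets):
    """Mean squared error of a prediction function on a dataset."""
    return sum((predict(r) - t) ** 2 for r, t in zip(rows, targets)) / len(rows)


def rotate_column(rows, col):
    """Deterministic stand-in for a random shuffle: shift one column by one row."""
    values = [r[col] for r in rows]
    shifted = values[1:] + values[:1]
    return [r[:col] + [v] + r[col + 1:] for r, v in zip(rows, shifted)]


def permutation_style_importance(predict, rows, targets):
    """Increase in error when a feature's link to the target is broken.

    Only calls `predict`; it never inspects the model's internals.
    """
    baseline = mse(predict, rows, targets)
    return [
        mse(predict, rotate_column(rows, col), targets) - baseline
        for col in range(len(rows[0]))
    ]


ROWS = [[1.0, 6.0], [2.0, 5.0], [3.0, 4.0], [4.0, 3.0], [5.0, 2.0], [6.0, 1.0]]
TARGETS = [model(r) for r in ROWS]
importance = permutation_style_importance(model, ROWS, TARGETS)

print(importance)  # [45.0, 0.0]
```

A model-specific reading would look directly at the coefficients (3.0 and 0.0); the model-agnostic probe reaches the same qualitative conclusion, that only the first feature matters, using nothing but input-output queries.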

Adversarial Machine Learning

Machine learning models, especially deep learning models, are vulnerable to crafted adversarial examples, which are imperceptible to human eyes but can easily fool deep neural networks (NN) in the testing/deploying stage. Adversarial examples have posed great concerns in the security and integrity of various applications. Adversarial machine learning, therefore, closely relates to the robustness of SRAs.

The security of any machine learning model is measured with regard to the adversarial goals and capabilities. Identifying the threat surface of an AI system built on machine learning models is critical to understanding where and how an adversary may subvert the system under attack. For example, the attack surface in a standard automated vehicle system can be defined with regard to the data processing pipeline. Typically, the attack surface helps identify three types of attacks: evasion attacks, in which the adversary attempts to evade the system by manipulating malicious samples during the testing phase; poisoning attacks, in which the adversary attempts to poison the training data by injecting carefully designed samples into the learning process; and exploratory attacks, in which the adversary tries to collect as much information as possible about the learning algorithm of the underlying system and the patterns in the training data. Depending on the amount of information available to an adversary about the system, we can define different types of adversarial capabilities. In the training phase (i.e., training phase capabilities), there are three broad attack strategies: (1) data injection, where the adversary can only add new data to the training set; (2) data modification, where the adversary has full access to the training data; and (3) logic corruption, where the adversary can modify the learning algorithm. In the testing phase (i.e., testing phase capabilities), adversarial attacks focus on producing incorrect outputs. In a white-box attack, an adversary has full knowledge of the model used for prediction: the algorithm used in training, the training data distribution, and the parameters of the fully trained model. A black-box attack, on the contrary, assumes no knowledge about the model and only uses historical information or information about the settings. The primary goal of a black-box attack is to train a local model, either with the data distribution (a non-adaptive attack) or with a dataset carefully selected by querying the target model (an adaptive attack).

Exploratory attacks do not have access to the training data but aim to learn the current state by probing the learner. Commonly used techniques include model inversion attacks, model extraction using APIs, and inference attacks. The most popular attacks are evasion attacks, in which malicious inputs are craftily manipulated to fool the model into making false predictions. Poisoning attacks, by contrast, modify the input during the training phase to obtain the desired results. Some of the well-known techniques are generative adversarial networks (GAN), adversarial example generation (including training phase modification, e.g., barreno2006can, and testing phase modification, e.g., papernot2016distillation), GAN-based attacks in collaborative deep learning, and adversarial classification.
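As an illustration of a white-box evasion attack at test time, the sketch below applies a one-step sign-gradient perturbation (the fast gradient sign method, a well-known technique not named in the text above) to a logistic regression classifier. The weights, input, and `epsilon` are hypothetical values chosen so that the effect is visible.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def predict_proba(w, b, x):
    """Probability of class 1 under a logistic regression model."""
    return sigmoid(np.dot(w, x) + b)

def fgsm_perturb(w, b, x, y, epsilon):
    """One-step sign-gradient perturbation for logistic regression."""
    # Gradient of the cross-entropy loss with respect to the input x.
    grad_x = (predict_proba(w, b, x) - y) * w
    # Move each coordinate by at most epsilon in the loss-increasing direction.
    return x + epsilon * np.sign(grad_x)

# Hypothetical model parameters and a correctly classified input.
w = np.array([2.0, -1.0])
b = 0.0
x = np.array([0.5, 0.2])
y = 1

x_adv = fgsm_perturb(w, b, x, y, epsilon=0.4)
clean_label = int(predict_proba(w, b, x) >= 0.5)
adv_label = int(predict_proba(w, b, x_adv) >= 0.5)
print(clean_label, adv_label)
```

The adversary here needs the model parameters to compute the gradient, which is what makes this a white-box attack. In this toy setting the perturbed input is classified as 0 even though no coordinate moved by more than `epsilon`.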

Causal Learning

Causal inference and reasoning is a critical ingredient for AI to achieve human-level intelligence, an overarching goal of Socially Responsible AI. The momentum of integrating causality into responsible AI is growing, as witnessed by a number of works (e.g., kusner2017counterfactual,xu2019achieving,holzinger2019causability) studying SRAs through causal learning methods.

Basics of Causal Learning. The two fundamental frameworks in causal learning are structural causal models and potential outcomes. Structural causal models rely on the causal graph, which is a special class of Bayesian network with edges denoting causal relationships. A more structured format is referred to as structural equations. One of the fundamental notions in structural causal models is the do-calculus, an operation for intervention. The difficulty in conducting a causal study lies in the difference between the observational and interventional distributions; the latter describes what the distribution of the outcome $Y$ would be if we were to set the covariates $X=x$. The potential outcome framework interprets causality as follows: given the treatment and outcome, we can only observe one potential outcome. The counterfactuals – the potential outcomes that would have been observed if the individual had received a different treatment – can never be observed in reality. These two frameworks are the foundations of causal effect estimation (estimating the effect of a treatment) and causal discovery (learning causal relations among different variables).
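The gap between observational and interventional distributions can be seen in a small simulation. The sketch below assumes a toy structural causal model with a binary confounder $Z$ that influences both $X$ and $Y$; all structural equations and coefficients are hypothetical and chosen only for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 100_000

def simulate(do_x=None):
    """Sample (x, y) from a toy structural causal model Z -> X, Z -> Y, X -> Y."""
    z = rng.binomial(1, 0.5, size=n)
    if do_x is None:
        # Observational regime: X depends on the confounder Z.
        x = rng.binomial(1, np.where(z == 1, 0.8, 0.2))
    else:
        # Interventional regime: do(X = do_x) cuts the edge Z -> X.
        x = np.full(n, do_x)
    y = 1.0 * x + 2.0 * z + rng.normal(size=n)
    return x, y

x_obs, y_obs = simulate()
observational_mean = y_obs[x_obs == 1].mean()   # estimates E[Y | X = 1]

_, y_int = simulate(do_x=1)
interventional_mean = y_int.mean()              # estimates E[Y | do(X = 1)]

print(observational_mean, interventional_mean)
```

Under these assumed equations, conditioning on $X=1$ also selects units with $Z=1$ more often, so $E[Y \mid X=1]$ is about 2.6, whereas $E[Y \mid do(X=1)]$ is about 2.0.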

Many important concepts in causal inference, such as intervention and counterfactual reasoning, have been adapted to AI. Here, we introduce the causal concept most frequently used in SRAs: the propensity score, defined as the “conditional probability of assignment to a particular treatment given a vector of observed covariates”. A popular propensity-based approach is Inverse Probability of Treatment Weighting. To synthesize a randomized controlled trial, it uses covariate balancing to weight instances based on their propensity scores, i.e., the probability of an instance receiving the treatment. Let $t_i$ and $x_i$ be the treatment assignment and covariates of instance $i$. The weight $w_i$ is typically computed by the following formula:

\[ w_i=\frac{t_i}{P(t_i=1|x_i)}+\frac{1-t_i}{1-P(t_i=1|x_i)}, \] where $P(t_i=1|x_i)$ is the propensity score of instance $i$. The treatment effect is then estimated as the difference between the weighted averages of the observed outcomes for the treatment and control groups:

\[ \hat{\tau}=\frac{1}{n_1}\sum_{i:t_i=1}w_iy_i-\frac{1}{n_0}\sum_{i:t_i=0}w_iy_i, \] where $y_i$ is the observed outcome of instance $i$, and $n_1$ and $n_0$ denote the sizes of the treatment and control groups.
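The weighting scheme can be sketched on simulated data in which the true treatment effect is known. The example below makes two assumptions that go beyond the text: the propensity score is known by construction rather than estimated, and the weighted averages are normalized by the sum of weights in each group (a common stabilized variant) rather than by the group sizes $n_1$ and $n_0$. The data-generating process is hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 20_000

# Hypothetical data: x confounds both treatment assignment and outcome.
x = rng.normal(size=n)
propensity = 1.0 / (1.0 + np.exp(-x))      # P(t = 1 | x), known here by construction
t = rng.binomial(1, propensity)
y = 2.0 * t + x + rng.normal(size=n)       # true treatment effect is 2.0

# w_i = t_i / e(x_i) + (1 - t_i) / (1 - e(x_i))
w = t / propensity + (1 - t) / (1.0 - propensity)

treated, control = t == 1, t == 0
naive_estimate = y[treated].mean() - y[control].mean()

# Assumption: normalize by the sum of weights in each group instead of
# the group sizes n_1 and n_0.
iptw_estimate = (np.sum(w[treated] * y[treated]) / np.sum(w[treated])
                 - np.sum(w[control] * y[control]) / np.sum(w[control]))

print(naive_estimate, iptw_estimate)
```

In this setup the naive difference in means overstates the effect because treated units tend to have larger $x$, while the weighted estimate should land close to the true value of 2.0. In practice the propensity score would have to be estimated, for example with a logistic regression.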

Causal Learning for SRAs. Firstly, it is becoming increasingly popular to use causal models to solve fairness-related issues. For example, the subject of causality and its importance in addressing fairness issues has been discussed in the literature. Causal models can also be used to discover and eliminate discrimination so that decisions are made irrespective of sensitive attributes at the individual, group, and system levels. Secondly, bias alleviation is another field where causal learning methods are frequently discussed, and it affects many machine learning applications at large. The emerging research on debiasing recommender systems can serve as one example. Due to the biased nature of user behavior data, recommender systems are inevitably involved in various discrimination-related issues: recommending fewer career coaching services and high-paying jobs to women, recommending more male-authored books, and making minorities less likely to become social influencers. Gender and ethnic biases have even been found in a broader context, e.g., word embeddings trained on 100 years of text data. Causal approaches aim to mitigate such bias in word embedding relations.

Thirdly, causal learning methods have also had discernible achievements in transparency, especially in the interpretability of black-box algorithms. Causality is particularly desired since these algorithms only capture correlations, not real causes. Further, it has been suggested that counterfactual explanations are the highest level of interpretability. For model-based interpretations, causal interpretability aims to explain the causal effect of a model component on the final decision. One way to differentiate it from traditional interpretability is that only causal interpretability is able to answer questions such as “What is the effect of the $n$-th filter of the $m$-th layer of a neural network on the prediction of the model?”. Counterfactual explanations are a type of example-based explanation, in which we look for data instances that can explain the underlying data distributions. Counterfactual explanations are human friendly; however, it is possible to have different true versions of explanations for the predicted results, i.e., the Rashomon effect. Several studies have been proposed to address this issue, and detailed discussions of causal interpretability can be found in the literature. Lastly, causal learning is inherently related to the robustness or adaptability of AI systems, which have been noted to lack the capability of reacting to new circumstances they were not trained for. Causal relationships, however, are expected to be invariant and robust across environments. This complements intensive earlier efforts toward “transfer learning”, “domain adaptation”, and “lifelong learning”. Some current work seeking to explore the relationship between AI robustness and causality includes the independent causal mechanism principle, invariant prediction, and disentangled causal mechanisms.

Uncertainty Quantification

AI research continues to develop new state-of-the-art algorithms with superior performance and large-scale datasets of high quality. Even with the best models and training data, it is still infeasible for AI systems to cover all the potential situations when deployed in real-world applications. As a matter of fact, AI systems always encounter new samples that are different from those used for training. The core question is how to leverage the strengths of these uncertainties. Recent research has advocated measuring, communicating, and using uncertainty as a form of transparency. There are also tools such as IBM’s Uncertainty Quantification 360[http://uq360.mybluemix.net/overview] that give AI practitioners access to related resources as common practices for AI transparency. Consequently, uncertainty quantification plays a crucial role in the optimization and decision-making process in SRAs. There are typically two kinds of uncertainty in risk analysis processes. First, aleatory uncertainty describes the inherent randomness of systems. For example, an AI system can present different results even with the same set of inputs. This uncertainty arises from underlying random variations within the data. Second, epistemic uncertainty represents the effect of an unknown phenomenon or an internal parameter. The primary reason for this type of uncertainty is the lack of observed data. As the variation among the data in aleatory uncertainty is often observable, we can quantify the uncertainty well and assess the risks. Quantification of epistemic uncertainty is more challenging because AI systems are forced to extrapolate over unseen situations. In the literature on uncertainty quantification, one of the most widely recognized techniques is the prediction interval (PI). For neural-network-based models, PI methods can be categorized into multi-step PI construction methods (e.g., the Bayesian method) and direct PI construction methods (e.g., lower upper bound estimation). Here, we briefly discuss several methods in each category; more details can be found in dedicated surveys.

Multi-Step Prediction Intervals Construction Methods. The Delta method, Bayesian method, Mean-Variance Estimation method, and Bootstrap method are the four conventional multi-step methods reported in the literature. The Delta method constructs PIs through nonlinear regression using a Taylor series expansion. In particular, we linearize neural network models through optimization by minimizing an error-based loss function, the sum of squared errors. Under the assumption that the uncertainty follows a normal and homogeneous distribution, we then employ standard asymptotic theory to construct PIs. The Delta method has been used in numerous case studies. Bayesian learning provides a natural framework for constructing PIs, as it optimizes the posterior distribution of parameters from the assumed prior distribution. Despite their high generalization power, Bayesian techniques are limited by large computational complexity due to the calculation of the Hessian matrix. The Bootstrap method is the most popular among the four conventional multi-step PI construction methods. It includes smooth, parametric, wild, pairs, residual, Gaussian process, and other types of bootstrap techniques. In the NN-based pairs bootstrap algorithm, for example, the key is to generate bootstrapped pairs by uniform sampling with replacement from the original training data. The estimation is then conducted separately for each bootstrapped dataset.
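The pairs bootstrap idea can be sketched without a neural network. In the example below, a simple linear least-squares fit stands in for the NN (an assumption made to keep the sketch short), the data are synthetic, and the interval combines the spread across bootstrap models with a residual-based noise estimate under a normality assumption.

```python
import numpy as np

rng = np.random.default_rng(0)

def make_data(n):
    """Hypothetical regression data with a linear trend and Gaussian noise."""
    x = rng.uniform(-2.0, 2.0, size=n)
    y = 1.5 * x + 0.5 + rng.normal(scale=0.5, size=n)
    return x, y

x_train, y_train = make_data(200)
x_test, y_test = make_data(1000)

n_models = 200
preds = np.empty((n_models, x_test.size))
for b in range(n_models):
    # Pairs bootstrap: resample (x, y) pairs uniformly with replacement.
    idx = rng.integers(0, x_train.size, size=x_train.size)
    # A linear fit stands in for training one NN per bootstrapped dataset.
    slope, intercept = np.polyfit(x_train[idx], y_train[idx], deg=1)
    preds[b] = slope * x_test + intercept

mean_pred = preds.mean(axis=0)
model_var = preds.var(axis=0, ddof=1)      # spread across bootstrap models

# Noise variance from the residuals of a fit on the full training set.
slope, intercept = np.polyfit(x_train, y_train, deg=1)
noise_var = np.var(y_train - (slope * x_train + intercept), ddof=2)

half_width = 1.96 * np.sqrt(model_var + noise_var)   # nominal 95% interval
lower, upper = mean_pred - half_width, mean_pred + half_width
coverage = np.mean((y_test >= lower) & (y_test <= upper))
print(coverage)
```

The empirical coverage on held-out points gives a quick check on whether the intervals are calibrated. With a real NN, the cost of retraining one model per bootstrapped dataset is what makes this family of methods computationally demanding.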

Direct Prediction Intervals Construction Methods. This category of methods can tackle some of the limitations of the previous methods, such as high computational demands and stringent assumptions. When NN models are constructed through direct training without any assumptions, they can provide more adaptive and smarter PIs for any distribution of targets. The Lower Upper Bound estimation method is such a technique: it can be applied to an arbitrary distribution of targets with computation time reduced by more than an order of magnitude. It directly calculates the lower and the upper bounds through trained NNs. Initially, Lower Upper Bound estimation NNs were optimized with the coverage width-based criterion, which presents several limitations. While retaining all the benefits of the original Lower Upper Bound estimation method, the NN-based Direct Interval Forecasting method has a much shorter computation time and narrower PIs, owing to its improved cost function and reduced average coverage error. Other approaches for improving the cost function of Lower Upper Bound estimation include the normalized root-mean-square width with particle swarm optimization, the optimal system, the independent width and penalty factors, the deviation from mid-interval consideration, and the deviation information-based criterion.

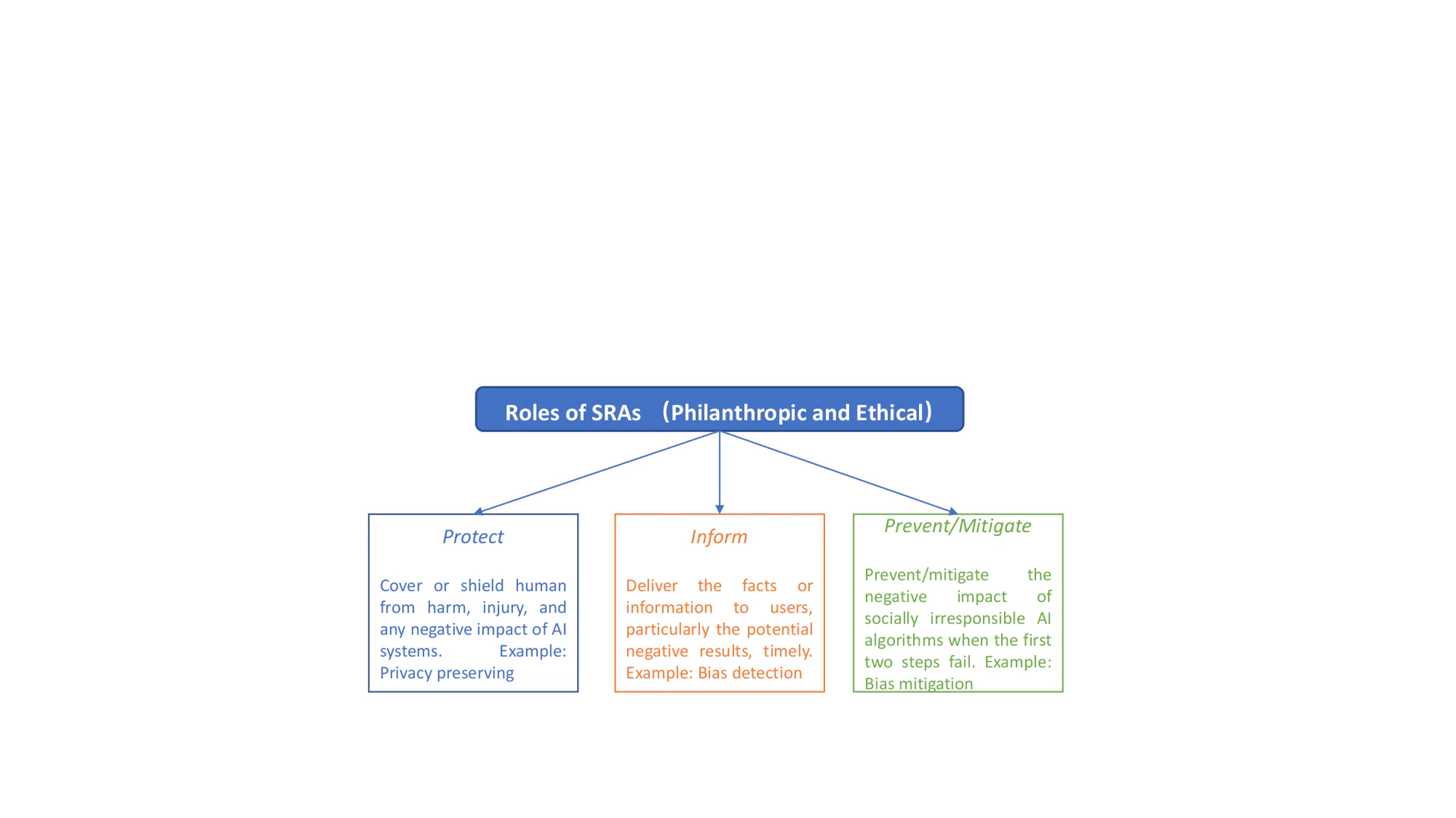

Roles of SRAs

So far, we have introduced the essentials of SRAs for achieving the expected ethical responsibilities. But pragmatic questions regarding their intended use remain: How can SRAs be operationalized? What can SRAs eventually do for societal well-being and to address societal challenges? Both ethical and philanthropic responsibilities are indispensable ingredients of the answers. While the ultimate goal of SRAs is to do good and be a good AI citizen, their ethical responsibilities should be ensured first. When AI fails to fulfill its ethical responsibilities, its philanthropic benefits can be insignificant. For instance, despite the immense public good of COVID-19 vaccines, there has been great controversy about algorithms for their distribution, which have been shown to be inequitable. Some argue that distribution algorithms should prioritize saving more lives and bringing the economy back more rapidly; they support such an ‘unfair’ allocation, but we would argue that this is not unfairness, but simply a difference of values and ethics. In our view, the roles of SRAs are expected to encompass both ethical and philanthropic responsibilities. In this survey, we describe three dimensions along which SRAs can help improve the quality of human life, as illustrated in the figure above: Protect (e.g., protect users’ personal information), Inform (e.g., fake news early detection), and Prevent/Mitigate (e.g., cyberbullying mitigation). We illustrate each dimension with research findings on several emerging societal issues. In particular, for the protecting dimension, we focus on privacy preserving and data dignity; for the informing and preventing/mitigating dimensions, we discuss three societal issues that have recently raised growing concerns: disinformation, abusive language, and unwanted bias. Because there are many forms of abusive language, such as hate speech and profanity, and the body of work related to each form is vast and diverse, spanning multiple interconnected disciplines, this survey uses cyberbullying as a representative form for the illustrations.

Protecting

The protecting dimension aims to cover or shield humans (especially the most vulnerable or at-risk) from the harm, injury, and negative impact of AI systems, intervening where necessary. This can be the protection of users’ personal data and their interactions with AI systems. Two typical examples are privacy preserving and data dignity.

Privacy-Preserving

The capability of deep learning models has been greatly improved by emerging powerful infrastructures such as clouds and collaborative learning for model training. The fuel of this power, however, comes from data, particularly sensitive data. This has raised growing privacy concerns such as illegitimate use of private data and the disclosure of sensitive data. Existing threats against privacy typically come from attacks such as the adversarial examples discussed in the section on adversarial machine learning. Specifically, there is direct information exposure (e.g., untrusted clouds), which is caused by direct intentional or unintentional data breaches, and indirect (inferred) information exposure (e.g., parameter inference), which is caused by direct access to the model or output. Existing privacy-preserving mechanisms can be classified into three categories, namely, private data aggregation methods, private training, and private inference.

Data aggregation methods are either context-free or context-aware. A context-free approach, such as differential privacy, is unaware of the context or what the data will be used for. A context-aware approach, such as information-theoretic privacy, on the other hand, is aware of the context in which the data will be used. A naïve technique for privacy protection is to remove identifiers from data, such as name, address, and zip code. It has been used for protecting patients’ information while processing their medical records, but the results are unsatisfactory. The k-Anonymity method can prevent re-identification by ensuring that at least $k$ samples share the exact same set of attributes for any given combination of attributes that the adversary has access to. The most commonly used data aggregation method is differential privacy, which aims to estimate the effect of removing an individual from the dataset and to keep the effect of including any one person’s data small. Some notable work includes the Laplace mechanism, differential privacy with Advanced Composition, and local differential privacy.
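As a minimal sketch of the Laplace mechanism mentioned above, the snippet below releases a noisy count. A counting query changes by at most 1 when one record is added or removed, so its sensitivity is 1 and the noise scale is `1 / epsilon`. The helper name `laplace_count`, the records, and the predicate are hypothetical.

```python
import numpy as np

def laplace_count(data, predicate, epsilon, rng):
    """Release a count with epsilon-differential privacy via Laplace noise."""
    true_count = sum(1 for record in data if predicate(record))
    sensitivity = 1.0   # adding or removing one record changes a count by at most 1
    return true_count + rng.laplace(loc=0.0, scale=sensitivity / epsilon)

rng = np.random.default_rng(0)
ages = [23, 35, 41, 29, 62, 57, 33, 48]   # hypothetical records

def over_40(age):
    return age > 40

noisy = laplace_count(ages, over_40, epsilon=1.0, rng=rng)

# Repeated releases are shown only to illustrate the noise distribution;
# in practice each release consumes privacy budget.
releases = np.array([laplace_count(ages, over_40, 1.0, rng) for _ in range(5000)])
print(noisy, releases.mean())
```

A smaller `epsilon` gives stronger privacy but noisier answers. The noise is zero-mean, so the releases are centered on the true count (4 in this toy dataset) while any single release hides the contribution of an individual record.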

Information-theoretic privacy is a context-aware approach that explicitly models the dataset statistics. By contrast, context-free methods assume worst-case dataset statistics and adversaries. This line of research was studied by diaz2019robustness, pinceti2019data, and varodayan2011smart. The second type of privacy-preserving mechanism works during the training phase. Established work in private training is mostly used to guarantee differential privacy or semantic security and encryption. The two most common methods for encryption are homomorphic encryption and secure multi-party computation. The third type of privacy-preserving mechanism works during the inference phase. It targets trained systems that are deployed to offer inference-as-a-service. Most methods in private inference are similar to those in private training, except for information-theoretic privacy, which is typically used to offer information-theoretic mathematical or empirical evidence of how these methods operate to improve privacy. There is also work using differential privacy, homomorphic encryption, and secure multi-party computation.

Data Dignity

Beyond privacy preserving, what is more urgent to accomplish is data dignity. It allows users to have absolute control over how their data is being used and to be paid accordingly. Data dignity encompasses the following aspects:

- To help users objectively determine the benefits and risks associated with their digital presence and personal data.

- To let users control how their data will be used and the purpose of using the data.

- To allow users to negotiate the terms of using their data.

- To give users complete right and autonomy to be found, analyzed, or forgotten, apart from the fundamental right over their data.

There are business models, such as the Microsoft Data Bank, designed to give users control of their data, as well as those shared by the Art of Research about how people can buy and sell their personal data.

Informing

The informing dimension aims to deliver the facts or information to users, particularly the potential negative results, in a timely way. We illustrate it with a focus on the discussions of detecting disinformation, cyberbullying, and bias.

Disinformation Detection

Disinformation is false information that is deliberately created and spread to deceive people, a social group, an organization, or a country. The online information ecosystem is never short of disinformation and misinformation, and concerns about them have been growing recently. Tackling disinformation is challenging mainly because (1) disinformation exists in almost all domains; (2) it is ever-changing, with new problems, challenges, and threats emerging every day; and (3) it entails joint efforts in interdisciplinary research spanning computer science, social science, politics, policy making, psychology, and cognitive science. Accurate and efficient identification of disinformation is at the core of combating it. Existing prominent approaches for disinformation detection primarily rely on news content, social context, user comments, fact-checking tools, and explainable and cross-domain detection.

Early work on disinformation detection focused on hand-crafted features extracted from text, such as lexical and syntactic features. Apart from text, online platforms also provide abundant social information that can be leveraged to enrich the textual features, e.g., the number of re-tweets and likes on Twitter. Informed by theories in social science and network science, another line of work exploits social network information to improve detection performance. Common features are social context, user profile, user engagement, and relationships among news articles, readers, and publishers. A unique function of online platforms is that they allow users to interact through comments. Recent work has shown that user comments can provide a weak supervision signal for identifying the authenticity of news articles, which enables early detection of disinformation. When user comments are unavailable, it is possible to learn users’ responses to news articles and then generate user responses. Fact-checking can be achieved manually or automatically. Manual fact-checking relies on domain experts or crowdsourced knowledge from users. Automatic fact-checking uses structured knowledge bases such as knowledge graphs to verify the authenticity of news articles. Beyond within-domain detection, other tasks such as cross-domain detection, explanation, and causal understanding of fake news dissemination have also been discussed in the literature.

Cyberbullying Detection

Cyberbullying differs from other forms of abusive language in that it is not a one-off incident but “aggressively intentional acts carried out by a group or an individual using electronic forms of contact, repeatedly or over time against victims who cannot easily defend themselves”. The increasing number of reported cyberbullying cases on social media and the resulting detrimental impact have raised great concerns in society. Cyberbullying detection is commonly formulated as a binary classification problem. While it shares some similarities with document classification, it should be noted that cyberbullying identification is inherently more complicated than simply identifying oppressive content.

Distinct characteristics of cyberbullying, such as power imbalance and repetition of aggressive acts, are central to marking a message or a social media session as cyberbullying. Several major challenges in cyberbullying detection have been discussed in the literature, such as the formulation of the unique bullying characteristics (e.g., repetition), data annotation, and severe class imbalance. Depending on the employed features, established work can be classified into four categories: content-based, sentiment-based, user-based, and network-based methods. Features extracted from social media content are lexical items such as keywords, Bag of Words, pronouns, and punctuation. Empirical evaluations have shown that textual features are the most informative predictors for cyberbullying detection. For instance, using the number of offensive terms as a content feature is effective in detecting offensive and cursing behavior; computing content similarity between tweets from different users can help capture users’ personality traits and peer influence, two important factors in cyberbullying occurrences. Sentiment-based features typically include keywords, phrases, and emojis, and they are often combined with content-based features. One notable work identified seven types of emotions in tweets, such as anger, empathy, and fear. User-based features are typical characteristics of users, e.g., personality (e.g., hostility), demographics (e.g., age), and user activity (e.g., active users). Hostility and neuroticism are found to be strongly related to cyberbullying behavior. Further, gender and age are indicative of cyberbullying in certain cases. Network-based features measure the sociability of online users, e.g., number of friends, followers, and network embeddedness. In addition, a number of methods seek to capture temporal dynamics to characterize the repetition of cyberbullying.

Bias Detection

Compared to the well-defined notions of fairness, bias detection is much less studied and the solution is not as straightforward as it may seem. The challenges arise from various perspectives. First, the data and algorithms used to make a decision are often not available to policy makers or enforcement agents. Second, algorithms are becoming increasingly complex and the uninterpretability limits an investigator’s ability to identify systematic discrimination through analysis of algorithms. Rather, they have to examine the output from algorithms to check for anomalous results, increasing the difficulty and uncertainty of the task.

Exploratory data analysis is a simple but effective tool to detect data bias. In this initial step of data analysis, we can use basic data statistics and visual exploration to understand what is in a dataset and the characteristics of the data. For algorithmic bias, one of the earliest methods is to compare the selection rates of different groups. Discrimination is highly possible if the selection rate for one group is sufficiently lower than that for other groups. For example, the US Equal Employment Opportunity Commission (EEOC) advocates the “four-fifths rule” or “80% rule” to identify a disparate impact. Suppose $Y$ denotes a binary class (e.g., hire or not) and $A$ is the protected attribute (e.g., gender). A dataset presents disparate impact if

\[ \frac{\Pr(Y=1|A=0)}{\Pr(Y=1|A=1)} \leq \tau =0.8. \] However, statistical disparity does not necessarily indicate discrimination. If one group has disproportionately more qualified members, we may expect the differences between groups in the results.
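The four-fifths rule can be checked directly from observed decisions. The sketch below estimates the ratio of selection rates from two binary arrays and compares it with $\tau = 0.8$; the function name `disparate_impact_ratio` and the hiring data are hypothetical.

```python
import numpy as np

def disparate_impact_ratio(y, a):
    """Ratio Pr(Y=1 | A=0) / Pr(Y=1 | A=1) estimated from binary arrays."""
    y, a = np.asarray(y), np.asarray(a)
    rate_a0 = y[a == 0].mean()
    rate_a1 = y[a == 1].mean()
    return rate_a0 / rate_a1

# Hypothetical hiring outcomes: 10 applicants per group.
a = np.array([0] * 10 + [1] * 10)
y = np.array([1, 1, 1, 0, 0, 0, 0, 0, 0, 0,     # group A=0: 3 of 10 hired
              1, 1, 1, 1, 1, 1, 0, 0, 0, 0])    # group A=1: 6 of 10 hired

ratio = disparate_impact_ratio(y, a)
flagged = ratio <= 0.8    # four-fifths rule threshold
print(ratio, flagged)
```

Here the selection rates are 0.3 and 0.6, so the ratio is 0.5 and the dataset is flagged. As noted above, such a flag signals statistical disparity and is a prompt for further investigation rather than proof of discrimination.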

A more frequently used approach is regression analysis, which is performed to examine the likelihood of favorable (or adverse) decisions across groups based on sensitive attributes. A significant, non-zero coefficient of the sensitive attributes in a correctly specified regression signals the presence of discrimination. However, we cannot guarantee that we observe all the factors the decision maker considers. Therefore, instead of using the rate at which decisions are made (e.g., the loan approval rates), bias detection can be based on the success rate of the decisions (e.g., the payback rate of the approved applicants), i.e., the outcome test. Another, less popular statistical approach for bias detection is benchmarking. The major challenge of benchmarking analysis is identifying the distribution of the sensitive attributes of the benchmark population, where sensitive attributes are unlikely to influence the identification of being at-risk. Some solutions have been proposed in the literature. Recently, AI researchers have developed tools to automatically detect bias. For instance, drawing on techniques in natural language processing and moral foundation theories, the tool by mokhberian2020moral can understand the structure and nuances of content consistently showing up on left-leaning and right-leaning news sites, aiming to help consumers better prepare for unfamiliar news sources. In earlier efforts, an international research group launched the non-profit organization Project Implicit[https://implicit.harvard.edu/implicit] in 1998, aimed at detecting implicit social bias.

Preventing/Mitigating

If both of the first two dimensions fail, we may rely on the last dimension to prevent/mitigate the negative impact of socially indifferent AI algorithms on the end-users. We continue the discussions about disinformation, cyberbullying, and bias, with a focus on the prevention and mitigation strategies.

Disinformation Prevention/Mitigation

Preventing the generation and spread of disinformation and mitigating its negative impact is an urgent task because disinformation typically spreads faster than normal information, due to the catchy news content and the ranking algorithms operating behind online news platforms. To increase user engagement, social recommender systems are designed to recommend popular posts and trending content. Therefore, disinformation often gains more visibility. An effective approach for disinformation mitigation is to govern this visibility of news, e.g., through recommendation and ranking algorithms. Mitigation also relates to early detection.

Network intervention can slow down the spread of disinformation by influencing the exposed users in a social network. For example, we can launch a counter-cascade that consists of fact-checked versions of false news articles. This is commonly referred to as the influence limitation or minimization problem. Given a network with an accessible counter-cascade, the goal is to find a (minimum) set of nodes in this network such that the effect of the original cascade can be minimized. A variety of approximation algorithms have been proposed to solve this NP-hard problem and its variants. When applied to disinformation mitigation, they seek to inoculate as many nodes as possible in a short period of time. It is possible to extend the two-cascade setting to tasks with multiple cascades, where we can further consider the different priorities of these cascades, i.e., each cascade influences the nodes in the network differently. The second method for disinformation mitigation is content flagging: social media platforms allow users to ‘flag’ or ‘report’ news content if they find it offensive, harmful, and/or false. Big social media companies such as Facebook have hired professional moderators to manually investigate and/or remove such content. However, considering the millions of news items generated and spread every minute, it is impractical for these moderators to manually review all of them. The solution turns to the wisdom of the crowd: users can choose to ‘flag’ the content if it violates the community guidelines of the platform. Some platforms further provide feedback to these users on whether their fact-check is correct. User behavior is an effective predictor for disinformation detection; therefore, the third prevention method leverages the differences between user behaviors to identify susceptible or gullible users. For example, it has been shown that groups of vulnerable Twitter users can be identified in fake news consumption. Other studies also suggest that older people are more likely to spread disinformation.

Cyberbullying Prevention/Mitigation

In contrast to the large amount of work on cyberbullying detection, efforts toward its prevention and mitigation have been few. Some research suggests that prevention/mitigation strategies are defined at different levels. At the technological level, we can consider providing parental control services, firewall blocking services, online service rules, text-message control, and mobile parental control, e.g., KnowBullying and BullyBlocker. Another effective tool is the psychological approach, such as talking and listening to cyber-victims, providing counseling services, and encouraging victims to form new relationships and join social clubs. At the education level, we are responsible for educating end-users and helping them improve their technical and cognitive skills. At the administrative level, it is important for organizations and governments to develop policies to regulate the use of free services and enhance the workplace environment. Therefore, the goal of cyberbullying prevention/mitigation can only be accomplished through interdisciplinary collaboration among, e.g., psychology, public health, computer science, and other behavioral and social sciences. One example is that computer and social scientists have attempted to understand the behavior of users in realistic environments by designing social media sites for experimentation, such as controlled studies and post-study surveys.

Existing solutions for preventing cyberbullying can report/control/warn about message content (e.g., dinakar2012common,vishwamitra2017mcdefender), provide support for victims (e.g., vishwamitra2017mcdefender), and educate both victims and bullies (e.g., dinakar2012common). A variety of anti-bullying apps are also available to promote the well-being of users. For example, the NoMoreBullyingMe app provides online meditation techniques to support victims; the “Honestly” app encourages users to share positive responses with each other (e.g., sing a song). However, current cyberbullying prevention strategies often do not work as desired because of the complexity and nuance with which adolescents bully others online.

Bias Mitigation

Prior approaches to bias mitigation focus on either designing fair machine learning algorithms or theorizing about the social and ethical aspects of machine learning discrimination. From the technical perspective, approaches to fairness can typically be categorized into pre-processing (prior to modelling), in-processing (at the point of modelling), and post-processing (after modelling). Pre-processing approaches require that the algorithm be allowed to modify the training data; we can then transform the data to remove the discrimination. In-processing approaches eliminate bias by modifying the algorithm during training: we can either incorporate a fairness notion into the objective function or impose a fairness constraint. When neither the training data nor the model can be modified, we can use post-processing approaches to reassign the predicted labels based on a defined function and a holdout set that was not used during model training. Most of these approaches are built on the notion of protected or sensitive variables that define the (un)privileged groups. Commonly used protected variables are age, gender, marital status, race, and disability. A shared characteristic of these groups is that they are disproportionately more (or less) likely to be positively classified. Fairness measures are needed to quantify fairness when developing such approaches. However, creating generalized notions of fairness quantification is a challenging task. Depending on the protected target, fairness metrics are usually designed for individual fairness (e.g., everyone is treated equally), group fairness (e.g., different groups such as women and men are treated equally), or subgroup fairness. Drawing on theories in causal inference, individual fairness also includes counterfactual fairness, under which a decision is fair towards an individual if the result would have been the same had the individual's sensitive attribute taken a different value.
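As one illustration of the pre-processing idea above, the sketch below computes per-example weights of the form $P(S=s)\,P(Y=y)/P(S=s, Y=y)$, a well-known reweighing scheme. It is offered as a hedged example rather than the method of any particular work discussed here; the function names and the list-based data layout are assumptions.

```python
from collections import Counter


def reweighing_weights(sensitive, labels):
    """Return one weight per example: P(s) * P(y) / P(s, y).

    Under these weights the sensitive attribute and the label are
    statistically independent in the (weighted) training data.
    """
    n = len(labels)
    count_s = Counter(sensitive)
    count_y = Counter(labels)
    count_sy = Counter(zip(sensitive, labels))
    return [
        count_s[s] * count_y[y] / (n * count_sy[(s, y)])
        for s, y in zip(sensitive, labels)
    ]


def weighted_positive_rate(sensitive, labels, weights, group):
    """Weighted share of positive labels (label == 1) within one group."""
    total = sum(w for s, w in zip(sensitive, weights) if s == group)
    positive = sum(
        w for s, y, w in zip(sensitive, labels, weights) if s == group and y == 1
    )
    return positive / total
```

After reweighing, every group has the same weighted positive rate as the overall data set, so a learner that honors sample weights no longer sees the original association between group and label. Whether this is an appropriate notion of fairness depends on the application.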

Recent years have witnessed immense progress in fair machine learning: a variety of methods have been proposed to address bias and discrimination across different applications. We focus on two mainstream methods: fair classification and fair regression. Broader reviews of machine learning fairness are available in the literature.

(1) Fair Classification. For a (binary) classifier with sensitive variable $S$, target variable $Y$, and classification score $R$, general fairness desiderata comprise three “non-discrimination” criteria: Independence, i.e., $R \perp S$; Separation, i.e., $R \perp S \mid Y$; and Sufficiency, i.e., $Y \perp S \mid R$. Fair machine learning algorithms need to adopt or create specific fairness definitions that fit the context. Common methods in fair classification include blinding, causal methods, transformation, sampling and subgroup analysis, adversarial learning, reweighing, and regularization and constraint optimization.
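The three criteria can be checked empirically by comparing group-wise rates. The sketch below is a minimal illustration under stated assumptions: it works on binarized predictions rather than the continuous score $R$, it reports the largest gap between groups instead of a statistical test of independence, and the names `group_gap` and `fairness_gaps` are hypothetical.

```python
def group_gap(outcome, sensitive, given=None):
    """Largest spread in mean(outcome) across sensitive groups.

    If `given` is provided, the spread is computed separately inside each
    cell of `given` and the worst cell is returned.
    """
    cells = set(given) if given is not None else {None}
    worst = 0.0
    for cell in cells:
        rates = []
        for group in set(sensitive):
            picked = [
                o
                for i, o in enumerate(outcome)
                if sensitive[i] == group and (given is None or given[i] == cell)
            ]
            if picked:  # skip empty (group, cell) combinations
                rates.append(sum(picked) / len(picked))
        if len(rates) > 1:
            worst = max(worst, max(rates) - min(rates))
    return worst


def fairness_gaps(y_true, y_pred, sensitive):
    """Gaps for the three criteria; 0.0 means the criterion holds exactly."""
    return {
        # Independence: prediction rate does not depend on the group.
        "independence": group_gap(y_pred, sensitive),
        # Separation: same prediction rate per group within each true label.
        "separation": group_gap(y_pred, sensitive, given=y_true),
        # Sufficiency: same true-label rate per group within each prediction.
        "sufficiency": group_gap(y_true, sensitive, given=y_pred),
    }
```

A gap of zero on a finite sample does not establish independence in the population, and the three criteria generally cannot all be satisfied at once, so such numbers are best read as diagnostics.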

(2) Fair Regression. The goal of fair regression is to jointly minimize the difference between true and predicted values and ensure fairness. It follows the general formulation of fair classification but with a continuous rather than binary/categorical target variable. Accordingly, the fairness definitions, metrics, and basic algorithms are adapted from classification to regression. For example, statistical parity and bounded-group-loss metrics have been suggested for measuring fairness in regression. Bias in linear regression has been characterized as the effect of a sensitive attribute on the target variable, measured through the mean difference between groups and AUC metrics. One commonly used approach in fair regression is regularization.
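A minimal sketch of the regularization idea, assuming a single feature, exactly two groups, and a penalty equal to $\lambda$ times the squared difference in mean predictions between the groups (a statistical-parity style term chosen here for illustration, not taken from a specific paper). For the line $\hat{y} = wx + b$, the penalized mean squared error has the closed-form solution $w = \mathrm{cov}(x, y) / (\mathrm{var}(x) + \lambda\,\Delta_x^2)$ and $b = \bar{y} - w\bar{x}$, where $\Delta_x$ is the difference in group means of $x$.

```python
def fit_fair_line(x, y, sensitive, lam):
    """Fit y ~ w * x + b with a penalty on the between-group prediction gap.

    Minimizes mean((w*x + b - y)^2) + lam * (gap in mean prediction)^2.
    Returns (w, b, gap), where gap is the absolute difference in mean
    predictions between the two groups.
    """
    groups = sorted(set(sensitive))
    if len(groups) != 2:
        raise ValueError("this sketch assumes exactly two groups")
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    var_x = sum((xi - mean_x) ** 2 for xi in x) / n
    cov_xy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y)) / n

    def group_mean(group):
        values = [xi for xi, si in zip(x, sensitive) if si == group]
        return sum(values) / len(values)

    # Difference in mean feature value between the two groups.
    dx = group_mean(groups[0]) - group_mean(groups[1])
    # Setting the gradient to zero gives this closed form (lam = 0 is plain OLS).
    w = cov_xy / (var_x + lam * dx ** 2)
    b = mean_y - w * mean_x
    return w, b, abs(w * dx)
```

Increasing `lam` shrinks the slope and therefore the gap between group-wise mean predictions, at the cost of a larger prediction error; the trade-off between the two has to be chosen for the application at hand.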

Apart from fair machine learning, algorithm operators are encouraged to share enough details about how research is carried out to allow others to replicate it. This is a significant step towards mitigating bias, as it helps end-users with different technical backgrounds understand how the algorithm works before making any decision. It is also suggested that AI technologists and researchers develop a bias impact statement as a self-regulatory practice. Such a statement can help probe and avert potential biases that are injected into or result from algorithmic decisions. Some example questions in the statement are “What will the automated decision do?”, “How will potential bias be detected?”, and “What are the operator incentives?”. In algorithm design, researchers are also responsible for encouraging diversity within the team and the training data, and for attending to the level of cultural sensitivity. The “diversity-in-design” mechanism aims to take deliberate and transparent actions to address cultural biases and stereotypes upfront. Furthermore, we might also consider updating nondiscrimination and other civil rights laws to interpret and redress online disparate impacts. An example of such consideration is to unambiguously define the thresholds and parameters for the disparate treatment of protected groups before the algorithm is designed.

Open Problems and Challenges

This survey reveals that the current understanding of SRAs is insufficient and that future efforts are greatly needed. Here, we describe several primary challenges, as summarized in the figure on challenges, in an attempt to broaden the discussion on future directions and potential solutions.

Causal Learning. The correlation fallacy poses fundamental obstacles for AI algorithms that seek to fulfill social responsibility. These obstacles concern robustness, explainability, and cause-effect connections. The era of big data has changed the ways of learning causality, and meanwhile, causal learning has become an indispensable ingredient for AI systems to achieve human-level intelligence. There are a number of benefits to incorporating causality into the next generation of AI. For example, teaching AI algorithms to understand “why” can help them transfer their knowledge to different but similar domains. Early efforts in SRAs attempted to employ causal learning concepts and methods such as intervention, counterfactuals, do-calculus, and propensity scoring to address fairness (e.g., counterfactual fairness) and interpretability (e.g., causal interpretability) issues. They have shown promising results in these tasks.

Context Matters. Context is core to SRAs due to its inherently elaborate nature, e.g., the “Transparency Paradox”. Understanding and quantifying the relationships among the various principles (some are tradeoffs and some are not), e.g., fairness, transparency, and safety, has to be done in a specific context. One such context is the social context. Existing SRAs (e.g., fair machine learning), once introduced into a new social context, may render current technical interventions ineffective, inaccurate, and even dangerously misguided. A recent study found that while fair ranking algorithms such as Det-Greedy help increase the exposure of minority candidates, their effectiveness is limited by job contexts in which employers prefer particular genders. How to properly integrate social context into SRAs is still an open problem. Algorithmic context (e.g., supervised learning, unsupervised learning, and reinforcement learning) is also extremely important when designing SRAs for the given data. A typical example is the feedback loop problem in predictive policing. A subtle algorithmic choice can have huge ramifications for the results. Consequently, we need to understand the algorithmic context to make the right algorithmic choices when designing socially responsible AI systems. Designing context-aware SRAs is the key to achieving Social Responsibility of AI.

Responsible Model Release and Governance. Nontransparent model reporting is one of the main causes of indifferent AI behavior. As a critical step to clarify the intended use cases of AI systems and the contexts for which they are well suited, responsible model release and governance has been receiving growing attention from both industry and academia. One role of SRAs is to bring together the tools, solutions, practices, and people needed to govern AI systems across their life cycle. At this early stage, some research suggests that released models be accompanied by documentation detailing various characteristics of the systems, e.g., what they do, how they work, and why they matter. For example, AI FactSheets advocates the use of a factsheet completed and voluntarily released by AI developers to increase the transparency of their services. A similar concept is model cards, short documents that provide benchmarked evaluation of trained AI models under a variety of conditions, e.g., across different cultural or demographic groups. Typically, a model card should include the model details, intended use, evaluation metrics, training/evaluation data, ethical considerations, and caveats and recommendations. To help increase transparency, manage risk, and build trust in AI, AI technologists and researchers are responsible for addressing the various challenges faced in creating useful AI release documentation and for developing effective AI governance tools.
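The sections listed above for a model card can be represented as a simple record, which makes it easy to check that a release is not missing documentation. The sketch below is a hypothetical schema written for illustration; the class `ModelCard`, its field names, and the helper `missing_sections` are assumptions and do not correspond to any official model card tooling.

```python
from dataclasses import dataclass, field, fields


@dataclass
class ModelCard:
    """Hypothetical record mirroring the sections named in the text."""

    model_details: str = ""
    intended_use: str = ""
    # Assumed layout: metric values keyed by evaluation condition or group.
    evaluation_metrics: dict = field(default_factory=dict)
    training_data: str = ""
    evaluation_data: str = ""
    ethical_considerations: str = ""
    caveats_and_recommendations: str = ""


def missing_sections(card):
    """Return the names of sections that are still empty, in declared order."""
    return [f.name for f in fields(card) if not getattr(card, f.name)]
```

A release pipeline could, for instance, refuse to publish a model while `missing_sections` returns a non-empty list. Completeness is only a necessary condition here: it says nothing about whether the documentation is accurate or useful.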

AI Defenses. The development of AI systems that outwit malicious AI is still at an early stage. Since we do not yet fully understand how AI systems work, they are not only vulnerable to attack but also likely to fail in surprising ways. As a result, it is critical and urgent to design systems that are provably robust, to help ensure that AI systems are not vulnerable to adversaries. An “AI firewall” needs at least two capabilities: one is to probe an AI algorithm for weaknesses (e.g., perturb the input of an AI system to make it misbehave), and the other is to automatically intercept potentially problematic inputs. Some big tech companies have started building their own AI defenses to identify weak spots, e.g., the “red team” at Facebook and the software framework released by Microsoft, Nvidia, IBM, and 9 other companies. AI defenses expose the fundamental weaknesses of modern AI and help make AI systems more robust and intelligent.

AI Ethics Principles and Policies. Current AI principles and policies for ethical practice face at least two common criticisms: (1) they are too vaguely formulated to be helpful in guiding practice; and (2) they are defined primarily by AI researchers and powerful people with mainstream populations in mind. To address the first criticism, i.e., to help operationalize AI principles in practice and help organizations confront inevitable value trade-offs, it has been suggested that AI principles be redefined based on philosophical theories in applied ethics. In particular, this approach categorizes published AI principles (e.g., fairness, accountability, and transparency) into three widely used core principles in applied ethics: autonomy, beneficence (avoiding harm and doing good), and justice. The core principles “invoke those values that theories in moral and political philosophy argue to be intrinsically valuable, meaning their value is not derived from something else”. Existing AI principles are instrumental principles that “build on concepts whose values are derived from their instrumental effect in protecting and promoting intrinsic values”. Operationalizable AI principles help put ethical AI into practice effectively and reduce the responsible AI gap in companies. To address the second criticism, we need to elicit the inputs and values of diverse voices from the Subjects of SRAs, i.e., minority and disadvantaged groups, and incorporate their perspectives into the design of tech policy documents. If we align the values of AI systems through a panel of people (who are compensated for doing this), they too can influence system behavior, and not just the powerful people or AI researchers.

Understanding Why. Many AI systems are designed and developed without fully understanding why: What do we wish the AI system to do? This is often the reason that these systems fail to represent the goals of the real tasks, a primary source of AI risks. The problem can become more challenging when the AI system is animated through a number of lines of code that lack nuance, creating a machine that does not align with our true intentions. As the first step, understanding why clearly defines our social expectations of AI systems and paves the way for more specific questions such as “What is the problem? Who will define it? And who are the right people to include?”. Answering why helps us prevent the development of socially indifferent AI systems in the first place and also helps us understand the kinds of deception an AI system may learn by itself.