
Papers is Alpha. This content is part of an effort to make research more accessible, and (most likely) has lost some details from the original. You can find the original paper here.

Introduction

Advances in artificial intelligence (AI) could impact nearly all aspects of society, including the labor market, transportation, healthcare, education, and national security. AI’s effects may be profoundly positive, but the technology entails risks and disruptions that warrant attention. While technologists and policymakers have begun to discuss the societal implications of machine learning and AI more frequently, public opinion has not shaped much of these conversations. Given AI’s broad impact, civil society groups argue that the public, particularly those underrepresented in the tech industry, should have a role in shaping the technology.

In the U.S., public opinion has shaped policy outcomes, including those concerning immigration, free trade, international conflicts, and climate change mitigation. As in these other policy domains, we expect the public's influence on AI policy to grow over time. It is thus vital to better understand how the public thinks about AI and its governance. Such understanding is essential for crafting informed policy and for identifying opportunities to educate the public about AI’s character, benefits, and risks.

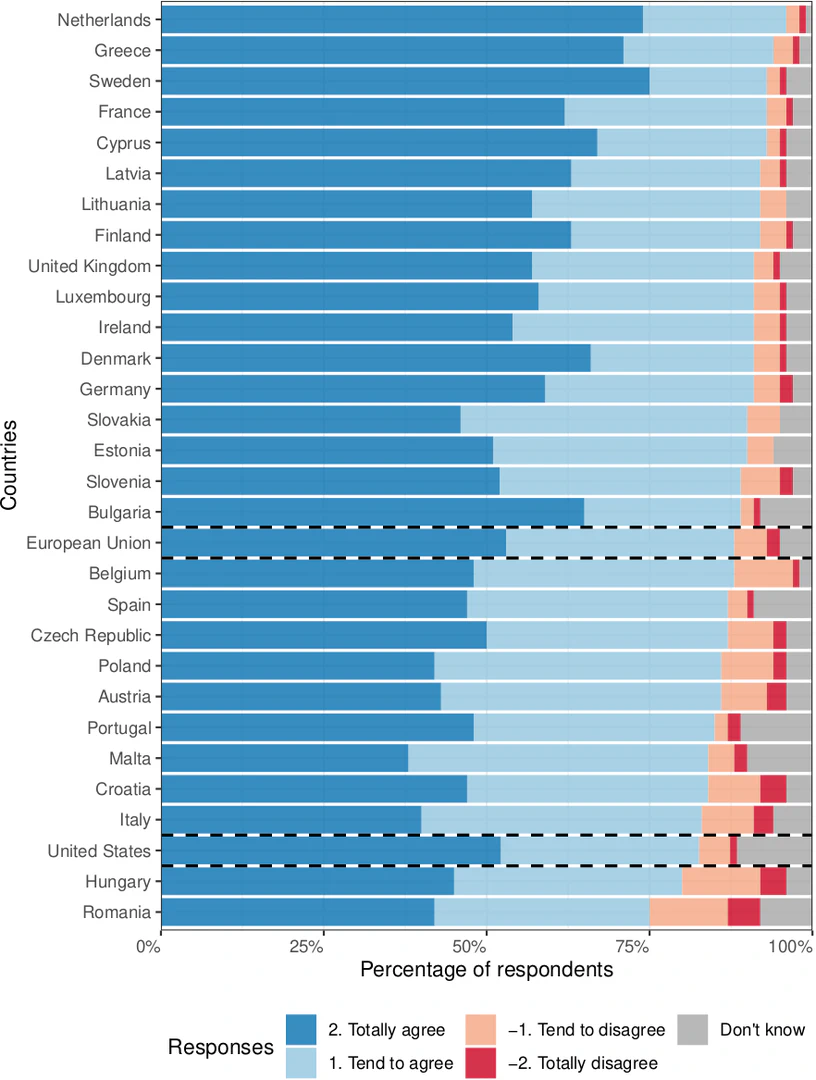

Using an original, large-scale survey ($N$=2000), we studied how the American public perceives AI governance. The overwhelming majority of Americans (82%) believe that AI and/or robots should be carefully managed. This statistic is comparable to survey results from respondents residing in European Union countries, as seen in the figure above. Furthermore, Americans consider all 13 AI governance challenges presented in the survey to be important for tech companies and governments to manage carefully.

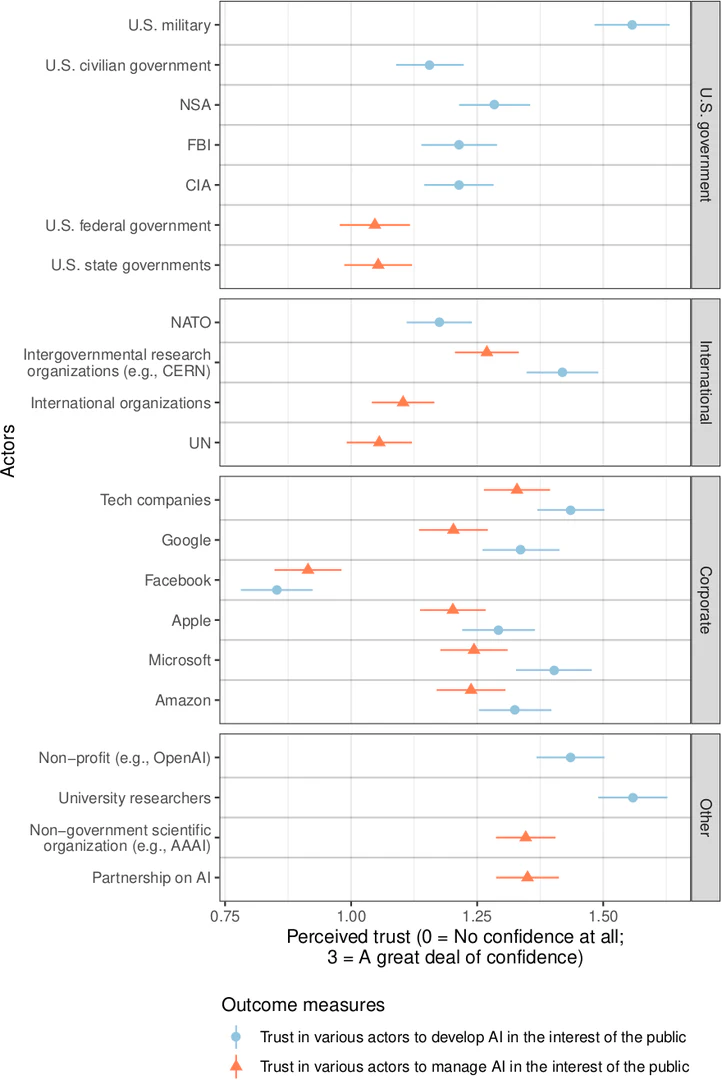

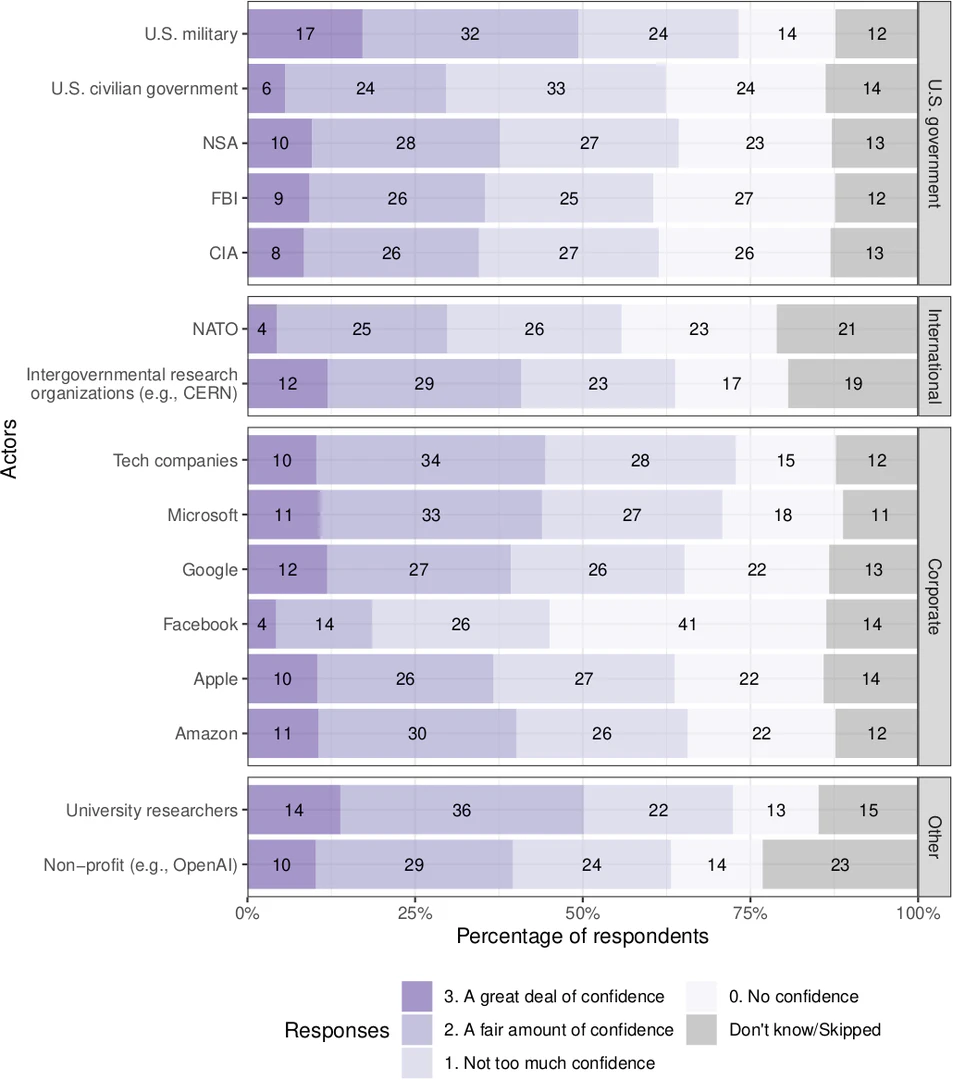

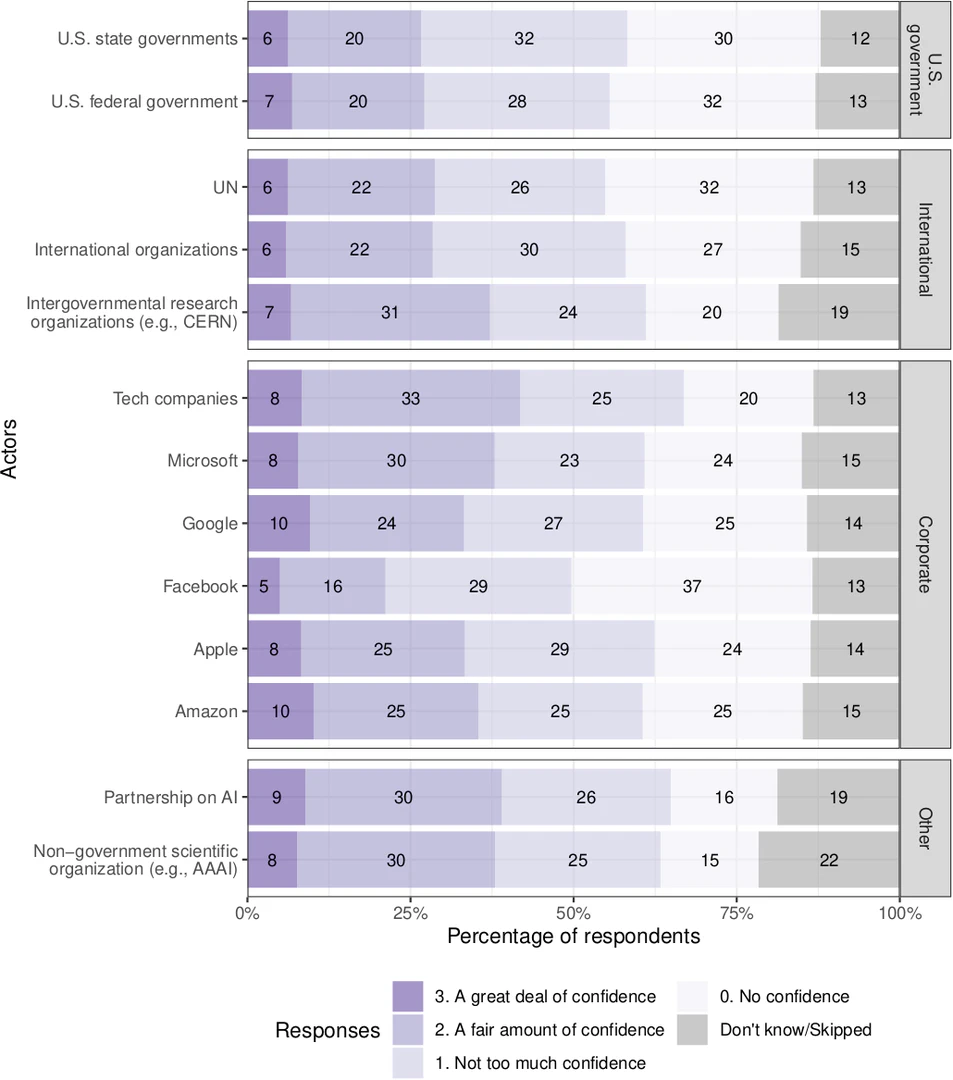

At the same time, Americans have only low to moderate levels of trust in governmental, corporate, and multistakeholder institutions to develop and manage AI in the public’s interest. Public trust varies greatly between organizations. Broadly, the public puts the most trust in university researchers (50% reporting “a fair amount of confidence” or “a great deal of confidence”) and the U.S. military (49%); followed by scientific organizations, the Partnership on AI, tech companies (excluding Facebook), and intelligence organizations; followed by U.S. federal or state governments, and the UN; followed by Facebook. Contrary to existing research on attitudes toward other emerging technologies, our study finds that individual-level trust in various actors to responsibly develop and manage AI does not predict one’s general support for developing AI.

Background

Public Opinion and AI Governance

Studying public opinion allows us to anticipate how electoral politics could shape AI governance as policymakers seek to regulate applications of the technology. Over the past two years, corporations, governments, civil society groups, and multistakeholder organizations have published dozens of high-level AI ethics principles. These documents borrow heavily from core principles in bioethics or medical ethics. But unlike medical ethics, which is guided by the common aim of promoting the health of the patient, AI development pursues many different goals rather than a single shared one. Furthermore, many of these AI ethics principles are in tension with each other. For instance, how does one improve the accuracy of algorithmic predictions while ensuring fair and equal treatment of those impacted by the algorithm? As corporations and governments attempt to translate these principles into practice, tensions between principles could lead to political contestation.

In the U.S., voters are divided on how to regulate facial recognition technology and algorithms used to display social media content, with some of these divisions reflecting existing disagreements between partisans. For instance, Democrats, compared with Republicans, are much more opposed to law enforcement using facial recognition technology. While several heavily Democratic states and cities have adopted or proposed moratoriums on law enforcement using facial recognition technology, progress toward federal regulation has been slow. Industry self-regulation is not immune to partisan politics. For example, Google’s AI ethics board dissolved after the company’s employees and external civil society groups protested the inclusion of Heritage Foundation president Kay Coles James and drone company CEO Dyan Gibbens on the board. Recognizing the public’s divergent policy preferences on AI governance issues is necessary for productive public policy deliberation.

How Trust in Institutions Affects Regulation of Emerging Technology

The public’s trust in various institutions to develop and manage AI could affect the regulation of the technology. General social trust is negatively correlated with perceived risk from technological hazards. One observational study finds that general social distrust is positively correlated with support for government regulation, even when the public perceives the government to be corrupt. A follow-up study using more extensive survey data suggests that the public prefers governmental regulation if they trust the government more than they do major companies. This latter finding is supported by how the public has reacted to genetically modified (GM) foods and nanotechnology. These two emerging technologies are similar to AI in that the public has to rely on those with scientific expertise when evaluating potential risks.

Distrust in institutions producing and regulating GM foods is a compelling explanation for the widespread opposition to GM foods in developed countries. Those with high levels of trust in scientists and regulators are more accepting of GM foods; in contrast, distrust of the agricultural/food industry, relative to trust in environmental watchdogs, predicts opposition to GM foods. Although scientists are among the most trusted groups in the U.S., Americans have cynical views toward scientists when considering GM foods. Only 19% think that scientists understand the health consequences of GM foods very well, even though scientists have formed a consensus that GM foods are safe to eat. Furthermore, the American public believes that scientists are more motivated by concerns for their industry than by concerns for the public.

Nanotechnology, though less salient than GM foods in the media, is the subject of extensive public opinion research. A meta-analysis of 11 surveys conducted in developed countries finds that the public perceives that the use of nanotechnology has greater benefits than risks; nevertheless, a large subset of the public is uncertain of the consequences of nanotechnology. As in perceptions of GM foods, trust in institutions seems to play a significant role in shaping attitudes toward nanotechnology. Americans who have lower confidence in business leaders within the nanotechnology industry also perceive the technology to be riskier. A higher level of distrust in government agencies to protect the public from nanotechnology hazards is associated with a higher perceived risk of the technology. Consumers who are less trustful of the food industry indicate they are more reluctant to buy foods produced or packaged using nanotechnology.

Existing Survey Research on AI Governance and Trust in Tech Companies

Our survey builds on existing public opinion research on attitudes toward AI and trust in tech companies. Past survey research related to AI tends to focus on specific governance challenges, such as lethal autonomous weapons, algorithmic fairness, or facial recognition technology. A few large-scale surveys have taken a more comprehensive approach by asking about a range of AI governance challenges. We improved upon these surveys by asking respondents to consider a variety of AI governance challenges using the same question-wording. This consistency in question-wording allowed us to compare respondents’ perceptions toward major issues in AI governance.

Another innovation of our research project is that we connect AI governance with trust in tech companies. Trust in tech companies has become a growing topic in survey research. While Americans perceive tech companies to have a more positive impact on society than other institutions, including the news media and the government, their feelings toward tech companies have declined dramatically since 2015. Nevertheless, public views vary across individual tech companies; one consistent finding in non-academic surveys is that the public strongly distrusts Facebook, particularly in its handling of personal data. In our survey, we examined whether this general distrust of tech companies extends to their management of AI development and applications.

Survey Data and Analysis

We conducted an original online survey ($N$=2000) through YouGov between June 6 and 14, 2018. YouGov drew a random sample from the U.S. adult population (i.e., the target sample) and selected respondents from its online respondent panel that matched the target sample on key demographic variables. The details of YouGov’s sample matching methodology can be found in the Appendix.

We pre-registered nearly all of the analysis on Open Science Framework (pre-analysis plan URL: https://osf.io/7gqvm/ ). Pre-registration increases research transparency by requiring researchers to specify their analysis before analyzing the data. Doing so prevents researchers from misusing data analysis to produce statistically significant results where no true effect exists, a practice known as $p$-hacking. Survey weights provided by YouGov were used in our primary analysis. We followed the Standard Operating Procedures for Don Green’s Lab at Columbia University when handling missing data or “don’t know” responses. Heteroscedasticity-consistent standard errors were used to generate the margins of error at the 95% confidence level. We report cluster-robust standard errors whenever there is clustering by respondent. In figures, each error bar shows the 95% confidence interval.
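To make the combination of survey weights and robust standard errors concrete, here is a minimal sketch in Python with NumPy. It is not the authors' code. The text says only "heteroscedasticity-consistent", so the choice of HC2 is an assumption, as are the basic CR0 cluster-robust variant (no small-sample correction), the function name `wls_robust`, and the toy data.

```python
import numpy as np


def wls_robust(X, y, w, clusters=None):
    """Weighted least squares with sandwich (robust) standard errors.

    Uses HC2 when `clusters` is None; otherwise uses a basic CR0
    cluster-robust estimator with no small-sample correction.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    sw = np.sqrt(np.asarray(w, dtype=float))
    Xw, yw = X * sw[:, None], y * sw          # rescale rows by sqrt(weight)
    bread = np.linalg.inv(Xw.T @ Xw)
    beta = bread @ Xw.T @ yw
    resid = yw - Xw @ beta                    # residuals on the rescaled scale
    if clusters is None:
        # HC2: inflate each squared residual by 1 / (1 - leverage).
        lev = np.einsum("ij,jk,ik->i", Xw, bread, Xw)
        meat = (Xw * (resid**2 / (1 - lev))[:, None]).T @ Xw
    else:
        # CR0: sum score contributions within each cluster (e.g., respondent).
        clusters = np.asarray(clusters)
        meat = np.zeros((X.shape[1], X.shape[1]))
        for g in np.unique(clusters):
            score = Xw[clusters == g].T @ resid[clusters == g]
            meat += np.outer(score, score)
    vcov = bread @ meat @ bread
    return beta, np.sqrt(np.diag(vcov))


# Usage: a weighted mean is the intercept of an intercept-only regression.
y = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
weights = np.ones_like(y)                      # stand-in for survey weights
beta, se = wls_robust(np.ones((len(y), 1)), y, weights)
margin_of_error = 1.96 * se[0]                 # 95% level, normal approximation
```

With equal weights and an intercept-only model, HC2 reduces to the familiar standard error of the mean, which is a handy sanity check.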

Perceptions of AI Governance Challenges

Methodology

We sought to understand how Americans prioritize policy issues associated with AI. Respondents were asked to consider five AI governance challenges, randomly selected from a set of 13 (see the Appendix for the full text); the order in which these five were presented to each respondent was also randomized. After considering each governance challenge, respondents were asked how likely they think it is that the challenge will affect large numbers of people within 10 years, both 1) in the U.S. and 2) around the world, using a seven-point scale that displayed both numerical likelihoods and qualitative descriptions (e.g., “Very unlikely: less than 5% chance (2.5%)”). Respondents were also asked to evaluate how important it is for tech companies and governments to carefully manage each challenge presented to them using a four-point scale.
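The random selection and random ordering described above can be illustrated with Python's standard `random` module. This is a hypothetical sketch, not the survey software actually used; the function name `assign_challenges` and the seed are illustrative assumptions, while the 13 labels are the shortened labels from the question text in the Appendix.

```python
import random

# Shortened labels of the 13 governance challenges (question text in the Appendix).
CHALLENGES = [
    "Hiring bias", "Criminal justice bias", "Disease diagnosis", "Data privacy",
    "Autonomous vehicles", "Digital manipulation", "Cyber attacks", "Surveillance",
    "U.S.-China arms race", "Value alignment", "Autonomous weapons",
    "Technological unemployment", "Critical AI systems failure",
]


def assign_challenges(rng: random.Random, k: int = 5) -> list[str]:
    # random.sample draws k distinct items and returns them in selection order,
    # so one call gives both a random subset and a random presentation order.
    return rng.sample(CHALLENGES, k)


rng = random.Random(2018)  # hypothetical seed, for reproducibility only
for respondent_id in range(3):
    print(respondent_id, assign_challenges(rng))
```

The same pattern would apply to the trust questions, drawing five of 15 actors instead.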

General Results

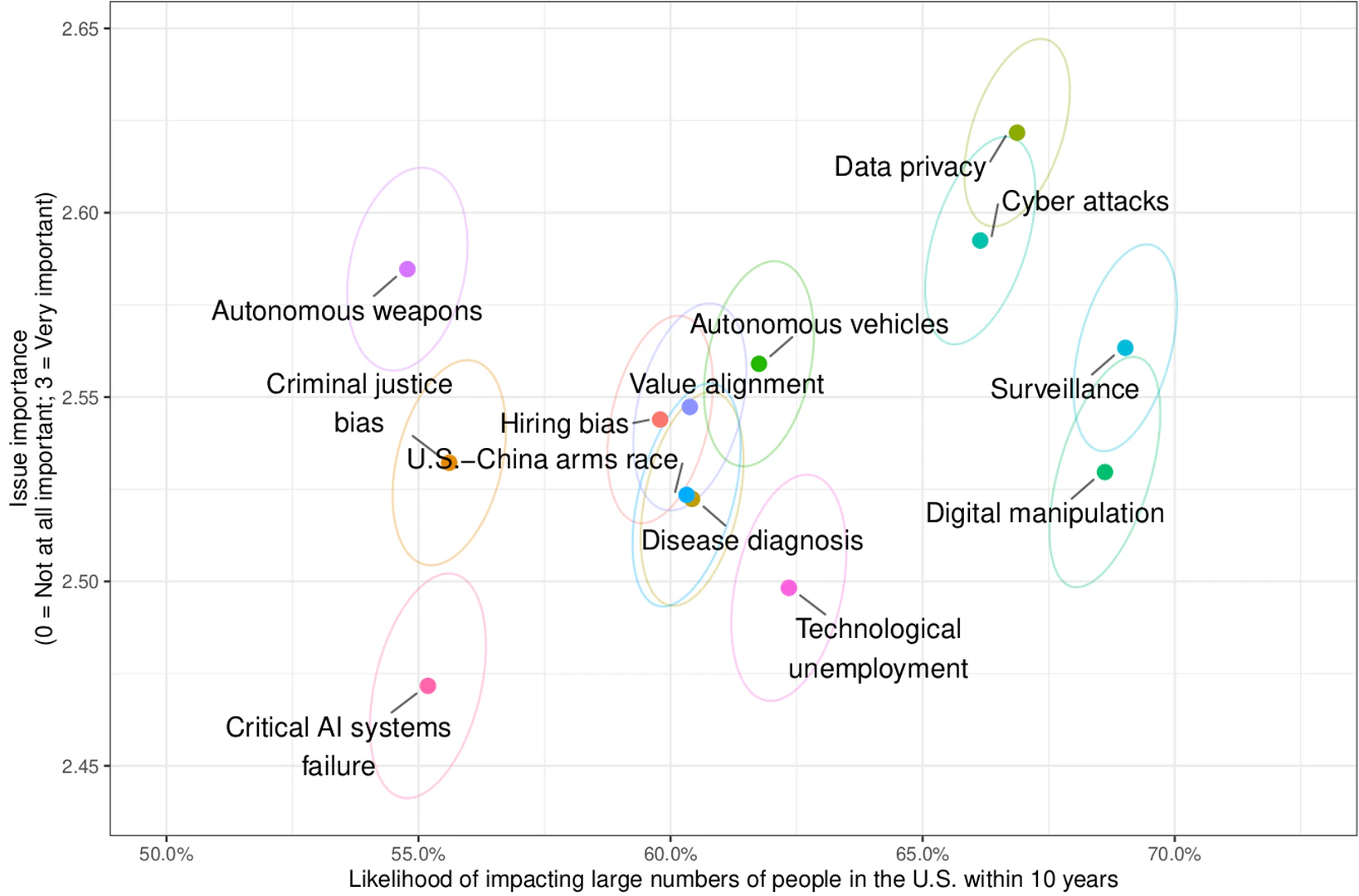

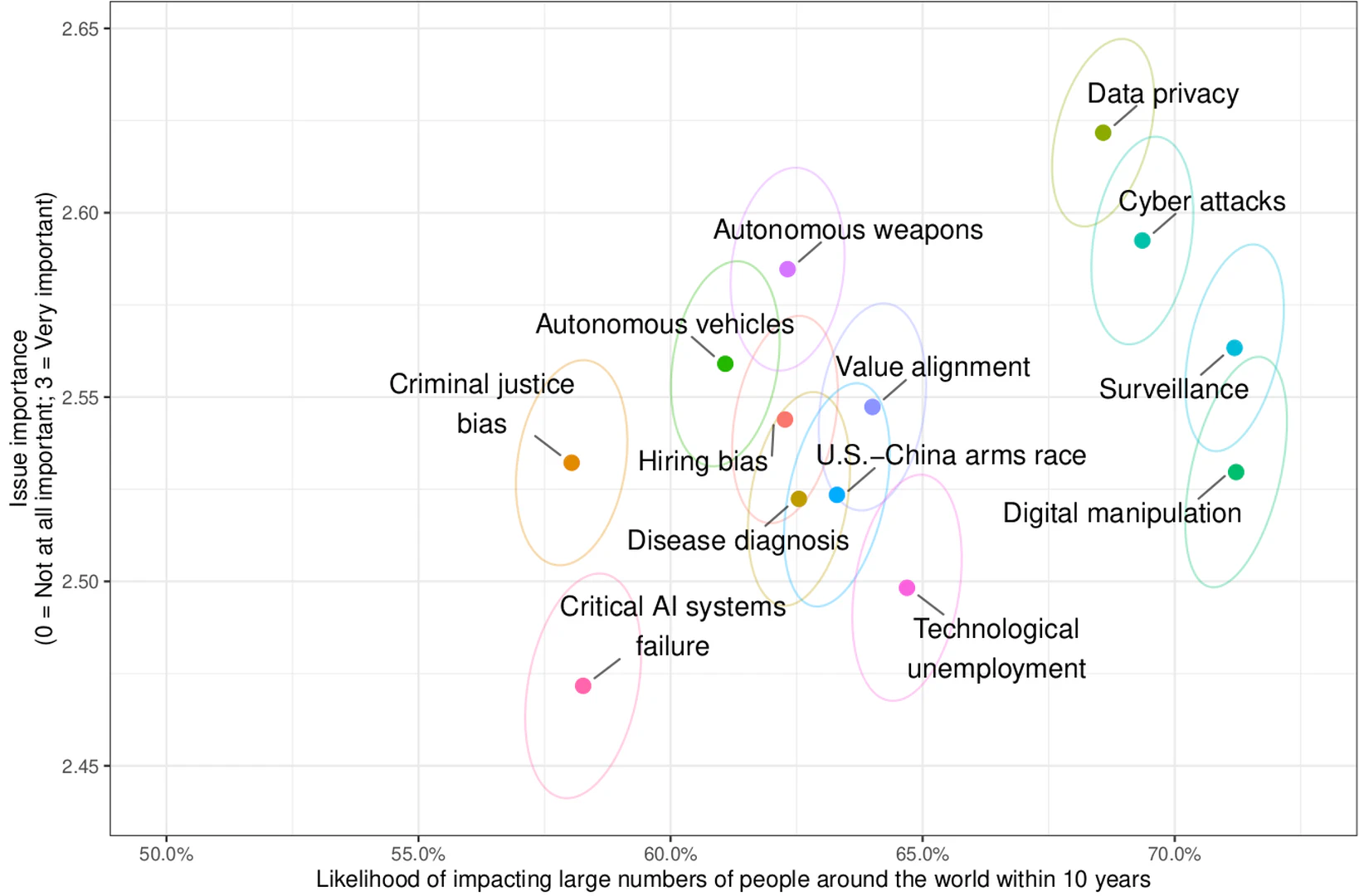

We use scatterplots to visualize our survey results. In the top graph, the x-axis is the perceived likelihood of the problem happening to large numbers of people in the U.S. In the bottom graph, the x-axis is the perceived likelihood of the problem happening to large numbers of people around the world. The y-axes on both graphs represent respondents’ perceived issue importance, from 0 (not at all important) to 3 (very important). Each dot represents the mean perceived likelihood and mean issue importance for one governance challenge. The corresponding ellipse represents the 95% confidence region of the bivariate means, assuming the two variables jointly follow a bivariate normal distribution.
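One way to compute such an ellipse is sketched below in Python with NumPy. It is an illustration under stated assumptions, not the authors' plotting code: it uses the large-sample chi-square approximation (rather than an exact Hotelling $T^2$ bound), ignores survey weights, and the function name `mean_confidence_ellipse` is hypothetical.

```python
import numpy as np

# The chi-square distribution with 2 degrees of freedom is exponential with mean 2,
# so its 0.95 quantile is -2 ln(0.05), about 5.991.
CHI2_2DF_95 = -2 * np.log(0.05)


def mean_confidence_ellipse(likelihood, importance, n_points=100):
    """Center and boundary points of an approximate 95% confidence region for two means.

    The region is {mu : (xbar - mu)' (S / n)^{-1} (xbar - mu) <= CHI2_2DF_95},
    a large-sample normal approximation. Survey weights are ignored here.
    """
    data = np.column_stack([likelihood, importance]).astype(float)
    n = data.shape[0]
    center = data.mean(axis=0)
    cov_of_mean = np.cov(data, rowvar=False) / n
    # Eigenvectors give the axis directions; eigenvalues, scaled by the
    # chi-square quantile, give the squared half-lengths of the axes.
    eigvals, eigvecs = np.linalg.eigh(cov_of_mean)
    theta = np.linspace(0, 2 * np.pi, n_points)
    unit_circle = np.vstack([np.cos(theta), np.sin(theta)])   # shape (2, n_points)
    axes = np.sqrt(eigvals * CHI2_2DF_95)[:, None] * unit_circle
    boundary = (eigvecs @ axes).T + center                     # shape (n_points, 2)
    return center, boundary
```

Every boundary point sits at exactly the chi-square cutoff in the Mahalanobis metric of the mean's covariance, which is what makes the ellipse a 95% region under the normality assumption.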

Americans consider all the AI governance challenges we present to be important: the mean perceived issue importance of each governance challenge is between “somewhat important” (2) and “very important” (3), though there is meaningful and discernible variation across items.

The AI governance challenges Americans think are most likely to impact large numbers of people, and are important for tech companies and governments to tackle, are found in the upper-right quadrant of the two plots. These issues include data privacy as well as AI-enhanced cyber attacks, surveillance, and digital manipulation. We note that these issues were widely covered in the media around the time of the survey.

A second set of governance challenges is perceived, on average, as about 7% less likely and marginally less important. These include autonomous vehicles, value alignment, bias in using AI for hiring, the U.S.-China arms race, disease diagnosis, and technological unemployment. Finally, a third set of challenges, including criminal justice bias and critical AI systems failures, is perceived as, on average, another 5% less likely and about equally important.

We also note that Americans predict that all of the governance challenges mentioned in the survey, except protecting data privacy and ensuring the safety of autonomous vehicles, are more likely to impact people around the world than people in the U.S. While most of these statistically significant differences are substantively small, one stands out: Americans think that autonomous weapons are 7.6 percentage points more likely to impact people around the world than people in the U.S. (two-sided $p$-value 0.001).

We want to reflect on one result. “Value alignment” consists of an abstract description of the alignment problem and a reference to what sounds like individual-level harms: “while performing jobs they could unintentionally make decisions that go against the values of its human users, such as physically harming people.” “Critical AI systems failures,” by contrast, references military or critical infrastructure uses, and unintentional accidents leading to “10 percent or more of all humans to die.” The latter was rated as less important than the former. We interpret this as a probability-weighted assessment of importance, since the latter would presumably be far more important were it to happen. We thus think responses to the issue importance question should be interpreted as down-weighting low-probability risks.

Subgroup Analysis

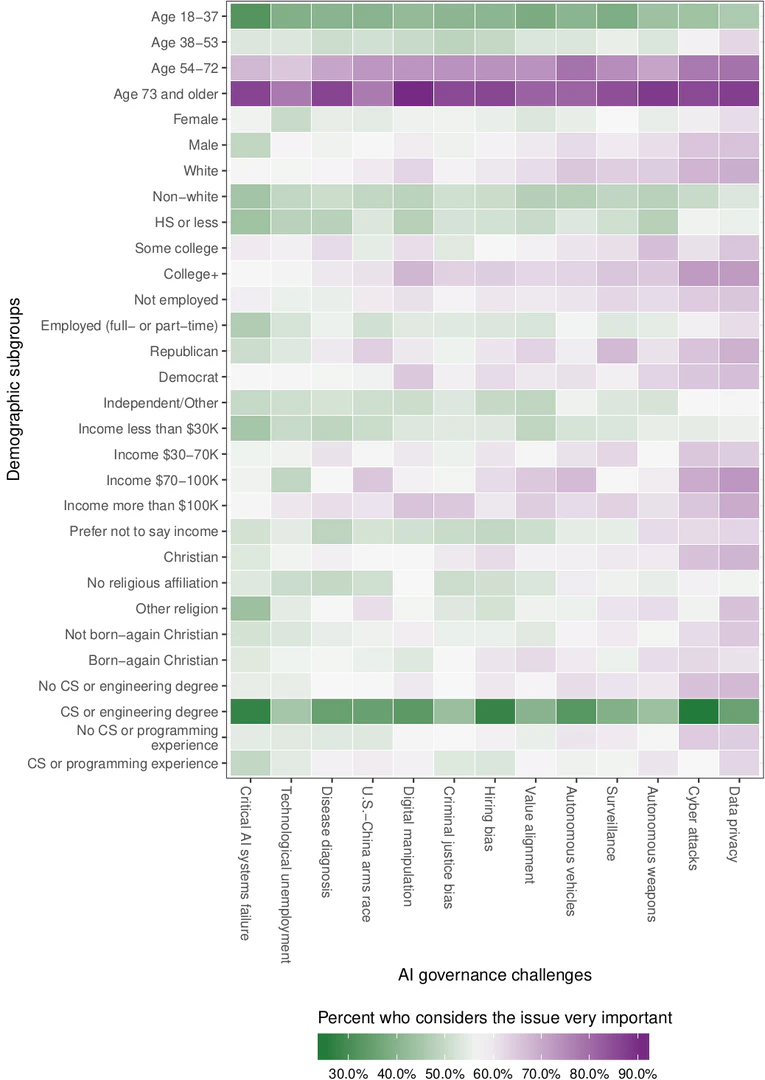

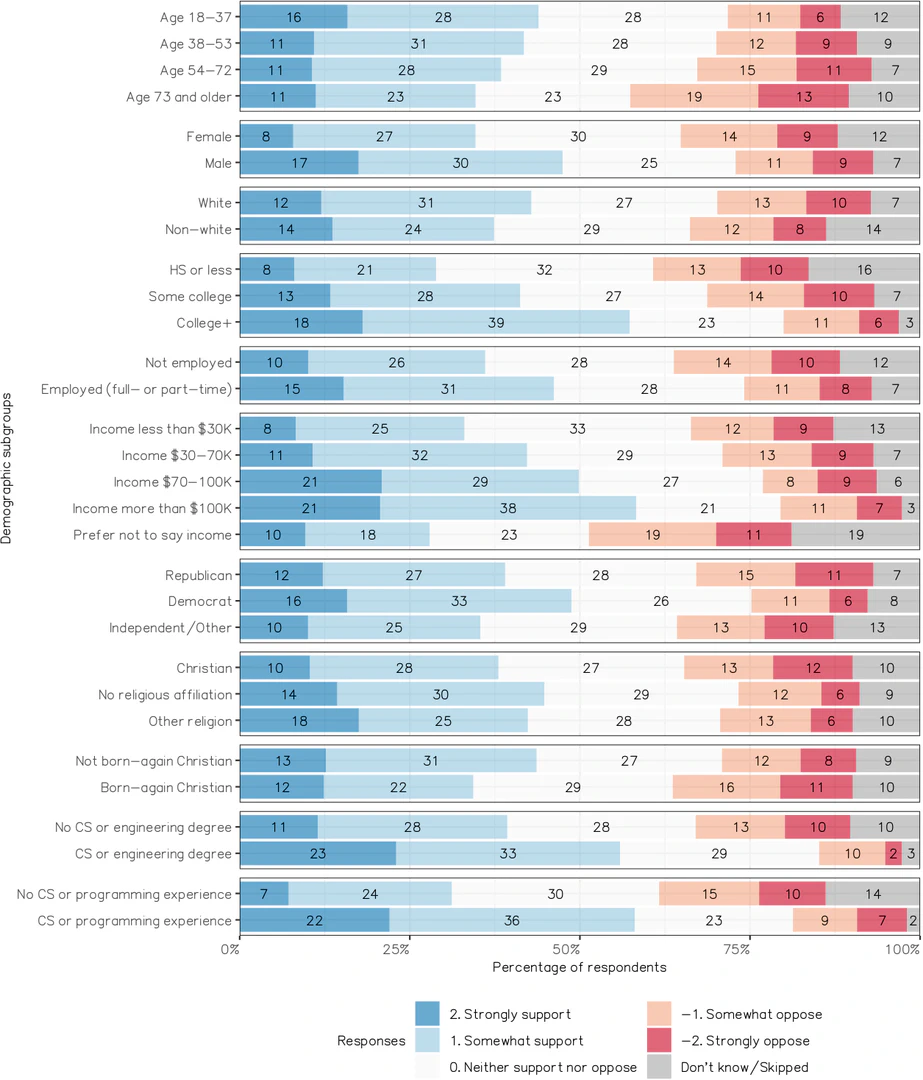

We performed further analysis by calculating the percentage of respondents in each subgroup who consider each governance challenge to be “very important” for governments and tech companies to manage. In general, differences in responses are more pronounced across demographic subgroups than across governance challenges. In a multiple linear regression predicting perceived issue importance using demographic subgroups, governance challenges, and the interactions between the two, we find that the stronger predictors are demographic subgroup variables, including age group and having CS or programming experience.
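The subgroup percentages described above amount to a weighted share of "very important" responses (coded 3 on the 0 to 3 scale) within each subgroup. The Python sketch below illustrates that computation; the function name, the subgroup labels, and the assumption that "don't know" responses have already been dropped are all hypothetical.

```python
import numpy as np

VERY_IMPORTANT = 3  # top of the 0-3 issue-importance scale


def weighted_share_by_group(groups, responses, weights, value=VERY_IMPORTANT):
    """Weighted share of respondents in each subgroup who chose `value`.

    Assumes "don't know" and other missing responses were already dropped.
    """
    groups = np.asarray(groups)
    chose = (np.asarray(responses) == value).astype(float)
    weights = np.asarray(weights, dtype=float)
    shares = {}
    for g in dict.fromkeys(groups.tolist()):          # subgroups in first-seen order
        mask = groups == g
        shares[g] = float(np.sum(weights[mask] * chose[mask]) / np.sum(weights[mask]))
    return shares


# Hypothetical example: two age groups, survey weights of unequal size.
print(weighted_share_by_group(["18-37", "18-37", "73+", "73+"], [3, 1, 3, 3], [1, 3, 1, 1]))
```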

Two highly visible patterns emerge from our data visualization. First, a higher percentage of older respondents, compared with younger respondents, consider nearly all AI governance challenges to be “very important.” In another part of the survey (see Figure 7 in the Appendix), we find that older Americans, compared with younger Americans, are less supportive of developing AI. Our results here might explain this age gap: older Americans see each AI governance challenge as substantially more important than do younger Americans. Whereas 85% of Americans older than 73 consider each of these issues to be very important, only 40% of Americans younger than 38 do.

Second, those with CS or engineering degrees, compared with those without, rate all AI governance challenges as less important. This result could explain another finding in our survey: those with CS or engineering degrees tend to express greater support for developing AI. In Table 4 in the Appendix, we report the results of a saturated linear model that uses demographic variables, governance challenges, and the interactions between these two types of variables to predict perceived issue importance. We find that those who are 54–72 or 73 and older, relative to those who are below 38, view the governance challenges as more important (two-sided $p$-value 0.001 for both comparisons). Furthermore, we find that those who have CS or engineering degrees, relative to those who do not, view the governance challenges as less important (two-sided $p$-value 0.001).
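The structure of a saturated model of this kind can be sketched in Python with NumPy: dummy-coded main effects, every subgroup-by-challenge interaction, and standard errors clustered by respondent (each respondent rates several challenges). This is a simplified illustration, not the model in Table 4: it uses a single demographic variable, ignores survey weights, uses a basic CR0 cluster estimator, and the helper names and toy data are hypothetical.

```python
import numpy as np


def dummies(values, reference):
    """Treatment coding: one 0/1 column for each level other than `reference`."""
    values = np.asarray(values)
    levels = [lv for lv in dict.fromkeys(values.tolist()) if lv != reference]
    return np.column_stack([(values == lv).astype(float) for lv in levels]), levels


def saturated_design(subgroup, challenge, subgroup_ref, challenge_ref):
    """Intercept, main effects, and every subgroup-by-challenge interaction."""
    S, s_levels = dummies(subgroup, subgroup_ref)
    C, c_levels = dummies(challenge, challenge_ref)
    inter = np.column_stack([S[:, i] * C[:, j]
                             for i in range(S.shape[1]) for j in range(C.shape[1])])
    X = np.column_stack([np.ones(len(S)), S, C, inter])
    names = (["(Intercept)"] + s_levels + c_levels
             + [f"{s}:{c}" for s in s_levels for c in c_levels])
    return X, names


def ols_cluster(X, y, cluster):
    """OLS estimates with CR0 standard errors clustered by respondent."""
    y = np.asarray(y, dtype=float)
    bread = np.linalg.inv(X.T @ X)
    beta = bread @ X.T @ y
    resid = y - X @ beta
    cluster = np.asarray(cluster)
    meat = np.zeros((X.shape[1], X.shape[1]))
    for g in np.unique(cluster):
        score = X[cluster == g].T @ resid[cluster == g]   # one score vector per respondent
        meat += np.outer(score, score)
    return beta, np.sqrt(np.diag(bread @ meat @ bread))


# Hypothetical long-format data: each respondent rates two challenges on the 0-3 scale.
respondent = [1, 1, 2, 2, 3, 3, 4, 4]
age_group = ["18-37"] * 4 + ["73+"] * 4
challenge = ["Data privacy", "Surveillance"] * 4
importance = [1, 2, 2, 2, 3, 2, 3, 3]

X, names = saturated_design(age_group, challenge, "18-37", "Data privacy")
beta, se = ols_cluster(X, importance, respondent)
```

Because the model is saturated, its fitted values equal the cell means, so each coefficient is a difference (or difference-in-differences) of subgroup-by-challenge means.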

Trust in Actors to Develop and Manage AI

Methodology

Respondents were asked how much confidence they have in various actors to develop AI. They were randomly assigned five actors out of 15 to evaluate. We provided a short description of actors that are not well-known to the public (e.g., NATO, CERN, and OpenAI). Also, respondents were asked how much confidence, if any, they have in various actors to manage the development and use of AI in the best interests of the public. They were randomly assigned five out of 15 actors to evaluate. Again, we provided a short description of actors that are not well-known to the public (e.g., AAAI and Partnership on AI). Confidence was measured using the same four-point scale described above. The two sets of 15 actors differed slightly because, for some actors, it seemed inappropriate to ask one or the other question. See the Appendix for the exact wording of the questions and descriptions of the actors.

Results

Americans do not express great confidence in most actors to develop or to manage AI, as seen in Figures 5 and 6 in the Appendix. A majority of Americans do not have a “great deal” or even a “fair amount” of confidence in any institution, except university researchers, to develop AI. Furthermore, Americans place greater trust in tech companies and non-governmental organizations (e.g., OpenAI) than in governments to manage the development and use of the technology.

University researchers and the U.S. military are the most trusted groups to develop AI: about half of Americans express a “great deal” or a “fair amount” of confidence in them. Americans express slightly less confidence in tech companies, non-profit organizations (e.g., OpenAI)[At the time the survey was conducted (June 2018), OpenAI was a 501(c)(3) nonprofit organization. In March 2019, OpenAI announced it was restructuring into a “capped-profit” company to attract investment.], and American intelligence organizations. Nevertheless, opinions toward individual actors within each of these groups vary. For example, while 44% of Americans indicated they feel a “great deal” or a “fair amount” of confidence in tech companies, they rate Facebook as the least trustworthy of all the actors. More than four in 10 indicate that they have no confidence in the company.

Our survey was conducted between June 6 and 14, 2018, shortly after the Facebook/Cambridge Analytica scandal became highly salient in the media. On April 10-11, 2018, Facebook CEO Mark Zuckerberg testified before the U.S. Congress regarding the Cambridge Analytica data leak. On May 2, 2018, Cambridge Analytica announced its shutdown. Nevertheless, Americans’ distrust of the company existed before the Facebook/Cambridge Analytica scandal. In a pilot survey that we conducted on Mechanical Turk during July 13–14, 2017, respondents indicated a substantially lower level of confidence in Facebook, compared with other actors, to develop and regulate AI.

The results on the public’s trust in various actors to manage the development and use of AI are similar to the results discussed above. Again, a majority of Americans do not have a “great deal” or a “fair amount” of confidence in any institution to manage AI. In general, the public expresses greater confidence in non-governmental organizations than in governmental ones. Indeed, 41% of Americans express a “great deal” or a “fair amount” of confidence in “tech companies,” compared with 26% who feel that way about the U.S. federal government. But when presented with individual big tech companies, Americans indicate less trust in each than in the broader category of “tech companies.” Once again, Facebook stands out as an outlier: respondents give it a much lower rating than any other actor. Besides “tech companies,” the public places relatively high trust in intergovernmental research organizations (e.g., CERN)[We asked about trust in intergovernmental research organizations, such as CERN, because some policy researchers have proposed creating a politically neutral AI development hub similar to CERN to avoid risks associated with competition in AI development between rival states.], the Partnership on AI, and non-governmental scientific organizations (e.g., AAAI). However, because the public is less familiar with these organizations, about one in five respondents give a “don’t know” response.

Similar to our findings, recent survey research suggests that while Americans feel that AI should be regulated, they are unsure who the regulators should be. When asked who “should decide how AI systems are designed and deployed,” half of Americans indicated they do not know or refused to answer. Our survey results also seem to reflect Americans’ general attitudes toward public institutions. According to a 2016 Pew Research Center survey, an overwhelming majority of Americans have “a great deal” or “a fair amount” of confidence in the U.S. military and scientists to act in the best interest of the public. In contrast, public confidence in elected officials is much lower: 73% indicated that they have “not too much confidence” or “no confidence”. Fewer than one-third of Americans thought that tech companies do what’s right “most of the time” or “just about always”; moreover, more than half indicate that tech companies have too much power and influence in the U.S. economy. Nevertheless, Americans’ attitudes toward tech companies are not monolithic but vary by company. For instance, our findings mirror those of several non-academic surveys showing that the public distrusts Facebook significantly more than other major tech companies.

Predicting Support for Developing AI Using Institutional Trust

Regression results: predicting support for developing AI using respondents’ trust in different types of actors to develop AI (table available in the PDF version)

Regression results: predicting support for developing AI using respondents’ trust in different types of actors to manage AI (table available in the PDF version)

The American public’s support for developing AI is not predicted by their trust in various actors to develop and manage AI. Our null results stand in contrast to studies of GM foods and nanotechnology, where trust in institutions that develop and regulate the technology is associated with greater support for the technology.

For our analysis, we use multiple linear regression to test whether individual-level trust in various types of institutions can predict support for developing AI. Note that this analysis is not included in our pre-registration and is therefore exploratory. There are four categories of actors (the same ones shown in our figure on trust in actors): U.S. government, international bodies, corporate, and others (e.g., universities and non-profits). In our regressions, the group “others” is the reference group. Support for developing AI is measured on a five-point Likert scale, with -2 meaning “strongly oppose” and 2 meaning “strongly support.” Our regressions control for all the demographic variables shown in Table 3 in the Appendix. Trust in none of the actor types predicts support for developing AI, as seen in the two regression tables above.
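The text does not spell out the exact model specification, so the Python sketch below shows only one plausible way to set up reference-group coding with "others" as the baseline: a trust slope for the reference group plus, for each other actor type, a dummy and a trust interaction whose coefficient is the difference in slope relative to "others". The specification, the 0 to 3 trust coding, the omitted demographic controls, and the helper names are all assumptions for illustration.

```python
import numpy as np

# The four actor categories from the text; "Others" is the reference group.
ACTOR_TYPES = ["U.S. government", "International", "Corporate", "Others"]
REFERENCE = "Others"


def trust_design(trust, actor_type):
    """Intercept, trust, and a dummy plus a trust interaction for each non-reference type."""
    trust = np.asarray(trust, dtype=float)
    actor_type = np.asarray(actor_type)
    cols, names = [np.ones_like(trust), trust], ["(Intercept)", "trust"]
    for t in ACTOR_TYPES:
        if t == REFERENCE:
            continue  # absorbed into the intercept and the baseline trust slope
        d = (actor_type == t).astype(float)
        cols += [d, d * trust]
        names += [t, f"trust:{t}"]
    return np.column_stack(cols), names


def fit_support(trust, actor_type, support):
    """Least-squares coefficients keyed by column name (controls omitted)."""
    X, names = trust_design(trust, actor_type)
    beta, *_ = np.linalg.lstsq(X, np.asarray(support, dtype=float), rcond=None)
    return dict(zip(names, beta))
```

Under this coding, "trust" is the slope for the reference group and "trust:Corporate" (for example) is how much steeper or flatter the corporate slope is; a null result means none of these slopes differs detectably from zero.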

Conclusion

To understand what the American public thinks about the regulation of AI, we conducted a large-scale survey. The survey reveals that while Americans consider all AI governance challenges to have high issue importance, they do not necessarily trust the actors who have the power to develop and manage the technology to act in the public’s interest. Nevertheless, as our exploratory analysis in the previous section shows, institutional distrust does not necessarily predict opposition to AI development.

One direction for future research is to examine other factors that shape the public’s preferences toward AI governance. Technological knowledge, moral and psychological attributes, and perceptions of risks versus benefits are associated with support for GM foods and nanotechnology. Another direction for future research is to improve the measurement of institutional trust. Our existing survey questions focused on the public interest component of trust; future studies could investigate other components of trust, such as competence, transparency, and honesty. Finally, we plan to investigate whether the public’s perceived lack of political power makes them more distrustful of institutions to govern AI. In a recent survey of the British public, a large majority felt that they were unable to influence AI development, and many felt tech companies and governments have disproportionate influence. Research on public perceptions of AI is nascent but could have a broad impact as AI governance moves from the realm of abstract principles into the world of mass politics.

YouGov Sampling and Weights

YouGov interviewed 2387 respondents who were then matched down to a sample of 2000 to produce the final dataset. The respondents were matched to a sampling frame on gender, age, race, and education. The frame was constructed by stratified sampling from the full 2016 American Community Survey (ACS) one-year sample, with selection within strata by weighted sampling with replacement (using the person weights on the public use file).
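Matching "down" from a larger pool to a frame can be illustrated with a greedy nearest-neighbor match without replacement. This is a hypothetical Python sketch only: the text does not describe YouGov's distance metric or matching algorithm, so the plain Euclidean distance on numerically coded variables and the function name `greedy_match` are assumptions.

```python
import numpy as np


def greedy_match(frame, pool):
    """Match each frame record to a distinct pool respondent (greedy nearest neighbor).

    frame, pool: 2-D arrays of numerically coded matching variables
    (e.g., gender, age, race, education). Returns one pool index per frame row.
    Euclidean distance on the coded variables is an assumption of this sketch.
    """
    frame = np.asarray(frame, dtype=float)
    pool = np.asarray(pool, dtype=float)
    if len(pool) < len(frame):
        raise ValueError("pool must be at least as large as the frame")
    available = np.ones(len(pool), dtype=bool)
    matches = []
    for row in frame:
        dist = np.linalg.norm(pool - row, axis=1)
        dist[~available] = np.inf   # each respondent can be used only once
        j = int(np.argmin(dist))
        available[j] = False
        matches.append(j)
    return np.array(matches)
```

In the setting described above, `frame` would have 2000 rows and `pool` 2387; in practice the variables would also need scaling so that, for instance, age in years does not dominate a binary gender code.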

The matched cases were weighted to the sampling frame using propensity scores. The matched cases and the frame were combined and a logistic regression was estimated for inclusion in the frame. The propensity score function included age, gender, race/ethnicity, years of education, and geographic region. The propensity scores were grouped into deciles of the estimated propensity score in the frame and post-stratified according to these deciles.
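The propensity-score step can be sketched in Python with NumPy: stack the matched cases and the frame, fit a logistic regression for frame membership, cut the frame's scores into deciles, and give each sample case the ratio of the frame's share to the sample's share in its decile. This is an illustrative sketch, not YouGov's implementation; the Newton-Raphson fitter, the fixed iteration count, and the function names are assumptions, and `X_sample`/`X_frame` stand in for coded age, gender, race/ethnicity, education, and region with an intercept column.

```python
import numpy as np


def fit_logit(X, y, n_iter=25):
    """Logistic regression by Newton-Raphson; X must include an intercept column."""
    beta = np.zeros(X.shape[1])
    for _ in range(n_iter):
        p = 1.0 / (1.0 + np.exp(-X @ beta))
        grad = X.T @ (y - p)
        hess = X.T @ (X * (p * (1 - p))[:, None])
        beta = beta + np.linalg.solve(hess, grad)
    return beta


def decile_weights(X_sample, X_frame):
    """Weights that post-stratify the sample on deciles of the frame's propensity scores."""
    X_sample = np.asarray(X_sample, dtype=float)
    X_frame = np.asarray(X_frame, dtype=float)
    X = np.vstack([X_sample, X_frame])
    in_frame = np.r_[np.zeros(len(X_sample)), np.ones(len(X_frame))]
    beta = fit_logit(X, in_frame)

    def score(Z):
        return 1.0 / (1.0 + np.exp(-Z @ beta))

    # Nine interior cut points split the frame's scores into ten equal-sized groups.
    cuts = np.quantile(score(X_frame), np.linspace(0, 1, 11)[1:-1])
    frame_bin = np.searchsorted(cuts, score(X_frame), side="right")
    sample_bin = np.searchsorted(cuts, score(X_sample), side="right")
    weights = np.zeros(len(X_sample))
    for b in range(10):
        in_b = sample_bin == b
        if in_b.any():
            # Share of the frame in decile b divided by share of the sample in decile b.
            weights[in_b] = np.mean(frame_bin == b) / np.mean(in_b)
    return weights
```

When every decile contains some sample cases, the weights average to 1 and the weighted sample reproduces the frame's distribution across the ten propensity-score groups.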

The weights were then post-stratified on 2016 U.S. presidential vote choice and on a four-way stratification of gender, age (four categories), race (four categories), and education (four categories) to produce the final weight.
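The text does not say exactly how this final adjustment was computed. One common way to adjust weights to two sets of margins at once (here, vote choice and the four-way gender × age × race × education cells) is raking, also called iterative proportional fitting. The Python sketch below assumes that approach; the function name `rake`, the tolerance, and any category labels are hypothetical.

```python
import numpy as np


def rake(weights, margins, targets, max_iter=100, tol=1e-10):
    """Iterative proportional fitting (raking) of weights to target marginal shares.

    margins: {margin name: category label for each respondent}
    targets: {margin name: {category: target population share}}
    Every respondent must fall in a category that has a target, and each
    margin's target shares must sum to 1.
    """
    w = np.asarray(weights, dtype=float).copy()
    for _ in range(max_iter):
        largest_change = 0.0
        for name, labels in margins.items():
            labels = np.asarray(labels)
            total = w.sum()
            for category, share in targets[name].items():
                mask = labels == category
                factor = share / (w[mask].sum() / total)
                w[mask] *= factor
                largest_change = max(largest_change, abs(factor - 1.0))
        if largest_change < tol:
            break
    return w
```

For this use, `margins` might map "vote" to each respondent's 2016 vote choice and "cell" to a combined label such as gender|age|race|education, with the starting weights taken from the propensity-score step.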

Text of the Questions

Below, we present the survey text as shown to respondents. The numerical codings are shown in parentheses following each answer choice.

AI and/or robots should be carefully managed

Please tell me to what extent you agree or disagree with the following statement.

[Respondents were presented with one statement randomly selected from the list below.]

- AI and robots are technologies that require careful management.

- AI is a technology that requires careful management.

- Robots are technologies that require careful management.

ANSWER CHOICES:

- Totally agree (2)

- Tend to agree (1)

- Tend to disagree (-1)

- Totally disagree (-2)

- I don’t know

Support for developing AI

[All respondents were presented with the following prompt.]

Next, we would like to ask you questions about your attitudes toward artificial intelligence.

Artificial Intelligence (AI) refers to computer systems that perform tasks or make decisions that usually require human intelligence. AI can perform these tasks or make these decisions without explicit human instructions. Today, AI has been used in the following applications:

[Respondents were shown five items randomly selected from the list below.]

- Translate over 100 different languages

- Predict one’s Google searches

- Identify people from their photos

- Diagnose diseases like skin cancer and common illnesses

- Predict who are at risk of various diseases

- Help run factories and warehouses

- Block spam email

- Play computer games

- Help conduct legal case research

- Categorize photos and videos

- Detect plagiarism in essays

- Spot abusive messages on social media

- Predict what one is likely to buy online

- Predict what movies or TV shows one is likely to watch online

QUESTION: How much do you support or oppose the development of AI?

ANSWER CHOICES:

- Strongly support (2)

- Somewhat support (1)

- Neither support nor oppose (0)

- Somewhat oppose (-1)

- Strongly oppose (-2)

- I don’t know

AI governance challenges

We would like you to consider some potential policy issues related to AI. Please consider the following:

[Respondents were shown five randomly selected items from the list below, one item at a time. For ease of comprehension, each item below begins with the shortened label used in the figures.]

- Hiring bias: Fairness and transparency in AI used in hiring: Increasingly, employers are using AI to make hiring decisions. AI has the potential to make less biased hiring decisions than humans. But algorithms trained on biased data can lead to lead to hiring practices that discriminate against certain groups. Also, AI used in this application may lack transparency, such that human users do not understand what the algorithm is doing, or why it reaches certain decisions in specific cases.

- Criminal justice bias: Fairness and transparency in AI used in criminal justice: Increasingly, the criminal justice system is using AI to make sentencing and parole decisions. AI has the potential to make less biased hiring decisions than humans. But algorithms trained on biased data could lead to discrimination against certain groups. Also, AI used in this application may lack transparency such that human users do not understand what the algorithm is doing, or why it reaches certain decisions in specific cases.

- Disease diagnosis: Accuracy and transparency in AI used for disease diagnosis: Increasingly, AI software has been used to diagnose diseases, such as heart disease and cancer. One challenge is to make sure the AI can correctly diagnose those who have the disease and not mistakenly diagnose those who do not have the disease. Another challenge is that AI used in this application may lack transparency such that human users do not understand what the algorithm is doing, or why it reaches certain decisions in specific cases.

- Data privacy: Protect data privacy: Algorithms used in AI applications are often trained on vast amounts of personal data, including medical records, social media content, and financial transactions. Some worry that data used to train algorithms are not collected, used, and stored in ways that protect personal privacy.

- Autonomous vehicles: Make sure autonomous vehicles are safe: Companies are developing self-driving cars and trucks that require little or no input from humans. Some worry about the safety of autonomous vehicles for those riding in them as well as for other vehicles, cyclists, and pedestrians.

- Digital manipulation: Prevent AI from being used to spread fake and harmful content online: AI has been used by governments, private groups, and individuals to harm or manipulate internet users. For instance, automated bots have been used to generate and spread false and/or harmful news stories, audios, and videos.

- Cyber attacks: Prevent AI cyber attacks against governments, companies, organizations, and individuals: Computer scientists have shown that AI can be used to launch effective cyber attacks. AI could be used to hack into servers to steal sensitive information, shut down critical infrastructures like power grids or hospital networks, or scale up targeted phishing attacks.

- Surveillance: Prevent AI-assisted surveillance from violating privacy and civil liberties: AI can be used to process and analyze large amounts of text, photo, audio, and video data from social media, mobile communications, and CCTV cameras. Some worry that governments, companies, and employers could use AI to increase their surveillance capabilities.

- U.S.-China arms race: Prevent escalation of a U.S.-China AI arms race: Leading analysts believe that an AI arms race is beginning, in which the U.S. and China are investing billions of dollars to develop powerful AI systems for surveillance, autonomous weapons, cyber operations, propaganda, and command and control systems. Some worry that a U.S.-China arms race could lead to extreme dangers. To stay ahead, the U.S. and China may race to deploy advanced military AI systems that they do not fully understand or control. We could see catastrophic accidents, such as a rapid, automated escalation involving cyber and nuclear weapons.

- Value alignment: Make sure AI systems are safe, trustworthy, and aligned with human values: As AI systems become more advanced, they will increasingly make decisions without human input. One potential fear is that AI systems, while performing jobs they are programmed to do, could unintentionally make decisions that go against the values of their human users, such as physically harming people.

- Autonomous weapons: Ban the use of lethal autonomous weapons (LAWs): Lethal autonomous weapons (LAWs) are military robots that can attack targets without control by humans. LAWs could reduce the use of human combatants on the battlefield. But some worry that the adoption of LAWs could lead to mass violence. Because they are cheap and easy to produce in bulk, national militaries, terrorists, and other groups could readily deploy LAWs.

- Technological unemployment: Guarantee a good standard of living for those who lose their jobs to automation: Some forecast that AI will increasingly be able to do jobs done by humans today. AI could potentially do the jobs of blue-collar workers, like truckers and factory workers, as well as the jobs of white-collar workers, like financial analysts or lawyers. Some worry that in the future, robots and computers can do most of the jobs that are done by humans today.

- Critical AI systems failure: Prevent critical AI systems failures: As AI systems become more advanced, they could be used by the military or in critical infrastructure, like power grids, highways, or hospital networks. Some worry that the failure of AI systems or unintentional accidents in these applications could cause 10 percent or more of all humans to die.

QUESTION: In the next 10 years, how likely do you think it is that this AI governance challenge will impact large numbers of people in the U.S.?

ANSWER CHOICES:

- Very unlikely: less than 5% chance (2.5%)

- Unlikely: 5-20% chance (12.5%)

- Somewhat unlikely: 20-40% chance (30%)

- Equally likely as unlikely: 40-60% chance (50%)

- Somewhat likely: 60-80% chance (70%)

- Likely: 80-95% chance (87.5%)

- Very likely: more than 95% chance (97.5%)

- I don’t know
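The numbers in parentheses are the midpoints of each answer's probability range. The short Python sketch below shows one plausible way to code the likelihood answers numerically and average them. It assumes that "less than 5%" spans 0 to 5% and "more than 95%" spans 95 to 100%, and that "I don't know" answers are simply dropped. The names `LIKELIHOOD_BINS`, `LIKELIHOOD_CODES`, and `mean_likelihood` are hypothetical and do not come from the paper.

```python
# Answer bins for the likelihood question, in percent. Treating
# "less than 5%" as [0, 5] and "more than 95%" as [95, 100] is an
# assumption made here so that every bin has two endpoints.
LIKELIHOOD_BINS = {
    "Very unlikely": (0.0, 5.0),
    "Unlikely": (5.0, 20.0),
    "Somewhat unlikely": (20.0, 40.0),
    "Equally likely as unlikely": (40.0, 60.0),
    "Somewhat likely": (60.0, 80.0),
    "Likely": (80.0, 95.0),
    "Very likely": (95.0, 100.0),
}

# Each answer is coded as the midpoint of its bin, which reproduces the
# values shown in parentheses (2.5, 12.5, 30, 50, 70, 87.5, 97.5).
LIKELIHOOD_CODES = {label: (lo + hi) / 2 for label, (lo, hi) in LIKELIHOOD_BINS.items()}


def mean_likelihood(responses):
    """Mean perceived likelihood in percent.

    Answers that are not on the scale (e.g. "I don't know") are dropped;
    returns None if no codable answer remains.
    """
    coded = [LIKELIHOOD_CODES[r] for r in responses if r in LIKELIHOOD_CODES]
    if not coded:
        return None
    return sum(coded) / len(coded)


if __name__ == "__main__":
    print(LIKELIHOOD_CODES["Likely"])  # 87.5
    print(mean_likelihood(["Likely", "Very unlikely", "I don't know"]))  # 45.0
```

Coding each bin by its midpoint treats the scale as an interval measure of probability rather than as ordered categories. That is a modeling choice, and whether the authors excluded "I don't know" in exactly this way is not stated in this section.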

QUESTION: In the next 10 years, how likely do you think it is that this AI governance challenge will impact large numbers of people around the world?

ANSWER CHOICES:

- Very unlikely: less than 5% chance (2.5%)

- Unlikely: 5-20% chance (12.5%)

- Somewhat unlikely: 20-40% chance (30%)

- Equally likely as unlikely: 40-60% chance (50%)

- Somewhat likely: 60-80% chance (70%)

- Likely: 80-95% chance (87.5%)

- Very likely: more than 95% chance (97.5%)

- I don’t know

QUESTION: In the next 10 years, how important is it for tech companies and governments to carefully manage the following challenge?

ANSWER CHOICES:

- Very important (3)

- Somewhat important (2)

- Not too important (1)

- Not at all important (0)

- I don’t know
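The importance answers carry the numeric codes 0 to 3 shown in parentheses. As a minimal sketch, assuming "I don't know" answers are set aside rather than coded, one challenge's ratings could be summarized as follows. The function name and the returned fields are illustrative only.

```python
# Numeric codes taken from the answer choices above.
IMPORTANCE_CODES = {
    "Very important": 3,
    "Somewhat important": 2,
    "Not too important": 1,
    "Not at all important": 0,
}


def summarize_importance(responses):
    """Summarize importance ratings for one governance challenge.

    Returns the mean score on the 0-3 scale and the share answering
    "Very important", both over codable answers only, plus the number
    of answers that could not be coded (such as "I don't know").
    """
    coded = [IMPORTANCE_CODES[r] for r in responses if r in IMPORTANCE_CODES]
    return {
        "mean": sum(coded) / len(coded) if coded else None,
        "share_very_important": coded.count(3) / len(coded) if coded else None,
        "n_uncoded": len(responses) - len(coded),
    }


if __name__ == "__main__":
    answers = ["Very important", "Very important", "Somewhat important",
               "Not too important", "I don't know"]
    print(summarize_importance(answers))
```

For the five example answers, the four codable ones sum to 9, giving a mean of 2.25, a "Very important" share of 0.5, and one uncoded answer.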

Trust of actors to develop AI

QUESTION: How much confidence, if any, do you have in each of the following to develop AI in the best interests of the public?

[Respondents were shown five items randomly selected from the list below. We included explainer text for actors not well known to the public; respondents could view the explainer text by hovering their mouse over the actor’s name. The items and the answer choices were shown in a matrix format.]

- The U.S. military

- The U.S. civilian government

- National Security Agency (NSA)

- Federal Bureau of Investigation (FBI)

- Central Intelligence Agency (CIA)

- North Atlantic Treaty Organization (NATO); explainer text for NATO: NATO is a military alliance that includes 28 countries including most of Europe, as well as the U.S. and Canada.

- An international research organization (e.g., CERN); explainer text for CERN: The European Organization for Nuclear Research, known as CERN, is a European research organization that operates the largest particle physics laboratory in the world.

- Tech companies

- Apple

- Microsoft

- Amazon

- A non-profit AI research organization (e.g., OpenAI); explainer text for OpenAI: OpenAI is an AI non-profit organization with backing from tech investors that seeks to develop safe AI.

- University researchers

ANSWER CHOICES:

- A great deal of confidence (3)

- A fair amount of confidence (2)

- Not too much confidence (1)

- No confidence (0)

- I don’t know
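Each respondent saw only five actors drawn from the list above. The sketch below illustrates that assignment step, assuming uniform sampling without replacement. The survey platform's actual randomization procedure is not described in this section, and `DEVELOP_ACTORS` and `assign_items` are hypothetical names.

```python
import random

# The actors listed for the "develop AI" question above.
DEVELOP_ACTORS = [
    "The U.S. military",
    "The U.S. civilian government",
    "National Security Agency (NSA)",
    "Federal Bureau of Investigation (FBI)",
    "Central Intelligence Agency (CIA)",
    "North Atlantic Treaty Organization (NATO)",
    "An international research organization (e.g., CERN)",
    "Tech companies",
    "Apple",
    "Microsoft",
    "Amazon",
    "A non-profit AI research organization (e.g., OpenAI)",
    "University researchers",
]


def assign_items(items, k=5, rng=None):
    """Draw k distinct items for one respondent, without replacement.

    random.sample also returns the items in random order, so the row
    order in the matrix is randomized as well.
    """
    rng = rng or random.Random()
    return rng.sample(items, k)


if __name__ == "__main__":
    # A seeded generator makes an assignment reproducible.
    print(assign_items(DEVELOP_ACTORS, rng=random.Random(42)))
```

Passing an explicit `random.Random` instance keeps each respondent's draw reproducible for auditing without touching the module-level random state.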

Trust of actors to manage AI

QUESTION: How much confidence, if any, do you have in each of the following to manage the development and use of AI in the best interests of the public?

[Respondents were shown five items randomly selected from the list below. We included explainer text for actors not well known to the public; respondents could view the explainer text by hovering their mouse over the actor’s name. The items and the answer choices were shown in a matrix format.]

- U.S. federal government

- U.S. state governments

- International organizations (e.g., United Nations, European Union)

- The United Nations (UN)

- An intergovernmental research organization (e.g., CERN); explainer text for CERN: The European Organization for Nuclear Research, known as CERN, is a European research organization that operates the largest particle physics laboratory in the world.

- Tech companies

- Apple

- Microsoft

- Amazon

- Non-government scientific organizations (e.g., AAAI); explainer text for AAAI: Association for the Advancement of Artificial Intelligence (AAAI) is a non-government scientific organization that promotes research in, and responsible use of AI.

- Partnership on AI, an association of tech companies, academics, and civil society groups

ANSWER CHOICES:

- A great deal of confidence (3)

- A fair amount of confidence (2)

- Not too much confidence (1)

- No confidence (0)

- I don’t know
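Because each respondent rated only a random subset of actors, an actor's average confidence has to be computed over just the respondents who were shown that actor. The sketch below does this with the 0 to 3 codes above. It assumes each respondent's answers are stored as a dict from actor name to answer label and that "I don't know" answers are excluded. Both the data layout and the function name are hypothetical.

```python
from collections import defaultdict

# Numeric codes taken from the answer choices above.
CONFIDENCE_CODES = {
    "A great deal of confidence": 3,
    "A fair amount of confidence": 2,
    "Not too much confidence": 1,
    "No confidence": 0,
}


def mean_confidence_by_actor(respondents):
    """Mean confidence (0-3) per actor.

    `respondents` is a list of dicts mapping actor name -> answer label.
    Actors a respondent was not shown are simply absent from that dict,
    so each actor's mean uses only respondents who saw it and gave a
    codable answer.
    """
    sums = defaultdict(int)
    counts = defaultdict(int)
    for answers in respondents:
        for actor, label in answers.items():
            if label in CONFIDENCE_CODES:
                sums[actor] += CONFIDENCE_CODES[label]
                counts[actor] += 1
    return {actor: sums[actor] / counts[actor] for actor in counts}


if __name__ == "__main__":
    data = [
        {"Apple": "A fair amount of confidence", "Amazon": "No confidence"},
        {"Apple": "A great deal of confidence", "Microsoft": "I don't know"},
    ]
    print(mean_confidence_by_actor(data))  # {'Apple': 2.5, 'Amazon': 0.0}
```

In this toy data, Microsoft receives no codable answer and is therefore left out of the result rather than being assigned a mean of zero.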

Additional Tables and Figures

Results from a saturated regression predicting perceived issue importance from demographic variables, the AI governance challenge, and the interactions between these two sets of variables. Coefficients for the interaction terms are not shown because of space constraints.

Table tab-aigovregsat is not reproduced here; see the PDF of the original paper.
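The caption describes a model whose predictors include demographic variables, indicators for the governance challenge being rated, and every product between the two. As an illustration only, the sketch below builds one row of such a design matrix. The demographic variable names, the choice of reference challenge, and `design_row` itself are hypothetical; this section does not give the paper's actual covariates or coding, and fitting the model (e.g. by ordinary least squares) is not shown.

```python
from itertools import product


def design_row(demographics, challenge, challenges, reference):
    """Build one design-matrix row with main effects and interactions.

    demographics: dict of numeric covariates (e.g. 0/1 dummies).
    challenge: the governance challenge this importance rating refers to.
    challenges: all challenge labels; `reference` is the omitted category.
    """
    row = {"intercept": 1.0}
    # Demographic main effects.
    row.update({name: float(value) for name, value in demographics.items()})
    # One dummy per non-reference challenge.
    dummies = {
        f"challenge[{c}]": 1.0 if c == challenge else 0.0
        for c in challenges
        if c != reference
    }
    row.update(dummies)
    # Every demographic x challenge-dummy interaction.
    for (d_name, d_val), (c_name, c_val) in product(demographics.items(), dummies.items()):
        row[f"{d_name}:{c_name}"] = float(d_val) * c_val
    return row


if __name__ == "__main__":
    CHALLENGES = ["Data privacy", "Surveillance", "Autonomous vehicles"]
    print(design_row({"female": 1, "age_over_65": 0}, "Surveillance",
                     CHALLENGES, reference="Data privacy"))
```

In a row for the reference challenge, every challenge dummy and interaction is zero, so the demographic coefficients describe differences for that baseline challenge. Each interaction coefficient then measures how a group's rating of another challenge departs from that baseline.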

Attribution

arXiv:1912.12835v1 [cs.CY]

License: cc-by-4.0