- Papers

Papers is Alpha. This content is part of an effort to make research more accessible, and (most likely) has lost some details from the original. You can find the original paper here.

Introduction

With the rise of automation and digitization, the term “big data” has become ubiquitous across a wide range of industries. The rapid growth of cloud computing and tremendous progress in machine learning has attracted businesses to hire ML engineers to make the most value out of their data. ML has grown in popularity as a solution to various software engineering issues, including speech recognition, image processing, natural language processing etc. Nevertheless, The development of an ML model requires significant human expertise. It is necessary to use intuition based on prior knowledge and experience to determine the best ML algorithm/architecture for each dataset. This high demand of ML expertise coupled with the shortage of ML developers and the repetitive nature of many ML pipeline processes have inspired the adoption of low-code machine learning (i.e., AutoML) tools/platforms.

The primary goal of low-code AutoML services is to reduce the manual efforts of developing different ML pipelines, which thus helps in accelerating their development and deployment. This is necessary due to the fact that a business may have a specific business requirement and the domain experts/stakeholders in the business know it, but they lack the skills to develop an ML application. Indeed, for ML development, there are often primarily two key users as follows.

-

Domain Experts are knowledgeable about the problem domain (e.g., rain forecasting, cancer diagnosis, etc.) where the ML is applied. So that they have a deep understanding of the scope of the problem and the dataset.

-

ML Experts are experienced with the nuances of the ML model. They have a greater understanding and experience in selecting the appropriate ML algorithm/architecture, engineering features, training the model, and evaluating its performance.

Both of the domain and ML experts are equally important for the success of the ML-based solution development, and are often complementary to each other during the design and development of the solutions. AutoML aims to make ML more accessible to domain experts by providing end-to-end abstraction from data filtering to model designing & training to model deployment & monitoring. AutoML is becoming an essential topic for software engineering researchers as more and more AutoML applications are developed and deployed. AutoML research is progressing rapidly as academia and industry work collaboratively by making ML research their priority. For example, currently, in at least some experimental settings, AutoML tools yield better results than manually designed models by ML experts(c.f. background of AutoML in sec-background).

Machine learning practitioners face many challenges because of the interdisciplinary nature of the ML domain. They require not only the software engineering expertise to configure and set up new libraries but also in data visualization, linear algebra and statistics. There have been quite some studieson the challenges of deep learning and ML tools, but there are no formal studies on the challenges AutoML practitioners have asked about on public forums. There are also studies on developers’ discussion on machine learning domainand deep learning frameworks, but there are no studies on low-code, i.e., AutoML tools/platforms (c.f. related work in sec-related-work).

The online developer forum Stack Overflow (SO) is the most popular Q&A site with around 120 million posts and 12 million registered users. Several research has conducted by analyzing developers’ discussion of SO posts (e.g., traditional low-code practitioners discussion, IoT, big data, blockchain, docker developers challenges, concurrency, microservices) etc. The increasing popularity and distinctive character of low-code ML software development approaches (i.e., AutoML tools/frameworks) make it imperative for the SE research community to explore and analyze what practitioners shared publicly. To that end, in this paper, we present an empirical study of 14.3K SO posts (e.g., 10.5k Q + 3.3k Acc Ans) relating to AutoML-related discussion in SOto ascertain the interest and challenges of AutoML practitioners (c.f. study methodology in sec-methodology). We use SOTorrent datasetand Latent Dirichlet Allocation (LDA) to systematically analyze practitioners’ discussed topics similar to other studies. We explore the following four research questions by analyzing the dataset (c.f. results in sec-results).

RQ1. What topics do practitioners discuss regarding AutoML services on SO? As low-code machine learning (i.e., AutoML) is an emerging new paradigm, it is vital to study publicly shared queries by AutoML practitioners on a Q&A platform such as SO. We extract 14.3K AutoML related SO posts and apply the LDA topic modelling methodto our dataset. We find a total of 13 AutoML-related topics grouped into four categories: MLOps (43% Questions, 5 topics),Model (28% Questions, 4 topics), Data (27% Questions, 3 topics), and Documentation (2% Questions, topic). We find that around 40% of the questions are related to supported features of a specific AutoML solution providers, around 15% of questions are related to model design and development, and 20% of questions are related to data pre-processing and management. We find relatively fewer questions on programming, and details configuration on ML algorithm as AutoML aims to provide a higher level abstraction over data processing and model design and development pipeline. However, AutoML practitioners still struggle with development system configuration and model deployment.

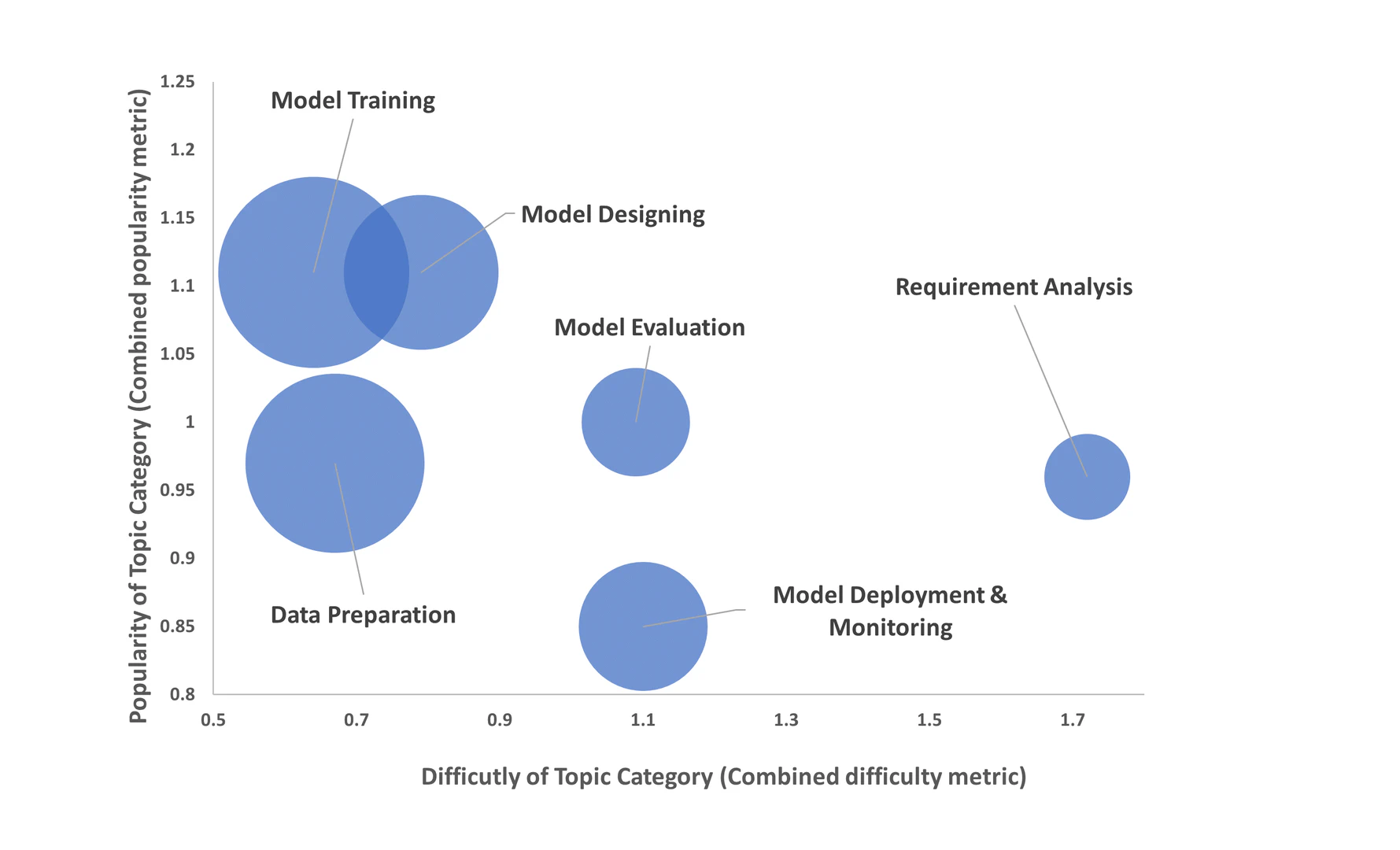

RQ2. How AutoML topics are distributed across machine learning life cycle phases? AutoML aims to provide an end-to-end pipeline service to streamline ML development. The successful adoption of this technology largely depends on how effective this is on different machine learning life cycle (MLLC) phases. So, following related studies, we manually analyze and annotate 348 AutoML questions into one of six ML life cycle stages by taking statically significant questions from all the four topic categories. We find that Model training, i.e., the implementation phase is the most dominant (28.7% questions), followed by Data Preparation (24.7% questions) and Model Designing (18.7% questions).

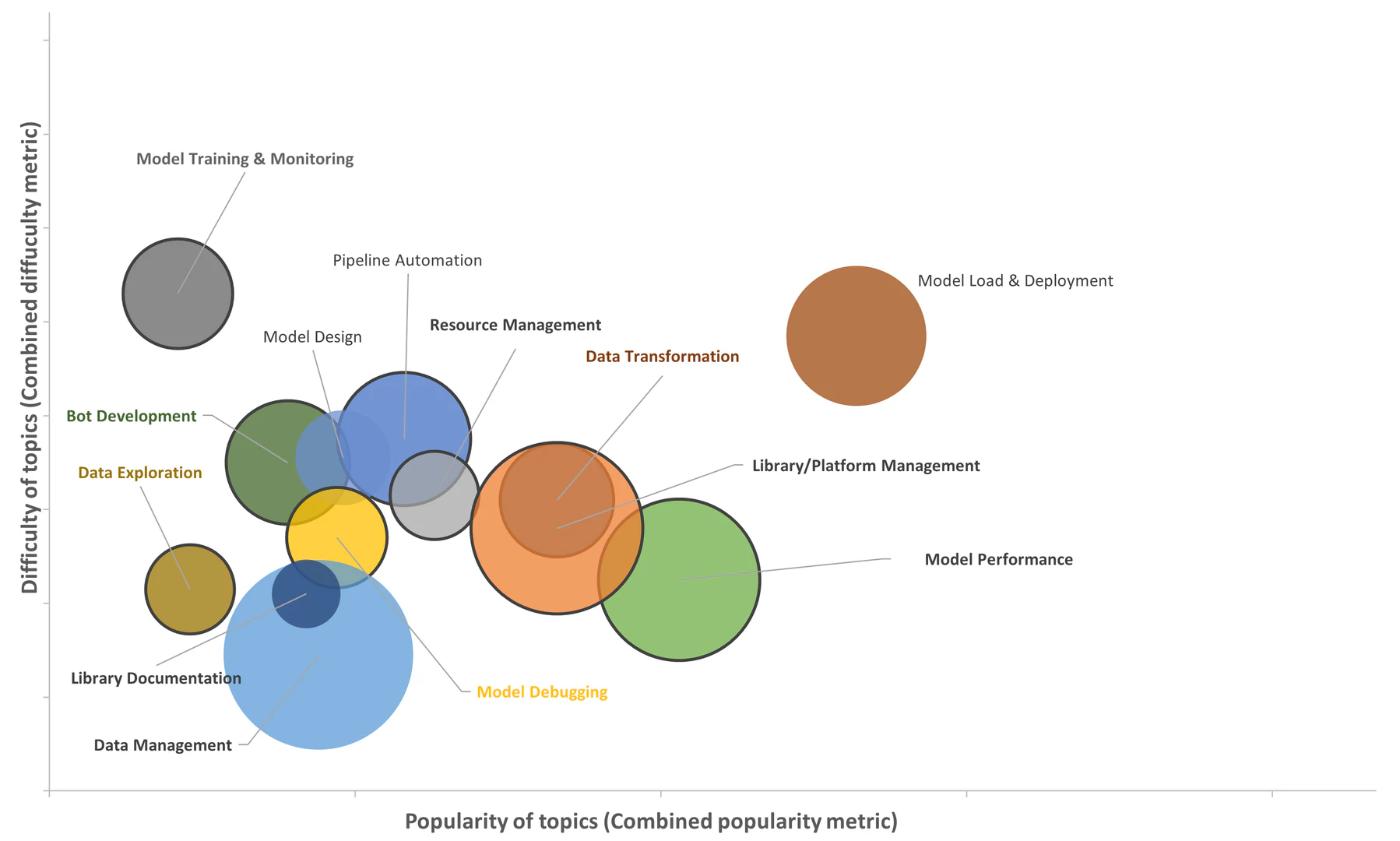

RQ3. What AutoML topics are most popular and difficult on SO? From our previous research questions, we find that AutoML practitioners discuss diverse topics in different stages of machine learning development. However, some of these questions are popular and have larger community support. We find that AutoML practitioners find the MLOps topic category most popular and challenging. AutoML practitioners find the Requirement Analysis phase the most challenging and popular, followed by the model deployment & monitoring MLLC phase. We find that AutoML practitioners find Model Deployment & Load topic to be most challenging regarding the percentage of questions without accepted answers and median hours required to get accepted answers. We also find that questions related to improving the performance of AutoML models are most popular among AutoML practitioners regarding average view count and average score.

RQ4. How does the topic distribution between cloud and non-cloud AutoML services differ? Our analyzed dataset contains both cloud-based and non-cloud-based AutoML services. Cloud-based solutions provides an end-to-end pipeline for data collecting to model operationalization, whereas non-cloud-based services offer greater customizability for data preparation and model development. We find that, in our dataset around 65% SO questionsbelong to cloud and 35% belong to non-cloud based AutoML solution. Cloud-based AutoML solution predominate over Model Deployment and Monitoring phase (82%) and non-cloud based AutoML solution predominate over Model Evaluation phase. MLOps topic category (i.e., 80%) is predominated over cloud-based AutoML solutions, while Model (i.e., 59%) topic category is predominated by non-cloud based AutoML solutions. Our study findings offer valuable insights to AutoML researchers, service providers, and educators regarding what aspect of AutoML requires improvement from the practitioners’ perspective (c.f. discussions and implications in sec-discussion). Specifically, our findings can enhance our understanding of the AutoML practitioners’ struggle and help the researchers and platform vendors better focus on the specific challenges. For example, AutoML solutions lack better support for deployment, and practitioners can prepare for potentially challenging areas. In addition, all stakeholders and practitioners of AutoML can collaborate to provide enhanced documentation and tutorials. The AutoML service vendors can better support model deployment, monitoring and fine-tuning.

Replication Package: The code and data are shared in https://github.com/disa-lab/automl-challenge-so

Background

AutoML as Low-code Tool/Platform for ML

Recent advancements in machine learning (ML) have yielded highly promising results for a variety of tasks, including regression, classification, clustering, etc., on diverse dataset types (e.g., texts, images, structured/unstructured data). The development of an ML model requires significant human expertise. Finding the optimal ML algorithm/architecture for each dataset necessitates intuition based on past experience. ML-expert and domain-expert collaboration is required for these laborious and arduous tasks. The shortage of ML engineers and the tedious nature of experimentation with different configuration values sparked the idea of a low-code approach for ML. This low-code machine learning solution seeks to solve this issue by automating some ML pipeline processes and offering a higher level of abstraction over the complexities of ML hyperparameter tuning, allowing domain experts to design ML applications without extensive ML expertise. It significantly increases productivity for machine learning practitioners, researchers, and data scientists. The primary goal of low-code AutoML tools is to reduce the manual efforts of different ML pipelines, thus accelerating their development and deployment.

In general terms, a machine learning program is a program that can learn from experience, i.e., data. In the traditional approach, a human expert analyses data and explores the search space to find the best model. AutoMLaims to democratize machine learning to domain experts by automating and abstracting machine learning-related complexities. It aims to solve the challenge of automating the Combined Algorithm Selection and Hyper-parameter tuning (CASH) problem. AutoML is a combination of automation and ML. It automates various tasks on the ML pipeline such as data prepossessing, model selection, hyper-parameter tuning, and model parameter optimization. They employ various types of techniques such as grid search, genetics, and Bayesian algorithms. Some AutoML services also help with data visualization, model interpretability, and deployment. It helps non-ML experts develop ML applications and provides opportunities for ML experts to engage in other tasks. The lack of ML experts and exponential growth in computational power make AutoML a hot topic for academia and the industry. Research on AutoML research is progressing rapidly; in some cases, at least in the experimental settings, AutoML tools are producing the best hand-designed models by ML experts.

AutoML Approaches

AutoML approach can be classified into two categories

-

AutoML for traditional machine learning algorithms. It focuses on data pre-processing, feature engineering (i.e., finding the best set of variables and data encoding technique for the input dataset), ML algorithm section, and hyperparameter tuning.

-

AutoML for deep learning algorithms: this includes Neural architecture search (NAS), which generates and assesses a large number of neural architectures to find the most fitting one by leveraging reinforcement learningand genetic algorithm.

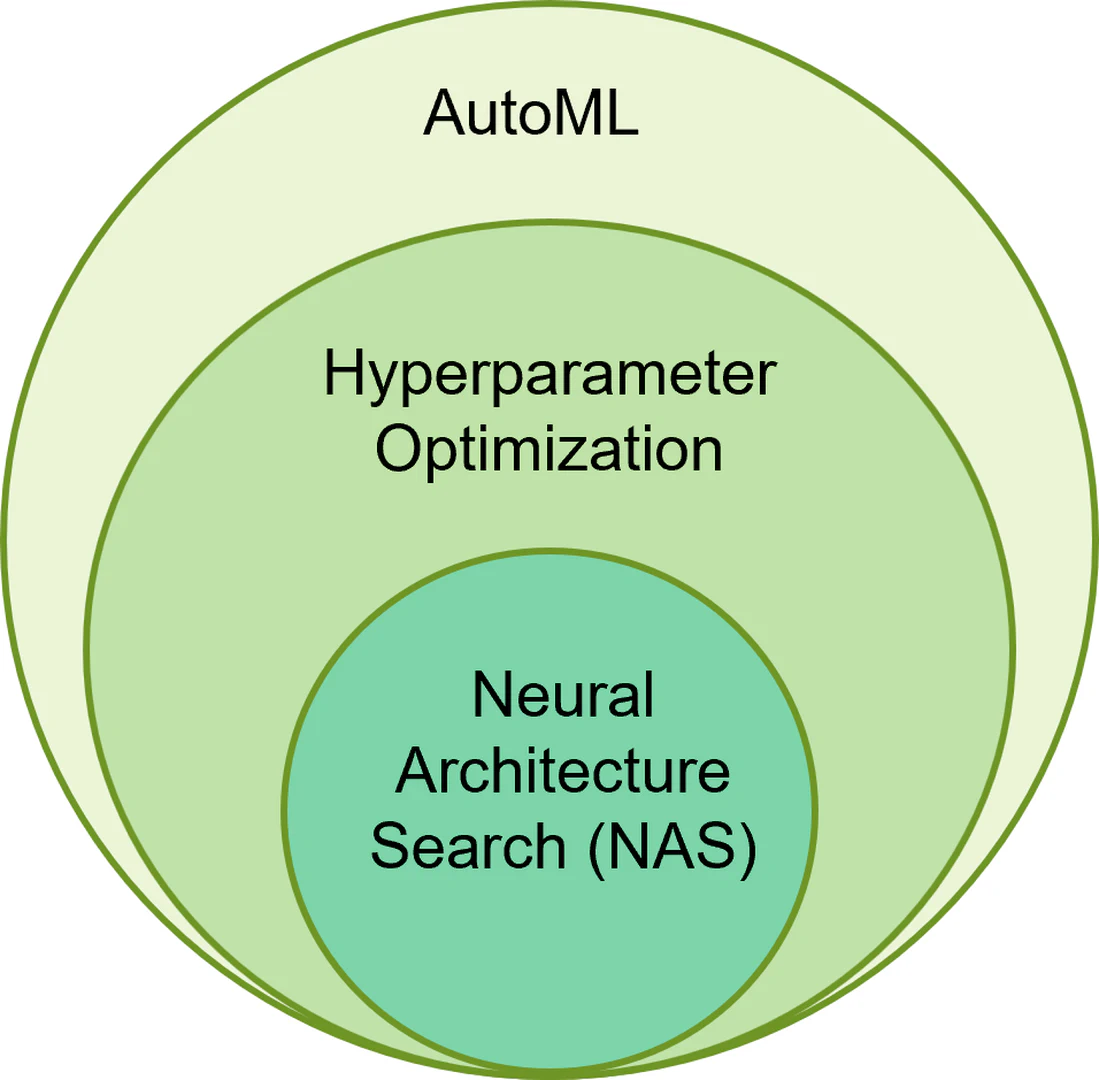

Figure fig-automl-nas provides a high-level overview of AutoML for NAS and hyper-parameter optimization. The innermost circle represents the NAS exploration for the DL models, and the middle circle represents the hyper-parameter optimization search for both NAS and traditional ML applications.

AutoML Services

Depending on their mode of access/delivery, currently availalbe AutoML tools can be used over the cloud or via a stand-alone application in our desktop or internal server computers.

AutoML Cloud Service/Platforms. Large cloud providers and tech businesses started offering Machine Learning as a Service projects to make ML even more accessible to practitioners due to the rising popularity of AutoML tools. Some of these platforms specialize in various facets of AutoML, such as structured/unstructured data analysis, computer vision, natural language processing, and time series forecasting. In 2016, Microsoft released AzureMLruns on top of Azure cloud and assists ML researchers/engineers with data processing and model development. H2O Automlwas released in 2016, followed by H2O-DriverlessAIin 2017. It is a customizable data science platform with automatic feature engineering, model validation and selection, model deployment, and interpretability. In 2017 Google released Google Cloud AutoMLthat provides end-to-end support to train the custom model on the custom dataset with minimal effort. Some other notable cloud platforms are Darwin (2018)AutoML cloud platform for data science and business analytics, TransmogrifAI (2018)runs on top of Salesforce’s Apache Spark ML for structured data. This cloud-based AutoML platform enables end-to-end data analytics and AI solutions for nearly any sector.

AutoML Non-cloud Service (Tools/Library). The initial AutoML tools were developed in partnership with academic researchers and later by startups and large technology corporations. Researchers from the University of British Columbia and Freiburg Auto-Weka (2013), which is one of the first AutoML tools. Later, researchers from the University of Pennsylvania developed TPOT (2014), and researchers from the University of Freiburg released Auto-Sklearn (2014). These three AutoML tools provide a higher level abstraction over the popular ML library “SciKit-Learn” (2007). A similar research effort was followed to provide an automated ML pipeline over other popular ML libraries. University of Texas A&M University developed Auto-Keras (2017)that provides runs on top of Kerasand TensorFlow. Some of the other notable AutoML tools are MLJar (2018), DataRobot (2015), tool named “auto_ml” (2016). These AutoML tools provide a higher level of abstraction over traditional ML libraries such as TensorFlow, Keras, and Scikit-learn and essentially automate some ML pipeline steps (i.e., algorithm selection and hyper-parameter turning).

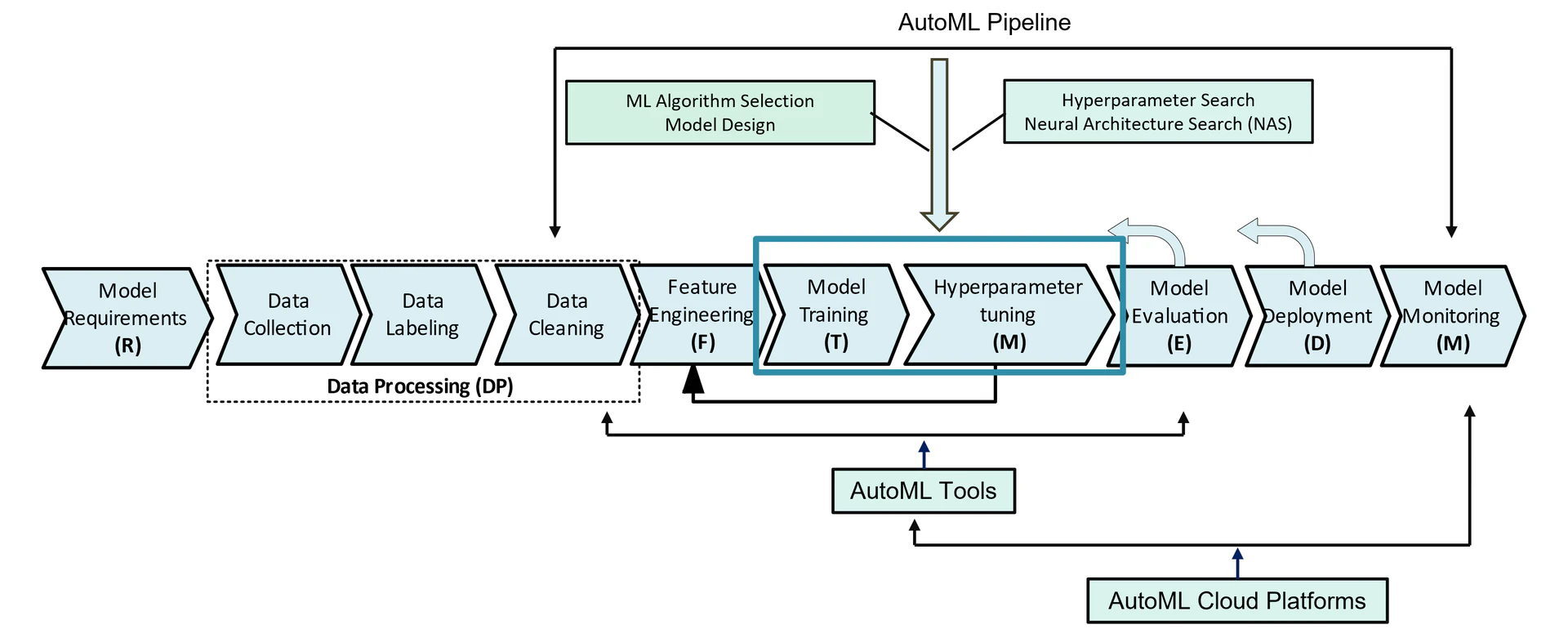

In Figure fig-automl-pipeline, we summarize the ML pipeline services offered by AutoML services. Traditional ML pipeline consists of various steps such as Model requirement, Data processing, feature engineering, model deigning, model evaluation, deployment, and monitoring. AutoML solutions aim to automate various stages of these pipelines, from data cleaning to Model deployment(Fig. fig-automl-pipeline). AutoML non-cloud solutions (i.e., tools/frameworks) mainly focus on automating different data filtering, model selection, and hyperparameter optimization. AutoML cloud platforms encapsulate the services of AutoML tools and provide model deployment and monitoring support. They usually provide the necessary tools for data exploration and visualization.

Study Data Collection and Topic Modeling

In this Section, we discuss our data collection process to find AutoML-related discussion, i.e., posts (Section sub-sec-data-collection). Then, we discuss in detail our data pre-processing and topic modeling steps on these posts (Section sub-sec-topic-modeling).

Data Collection

We collectAutoML related SO posts in the following three steps: [(1)]

-

Download SO data dump,

-

Identify AutoML-related tag list, and

-

Extract AutoML-related posts using our AutoML tag list. We describe the steps in detail below.

Step 1: Download SO data dump. For this study, we use the most popular Q&A site, Stack Overflow (SO), where practitioners from diverse backgrounds discuss various software and programming-related issues. First, we download the latest SO data dumpof June 2022, available during the start of this study. Following related studies, we use the contents of the “Post.xml” file, which contains information about each post like the post’s unique ID, title, body, associated tags, type (Question or Answer), creation date, favorite count, view-count, etc. Our data dump includes developers’ discussions of 14 years from July 2008 to June 2022 and contains around 56,264,787 posts. Out of them, 22,634,238 (i.e., 40.2%) are questions, 33,630,549 (i.e., 59.7%) are answers, and 11,587,787 questions (i.e., 51.19%) had accepted answers. Around 12 million users from all over the world participated in the discussions.

Each SO post contains 19 attributes, and some of the relevant attributes for this study are: [(1)]

-

Post’s unique Id, and creation time,

-

Post’s body with problem description and code snippets,

-

Post’s score, view, and favorite count,

-

Tags associated with a post,

-

Accepted answer Id.

Step 2: Identify AutoML tags. We need to identify AutoML related SO tags to extract AutoML-related posts, i.e., practitioners’ discussions. We followed a similar procedure used in prior workto find relevant SO tags. In Step 1, we identify the initial AutoML-related tags and call them $T_{init}$ . In Step 2, we finalize our AutoML tag list following approaches of related work. Our final tag list $T_{final}$ contains 41 tags from the top 18 AutoML service providers. We discuss each step in detail below.

(1) Identifying Initial AutoML tags. Following our related work, first, we compile a list of topAutoML services. First, we make a query in google with the following two search terms “top AutoML tools” and “top AutoML platforms”. We select the first five search results for each query that contain various websites ranked the best AutoML tools/platforms. The full list of these websites is available in our replication package. So, from these ten websites and the popular technological research website Gartner[https://www.gartner.com/reviews/market/data-science-machine-learning-platforms] we create a list of 38 top AutoML solutions. Then for each of these AutoML platforms, we search for SO tags. For example, We search for “AutoKeras” via the SO search engine. We find a list of SO posts discussing the AutoKeras tool. We compile a list of potential tags for this platform. For example, we notice most of these questions contain “keras” and “auto-keras” tags. Then, we manually examine the metadata of these tags [https://meta.stackexchange.com/tags]. For example, the metadata for the “auto-keras” tag says, “Auto-Keras is an open source software library for automated machine learning (AutoML), written in python. A question tagged auto-keras should be related to the Auto-Keras Python package.” The metadata for the “keras” tag says, “Keras is a neural network library providing a high-level API in Python and R. Use this tag for questions relating to how to use this API. Please also include the tag for the language/backend ([python], [r], [tensorflow], [theano], [cntk]) that you are using. If you are using tensorflow’s built-in keras, use the [tf.keras] tag.”. Therefore, we choose the “auto-keras” tag for the “AutoKeras” AutoML library. Not all AutoML platforms have associated SO tags; thus, they were excluded. For example, for AutoFolioAutoML library, there are no SO tags; thus, we exclude this from our list. This way, we find 18 SO tags for 18 AutoML services and call it $T_{init}$ . The final AutoML solutions and our initial tag list are available in our replication package.

(2) Finalizing AutoML-related tags. Intuitively, there might be variations to tags of 18 AutoML platforms other than those in $T_{init}$ . We use a heuristic technique from related previousworksto find the other relevant AutoML tags. First, we denote the entire SO data as $Q_{all}$ . Second, we extract all questions $Q$ that contain any tag from $T_{init}$ . Third, we create a candidate tag list $T_{candidate}$ using the relevant tags in the questions $Q$ . Fourth, we analyze and select significantly relevant tags from$T_{candidate}$ for ourAutoML discussions. Following related works, we compute relevance and significance for each tag $t$ in $T_{candidate}$ with respect to $Q$ (i.e., the extracted questions that have at least one tag in $T_{init}$ ) and $Q_{all}$ (i.e., our data dump) as follows,

\[ ( Significance) \ \ S_{tag} \ =\ \ \frac{\#\ of\ ques.\ with\ the\ tag\ t\ in\ Q}{\ \ \#\ of\ ques.\ with\ the\ tag\ t\ in\ Q_{all}} \]\[ ( Relevance) \ \ R_{tag} \ =\ \ \frac{\#\ of\ questions\ with\ tag\ t\ in\ Q}{\ \ \#\ of\ questions\ in\ Q} \] A tag $t$ is significantly relevant toAutoML if the $S_{tag}$ and$R_{tag}$ are higher than a threshold value. Similar to related study, we experimented with a wide range of values of $S_{tag}$ = {0.05, 0.10, 0.15, 0.20, 0.25, 0.30, 0.35} and$R_{tag}$ = {0.001, 0.005, 0.010, 0.015, 0.020, 0.025, 0.03}. From our analysis, we find that we increase $S_{tag}$ and$R_{tag}$ the total number of recommend tags decreases. For example, we find that for $S_{tag}$ = .05 and$R_{tag}$ = 0.001 the total number of recommended tags for AutoML is 30 which is highest. However, not all these recommended tags are AutoML-related. For example, “amazon-connect” tag’s $S_{tag}$ = 0.24 and $R_{tag}$ = 0.006 and this tag is quite often associated with questions related to other AutoML tags such as “aws-chatbot”, “amazon-machine-learning” but it mainly contains discussion related to AWS cloud based contact center solutions rather than AutoML related discussion. So, we remove this from our final tag list. Similarly, we find some other tags such as “splunk-formula”, “amazon-ground-truth” etc are frequently correlated with other AutoML platform tags, although they do not contain AutoML related discussions. After manually analysing these 31 tags we find that 23 new tags are relevant to AutoML-related discussions. So, after combining with out initial taglist, i.e., $T_{init}$ , our final tag list $T_{final}$ contains 41 significantly relevant AutoML-related tags which are:

- Final Tag List $T_{final}$

= {

amazon-machine-learning',automl’,aws-chatbot',aws-lex’,azure-machine-learning-studio',azure-machine-learning-workbench’,azureml',azureml-python-sdk’,azuremlsdk',driverless-ai’,ensemble-learning',gbm’,google-cloud-automl-nl',google-cloud-vertex-ai’,google-natural-language',h2o.ai’,h2o4gpu',mlops’,sparkling-water',splunk-calculation’,splunk-dashboard',splunk-query’,splunk-sdk',amazon-sagemaker’,tpot',auto-sklearn’,rapidminer',pycaret’,amazon-lex',auto-keras’,bigml',dataiku’,datarobot',google-cloud-automl’,h2o',mljar’,splunk',transmogrifai’,ludwig',azure-machine-learning-service’, `pycaret'}

Step 3: Extracting AutoML related posts. Hence, our final dataset $B$ contained 14,341posts containing 73.7% Questions (i.e., 10,549 Q) and 26.3% Accepted Answers (i.e., 3,792).

Topic Modeling

We produceAutoML topics from the extracted posts in three steps: [(1)]

-

Preprocess the posts,

-

Find the optimal number of topics, and

-

Generate topics. We discuss the steps in detail below.

Step 1. Preprocess the posts. For each post text, we remove noise using the technique in related works. First, we remove the code snippets from the post body, which is inside code/code tag, HTML tags such as (p/p, a/a, li/li etc.), and URLs. Then we remove the stop words such as “am”, “is”, “are”, “the”, punctuation marks, numbers, and non-alphabetical characters using the stop word list from MALLET, NLTK. After this, we use porter stemmerto get the stemmed representations of the words, e.g., “waiting”, “waits” - all of which are stemmed to the base form of the word “wait”.

Step 2. Finding the optimal number of topics. After the prepossessing, we use Latent Dirichlet Allocationand the MALLET toolto find out the AutoML-related topics in our SO discussions. We follow similar studies using topic modelingin SO dataset. Our goal is to find the optimal number of topics $K$ for our AutoML dataset $B$ so that the coherence score is high, i.e., encapsulation of underlying topics is more coherent. We use Gensim packageto determine the coherence score following previous research. We experiment with different values of $K$ that range from {5, 10, 15, 20, 25, 30, 35, 40, 45, 50, 55, 60, 65, 70} and for each value, we runMALLET LDA on our dataset for 1000 iterations. Then we observe how the coherence score changes with respect to $K$ . As LDA topic modeling has some inherited randomness, we ran our experiment 3 times and found that we got the highest coherence score for $k$ = 15. Choosing the right value of $K$ is crucial because multiple real-world topics merge for smaller values of $K$ , and for a large value of $K$ , a topic breaks down. MALLET uses two hyper-parameters, $\alpha$ , and $\beta$ , to distribute words and posts across the generated topics. Following the previous works, in this study we use the standard values $50/K$ and 0.01 for hyper-parameters $\alpha$ and $\beta$ in our experiment.

Step 3. Generating topics. Topic modeling is a systematic approach to extracting a set of topics by analyzing a collection of documents without any predefined taxonomy. Each document, i.e., the post, has a probability distribution of topics, and every topic has a probability distribution of a set of related words. We generate 15 topics using the above LDA configuration on our AutoML dataset $B$ . Each topic model provides a list of the top $N$ words and a list of $M$ posts associated with the topic. A topic in our context comprises the 30 most commonly co-related terms, which indicate an AutoML development-related concept. Each post has a correlation score between 0 to 1, and following the previous work, we assign a document, i.e., a post with a topic that corresponds most.

Empirical Study

We answer the following four research questions by analyzing the topics we found in our 14.3K AutoML related posts in Stack Overflow (SO).

-

What topics do practitioners discuss regarding AutoML services on SO?

-

How are the AutoML topics distributed across machine learning life cycle phases?

-

What AutoML topics are most popular and difficult on SO?

-

How does the distribution of the topics differ between cloud and non-cloud AutoML services?

Discussions

Evolution of AutoML related discussion

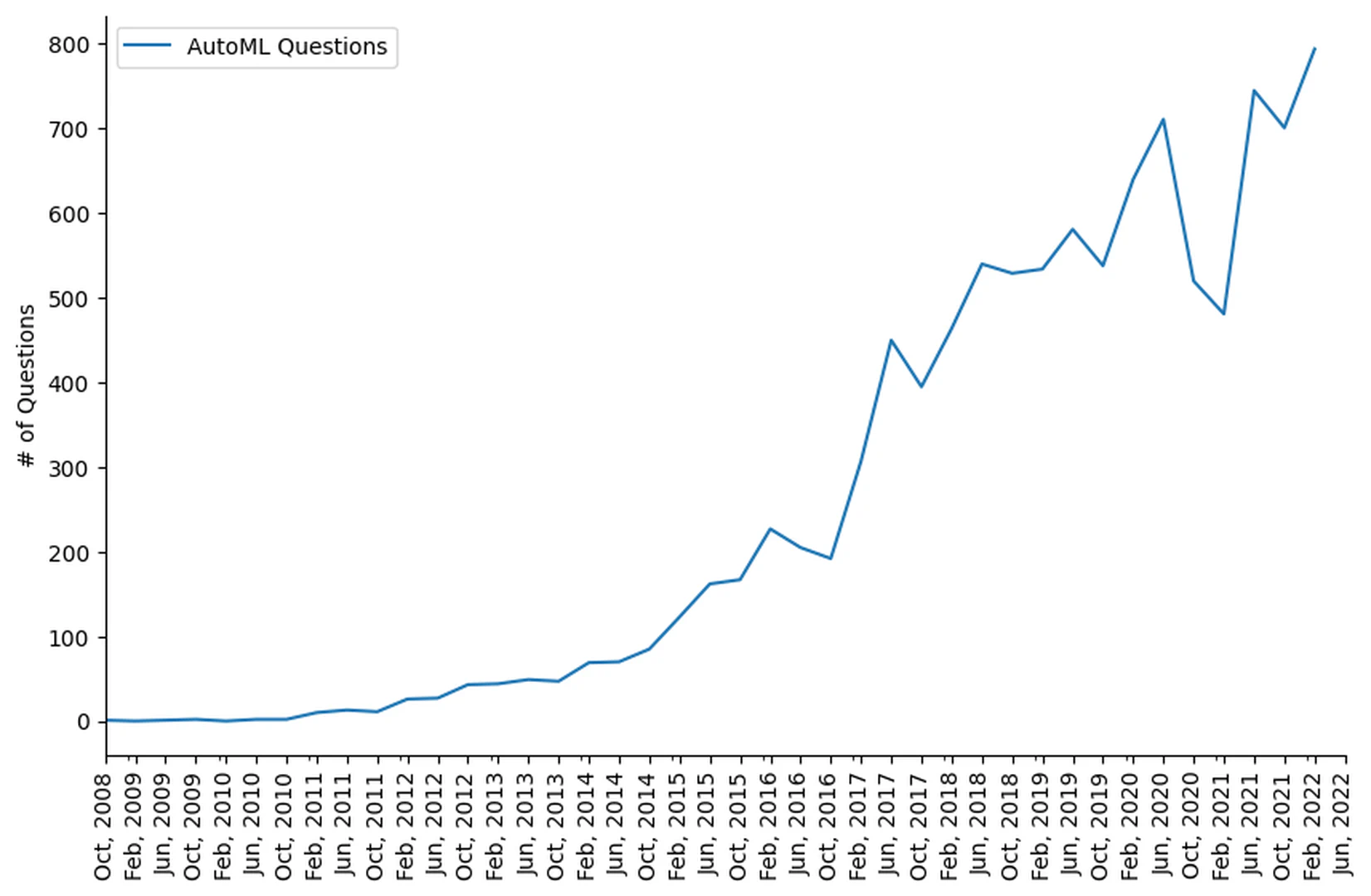

Figure fig-all-questions-evolution depicts the progression of overallAutoML-related discussion from our extracted dataset between 2008 to 2022. Additionally, it demonstrates that AutoML-related discussion is gaining popularity in mid-2016 (i.e., more than 200 questions per quarter). Figure fig-all-questions-evolution shows that during the first quarter of 2021, the total number of AutoML questions on Stack Overflow experiences a considerable decrease (i.e., about 25%), primarily due to the low number of queries in Splunk and Amazon Sagemaker during the pandemic. However, Amazon launches a suite of new AutoML services at the start of 2021, and the overall trend for AutoML-related discussion shows an upward trend. We provide a more detailed explanation for Figure fig-all-questions-evolution below.

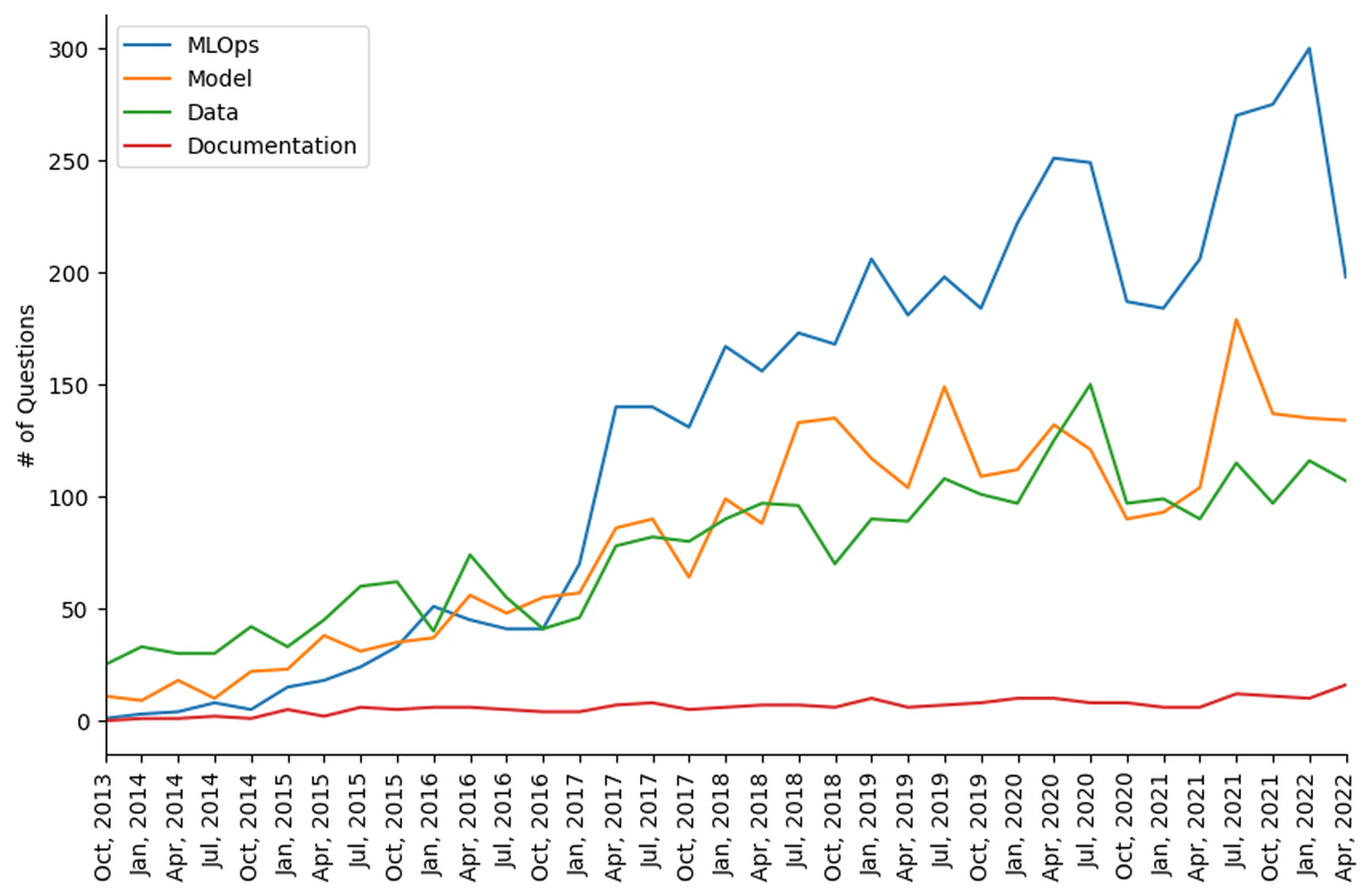

MLOps This is the largest AutoML topic category, with around 43% of AutoML-related questions and five AutoML topics. From Figure fig-topic-cat-evolution, we can see this topic category began to gain popularity from the start of 2017, i.e., after big tech companies started to offer AutoML cloud platform services (sub-sec-automl). From 2017 to 2022, AutoML library and cloud platform management-related topics remain the most popular in this category (e.g., issues with installing the library in different platforms 61883115). From the beginning of 2017 to mid-2018 AutoML cloud platform’s bot development-related queries were quite popular (e.g., “Filling data in response cards with Amazon Lex” in47970307). Around that time, Amazon Lex (2017) was released, a fully managed solution to design, build, test and deploy conversational AI solutions. From our analysis, we can see the rise of Pipeline Automation-related discussion from the beginning of 2019 (e.g., issues related to the deployment pipeline in 55353889). We also notice a significant rise in the number of questions related to model load and deployment from 2019 to 2022 (e.g., “Custom Container deployment in vertex ai” in 69316032).

Model This is the second largest AutoML topic category, with around 28% of AutoML-related questions and four AutoML topics. From Figure fig-topic-cat-evolution, we can see that, similar to MLOps; the Model topic category began to gain popularity from the start of 2017. The dominant topic in this category is the Model performance improvement-related queries using AutoML services (e.g., “Grid Search builds models with different parameters (h2o in R) yields identical performance - why?” in 47475848, “How to understand the metrics of H2OModelMetrics Object through h2o.performance” in 43699454). From our analysis, we see around a 200% increase in the number of questions in Model training and monitoring topic from 2021 to mid-2021. So practitioners ask queries regarding training issues (e.g., “Training Google-Cloud-Automl Model on multiple datasets” in 68764644, 56048974)from practitioners in recent times. Other topics related to model design, model implementation, and debugging evolved homogeneously around this time.

Data This is the third largest AutoML topic category, with around 27% of AutoML-related questions and three AutoML topics. The Data Management topic is the most dominant topic in this category. From our analysis, we can see around a 60% increase in the number of queries on cloud data management, especially after Splunk cloud becomes available on the google cloud platform. So, we can see developers’ queries related to data transfer (e.g., in 63151824), REST API (e.g., in 63152602), API rate limitation (e.g., in 63292162) becomes popular. Other topics from this category evolve homogeneously over time.

Documentation This is the smallest AutoML topic category with around 2% of AutoML-related questions and one AutoML topic. We find that Since 2015 questions from this category shifted slightly from API-specific details (e.g., 35562896) to documentation-related limitations for cloud deployment (e.g., 59328925), containerization (e.g., 68888281).

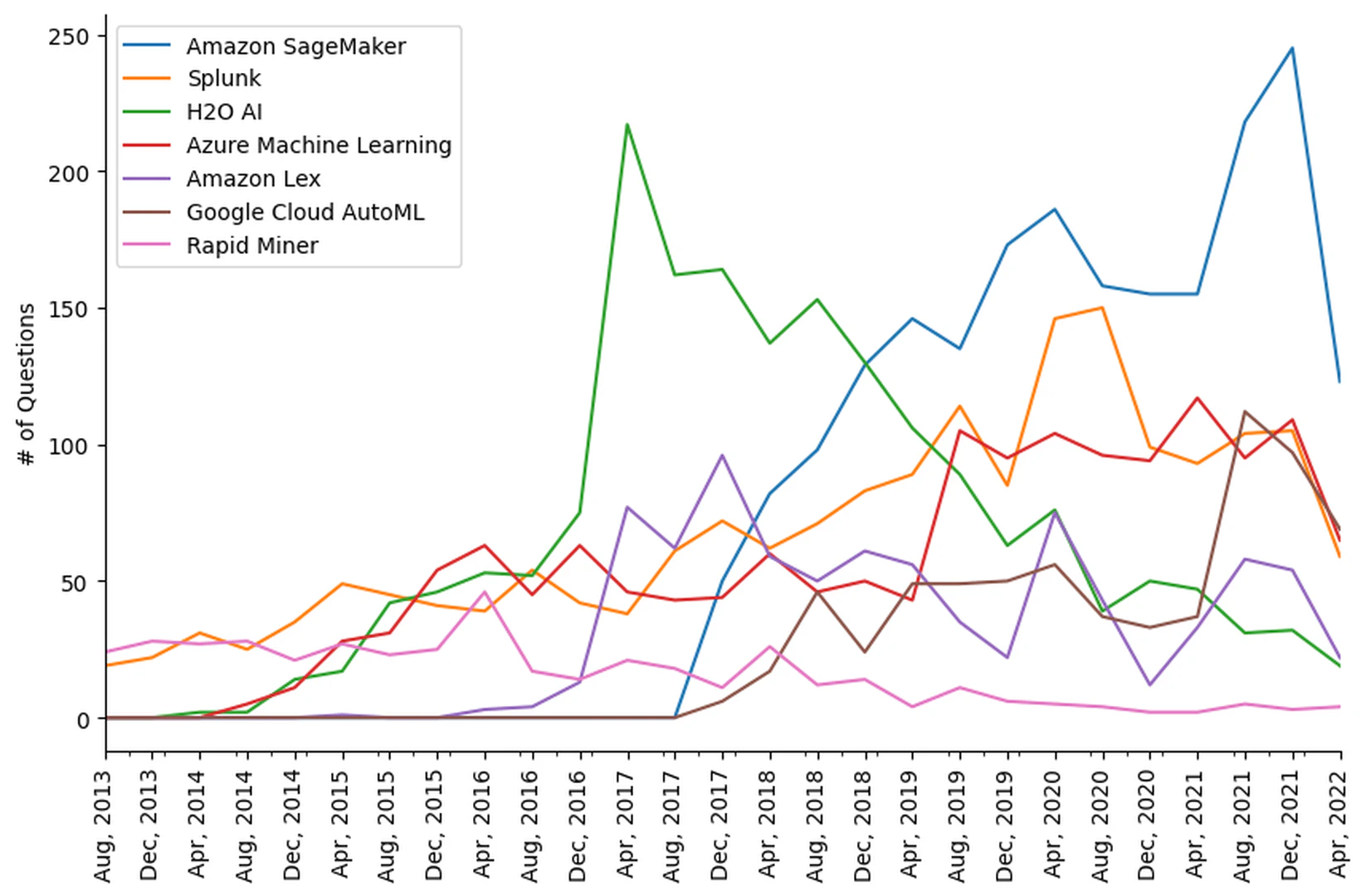

Top AutoML service providers

In Figure fig-top-automl-platforms, we present the evolution of the top seven AutoML solutions in terms of the number of SO questions over the past decade. In our dataset, Amazon Sagemaker, is the largest AutoML platform containing around 20% of all the SO questions followed by Splunk (19%), H2O AI (17%), Azure Machine Learning, Amazon Lex, Google Cloud AutoML, and Rapid Miner. Among these AutoML service providers, H2O AI AI began in mid-2017 with the release of their H2O.ai driverless AI cloud platform, but since then, it has been steadily losing practitioners’ engagement in SO. We also observe that from its release in 2017 AWS SageMaker cloud platform remains dominating, with a notable surge occurring in the middle of 2021. Other AutoML platforms likewise demonstrate an overall upward trend.

AutoML service providers’ support on SO discussion.

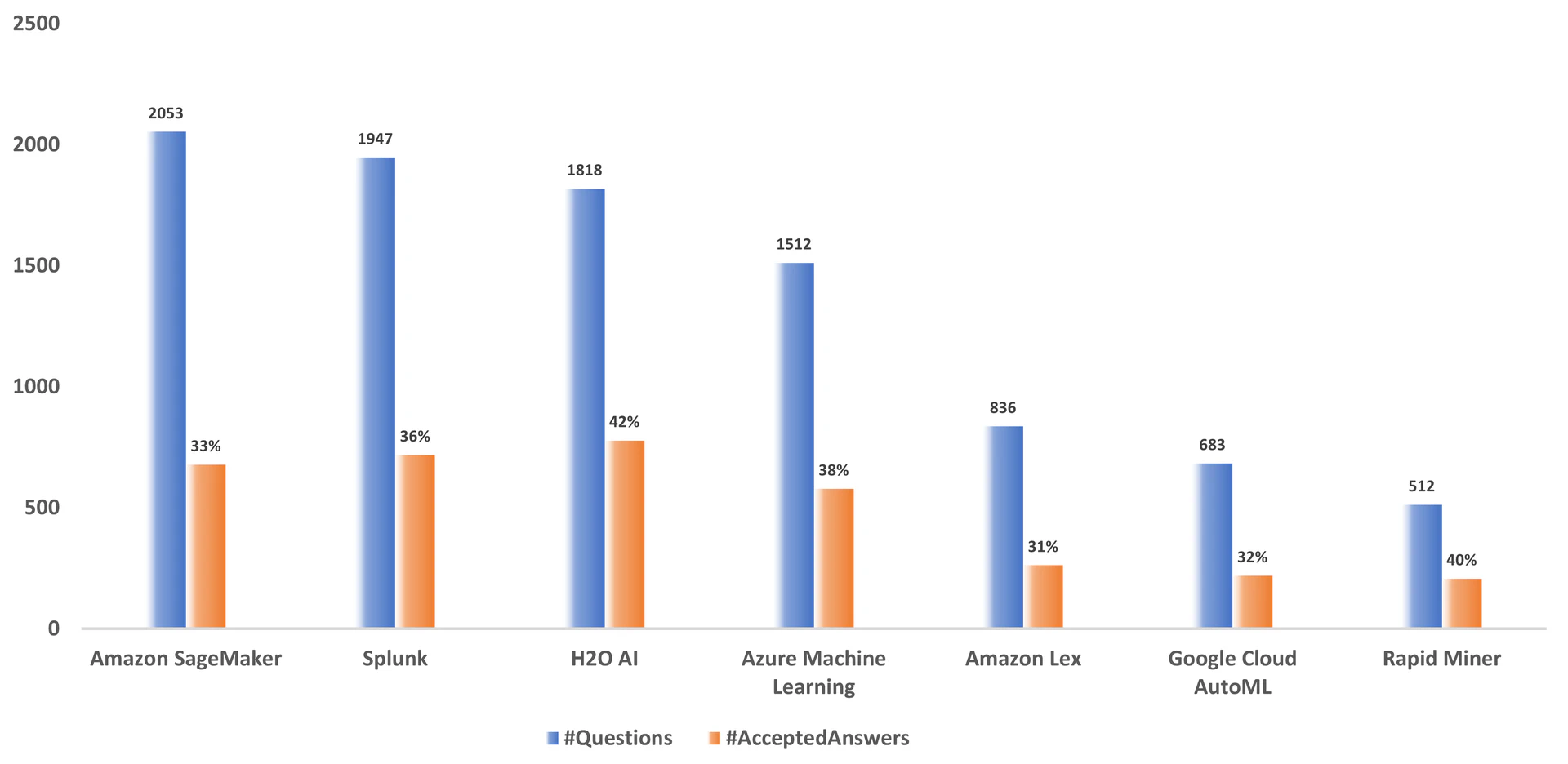

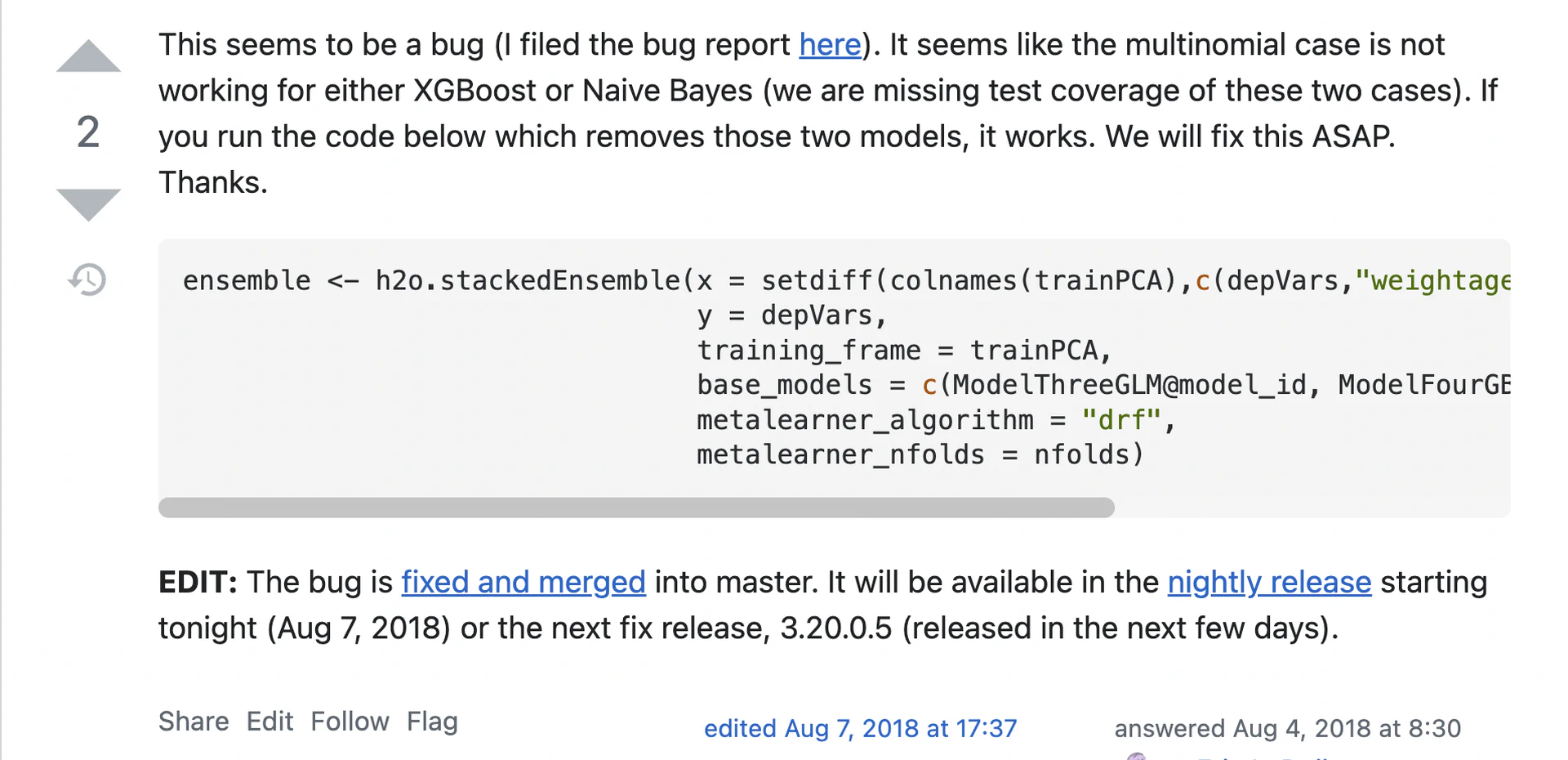

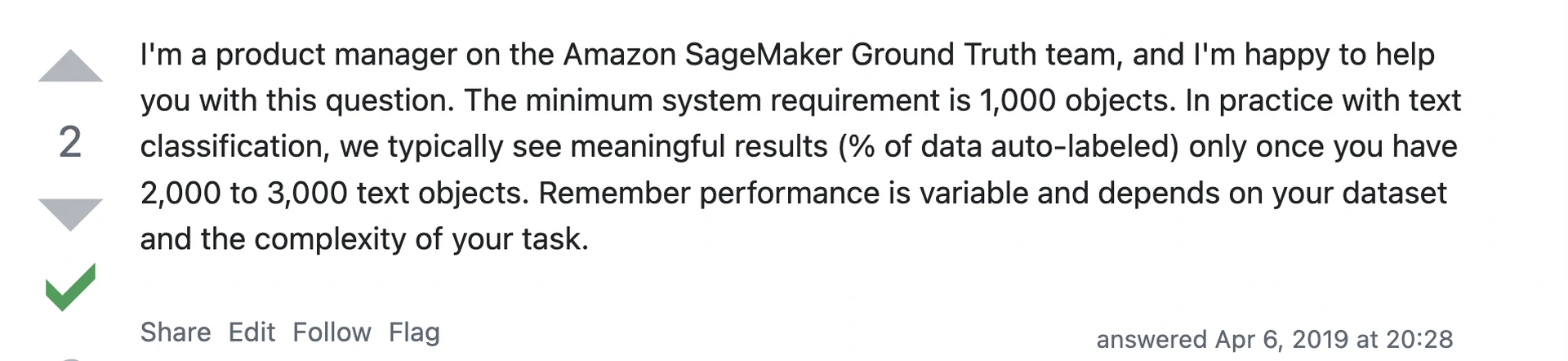

Our analysis shows that popular AutoML providers development actively follows SO discussion and provides solutions. For example, the H2O AutoML team officially provides support for practitioners’ queries in SO (e.g., 51527994), and thus they provide insights into our current limitations (e.g., 52486395) of the framework and plans for fixing the bugs in future releases (e.g., Fig. fig-h2o-support in 51683851). Similarly, we also find the development team from Amazon Sagemaker also actively participating in the SO discussion (Fig. fig-sagemaker-support in 55553190). In Figure fig-top-platform-acc-stat, we present a bar chart with the total number of questions vs. the percentage of questions with accepted answers for each of the top seven AutoML service providers. We can see that Amazon SageMaker has 2,053 questions, and only 33% of its questions have accepted answers. From Figure fig-top-platform-acc-stat, we see H2O AI has the highest percentage of questions with accepted answers (42%), followed by Rapid Miner (40%), Azure Machine Learning (38%), Splunk (36%), Amazon SageMaker (33%), Google Cloud AutoML (32%), Amazon Lex (31%).

Traditional ML vs AutoML challenges.

With with popularity of ML and its widespread adoption, there are several studies on the challenges of machine learning development, deep learning development, DL frameworks/libraries. However, none of this research addresses the challenges of the low-code machine learning approach, i.e., AutoML. In this section, we compare the conclusions of these past studies on the border ML domain with our findings regarding the AutoML challenges. As AutoML is a subset of the ML domain, we find that many issues are comparable while others are unique. This finding is intriguing in and of itself, given that AutoML was intended to mitigate some of the shortcomings of traditional ML development and make ML adoption more accessible. Our analysis shows that AutoML tools are successful in some circumstances but not all. For example, among AutoML practitioners, there is substantially less discussion about Model creation and fine-tuning than among all ML practitioners. However, deployment, library, and system configuration dominate both traditional ML and AutoML development. Future research from the SE domain can further ameliorate many of these challenges by providing a guideline on better documentation, API design, and MLOps. We present a detailed comparison of some of the most significant ML development challenges faced by traditional ML versus AutoML practitioners.

Requirement Analysis. This contains a discussion on formulating an ML solution for a specific problem/task. These questions are related to understanding available data and the support for existing ML frameworks/platforms. An empirical study on the machine learning challenges in SO discussion by Alshangiti et al.reports that ML developers have around 2.5% questions on these challenges in SO. Other studiesalso report similar challenges for ML developers. From our analysis (rq-result-mllc), we also find that AutoML practitioners have around 5.7% queries regarding the supported services from AutoML tools/platforms to meet their business requirements. Our analysis (Fig. fig-bubble-diff-pop-mllc) indicates that AutoML practitioners find these to be particularly hard(e.g., “Does AzureML RL support PyTorch?” in 64025308 or “Is there support for h2o4gpu for deeplearning in python?” in 67727907); therefore, AutoML providers should give more support in terms of available resources.

Data Processing & Management. This challenge relates to data loading, cleaning, splitting, formatting, labeling, handling missing values or imbalanced classes, etc. Previous studies report that ML developers find data pre-processing the second most challenging topicand even some popular ML libraries lack data cleaning features. Our analysis (rq-result-topics and rq-result-pop-diff, we find that this is quite a dominant topic for AutoML practitioners (i.e., 27% questions), but AutoML practitioners find these topics (Fig. fig-bubble-diff-pop-per-category) and MLLC phases (Fig. fig-bubble-diff-pop-mllc) less challenging. This finding suggests that AutoML service provide better abstraction and APIs for this data pre-processing and management pipeline.

Model Design. This contains some conceptual and implementation-related discussions on ML. This contains a discussion about ML algorithms/models, their internal implementation, parameters, etc. (e.g., Architecture of FCC, CNN, ResNet, etc., learning rate). Similar studies on ML challengesreport model creation-related questions as challenging. Questions regarding different ML algorithms are dominant in SO, and Neural network architecture is a popular topic among practitioners and gaining popularity. From our analysis, we find that this challenge is prevalent (4.2% questions) in AutoML practitioners (Fig. fig-taxonomy-tm) and moderately challenging (Table tab-topicdifficulty).

Training & Debugging. This contains a discussion related to the actual training of the model on a specific train dataset. This contains a discussion about use cases or troubleshooting for a specific library via documentation or prior experience. Similar studiesreport that coding error/exception topics– type mismatch, shape mismatch – are most dominant in SO, and resolving these issues requires more implementation knowledge than conceptual knowledge. Our analysis suggests that AutoML practitioners also find model training and debugging-related questions (i.e., around 10%) most popular (Fig. fig-bubble-diff-pop-topic). This suggests that both ML and AutoML practitioners require better support for bug detection.

Model Evaluation & Performance Improvement. This contains challenges related to evaluating the trained model via different metrics and improving the model’s performance via different techniques and multiple iterations. Similar studiesalso report that model-turning and prediction-related queries are less frequent in SO, even though significant ML research focuses on this step. More research is needed on practitioners’ challenges. We find that AutoML practitioners have many queries (i.e., around 10%) regarding improving the performance of the model with fewer resources (e.g., “H2O cluster uneven distribution of performance usage” in 46056853, 62408707). AutoML aims to abstract model architecture-related complexities, but better explainability of the model’s prediction may mitigate many of AutoML practitioners’ concerns (e.g., “RapidMiner: Explaining decision tree parameters” in 23362687).

Model deployment. This contains challenges while deploying the model, scaling up as necessary, and updating the model with a new dataset. Chen et al.report that ML developers face several challenges from Model export, environment configuration, model deployment via cloud APIs, and updating model. Guo et al.evaluated prediction accuracy and performance changes when DL models trained on PC platforms were deployed to mobile devices and browsers and found compatibility and dependability difficulties. Similar studieson developers’ discussion on SO on DL frameworks reported that developers find model deployment challenging. From our analysis, we also find that (Fig. fig-bubble-diff-pop-topic) AutoML practitioners find Model Load & Deployment (around 13% questions) to be one of the most difficult topics (e.g., “Azure ML Workbench Kubernetes Deployment Failed” in 46963846). This is particularly problematic as AutoML is expected to make model deployment and stability-related problems. Future research efforts from SE can help to mitigate these challenges.

Documentation. This challenge is related to incorrect and inadequate documentation, which is also quite prevalent in other software engineering tasks. Similar to empirical studieson the overall ML domain, we find that API misuse related to inadequate documentation or ML expertsis prevalent in all MLLC phases (e.g., In adequate documentation “API call for deploying and undeploying Google AutoML natural language model - documentation error?” in 67470364). This implies that researchers ML/AutoML should collaborate with the SE domain to improve these prevalent limitations.

Implications

In this Section, we summarize the findings can guide the following three stakeholders: [(1)]

-

AutoML Researchers & Educators to have a deeper understanding of the practical challenges/limitations of current AutoML tools and prioritize their future research effort,

-

AutoML platform/tool Providers to improve their services, learning resources, improve support for deployment, and maintenance,

-

AutoML Practitioners/Developers to gain a useful insights of current advantages and limitations to have a better understanding of the trade-offs between AutoML tools vs Tradition ML tools, We discuss the implications in details below.

AutoML Researchers & Educators. We find that the AutoML practitioners challenges are slightly different from traditional ML developers (sub-sec-autom-vs-traditionalml). Researchers can study how to improve the performance of AutoML core services, i.e., finding best ML algorithm configuration or Neural architecture search. AutoML tools/platforms aims to provide a reasonable good ML model at the cost of huge computational power (i.e., sometimes 100x) as it needs to explore a huge search space for potential solutions. Some recent search approach are showing promising results by reducing this cost by around ten fold. From our analysis (rq-diff-pop), we can see model performance improvement is a popular and difficult topic for AutoML practitioners (Table tab-topicpopularity). Future research on the explanability of the trained model can also provide more confidence to the practitioners.Researchers from SE can also help improve documentation, API design, and design intelligent tools to help AutoML practitioners to correctly tag SO questions so they reach the right experts.

AutoML Vendors. In this research, we present the AutoML practitioners discussed topics (Fig.fig-taxonomy-tm), popularity (Table tab-topicpopularity) and difficulty (Table tab-topicdifficulty) of these topics in details. From Figure fig-bubble-diff-pop-topic, we can see that Model load & deployment related topic is one of the most popular and difficult topic among the practitioners. We can also see that Model training & monitoring related question are the most challenging topic and model performance, library/platform management and data transformation related topics are most popular. AutoML vendors can prioritise their efforts based on the popularity and difficulty of these topics(Fig. fig-bubble-diff-pop-per-category). For example, expect for Model Load & Deployment (46 hours) and Model Training & Monitoring (32 hours) topics questions from other 11 topics have median average wait for for accepted answer is less than 20 hours. We also find that topics under data category are quite dominant and our analysis of AutoML tools and similar studies on other ML librariesreport the limitation of data processing APIs that require special attentions.

From Figure fig-bubble-diff-pop-mllc, We see that questions asked during model design and training related MLLC phase quite prevalent and popular among the AutoML community. We can also see that AutoML practitioners find requirement analysis phase (e.g., “How can I get elasticsearch data in h2o?” in 48397934) and Model deployment & monitoring phase quite challenging (e.g., “How to deploy machine learning model saved as pickle file on AWS SageMaker” in 68214823). We can also see popular development teams from popular AutoML providers such as H2O and Microsoft (Fig. fig-h2o-support, Fig. fig-sagemaker-support in sub-sec-top-automl) actively follow practitioners discussion on SO and take necessary actions or provide solutions. Other AutoML tool/platforms providers should also provide support to answer their platform feature specific queries. It shows that the smooth adoption of the AutoML tools/platforms depends on the improved and effective documentation, effective community support and tutorials.

AutoML Practitioners/Developers. The high demand and shortage of ML experts is making low-code, i.e., AutoML approach for ML development attractive for organizations. We can also see the the AutoML related discussion in SO a steady upward tread (Fig. fig-all-questions-evolution). AutoML tools/platforms provides a great support for different ML aspects such as data pre-processing and management but still lacks support for various tasks specially tasks related to model deployment and monitoring. Our analysis can provide project managers a comprehensive overview of current status of overall AutoML tools (sub-sec-top-automl and sub-sec-autom-vs-traditionalml). Our analysis provides invaluable insight on the current strength/limitations to project managers to adopt AutoML approach and better manage their resources. For example, even with AutoML approach setting up and configuring development environment and library management (e.g., “Need to install python packages in Azure ML studio” in 52925424), deployment trained models via cloud endpoint and updating model is still challenging. Our analysis also shows that average median wait time to get an accepted solution from SO is around 12 hours for data management and model implementation/debugging related tasks as development teams from some of these platforms also provide active support.

Threats to Validity

Internal validity threats in our study relate to the authors’ bias while analyzing the questions. We mitigate the bias in our manual labeling of topics andAutoML phases by following the annotation guideline. The first author participated in the labeling process, and in case of confusion, the first author consulted with the second author.

Construct Validity threats relate to the errors that may occur in our data collection process (e.g., identifying relevant AutoML tags). To mitigate this, first we created our initial list of tags, as stated in Section sec-methodology, by analyzing the posts in SO related to the leading AutoML platforms. Then we expanded our tag list using state-of-art approach. Another potential threat is the topic modeling technique, where we choose $K$ = 15 as the optimal number of topics for our dataset $B$ . This optimal number of topics directly impacts the output of LDA. We experimented with different values of $K$ following related works. We used the coherence score and our manual observation to find $K$ ’s optimal value that gives us the most relevant and generalized AutoML-related topics.

External Validity threats relate to the generalizability of our research findings. This study is based on data from developers’ discussions on SO. However, there are other forums that AutoML developers may use to discuss. We only considered questions and accepted answers in our topic modeling following other related works. The accepted and best answer (most scored) in SO may be different. The questioner approves the accepted answer, while all the viewers vote for the best answer. It is not easy to detect whether an answer is relevant to the question. Thus We chose the accepted answer in this study because we believe that the questioner is the best judge of whether the answer solves the problem. Even without the unaccepted answers, our dataset contains around 14.3K SO posts (12.5K questions + 3.7K accepted answers). Nevertheless, we believe using SO’s data provides us with generalizability because SO is a widely used Q&A platform for developers from diverse backgrounds. However, we also believe this study can be complemented by including discussions from other forums and surveying and interviewing AutoML practitioners.

Related Work

Research on AutoML

There are ongoing efforts to increase the performance of AutoML tools through better NAS approach, search for hyperparameters and loss methods, automating machine learning via keeping human in the loop. Truong et al.evaluated few popular AutoML tools on their abilities to automate ML pipeline. They report that at present different tools have different approaches for choosing the best model and optimizing hyperparameters. They report that the performance of these tools are reasonable against custom dataset but they still need improvement in terms of computational power, speed, flexibility etc. Karmaker et al.provides an overview of AutoML end-to-end pipeline. They highlight which ML pipeline requires future effort for the adoption of AutoML approach. There are other case studies on the use of AutoML technologies for autonomous vehicles, industry applicationanticipate bank collapses, predicting bank failureswhere the authors evaluate current strengths and limitations and make suggestions for future improvements. These studies focus on improving the AutoML paradigm from a theoretical perspective, and they do not address the challenges that AutoML practitioners have expressed in public forums such as SO.

Empirical Study on Challenges of ML Applications.

There has been quite some research effortsmostly by interviewing ML teams or surveys on the challenges of integrating ML in software engineering,explanability on the ML models. Bahrampour et al.conducted a comparative case of study on five popular deep learning frameworks – Caffe, Neon, TensorFlow, Theano, and Porch – on three different aspects such as their usability, execution speed and hardware utilization.

There are also quite some efforts on finding the challenges of Machine learning application by analysing developers discussion on stack overflow.

There are a few studies analyzing developer discussion on Stack Overflow regarding popular ML libraries. Islam et al.conduct a detailed examination of around 3.2K SO posts related to ten ML libraries and report the urgent need of SE research in this area. They report ML developers need support on finding errors earlier via static analyser, better support on debugging, better API design, better understanding of ML pipeline. Han et al.an empirical study on developers’ discussion three deep learning frameworks – Tensorflow, Pytorch, and Theano – on stack overflow and GitHub. They compare and report their challenges on these frameworks and provide useful insights to improve them. Zhang et al.analysed SO and GitHub projects on deep learning applications using TensorFlow and reported the characteristics and root causes of defects. Cummaudo et al.use Stack Overflow to mine developer dissatisfaction with computer vision services, classifying their inquiries against two taxonomies (i.e., documentation-related and general questions). They report that developers have a limited understanding of such systems’ underlying technology. Chen et al.conducts a comprehensive study on the challenges of deploying DL software by mining around 3K SO posts and report that DL deployment is more challenging than other topics in SE such as big data analysis and concurrency. They report a taxonomy of 72 challenges faced by the developers. These studies do not focus on the challenges of overall machine learning domain but rather on popular ML libraries, ML deployment, or a specific cloud-based ML service. Our study focuses entirely on overall AutoML related discussion on SO; hence, our analysed data, i.e., SO posts, are different.

There are also several empirical research on the challenges of machine learning, particularly deep learning, in the developers’ discussion on SO. Bangash et al.analyzes around 28K developers’ posts on SO and share their challenges. They report practitioners’ lack of basic understanding on Machine learning and not enough community feedback. Humbatova et al.manually analysed artefacts from GitHub commits and related SO discussion and report a report a variety of faults of while using DL frameworks and later validate their findings by surveying developers. Alshangiti et al.conduct a study on ML-related questions on SO and report developers’ challenges and report that ML related questions are more difficult than other domains and developers find data pre-processing, model deployment and environment setup related tasks most difficult. They also report although neural networks, and deep learning related frameworks are becoming popular, there is a shortage of experts in SO community. In contrast, our study focuses on AutoML tools/platforms rather than boarder machine learning domain in general. In this study, we aim to analyse the whole AutoML domains, i.e., AutoML-related discussion on SO rather than a few specific AutoML platforms. Our analyzed SO posts and SO users differ from theirs, and our findings provide insight focus on AutoML’s challenges.

Research on Topic Modeling & SO discussion

Our reason for employing topic modeling to understand LCSD discussions is rooted in current software engineering study demonstrates that concepts derived from The textual content can be a reasonable approximation of the underlying data. Topic modelling in SO dataset are used in a wide range of studies to understand software logging messagesand previously for diverse other tasks, such as concept and locating features, linking traceability (e.g., bug), to understand the evolution of software and source code history, to facilitate categorizing software code search, to refactor software code base, and for explaining software defects, and various software maintenance. The SO posts are used in several studies on various aspects of software development using topic modeling, such as what developers are discussing in generalor about a particular aspect, e.g., low-code software developers challenges, IoT developers discussion, docker development challenges, concurrency, big data, chatbot, machine learning challenges, challenges on deep learning libraries.

Conclusions

AutoML is a novel low-code approach for developing ML applications with minimal coding by utilizing higher-level end-to-end APIs. It automates various tasks in the ML pipeline, such as data prepossessing, model selection, hyperparameter tuning, etc. We present an empirical study that provides valuable insights into the types of discussions AutoML developers discuss in Stack Overflow (SO). We find 13 AutoML topics from our dataset of 14.3K extracted SO posts (question + acc. answers). We extracted these posts based on 41 SO tags belonging to the popular 18AutoML services. We categorize them into four high-level categories, namely the MLOps category (5 topics, 43.2% questions) with the highest number of SO questions, followed by Model (4 topics, 27.6% questions), Data (3 topics, 27% questions), Documentation (1 topic, 2.2% questions). Despite extensive support for data management, model design & deployment, we find that still, these topics are dominant in AutoML practitioners’ discussions across different MLLC phases. We find that many novice practitioners have platform feature-related queries without accepted answers. Our analysis suggests that better tutorial-based documentation can help mitigate most of these common issues. MLOps and Documentation topic categories predominate in cloud-based AutoML services. In contrast, the Model topic category and Model Evaluation phase are more predominant in non-cloud-based AutoML services. We hope these findings will help various AutoML stakeholders (e.g., AutoML/SE researchers, AutoML vendors, and practitioners) to take appropriate actions to mitigate these challenges. The research and developers’ popularity on AutoML indicates that this technology is likely widely adopted by various businesses for consumer-facing applications or business operational insight from their dataset. AutoML researchers and service providers should address the prevailing developers’ challenges for its fast adoption. Our future work will focus on [(1)]

-

getting AutoML developers’ feedback on our findings by interviews or surveys, and

-

developing tools to address the issues observed in the existing AutoML’s data processing and model designing pipeline.

Bibliography

1@inproceedings{abdellatif2020challenges,

2 series = {MSR '20},

3 location = {Seoul, Republic of Korea},

4 numpages = {12},

5 pages = {174–185},

6 booktitle = {Proceedings of the 17th International Conference on Mining

7Software Repositories},

8 doi = {10.1145/3379597.3387472},

9 url = {https://doi.org/10.1145/3379597.3387472},

10 address = {New York, NY, USA},

11 publisher = {Association for Computing Machinery},

12 isbn = {9781450375177},

13 year = {2020},

14 title = {Challenges in Chatbot Development: A Study of Stack

15Overflow Posts},

16 author = {Abdellatif, Ahmad and Costa, Diego and Badran, Khaled and

17Abdalkareem, Rabe and Shihab, Emad},

18}

19

20@inproceedings{adrian2020app,

21 organization = {Springer},

22 year = {2020},

23 pages = {45--51},

24 booktitle = {International Conference on Applied Human Factors and

25Ergonomics},

26 author = {Adrian, Benjamin and Hinrichsen, Sven and Nikolenko,

27Alexander},

28 title = {App Development via Low-Code Programming as Part of Modern

29Industrial Engineering Education},

30}

31

32@inproceedings{aghajani2020software,

33 organization = {IEEE},

34 year = {2020},

35 pages = {590--601},

36 booktitle = {2020 IEEE/ACM 42nd International Conference on Software

37Engineering (ICSE)},

38 author = {Aghajani, Emad and Nagy, Csaba and Linares-V{\'a}squez,

39Mario and Moreno, Laura and Bavota, Gabriele and Lanza,

40Michele and Shepherd, David C},

41 title = {Software documentation: the practitioners' perspective},

42}

43

44@article{agrapetidou2021automl,

45 publisher = {Taylor \& Francis},

46 year = {2021},

47 pages = {5--9},

48 number = {1},

49 volume = {28},

50 journal = {Applied Economics Letters},

51 author = {Agrapetidou, Anna and Charonyktakis, Paulos and Gogas,

52Periklis and Papadimitriou, Theophilos and Tsamardinos,

53Ioannis},

54 title = {An AutoML application to forecasting bank failures},

55}

56

57@article{agrawal2018wrong,

58 publisher = {Elsevier},

59 year = {2018},

60 pages = {74--88},

61 volume = {98},

62 journal = {Information and Software Technology},

63 author = {Agrawal, Amritanshu and Fu, Wei and Menzies, Tim},

64 title = {What is wrong with topic modeling? And how to fix it using

65search-based software engineering},

66}

67

68@inproceedings{ahmed2018concurrency,

69 series = {ESEM '18},

70 location = {Oulu, Finland},

71 keywords = {concurrency topics, concurrency topic difficulty,

72concurrency topic popularity, concurrency topic hierarchy,

73stack overflow},

74 numpages = {10},

75 articleno = {30},

76 booktitle = {Proceedings of the 12th ACM/IEEE International Symposium

77on Empirical Software Engineering and Measurement},

78 doi = {10.1145/3239235.3239524},

79 url = {https://doi.org/10.1145/3239235.3239524},

80 address = {New York, NY, USA},

81 publisher = {Association for Computing Machinery},

82 isbn = {9781450358231},

83 year = {2018},

84 title = {What Do Concurrency Developers Ask about? A Large-Scale

85Study Using Stack Overflow},

86 author = {Ahmed, Syed and Bagherzadeh, Mehdi},

87}

88

89@article{akiki2020eud,

90 publisher = {Elsevier},

91 year = {2020},

92 pages = {102534},

93 volume = {200},

94 journal = {Science of Computer Programming},

95 author = {Akiki, Pierre A and Akiki, Paul A and Bandara, Arosha K

96and Yu, Yijun},

97 title = {EUD-MARS: End-user development of model-driven adaptive

98robotics software systems},

99}

100

101@inproceedings{al2021quality,

102 organization = {IEEE},

103 year = {2021},

104 pages = {1--5},

105 booktitle = {2021 IEEE International Conference on Autonomous Systems

106(ICAS)},

107 author = {Al Alamin, Md Abdullah and Uddin, Gias},

108 title = {Quality assurance challenges for machine learning software

109applications during software development life cycle

110phases},

111}

112

113@inproceedings{alamin2021empirical,

114 pages = {46-57},

115 year = {2021},

116 organization = {IEEE},

117 booktitle = {2021 IEEE/ACM 18th International Conference on Mining

118Software Repositories (MSR)},

119 author = {Alamin, Md Abdullah Al and Malakar, Sanjay and Uddin, Gias

120and Afroz, Sadia and Haider, Tameem Bin and Iqbal,

121Anindya},

122 title = {An Empirical Study of Developer Discussions on Low-Code

123Software Development Challenges},

124}

125

126@article{alonso2020towards,

127 year = {2020},

128 journal = {arXiv preprint arXiv:2004.13495},

129 author = {Alonso, Ana Nunes and Abreu, Jo{\~a}o and Nunes, David and

130Vieira, Andr{\'e} and Santos, Luiz and Soares, T{\'e}rcio

131and Pereira, Jos{\'e}},

132 title = {Towards a polyglot data access layer for a low-code

133application development platform},

134}

135

136@article{alsaadi2021factors,

137 year = {2021},

138 pages = {123--140},

139 number = {3},

140 volume = {31},

141 journal = {Romanian Journal of Information Technology and Automatic

142Control},

143 author = {ALSAADI, Hana A and RADAIN, Dhefaf T and ALZAHRANI,

144Maysoon M and ALSHAMMARI, Wahj F and ALAHMADI, Dimah and

145FAKIEH, Bahjat},

146 title = {Factors that affect the utilization of low-code

147development platforms: survey study},

148}

149

150@inproceedings{alshangiti2019developing,

151 organization = {IEEE},

152 year = {2019},

153 pages = {1--11},

154 booktitle = {2019 ACM/IEEE International Symposium on Empirical

155Software Engineering and Measurement (ESEM)},

156 author = {Alshangiti, Moayad and Sapkota, Hitesh and Murukannaiah,

157Pradeep K and Liu, Xumin and Yu, Qi},

158 title = {Why is developing machine learning applications

159challenging? a study on stack overflow posts},

160}

161

162@inproceedings{amershi2019software,

163 organization = {IEEE},

164 year = {2019},

165 pages = {291--300},

166 booktitle = {2019 IEEE/ACM 41st International Conference on Software

167Engineering: Software Engineering in Practice (ICSE-SEIP)},

168 author = {Amershi, Saleema and Begel, Andrew and Bird, Christian and

169DeLine, Robert and Gall, Harald and Kamar, Ece and

170Nagappan, Nachiappan and Nushi, Besmira and Zimmermann,

171Thomas},

172 title = {Software engineering for machine learning: A case study},

173}

174

175@inproceedings{amershi_se_case_study_2019,

176 organization = {IEEE},

177 year = {2019},

178 pages = {291--300},

179 booktitle = {proc ICSE-SEIP},

180 author = {Amershi et al.},

181 title = {Software engineering for machine learning: A case study},

182}

183

184@misc{amplifystudio,

185 note = {[Online; accessed 5-November-2022]},

186 howpublished = {{Available: \url{https://aws.amazon.com/amplify/studio/}}},

187 title = {{AWS Amplify Studio overview}},

188 key = {amplifystudio},

189}

190

191@inproceedings{androutsopoulos2014analysis,

192 year = {2014},

193 pages = {573--583},

194 booktitle = {Proceedings of the 36th international conference on

195software engineering},

196 author = {Androutsopoulos et al.},

197 title = {An analysis of the relationship between conditional

198entropy and failed error propagation in software testing},

199}

200

201@article{annas2003hipaa,

202 publisher = {MEDICAL PUBLISHING GROUP-MASS MEDIC SOCIETY},

203 year = {2003},

204 pages = {1486--1490},

205 number = {15},

206 volume = {348},

207 journal = {New England Journal of Medicine},

208 author = {Annas et al.},

209 title = {HIPAA regulations-a new era of medical-record privacy?},

210}

211

212@misc{appengine,

213 note = {[Online; accessed 13-December-2021]},

214 howpublished = {{Available:

215\url{https://cloud.google.com/appengine/docs}}},

216 title = {{App Engine: a fully managed, serverless platform for

217developing and hosting web applications at scale.}},

218 key = {appengine},

219}

220

221@misc{appian,

222 note = {[Online; accessed 5-January-2021]},

223 howpublished = {{Available: \url{https://www.appian.com/}}},

224 title = {{Appian platform overview}},

225 key = {appian},

226}

227

228@inproceedings{arun2010finding,

229 organization = {Springer},

230 year = {2010},

231 pages = {391--402},

232 booktitle = {Pacific-Asia conference on knowledge discovery and data

233mining},

234 author = {Arun, Rajkumar and Suresh, Venkatasubramaniyan and

235Madhavan, CE Veni and Murthy, MN Narasimha},

236 title = {On finding the natural number of topics with latent

237dirichlet allocation: Some observations},

238}

239

240@inproceedings{asaduzzaman2013answering,

241 organization = {IEEE},

242 year = {2013},

243 pages = {97--100},

244 booktitle = {2013 10th Working Conference on Mining Software

245Repositories (MSR)},

246 author = {Asaduzzaman, Muhammad and Mashiyat, Ahmed Shah and Roy,

247Chanchal K and Schneider, Kevin A},

248 title = {Answering questions about unanswered questions of stack

249overflow},

250}

251

252@inproceedings{asuncion2010software,

253 organization = {IEEE},

254 year = {2010},

255 pages = {95--104},

256 volume = {1},

257 booktitle = {2010 ACM/IEEE 32nd International Conference on Software

258Engineering},

259 author = {Asuncion, Hazeline U and Asuncion, Arthur U and Taylor,

260Richard N},

261 title = {Software traceability with topic modeling},

262}

263

264@article{atkinson2003model,

265 publisher = {IEEE},

266 year = {2003},

267 pages = {36--41},

268 number = {5},

269 volume = {20},

270 journal = {IEEE software},

271 author = {Atkinson, Colin and Kuhne, Thomas},

272 title = {Model-driven development: a metamodeling foundation},

273}

274

275@inproceedings{attenberg2011beat,

276 year = {2011},

277 booktitle = {Workshops at the Twenty-Fifth AAAI Conference on

278Artificial Intelligence},

279 author = {Attenberg et al.},

280 title = {Beat the machine: Challenging workers to find the unknown

281unknowns},

282}

283

284@misc{auto_ml,

285 note = {[Online; accessed 5-November-2022]},

286 howpublished = {{Available: \url{https://github.com/ClimbsRocks/auto_ml}}},

287 title = {{Automated machine learning for analytics \& production}},

288 year = {2022},

289}

290

291@misc{autofolio,

292 note = {[Online; accessed 5-November-2022]},

293 howpublished = {{Available: \url{https://github.com/automl/AutoFolio}}},

294 title = {{AutoFolio Automated Algorithm Selection with

295Hyperparameter Optimization Library}},

296 year = {2022},

297}

298

299@misc{automl_nas,

300 note = {[Online; accessed 5-November-2022]},

301 howpublished = {{Available: \url{https://www.altexsoft.com/blog/automl/}}},

302 year = {2022},

303 title = {{AutoML: How to Automate Machine Learning With Google

304Vertex AI, Amazon SageMaker, H20.ai, and Other Providers}},

305}

306

307@misc{automl_pipeline_overview,

308 note = {[Online; accessed 5-November-2022]},

309 howpublished = {{Available:

310\url{https://gomerudo.github.io/auto-ml/nutshell.html}}},

311 title = {{AutoML in a nutshell}},

312 year = {2022},

313}

314

315@misc{automlcomputation,

316 note = {[Online; accessed 5-January-2021]},

317 howpublished = {{Available:

318\url{https://www.fast.ai/2018/07/23/auto-ml-3/}}},

319 title = {{Google's AutoML: Cutting Through the Hype }},

320 year = {2022},

321}

322

323@misc{aws_lex,

324 note = {[Online; accessed 5-November-2022]},

325 howpublished = {{Available: \url{https://aws.amazon.com/lex/}}},

326 title = {{Amazon Lex - Conversational AI and Chatbots}},

327 year = {2022},

328}

329

330@misc{aws_news,

331 note = {[Online; accessed 5-November-2022]},

332 howpublished = {{Available:

333\url{https://www.businesswire.com/news/home/20201208005335/en/AWS-Announces-Nine-New-Amazon-SageMaker-Capabilities}}},

334 title = {{AWS Announces Nine New Amazon SageMaker Capabilities}},

335 year = {2022},

336}

337

338@misc{aws_sagemaker,

339 note = {[Online; accessed 5-November-2022]},

340 howpublished = {{Available: \url{https://aws.amazon.com/sagemaker/}}},

341 title = {{Amazon SageMaker Overview}},

342 year = {2022},

343}

344

345@misc{azureml,

346 note = {[Online; accessed 5-November-2022]},

347 howpublished = {{Available:

348\url{https://azure.microsoft.com/en-us/services/machine-learning/}}},

349 title = {{Azure Machine Learning - ML as a Service}},

350 year = {2022},

351}

352

353@inproceedings{bagherzadeh2019going,

354 keywords = {Big data topic difficulty, Big data topic hierarchy, Big

355data topic popularity, Big data topics, Stackoverflow},

356 address = {New York, NY, USA},

357 publisher = {ACM},

358 pages = {432--442},

359 location = {Tallinn, Estonia},

360 year = {2019},

361 series = {ESEC/FSE 2019},

362 booktitle = {Proceedings of the 2019 27th ACM Joint Meeting on European

363Software Engineering Conference and Symposium on the

364Foundations of Software Engineering},

365 title = {Going Big: A Large-scale Study on What Big Data Developers

366Ask},

367 author = {Bagherzadeh, Mehdi and Khatchadourian, Raffi},

368}

369

370@article{bahrampour2015comparative,

371 year = {2015},

372 journal = {arXiv preprint arXiv:1511.06435},

373 author = {Bahrampour, Soheil and Ramakrishnan, Naveen and Schott,

374Lukas and Shah, Mohak},

375 title = {Comparative study of deep learning software frameworks},

376}

377

378@inproceedings{bajaj2014mining,

379 year = {2014},

380 pages = {112--121},

381 booktitle = {Proceedings of the 11th Working Conference on Mining

382Software Repositories},

383 author = {Bajaj, Kartik and Pattabiraman, Karthik and Mesbah, Ali},

384 title = {Mining questions asked by web developers},

385}

386

387@inproceedings{baltadzhieva2015predicting,

388 year = {2015},

389 pages = {32--40},

390 booktitle = {Proceedings of the international conference recent

391advances in natural language processing},

392 author = {Baltadzhieva, Antoaneta and Chrupa{\l}a, Grzegorz},

393 title = {Predicting the quality of questions on stackoverflow},

394}

395

396@inproceedings{bandeira2019we,

397 organization = {IEEE},

398 year = {2019},

399 pages = {255--259},

400 booktitle = {2019 IEEE/ACM 16th International Conference on Mining

401Software Repositories (MSR)},

402 author = {Bandeira, Alan and Medeiros, Carlos Alberto and Paixao,

403Matheus and Maia, Paulo Henrique},

404 title = {We need to talk about microservices: an analysis from the

405discussions on StackOverflow},

406}

407

408@inproceedings{bangash2019developers,

409 organization = {IEEE},

410 year = {2019},

411 pages = {260--264},

412 booktitle = {2019 IEEE/ACM 16th International Conference on Mining

413Software Repositories (MSR)},

414 author = {Bangash, Abdul Ali and Sahar, Hareem and Chowdhury,

415Shaiful and Wong, Alexander William and Hindle, Abram and

416Ali, Karim},

417 title = {What do developers know about machine learning: a study of

418ml discussions on stackoverflow},

419}

420

421@article{barr2014oracle,

422 publisher = {IEEE},

423 year = {2014},

424 pages = {507--525},

425 number = {5},

426 volume = {41},

427 journal = {IEEE transactions on software engineering},

428 author = {Barr et al.},

429 title = {The oracle problem in software testing: A survey},

430}

431

432@article{barua2014developers,

433 publisher = {Springer},

434 year = {2014},

435 pages = {619--654},

436 number = {3},

437 volume = {19},

438 journal = {Empirical Software Engineering},

439 author = {Barua, Anton and Thomas, Stephen W and Hassan, Ahmed E},

440 title = {What are developers talking about? an analysis of topics

441and trends in stack overflow},

442}

443

444@inproceedings{basciani2014mdeforge,

445 organization = {CEUR-WS},

446 year = {2014},

447 pages = {66--75},

448 volume = {1242},

449 booktitle = {2nd International Workshop on Model-Driven Engineering on

450and for the Cloud, CloudMDE 2014, Co-located with the 17th

451International Conference on Model Driven Engineering

452Languages and Systems, MoDELS 2014},

453 author = {Basciani, Francesco and Iovino, Ludovico and Pierantonio,

454Alfonso and others},

455 title = {MDEForge: an extensible web-based modeling platform},

456}

457

458@article{basil1975iterative,

459 publisher = {IEEE},

460 year = {1975},

461 pages = {390--396},

462 number = {4},

463 journal = {IEEE Transactions on Software Engineering},

464 author = {Basil, Victor R and Turner, Albert J},

465 title = {Iterative enhancement: A practical technique for software

466development},

467}

468

469@article{bassil2012simulation,

470 year = {2012},

471 journal = {arXiv preprint arXiv:1205.6904},

472 author = {Bassil, Youssef},

473 title = {A simulation model for the waterfall software development

474life cycle},

475}

476

477@article{bavota-refactoringtopic-tse2014,

478 year = {2014},

479 volume = {40},

480 title = {Methodbook: Recommending Move Method Refactorings via

481Relational Topic Models},

482 pages = {671-694},

483 number = {7},

484 journal = {IEEE Transactions on Software Engineering},

485 author = {Gabriele Bavota and Rocco Oliveto and Malcom Gethers and

486Denys Poshyvanyk and Andrea De Lucia},

487}

488

489@inproceedings{bayer2006view,

490 organization = {IEEE},

491 year = {2006},

492 pages = {10--pp},

493 booktitle = {13th Annual IEEE International Symposium and Workshop on

494Engineering of Computer-Based Systems (ECBS'06)},

495 author = {Bayer, Joachim and Muthig, Dirk},

496 title = {A view-based approach for improving software documentation

497practices},

498}

499

500@article{beck2001manifesto,

501 year = {2001},

502 author = {Beck, Kent and Beedle, Mike and Van Bennekum, Arie and

503Cockburn, Alistair and Cunningham, Ward and Fowler, Martin

504and Grenning, James and Highsmith, Jim and Hunt, Andrew and

505Jeffries, Ron and others},

506 title = {Manifesto for agile software development},

507}

508

509@inproceedings{beranic2020adoption,

510 organization = {Faculty of Organization and Informatics Varazdin},

511 year = {2020},

512 pages = {97--103},

513 booktitle = {Central European Conference on Information and Intelligent

514Systems},

515 author = {Beranic, Tina and Rek, Patrik and Heri{\v{c}}ko, Marjan},

516 title = {Adoption and Usability of Low-Code/No-Code Development

517Tools},

518}

519

520@article{beynon1999rapid,

521 publisher = {Taylor \& Francis},

522 year = {1999},

523 pages = {211--223},

524 number = {3},

525 volume = {8},

526 journal = {European Journal of Information Systems},

527 author = {Beynon-Davies, Paul and Carne, Chris and Mackay, Hugh and

528Tudhope, Douglas},

529 title = {Rapid application development (RAD): an empirical review},

530}

531

532@article{bhat2006overcoming,

533 publisher = {IEEE},

534 year = {2006},

535 pages = {38--44},

536 number = {5},

537 volume = {23},

538 journal = {IEEE software},

539 author = {Bhat, Jyoti M and Gupta, Mayank and Murthy, Santhosh N},

540 title = {Overcoming requirements engineering challenges: Lessons

541from offshore outsourcing},

542}

543

544@article{biggio2012poisoning,

545 year = {2012},

546 journal = {arXiv preprint arXiv:1206.6389},

547 author = {Biggio et al.},

548 title = {Poisoning attacks against support vector machines},

549}

550

551@article{blei2003latent,

552 year = {2003},

553 volume = {3},

554 title = {Latent Dirichlet Allocation},

555 pages = {993--1022},

556 number = {4-5},

557 journal = {Journal of Machine Learning Research},

558 author = {Blei, David M. and Ng, Andrew Y. and Jordan, Michael I.},

559}

560

561@article{bonawitz2019towards,

562 year = {2019},

563 journal = {arXiv preprint arXiv:1902.01046},

564 author = {Bonawitz et al.},

565 title = {Towards federated learning at scale: System design},

566}

567

568@inproceedings{borg2021aiq,

569 organization = {Springer},

570 year = {2021},

571 pages = {66--77},

572 booktitle = {International Conference on Software Quality},

573 author = {Borg, Markus},

574 title = {The AIQ meta-testbed: pragmatically bridging academic AI

575testing and industrial Q needs},

576}

577

578@inproceedings{botterweck2006model,

579 organization = {Springer},

580 year = {2006},

581 pages = {106--115},

582 booktitle = {International Conference on Model Driven Engineering

583Languages and Systems},

584 author = {Botterweck, Goetz},

585 title = {A model-driven approach to the engineering of multiple

586user interfaces},

587}

588

589@article{bourque1999guide,

590 publisher = {IEEE},

591 year = {1999},

592 pages = {35--44},

593 number = {6},

594 volume = {16},

595 journal = {IEEE software},