
Introduction

AI has been identified as the new electricity[*]. Data has been considered the oil for the digital economy. This is also considered the 4th industrial revolution. From this perspective, have we thought about the sustainability of the new electricity: AI?

When the steam engine was deployed in the 1st industrial revolution and electricity was generated in the 2nd industrial revolution, sustainability was not a concern. The rush to gain economic advantage from the 1st and 2nd industrial revolutions caused many long-term problems, such as climate change and related environmental and humanitarian crises[*]. By the time we looked back and considered the sustainability of power and energy generation, significant damage had already been done to humanity. Therefore, we must not make the same mistake in the 4th industrial revolution: AI.

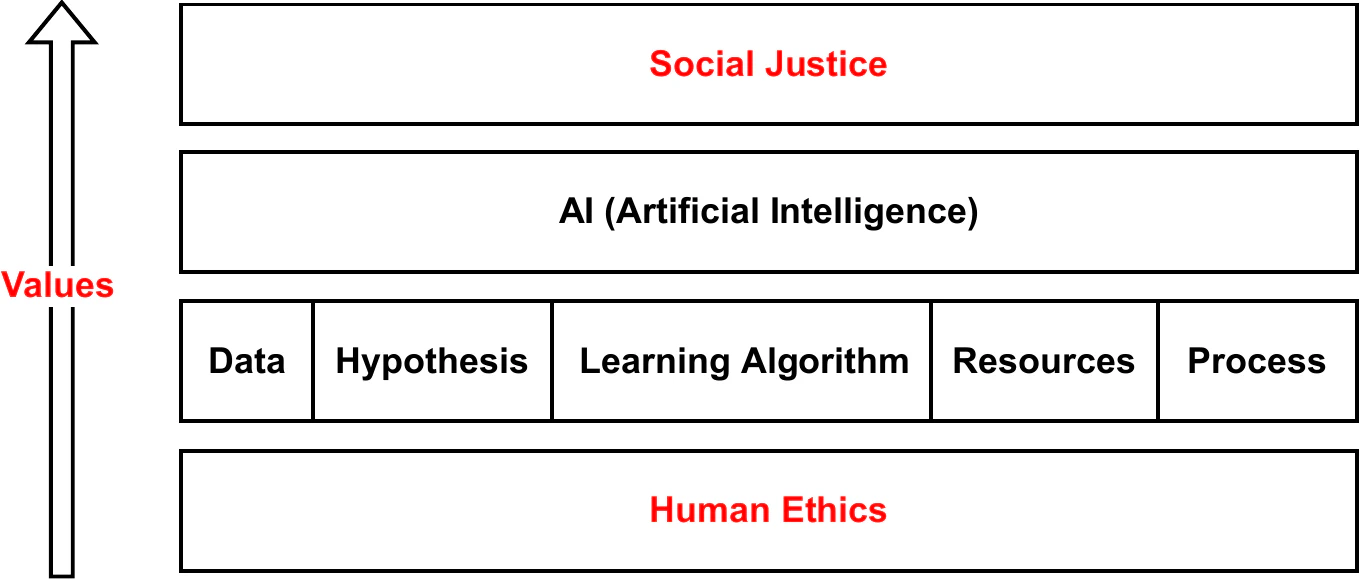

AI governance is a complex process because AI has autonomous decision-making capability. Consequently, AI can create fundamental risks to human dignity, human rights and human autonomy[*,*,*]. Hence, AI ethics and governance must be realised from the very beginning, when humans initiate artificial intelligence. Therefore, AI ethics should be underpinned by human ethics[*].

Human Ethics

Consequentialism and Utilitarianism can be identified as two broad categories of human ethics. Consequentialism is a theory that says whether something is ethical or not depends on its outcomes or consequences. In this way, the focus is on outcomes rather than the overall benefit or process. In contrast, in Utilitarianism, the ethical nature is decided based on whether the process is optimised to maximise the overall benefit to society rather than the outcomes. These two ethical perspectives sometimes create a dilemma, where a decision may be ethical from the Consequentialism perspective but not from the Utilitarianism perspective, and vice versa. Therefore, leaders need to understand both perspectives and make sure AI realisation can be justified from both perspectives as much as possible.

Humans should consider AI as a capability rather than an agent. AI should not take autonomy wherever human dignity is a concern. The fundamental purpose of AI is to transform the values of humans, data and technologies towards social justice (see AI Ethics Intro) by optimising the Consequentialism and Utilitarianism perspectives of human ethics.

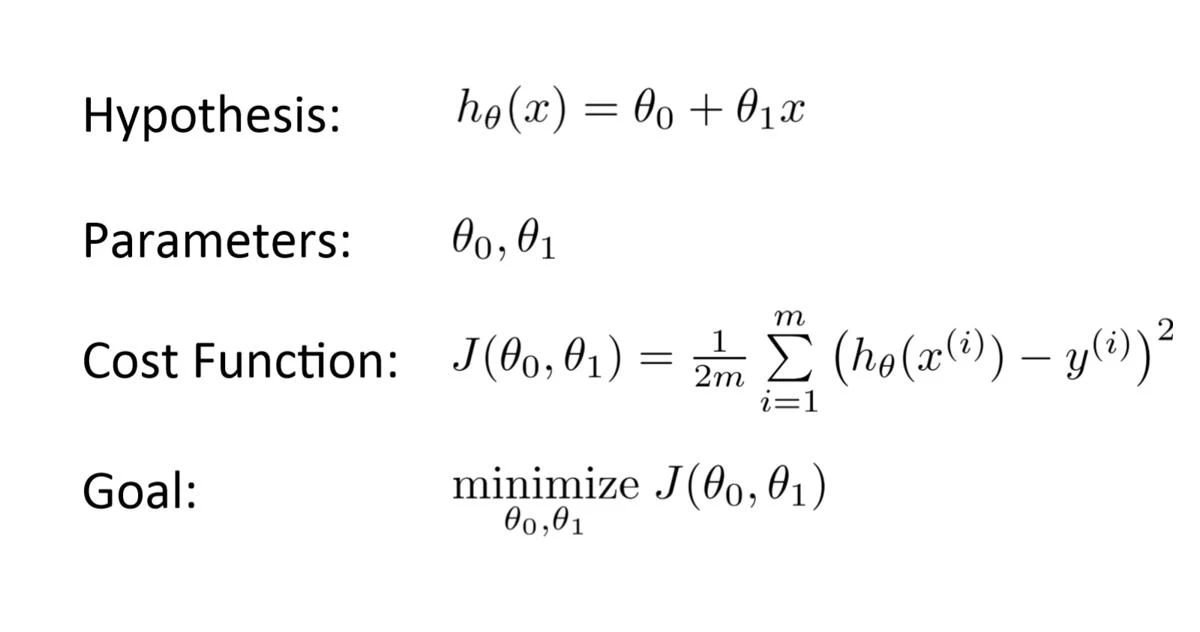

From a technical perspective, humans are accountable for their decisions in AI implementations:

- bias mitigation,

- problem selection,

- opportunity cost evaluation for social justice,

- data selection and sampling,

- insight (features) incorporation,

- algorithm selection,

- hyperparameter tuning,

- regularisation, etc.

AI linear regression shows the basic touch-points of human decision-making in a simple form of an AI algorithm, linear regression. Note how human decision-making influences a typical AI solution from the data, hypothesis, algorithmic, resource and process perspectives. Many tools (e.g., MLOps, ModelOps, AIOps, XOps, DataOps) enable and facilitate deciding and fine-tuning all of those factors and aspects. Our ethics, knowledge and risk appetite determine why, how and what we do, which is why AI governance and ethics are important.
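To make these touch-points concrete, the following is a minimal sketch of a regularised linear regression in which each human decision from the list above is marked in a comment. It is an illustration only, not the author's implementation: the synthetic data, the squared feature, the candidate penalty values and the helper names `fit_ridge` and `predict` are all assumptions introduced here.

```python
import numpy as np

def fit_ridge(X, y, l2_penalty=0.0):
    """Closed-form ridge regression; the intercept is not penalised."""
    Xb = np.hstack([np.ones((X.shape[0], 1)), X])  # prepend intercept column
    penalty = l2_penalty * np.eye(Xb.shape[1])
    penalty[0, 0] = 0.0
    return np.linalg.solve(Xb.T @ Xb + penalty, Xb.T @ y)

def predict(weights, X):
    return weights[0] + X @ weights[1:]

# Human decision: data selection and sampling (synthetic data, assumed here).
rng = np.random.default_rng(seed=0)
x = rng.uniform(-1.0, 1.0, size=200)
y = 2.0 * x + 0.5 + rng.normal(scale=0.1, size=200)

# Human decision: insight (feature) incorporation.
X = np.column_stack([x, x ** 2])

# Human decision: how to split data for training and validation.
train, valid = slice(0, 150), slice(150, 200)

# Human decision: algorithm selection (ridge), hyperparameter tuning
# and regularisation strength.
candidates = [0.0, 0.1, 1.0, 10.0]
scores = {}
for lam in candidates:
    w = fit_ridge(X[train], y[train], lam)
    scores[lam] = float(np.mean((predict(w, X[valid]) - y[valid]) ** 2))

best_penalty = min(scores, key=scores.get)
weights = fit_ridge(X[train], y[train], best_penalty)
print("validation MSE per penalty:", scores)
print("chosen penalty:", best_penalty, "weights:", weights)
```

Every commented step is a place where a different, equally defensible choice would change the fitted model, which is why accountability for the outcome rests with the people making those choices.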

AI from the Consequentialism Perspective

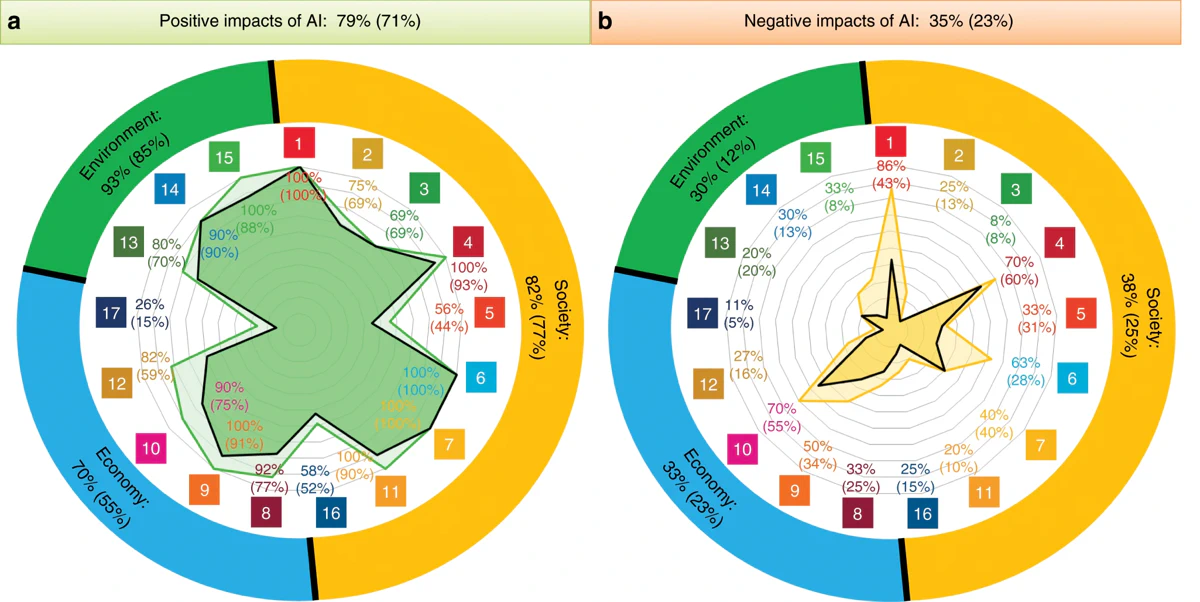

AI can support 79% of the United Nations 17 Sustainable Development Goals (SDGs) (see UN SDGs)[*], which are the foundation of ESG and Social Impact strategies planned to be realised by 2030. In 2015, United Nations member states adopted these 17 SDGs as their 2030 agenda for sustainable development[*]. This agenda establishes a shared framework for peace and prosperity for a sustainable future for people and the planet. The framework supports environmental, social and corporate governance (ESG) for sustainability.

From the Consequentialism perspective of AI ethics, the UN SDGs provide a globally acceptable ethical framework for AI governance. However, depending on the governance and ethics of AI, there can be pros and cons in AI applications. AI for SDGs shows how AI impacts each UN SDG positively and negatively.

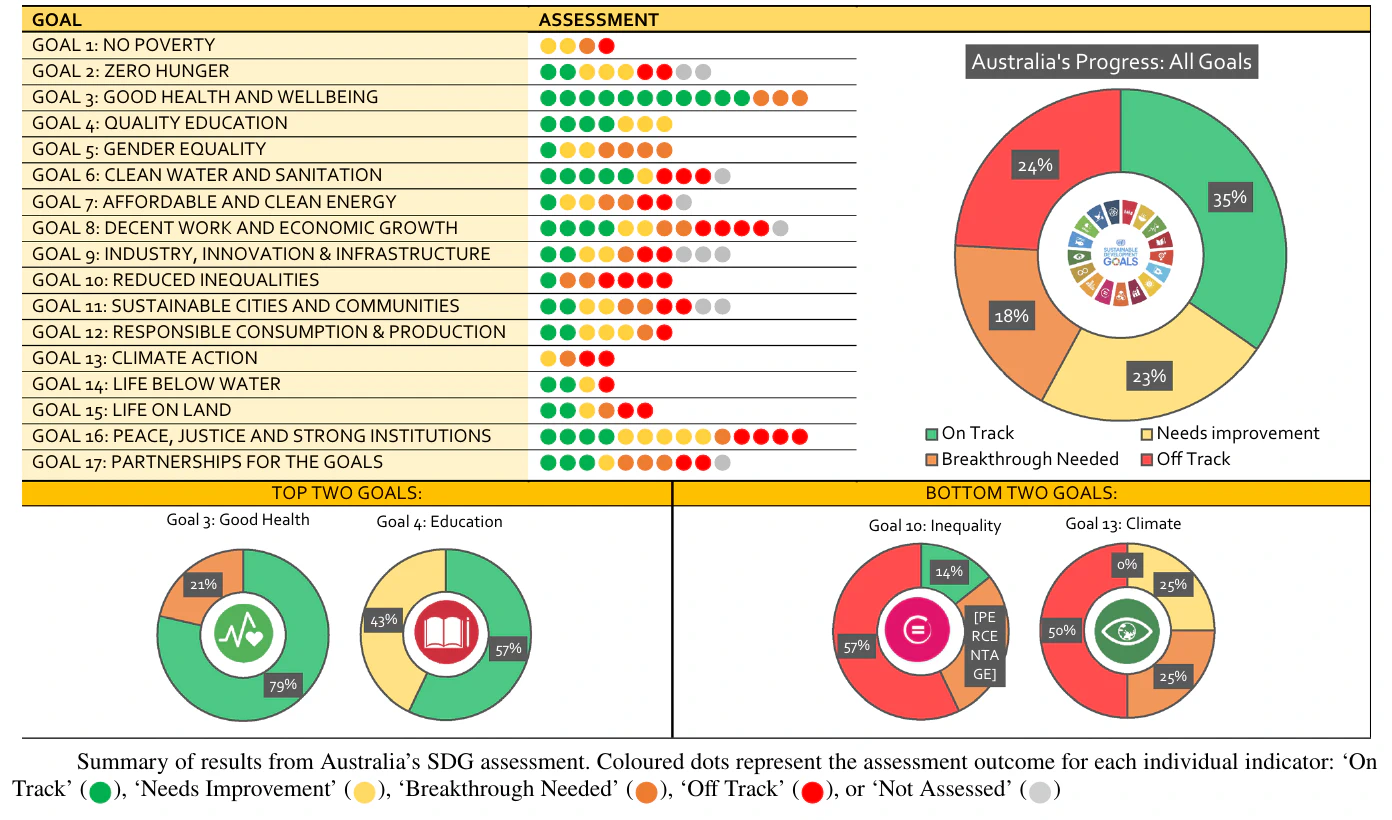

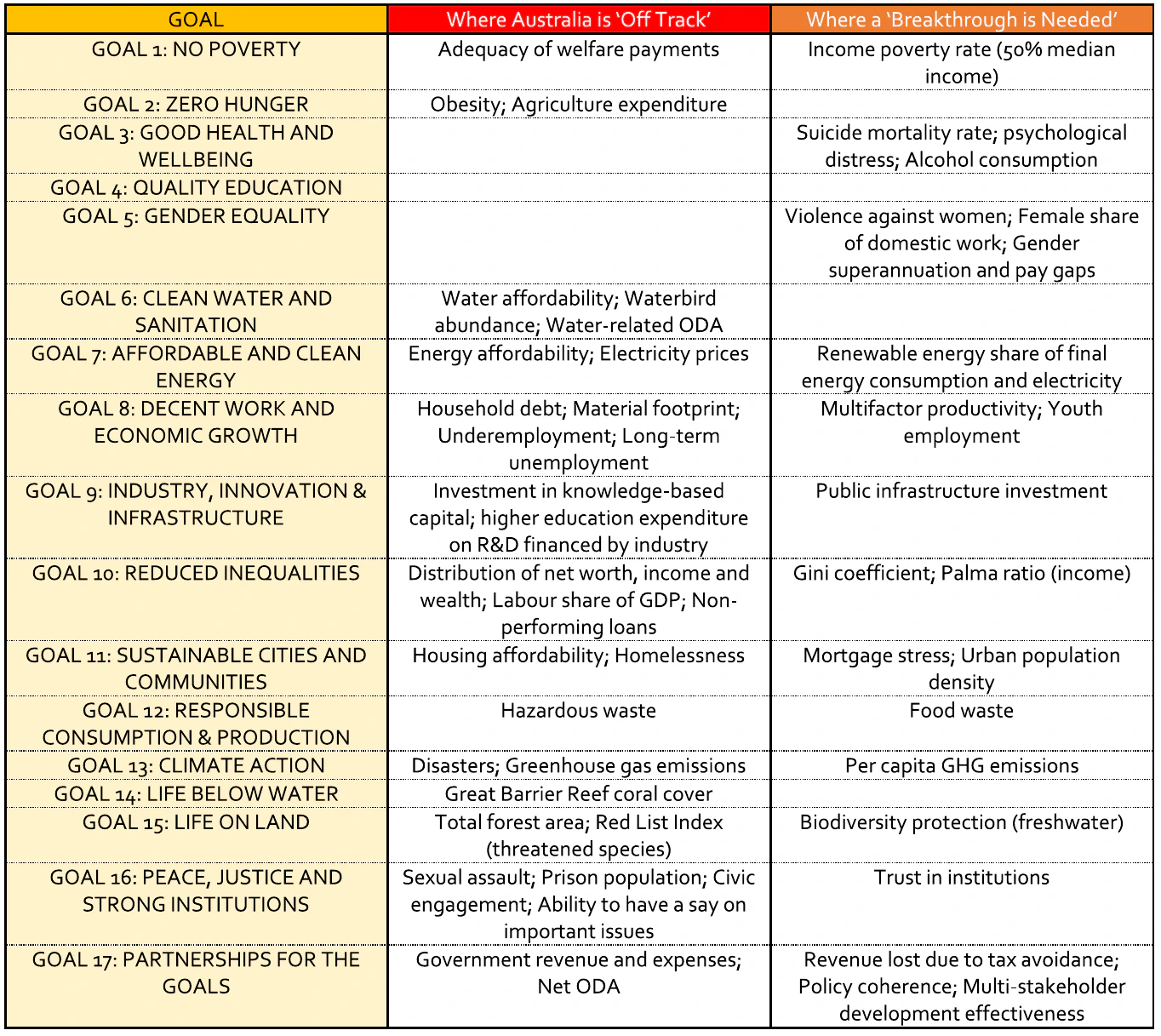

The UN SDGs are an urgent call for action by all countries, developed and developing, in a global partnership; Australian organisations must address this diligently. Australia still has a long journey ahead in achieving the UN SDGs. AUS SDGs performance illustrates the results of Australia’s SDG assessment[*]. Note the goals in which Australia is off track and needs a breakthrough.

Moreover, AUS SDGs Risks summarises the Australian concerns related to unsatisfactory progress in each UN SDG analysed in[*].

Therefore, in its effort to accelerate the economy with AI, the government should focus on achieving the UN SDGs effectively, which will promote AI ethics, governance and AI for sustainability.

AI from the Utilitarianism Perspective

In the Utilitarianism perspective of AI ethics and governance, the motivation would be to maximise the overall benefit to society instead of morality. In this perspective, leaders are encouraged to look at more granular, customised designs and implementations rather than premeditated norms, moral conventions or solutions (which are more focused on the Consequentialism perspective). The following are important design concerns when focusing on AI ethics and sustainability of AI from the Utilitarianism perspective.

Bias

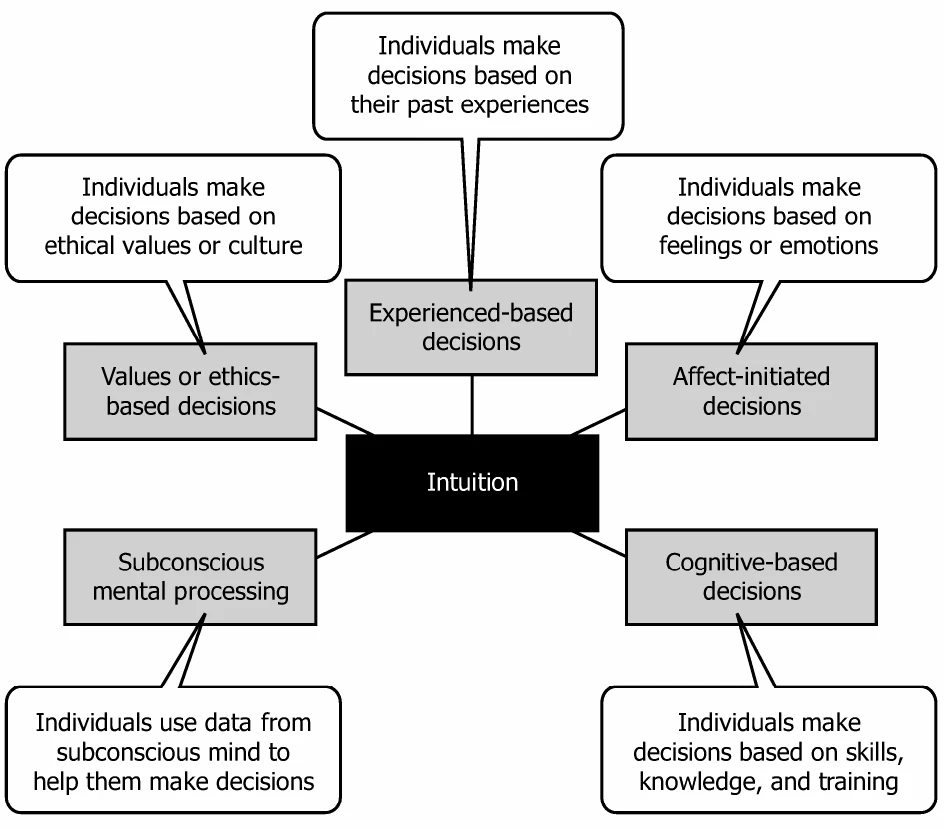

Bias in data, algorithms and people is the fundamental cause of the failure of AI implementations. Unlike many other applications, AI is introduced to involve autonomous, semi-autonomous or prescriptive decision-making. Therefore, it is important to mitigate the biases in AI to maximise social justice. Leaders should be self-aware and conscious, and should avoid intuitive decisions on AI implementations, management and governance. Intuition shows the traits of intuitive decision-making. Collaboration, partnerships and working as a distributed network are recommended by the 17th UN SDG to overcome those traits by promoting diversity, equity and inclusion among the people realising AI.

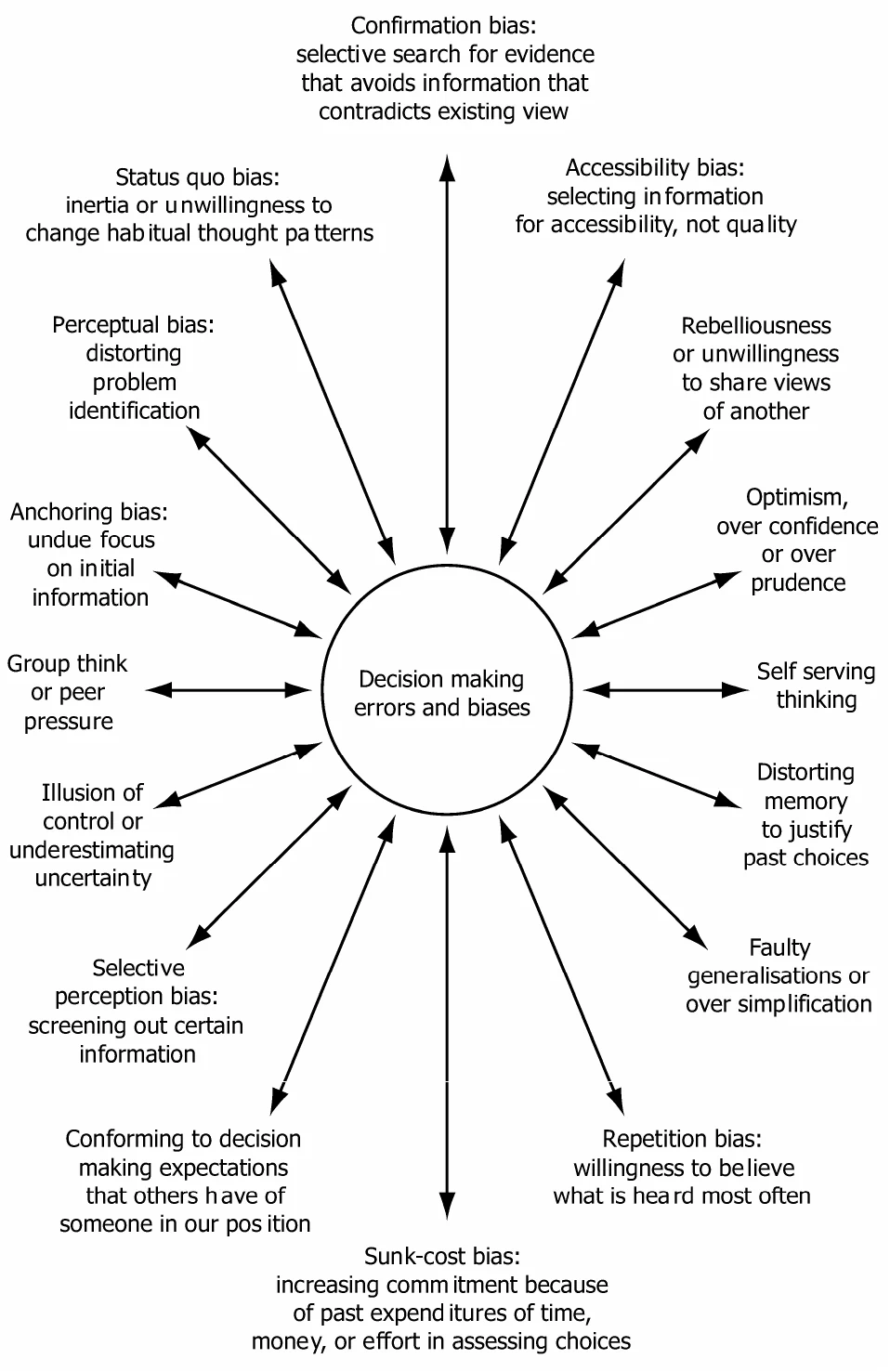

It is understood that each individual has their own biases, traits and ways of thinking. That is why collective decision-making with a diverse group is more effective than individual decision-making. Biases shows various decision-making errors and biases that leaders should be aware of when forming, norming and driving AI strategies and transformation. Diverse perspectives, more information, more alternatives, and different thinking styles are key success factors of the Utilitarianism perspective of AI ethics. They help democratise AI, avoid disparities, and enable meaningful participation and representation[*].

Diversity

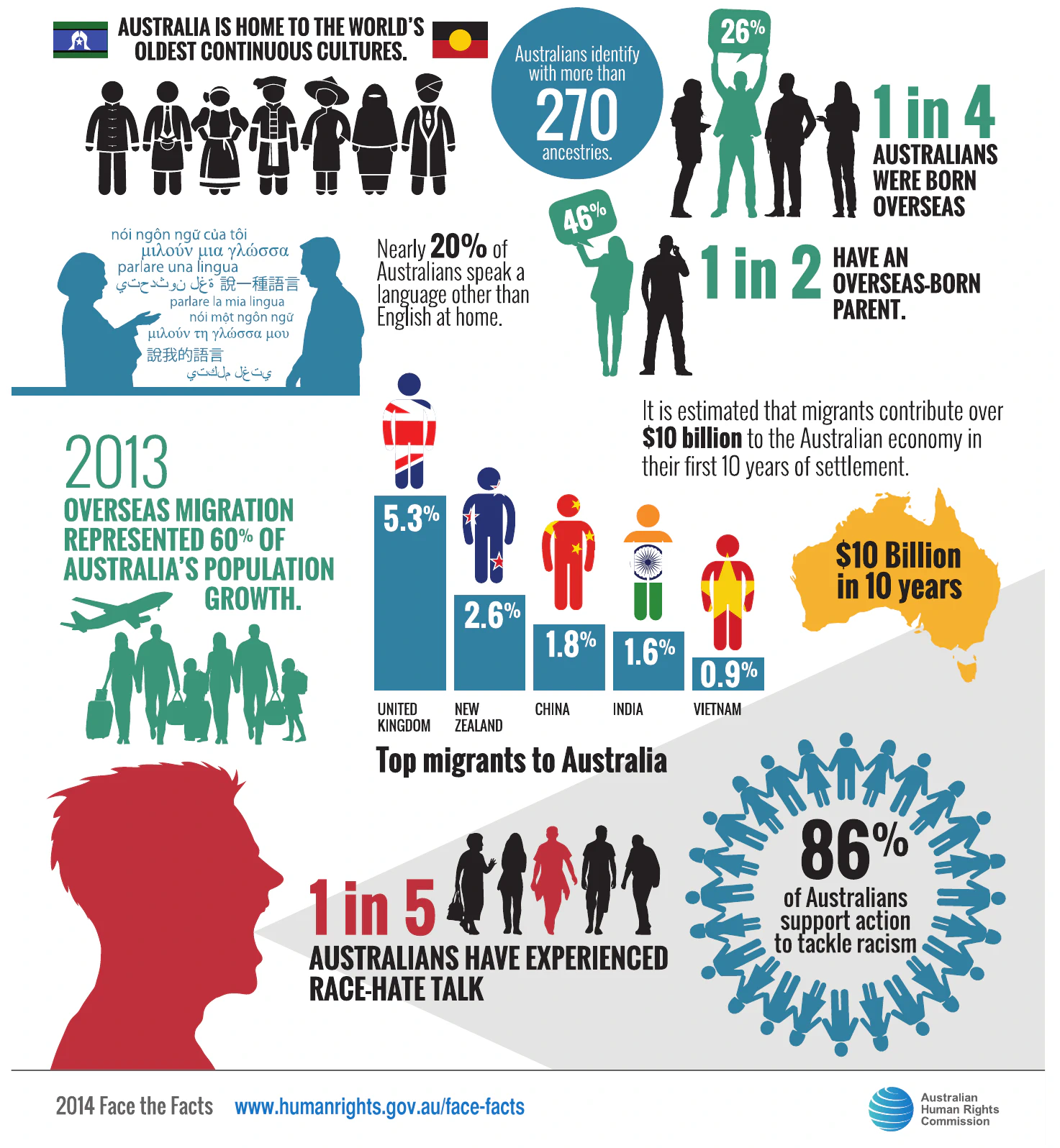

Australia has a vibrant multicultural community (see AUS Cultural Diversity). This is one of Australia's unique characteristics. The Aboriginal and Torres Strait Islander peoples’ culture is the world’s oldest continuous culture. Australians identify with more than 270 ancestries. Since 1945, almost 7 million people have migrated to Australia. This rich culture is one of the greatest strengths behind Australia's economic prosperity. Therefore, it is important to consider this great diversity when mitigating biases and promoting inclusion in AI initiatives.

Leaders should bring diversity to AI solutions by enabling equity and inclusion. “Neither a person nor an apple can be diverse. Diversity is the property of a collection of people—a basket with many kinds of fruit”[*]. Gender equality and reduced inequalities are key sustainability focuses, addressed through the 5th and 10th UN SDGs. In addition, Australian anti-discrimination law was established to eliminate all forms of discrimination, which is an integral part of promoting diversity[*].

Impartiality and Localisation

Impartiality and localisation are two important objectives in an equitable AI solution. When managing impartiality, retaining fairness to locality is equally important. If the AI model is generalised across the entire population, it may be justified as an impartial solution but might not be fair for minority groups. Conversely, deploying multiple locally optimised models may create injustice to people at the margins of the segments and cause issues from the impartiality perspective.

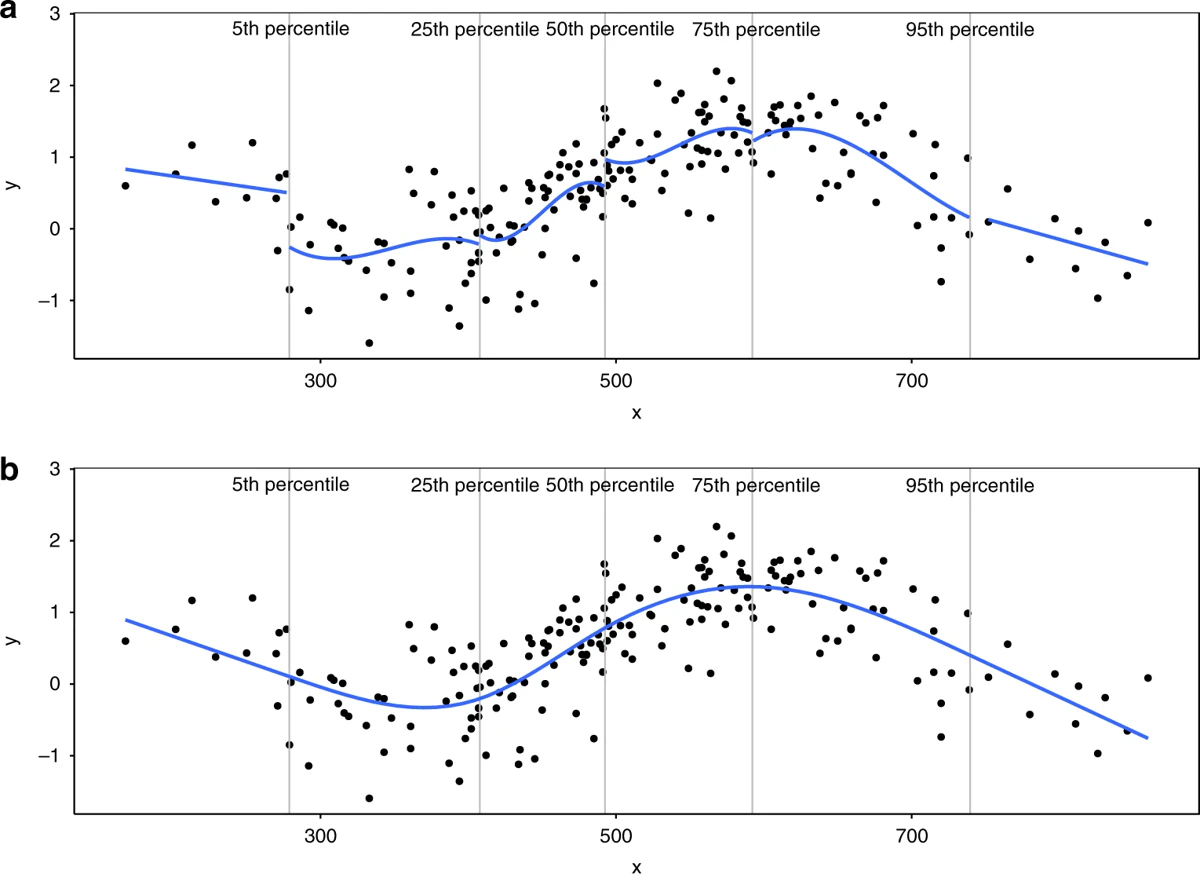

Restricted cubic spline shows two modelling strategies on complex and diversified data points. In machine learning, regularisation techniques generalise the model while mitigating overfitting. Sometimes, regularisation may neglect minority requirements. Therefore, the model complexity should be determined by accounting for both the impartiality and the localisation of the solution.
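The trade-off can be sketched numerically. The example below is an illustration under stated assumptions, not the spline model from the figure: it swaps in ridge regression as the regularisation technique, uses synthetic data with a hypothetical 90/10 majority/minority split, and gives the minority group a constant offset that the model can only capture through a group-indicator feature. The helper names `fit_ridge` and `group_mse` are introduced here for illustration.

```python
import numpy as np

def fit_ridge(X, y, l2_penalty):
    """Closed-form ridge regression; the intercept is not penalised."""
    Xb = np.hstack([np.ones((X.shape[0], 1)), X])
    penalty = l2_penalty * np.eye(Xb.shape[1])
    penalty[0, 0] = 0.0
    return np.linalg.solve(Xb.T @ Xb + penalty, Xb.T @ y)

def group_mse(weights, X, y, mask):
    """Mean squared error restricted to the rows selected by mask."""
    pred = weights[0] + X[mask] @ weights[1:]
    return float(np.mean((pred - y[mask]) ** 2))

# Synthetic population (assumption): 900 majority and 100 minority records.
rng = np.random.default_rng(seed=1)
n_major, n_minor = 900, 100
is_minor = np.r_[np.zeros(n_major), np.ones(n_minor)].astype(bool)
x = rng.normal(size=n_major + n_minor)

# The minority group follows the same trend but with a constant offset.
y = x + 3.0 * is_minor + rng.normal(scale=0.1, size=x.size)
X = np.column_stack([x, is_minor.astype(float)])

# Compare no regularisation with a deliberately strong penalty.
results = {}
for lam in (0.0, 1000.0):
    w = fit_ridge(X, y, lam)
    results[lam] = {
        "majority": group_mse(w, X, y, ~is_minor),
        "minority": group_mse(w, X, y, is_minor),
    }

for lam, errors in results.items():
    print(f"penalty={lam}: {errors}")
```

In this setup the strong penalty shrinks the group-indicator coefficient, so the error rises far more for the minority group than for the majority. Reporting error per group, rather than a single overall figure, is one simple way to make that effect visible when choosing model complexity.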

Equity

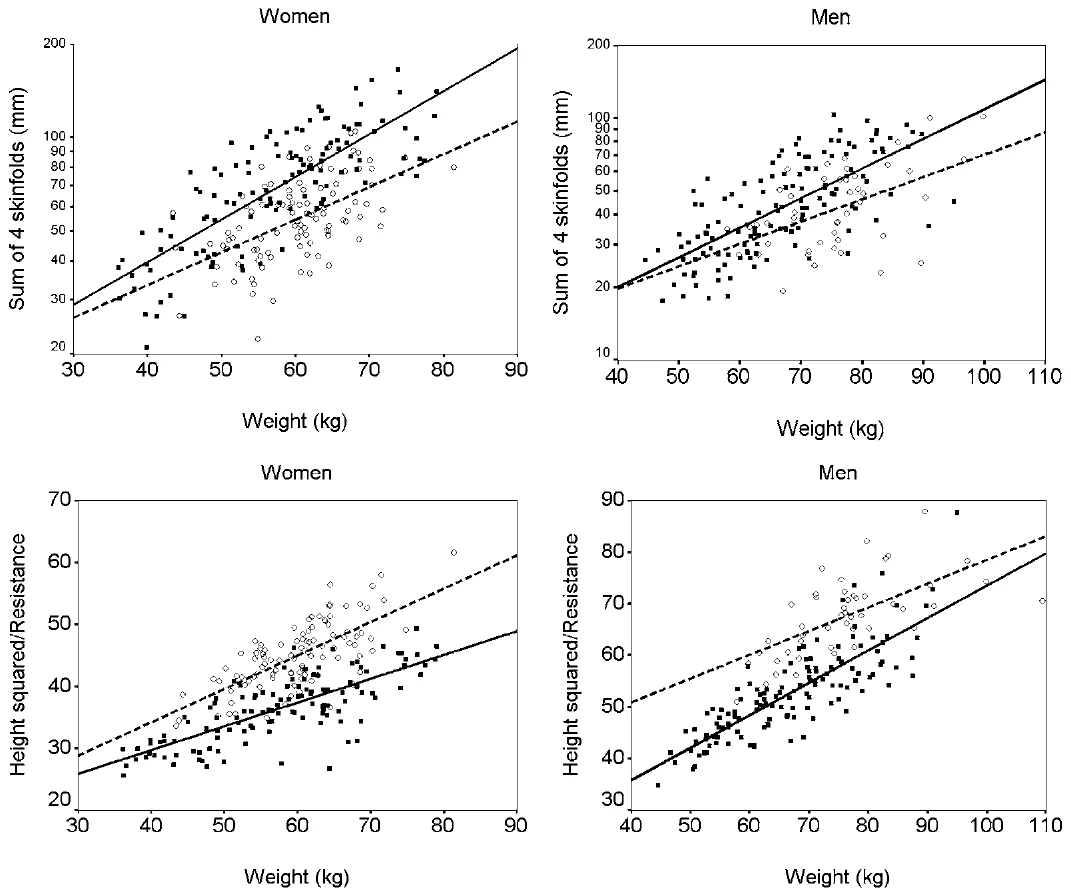

Equity is an important concern in social justice, and it is highly relevant to Australia's multicultural society. Bringing AI equity to relevant groups is important when creating value or making decisions from an ethical perspective. For example, Aboriginal and European Australians have a significantly different body fat distribution and fat mass for a given body weight or BMI. Research has found (see BMI FNP) that the BMI ranges used to determine weight status for the majority of Australians may be inappropriate for Australian Aboriginal people[*].

Inclusion

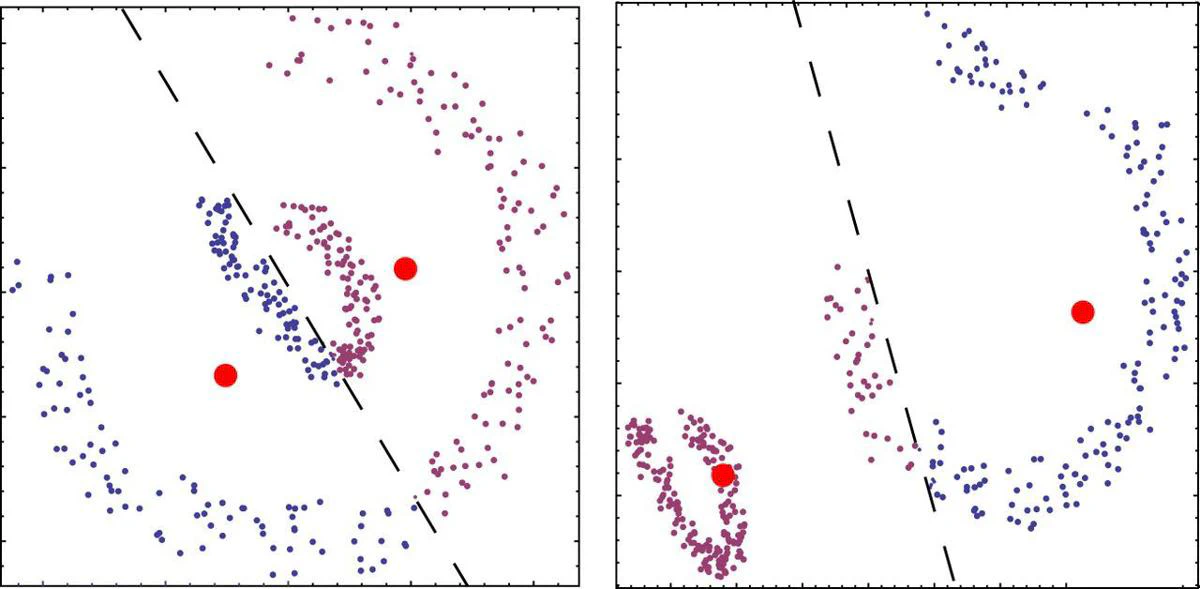

Reducing the overfitting of an AI algorithm by regularisation and/or dimensionality reduction may disregard important attributes related to minority groups. Therefore, data scientists should bring the right amount of data insight to the design to enhance inclusiveness, which can be considered a controlled bias. For example, the initialisation of hyperparameters is often important at the start of unsupervised learning. This intentional bias can enhance the quality of an AI solution. Poor control of machine learning is difficult to compensate for and can lead to undesirable outcomes (see Bias Clustering)[*].
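As a minimal sketch of such controlled bias, the k-means routine below takes its initial centroids as an explicit input, so a practitioner can seed one centroid where a small group is known to exist. Everything here is an assumption made for illustration: the synthetic two-dimensional data, the group locations, and the helper names `assign` and `kmeans`. It is not the method of the cited clustering study.

```python
import numpy as np

def assign(points, centroids):
    """Index of the nearest centroid for every point."""
    distances = np.linalg.norm(points[:, None, :] - centroids[None, :, :], axis=2)
    return distances.argmin(axis=1)

def kmeans(points, initial_centroids, n_iter=100):
    """Plain Lloyd's algorithm started from caller-supplied centroids."""
    centroids = np.array(initial_centroids, dtype=float)
    for _ in range(n_iter):
        labels = assign(points, centroids)
        updated = centroids.copy()
        for k in range(len(centroids)):
            members = points[labels == k]
            if len(members) > 0:  # keep an empty cluster's centroid in place
                updated[k] = members.mean(axis=0)
        if np.allclose(updated, centroids):
            break
        centroids = updated
    labels = assign(points, centroids)
    inertia = float(((points - centroids[labels]) ** 2).sum())
    return labels, centroids, inertia

# Synthetic data (assumption): two large groups and one small minority group.
rng = np.random.default_rng(seed=2)
group_a = rng.normal(loc=(0.0, 0.0), scale=0.3, size=(200, 2))
group_b = rng.normal(loc=(5.0, 0.0), scale=0.3, size=(200, 2))
minority = rng.normal(loc=(2.5, 4.0), scale=0.3, size=(10, 2))
points = np.vstack([group_a, group_b, minority])

# Controlled bias: seed one centroid where the minority is known to be.
informed_init = [(0.0, 0.0), (5.0, 0.0), (2.5, 4.0)]
labels_informed, _, inertia_informed = kmeans(points, informed_init)

# Uninformed start: the first three records, all from the largest group.
labels_naive, _, inertia_naive = kmeans(points, points[:3])

print("informed inertia:", inertia_informed)
print("naive inertia:", inertia_naive)
```

With the informed start, the minority records receive a cluster of their own. The uninformed start may or may not reach the same partition, which is the point: the initialisation is a human decision that shapes whether a small group is represented.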

Complexity in AI Governance

The AI spectrum is quite broad[*]. From IoT sensor management to smart city development, different stakeholders should consider different perspectives such as social justice, strategy, technology, sustainability, ethics, policies, regulations and compliance. Moreover, things get even more complex when different perspectives are entangled. For example:

- Environmental and Social: AI has been identified as a key enabler for 79% (134 targets) of the United Nations (UN) Sustainable Development Goals (SDGs)[*]. However, 35% (59 targets) may experience a negative impact from AI. While the environment has the highest potential to benefit, society bears the most negative impact from AI, which raises social concerns.

- Environmental and Technology: Cloud computing is promising because of the availability and scalability of resources in data centres. With emerging telecommunication technologies (e.g., 5G), the energy consumed when transferring data from IoT/edge devices to the cloud has become a concern for carbon footprint and sustainability. This energy concern is a factor that shifts the technology landscape from cloud computing to fog computing[*].

- Economic and Sustainability: Businesses are driving AI, hoping it can contribute about US$15.7 trillion to the world economy by 2030[*]. On the other hand, the UN SDGs are also planned to be achieved by 2030 in areas critically important for humanity and the planet[*]. The synergy between AI economic and sustainability strategies will be essential.

- Economic and Social: Businesses are driving AI, hoping it can contribute about US$15.7 trillion to the world economy by 2030. However, research found that 85% of AI projects will fail due to bias in data, algorithms, or the teams responsible for managing them[*]. Therefore, AI ethics and governance for the sustainability of AI have become a key success factor for economic goals in AI.

- Economic and Ethical: So far, no government has been able to pass an AI law; only ethical frameworks or regulatory guidelines exist[*]. Therefore, there are many emerging AI risks for humanity on our way to economic prosperity, such as autonomous weapons, automation-spurred job loss, socioeconomic inequality, bias caused by data and algorithms, privacy violations, and deepfakes[*].

On the other hand, the complex differences in AI applications don’t necessarily mean there are no similarities in other perspectives such as cultural values, community or strategy. For example, similar organisations may work on different sustainability goals for social justice. Such differences in AI strategy should not obstruct partnership and collaboration opportunities between them.
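As a quick arithmetic check on the Environmental and Social example above, the quoted percentages can be reproduced from the target counts. The one figure not stated in the text is the denominator: the 2030 Agenda defines 169 targets across the 17 goals, which is assumed here.

```python
# Total number of SDG targets defined by the 2030 Agenda (17 goals, 169 targets).
TOTAL_SDG_TARGETS = 169

enabled_targets = 134    # targets AI may help achieve, as quoted in the text
inhibited_targets = 59   # targets AI may affect negatively, as quoted in the text

enabled_share = round(100 * enabled_targets / TOTAL_SDG_TARGETS)
inhibited_share = round(100 * inhibited_targets / TOTAL_SDG_TARGETS)

print(f"enabled: {enabled_share}%  inhibited: {inhibited_share}%")
```

The two shares add up to more than 100% because a single target can be both enabled and inhibited by AI, depending on how it is applied.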

A Framework and a Model for AI Governance

When addressing AI governance requirements, the complexity of the AI can be identified as the main challenge[*]. Unlike any other technology, AI governance is complex because of its autonomous decision-making capability and influence on people’s decision-making. Hence, AI governance is entangled with human ethics, which must be realised where artificial intelligence is applied or influenced. We introduced a framework and model with the simple golden circle in mind. They help directors find solutions for why, how and what questions when governing AI. First, the innovative KITE conceptualised abstraction framework helps directors drive the purpose of AI initiatives to address key success factors. With the support of the KITE abstraction framework, the innovative Wind-turbine conceptualised model helps to develop a comprehensive AI strategy for organisations. These frameworks and models help drive AI for sustainability in more structured, systematic, transparent, and collaborative ways.

KITE abstraction framework

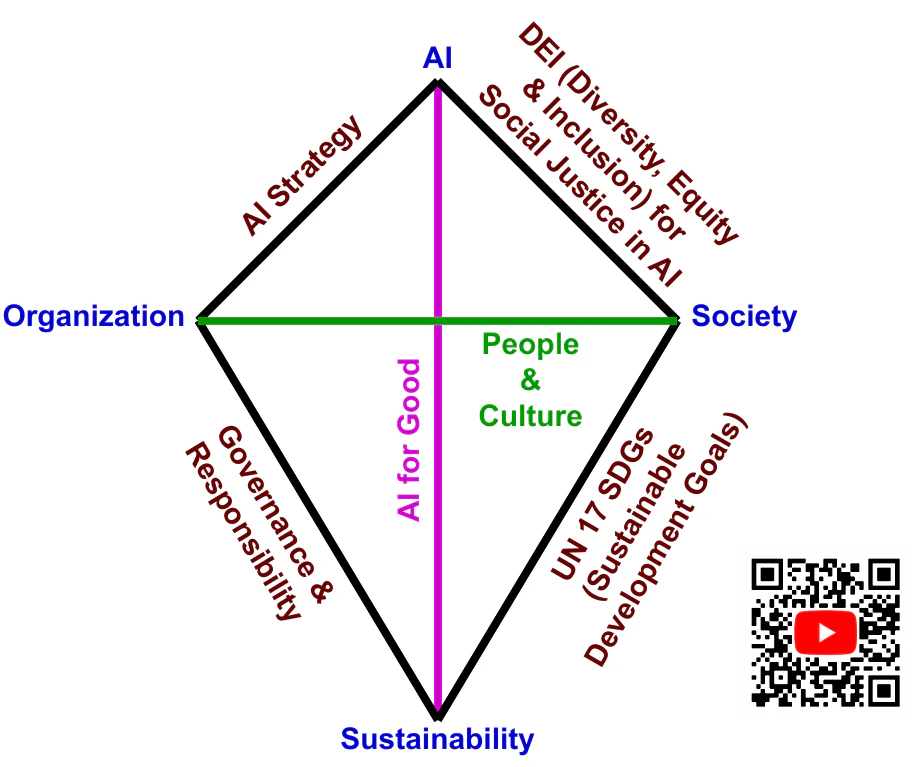

The KITE abstraction framework (see KITE)[*]helps directors govern AI aligning with the broader ESG purpose, fundamentally the why aspect of the golden circle. Irrespective of the complexity of the AI application, this framework analyses the four key dimensions of

- 1.] AI,

- 2.] Organisation,

- 3.] Society, and

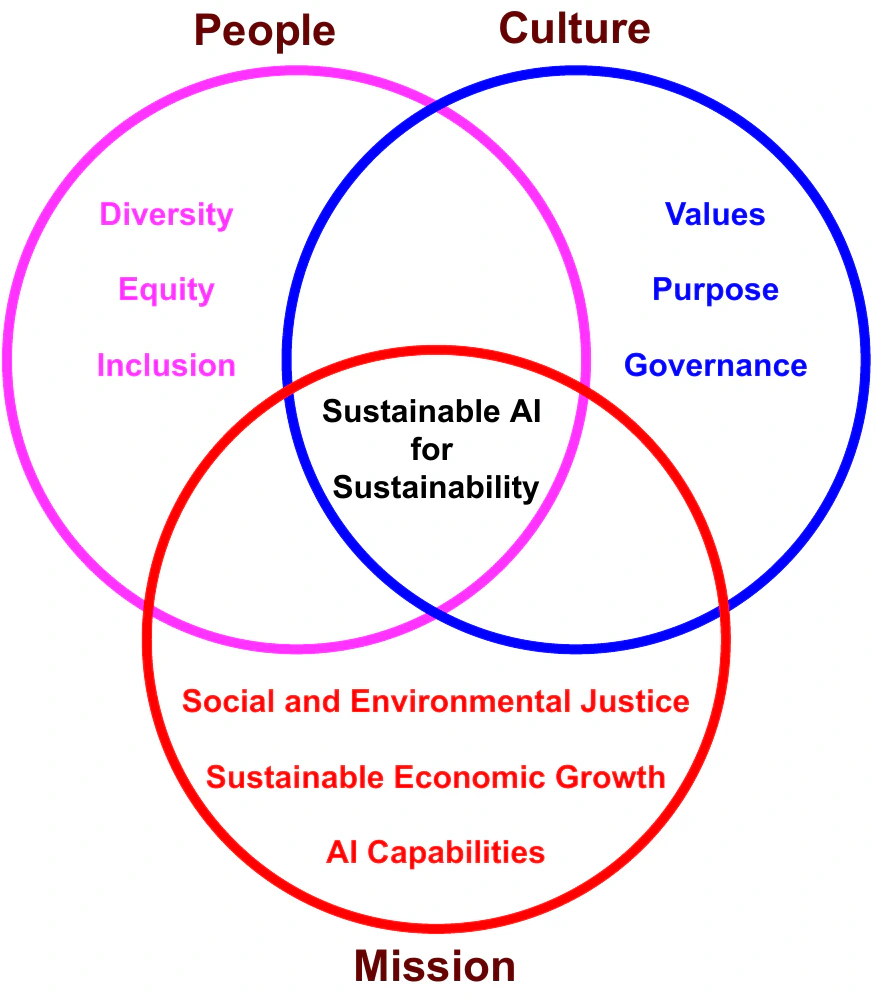

- 4.] Sustainability. The interdependencies of these dimensions enable addressing of AI strategy, AI for Good and United Nations Sustainable Development Goals. Further, it helps mitigate AI risks due to biases by bringing social diversity, equity and inclusion to AI governance. As illustrated in the diagram, it helps organisational governance and responsibilities by guiding the orchestration of people, culture and AI mission towards sustainability.

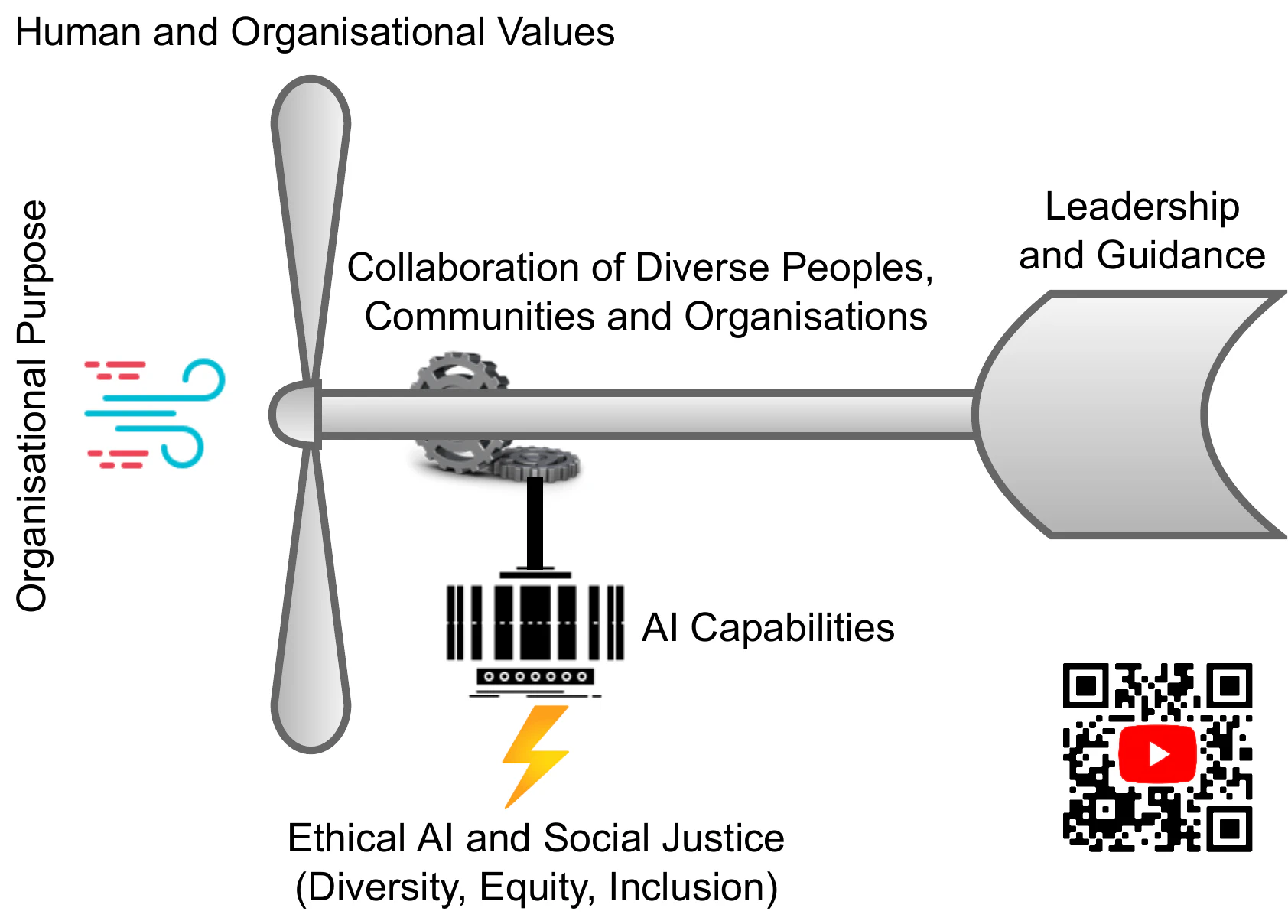

Wind-turbine conceptualised model

The wind-turbine conceptualised model (see Wind-turbine)[*] helps directors address how and what aspects of AI governance. The model helps oversee AI processes supporting social justice with social diversity, equity and inclusion. From the organisational perspective, this model directs the AI initiative towards humanity and sustainable development goals (SDGs) for minimising human suffering. Further, this model helps oversee the operations and management, represented by the tail of the wind turbine. The front-faced multi-blade rotor represents the values and policies (e.g., seven fundamental principles) that ethically and efficiently address humanitarian needs, risks and suffering. The wheels in the gearbox represent the community, partners and volunteers who are continually helping with diversity, equity and inclusion. Finally, the generator represents the Data and AI capabilities that drive the AI innovation and transformation for sustainability. In summary, directors can oversee the full spectrum of the AI processes, stakeholders, and management.

People, Culture and Mission

To make sure AI for good programs serve humanity and sustainability, it is important to mitigate the biases in decision-making in leadership, management and governance while managing the projects that enhance social justice. Doing so ensures that we can realise AI ethics and sustainable development goals.

However, to minimise biases and enhance social justice, social diversity, equity and inclusion must be brought to leadership, management and governance. Only then can we achieve the utilitarianism and consequentialism perspectives of human ethics, which can underpin AI ethics for serving humanity and sustainability. Our framework helps all stakeholders, including communities, volunteers and partners, to collaborate on sustainable development goals and social justice.

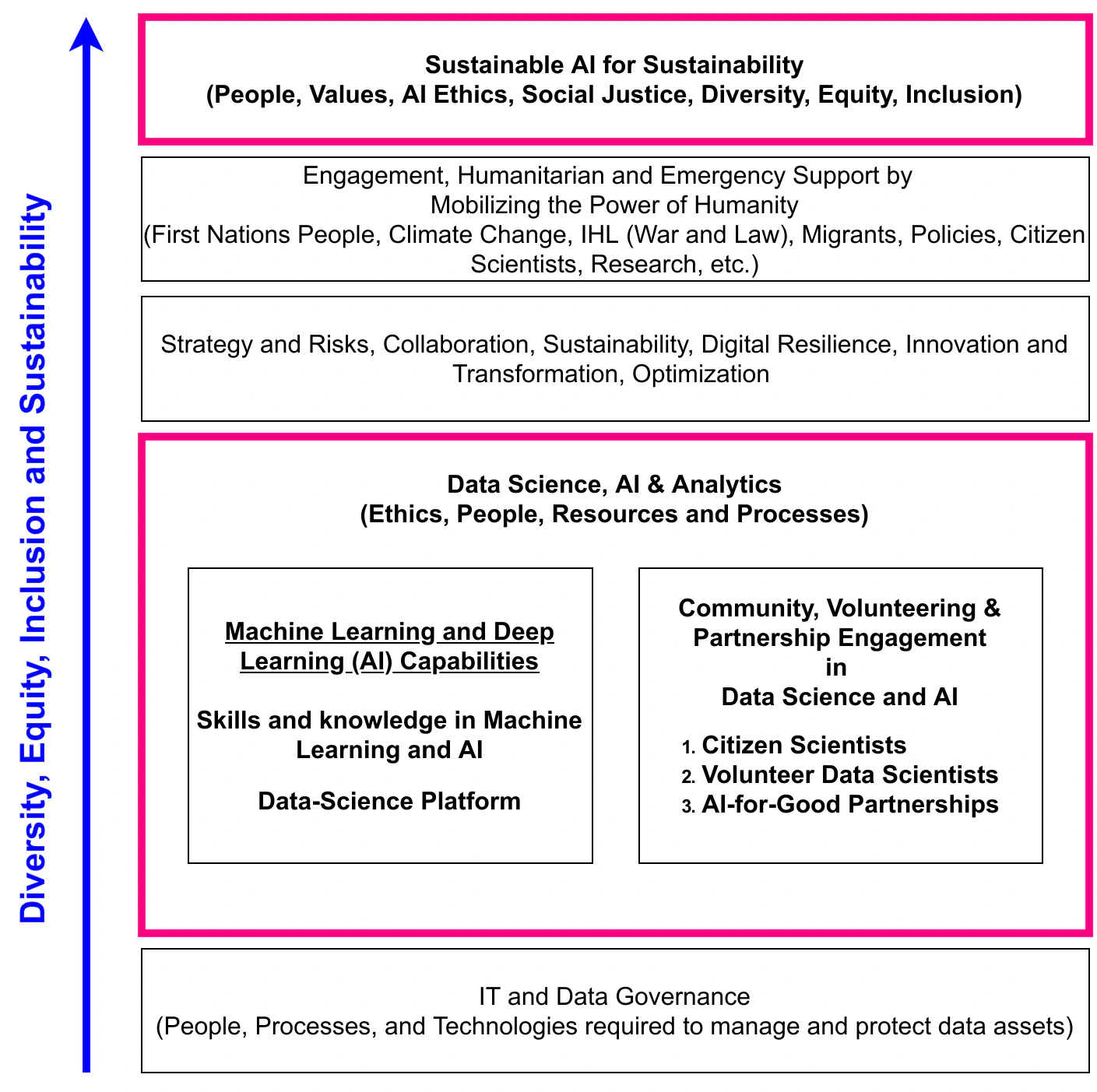

From the corporate governance and management perspective, this framework helps the corporate board, human resources (HR) and management to orchestrate culture, people and mission towards humanity and sustainability. Wind-turbine illustrates how the synergy between corporate culture, people and mission can drive AI ethics towards sustainable AI and goals[*].

Adaptation of the Framework

The adaptation of the proposed framework helps create value based on data wisdom[*]. It helps AI innovation and transformation towards social justice. As shown in DEI in AI[*], the data science and AI as a service layer supports business strategies of ESG by leveraging data and IT assets while enhancing DEI (diversity, equity and inclusion), brand advocacy, customer experience (CX), and return on investment (ROI).

The proposed framework establishes synergy between AI governance and social justice by mobilising the organisational culture towards AI-driven innovation and transformation. Greater social diversity, equity and inclusion can be expected in AI initiatives, which enable ethical inclusion, processes and outcomes in AI. Sustainable AI and the sustainable development goals will be a primary focus in AI developments that drive business objectives and corporate social responsibilities. The ideation of this strategy can be illustrated by The AI Golden Circle[*].

Conclusion

AI will be a key capability for future prosperity. Good governance of AI is very important to mitigate AI risks and create value. AI frameworks and standards are emerging to govern AI in alignment with human ethics and emerging environmental, social, and corporate governance (ESG) principles. In brief, diversity, equity and inclusion (DEI) together with social and cultural values can make AI initiatives vibrant and sustainable. Further, they will mitigate biases related to AI, including biases in data, algorithms, people, and processes. This book’s recommendations will help leaders orchestrate people, culture, and mission toward sustainable AI for social justice.

Author’s Biography

Dr Mahendra Samarawickrama (GAICD, MBA, SMIEEE, ACS(CP)) is the ICT Professional of the Year 2022 in the ACS Digital Disruptors Awards. He is a highly accomplished leader having an impressive track record of driving visions, technology innovations and transformation towards humanity, social justice, and sustainability. He is a founding director of the Centre for Ethical AI and the Centre for Sustainable AI. He supports the formation of organisational Environmental, Social, and Governance (ESG) strategy and drives ESG projects leveraging emerging technologies. He specialises in directing AI, Data Science and Customer Experience (CX)-focussed teams on building state-of-the-art capabilities. He is an author, inventor, mentor, advisor and regularly speaks at various technology forums, conferences and events worldwide. Many of his publications and frameworks related to AI governance and ethics are spotlighted in national and international forums.

As the Manager of the Data Science and Analytics team in the Australian Red Cross, he has developed an AI governance and strategy framework crucial to the business’ successful deployment of Data Science and AI capabilities to mobilise the power of humanity. He built the Volunteer Data Science and Analytics team from the ground up, supporting the Australian Red Cross’s strategic goals. He is supporting the business for personalised engagement of customers for disaster resilience in these demanding times of pandemic, natural disasters, and global conflicts. He is also a co-author of the IFRC data playbook and contributed to the data science and emerging technology chapter for AI governance, ethics, and literacy. In all these processes, he valued diversity, equity and inclusion. In recognition of this, his team became finalists in 1) the Diversity, Equity and Inclusion in Action Award in the 2021 IoT Awards, 2) the Best Use of Technology to Revolutionise CX Award in the 2021 Ashton Media CX Awards, 3) the Service Transformation for the Digital Consumer for Not-for-Profit/NGO in 2022 ACS Digital Disruptors Awards, and contributed to winning the CX Team of the Year Award in 2021 Ashton Media CX Awards. All of these awards are prestigious national awards.

He is an industry collaborator who actively leads technology innovation-and-transformation initiatives and partnerships toward humanity, social justice and sustainability. In this perspective, he is an Advisory Council Member in Harvard Business Review (HBR), an Expert in AI ethics and governance at Global AI Ethics Institute, an industry Mentor in the UNSW business school, a senior member of IEEE (SMIEEE), an honorary visiting scholar at the University of Technology Sydney (UTS), an Advisor for Data Science and Ai Association of Australia (DSAi), and a graduate member of the Australian Institute of Company Directors (GAICD).

He has recently established a YouTube channel and a Twitter channel to share his knowledge with the community. With a PhD in Computer Science and Masters degrees in Business Administration and Project Management, he brings the capacity to steer organisations through the complex, data-driven problems of our time.

Bibliography

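@article{Mahendra2022PMC,
  title = {{AI Governance and Ethics Framework for Sustainable AI and Sustainability}},
  journal = {Submission in response to the Department of the Prime Minister and Cabinet issues paper Positioning Australia as a leader in digital economy regulation - Automated Decision Making and AI Regulation},
  author = {Samarawickrama, Mahendra},
  year = {2022},
  month = {04}
}

@Misc{samarawickrama-2021A,
  author = {Samarawickrama, Mahendra},
  title = {{KITE: An Abstraction Framework for Reducing Complexity in AI Governance }},
  month = {10},
  year = {2021},
  howpublished = {\url{https://unstats.un.org/unsd/undataforum/blog/KITE-an-abstraction-framework-for-reducing-complexity-in-ai-governance/}}
}

@article{samarawickrama-2022,
  author = {Samarawickrama, Mahendra},
  journal = {Company Director},
  month = {03},
  number = {02},
  pages = {52--53},
  title = {{Keeping AI Honest}},
  volume = {38},
  year = {2022},
}

@article{samarawickrama-2021B,
  author = {{Samarawickrama, M. G}},
  journal = {Hyperight},
  title = {{Social justice and sustainability by leveraging data science and AI: Interview with Dr. Mahendra Samarawickrama}},
  year = {2021},
}

@article{PMID:12879090,
  Title = {Relation of adiposity and body fat distribution to body mass index in Australians of Aboriginal and European ancestry},
  Author = {Piers, LS and Rowley, KG and Soares, MJ and O'Dea, K},
  DOI = {10.1038/sj.ejcn.1601630},
  Number = {8},
  Volume = {57},
  Month = {August},
  Year = {2003},
  Journal = {European journal of clinical nutrition},
  ISSN = {0954-3007},
  Pages = {956--963},
  URL = {https://doi.org/10.1038/sj.ejcn.1601630},
}

@article{Lorimer2017ClusteringHM,
  title={Clustering: how much bias do we need?},
  author={Tom Lorimer and Jenny Held and Ruedi Stoop},
  journal={Philosophical Transactions of the Royal Society A: Mathematical, Physical and Engineering Sciences},
  year={2017},
  volume={375}
}

@article{Gauthier2019,
  doi = {10.1038/s41409-019-0679-x},
  url = {https://doi.org/10.1038/s41409-019-0679-x},
  year = {2019},
  month = oct,
  publisher = {Springer Science and Business Media {LLC}},
  volume = {55},
  number = {4},
  pages = {675--680},
  author = {J. Gauthier and Q. V. Wu and T. A. Gooley},
  title = {Cubic splines to model relationships between continuous variables and outcomes: a guide for clinicians},
  journal = {Bone Marrow Transplantation}
}

@article{Allen2019,
  doi = {10.1007/s11625-019-00711-x},
  url = {https://doi.org/10.1007/s11625-019-00711-x},
  year = {2019},
  month = jul,
  publisher = {Springer Science and Business Media {LLC}},
  volume = {15},
  number = {2},
  pages = {521--538},
  author = {Cameron Allen and Michael Reid and John Thwaites and Rod Glover and Tahl Kestin},
  title = {Assessing national progress and priorities for the Sustainable Development Goals ({SDGs}): experience from Australia},
  journal = {Sustainability Science}
}

@article{Vinuesa2020,
  doi = {10.1038/s41467-019-14108-y},
  url = {https://doi.org/10.1038/s41467-019-14108-y},
  year = {2020},
  month = jan,
  publisher = {Springer Science and Business Media {LLC}},
  volume = {11},
  number = {1},
  author = {Ricardo Vinuesa and Hossein Azizpour and Iolanda Leite and Madeline Balaam and Virginia Dignum and Sami Domisch and Anna Fell\"{a}nder and Simone Daniela Langhans and Max Tegmark and Francesco Fuso Nerini},
  title = {The role of artificial intelligence in achieving the Sustainable Development Goals},
  journal = {Nature Communications}
}

@misc{stanfordAndrewElectricity,
  author = {Shana Lynch},
  title = {{A}ndrew {N}g: {W}hy {A}{I} {I}s the {N}ew {E}lectricity},
  howpublished = {\url{https://www.gsb.stanford.edu/insights/andrew-ng-why-ai-new-electricity}},
  year = {2017},
  month = {march},
}

@article{Abram2016,
  doi = {10.1038/nature19082},
  url = {https://doi.org/10.1038/nature19082},
  year = {2016},
  month = aug,
  publisher = {Springer Science and Business Media {LLC}},
  volume = {536},
  number = {7617},
  pages = {411--418},
  author = {Nerilie J. Abram and Helen V. McGregor and Jessica E. Tierney and Michael N. Evans and Nicholas P. McKay and Darrell S. Kaufman},
  title = {Early onset of industrial-era warming across the oceans and continents},
  journal = {Nature}
}

@article{Burke1999,
  author={Burke,Lisa A. and Miller,Monica K.},
  year={1999},
  month={11},
  title={Taking the mystery out of intuitive decision making},
  journal={The Academy of Management Executive},
  volume={13},
  number={4},
  pages={91-99},
  isbn={10795545},
  url={http://ezproxy.lib.uts.edu.au/login?url=https://www.proquest.com/scholarly-journals/taking-mystery-out-intuitive-decision-making/docview/210531328/se-2?accountid=17095},
}

@article{hammond1998hidden,
  title={The hidden traps in decision making},
  author={Hammond, John S and Keeney, Ralph L and Raiffa, Howard},
  journal={Harvard business review},
  volume={76},
  number={5},
  pages={47--58},
  year={1998},
  publisher={Boston}
}

@misc{UN_SDG_URL,
  title = {{THE} 17 {GOALS} {\textbar} {Sustainable} {Development}},
  url = {https://sdgs.un.org/goals},
}

@ARTICLE{ArtoLaitinen2021,
  AUTHOR={Laitinen, Arto and Sahlgren, Otto},
  TITLE={AI Systems and Respect for Human Autonomy},
  JOURNAL={Frontiers in Artificial Intelligence},
  VOLUME={4},
  YEAR={2021},
  URL={https://www.frontiersin.org/article/10.3389/frai.2021.705164},
  DOI={10.3389/frai.2021.705164},
  ISSN={2624-8212},
}

@article{Zardiashvili2020,
  doi = {10.1007/s11023-019-09514-6},
  url = {https://doi.org/10.1007/s11023-019-09514-6},
  year = {2020},
  month = jan,
  publisher = {Springer Science and Business Media {LLC}},
  volume = {30},
  number = {1},
  pages = {121--143},
  author = {Lexo Zardiashvili and Eduard Fosch-Villaronga},
  title = {{\textquotedblleft}Oh, Dignity too?{\textquotedblright} Said the Robot: Human Dignity as the Basis for the Governance of Robotics},
  journal = {Minds and Machines}
}

@article{Boni2021,
  doi = {10.1177/17816858211059249},
  url = {https://doi.org/10.1177/17816858211059249},
  year = {2021},
  month = oct,
  publisher = {{SAGE} Publications},
  volume = {20},
  number = {2},
  pages = {182--190},
  author = {Micha{\l} Boni},
  title = {The ethical dimension of human{\textendash}artificial intelligence collaboration},
  journal = {European View}
}

@article{cf2015transforming,
  title={{A/RES/70/1} Transforming our world: the 2030 Agenda for Sustainable Development},
  author={{UN General Assembly (UNGA)}},
  journal={Resolut 25},
  publisher={{United Nations}},
  pages = {1--35},
  url = {https://www.un.org/en/development/desa/population/migration/generalassembly/docs/globalcompact/A_RES_70_1_E.pdf},
  year={2015}
}

@incollection{Stahl2021,
  doi = {10.1007/978-3-030-69978-9_3},
  url = {https://doi.org/10.1007/978-3-030-69978-9_3},
  year = {2021},
  publisher = {Springer International Publishing},
  pages = {19--33},
  author = {Bernd Carsten Stahl},
  title = {Concepts of Ethics and Their Application to {AI}},
  booktitle = {{SpringerBriefs} in Research and Innovation Governance}
}

@misc{au-anti-discrimination,
  author = {},
  title = {Australia’s anti-discrimination law | Attorney-General's Department},
  howpublished = {\url{https://www.ag.gov.au/rights-and-protections/human-rights-and-anti-discrimination/australias-anti-discrimination-law}},
  month = {},
  year = {},
  note = {(Accessed on 04/12/2022)}
}

@misc{AHRC-online,
  author = {},
  title = {Face the facts: Cultural Diversity | Australian Human Rights Commission},
  howpublished = {\url{https://humanrights.gov.au/our-work/education/face-facts-cultural-diversity}},
  month = {},
  year = {},
  note = {(Accessed on 04/12/2022)}
}

@article{SEPage2007,
  ISSN = {15589080, 19434529},
  URL = {http://www.jstor.org/stable/27747407},
  author = {Scott E. Page},
  journal = {Academy of Management Perspectives},
  number = {4},
  pages = {6--20},
  publisher = {Academy of Management},
  title = {{Making the Difference: Applying a Logic of Diversity}},
  urldate = {2022-04-12},
  volume = {21},
  year = {2007}
}

@ARTICLE{Baccarelli2017,
  author={Baccarelli, Enzo and Naranjo, Paola G. Vinueza and Scarpiniti, Michele and Shojafar, Mohammad and Abawajy, Jemal H.},
  journal={IEEE Access},
  title={Fog of Everything: Energy-Efficient Networked Computing Architectures, Research Challenges, and a Case Study},
  year={2017},
  volume={5},
  number={},
  pages={9882-9910},
  doi={10.1109/ACCESS.2017.2702013}
}

@misc{PwC_AI_2017,
  title={{PWC's Global Artificial Intelligence Study: Sizing the Prize}},
  url={https://www.pwc.com/gx/en/issues/data-and-analytics/publications/artificial-intelligence-study.html},
  author={PwC},
  year={2017}
}

@misc{Gartner2018,
  title={{Gartner Says Nearly Half of CIOs Are Planning to Deploy Artificial Intelligence}},
  url={https://www.gartner.com/en/newsroom/press-releases/2018-02-13-gartner-says-nearly-half-of-cios-are-planning-to-deploy-artificial-intelligence},
  author={Gartner},
  year={2018}
}

@article{Floridi2019,
  doi = {10.1038/s42256-019-0055-y},
  url = {https://doi.org/10.1038/s42256-019-0055-y},
  year = {2019},
  month = may,
  publisher = {Springer Science and Business Media {LLC}},
  volume = {1},
  number = {6},
  pages = {261--262},
  author = {Luciano Floridi},
  title = {Establishing the rules for building trustworthy {AI}},
  journal = {Nature Machine Intelligence}
}

@article{Perc2019,
  doi = {10.1057/s41599-019-0278-x},
  url = {https://doi.org/10.1057/s41599-019-0278-x},
  year = {2019},
  month = jun,
  publisher = {Springer Science and Business Media {LLC}},
  volume = {5},
  number = {1},
  author = {Matja{\v{z}} Perc and Mahmut Ozer and Janja Hojnik},
  title = {Social and juristic challenges of artificial intelligence},
  journal = {Palgrave Communications}
}

@incollection{Nagabhushan2021,
  doi = {10.1007/978-3-030-93620-4_19},
  url = {https://doi.org/10.1007/978-3-030-93620-4_19},
  year = {2021},
  publisher = {Springer International Publishing},
  pages = {261--275},
  author = {P. Nagabhushan and Sanjay Kumar Sonbhadra and Narinder Singh Punn and Sonali Agarwal},
  title = {Towards Machine Learning to~Machine Wisdom: A Potential Quest},
  booktitle = {Big Data Analytics}
}

@misc{mgsAicd2022,
  title = {{Keeping AI honest}},
  url = {https://aicd.companydirectors.com.au/membership/company-director-magazine/2022-back-editions/march/ai-ethics},
  author = {Samarawickrama, Mahendra},
  year = {2022},
  month = {March},
  pages = {52--53},
  volume = {38},
  number = {2},
  journal = {Company Director},
  publisher = {Australian Institute of Company Directors (AICD)}
}

@misc{mgsGaiei2022,
  title = {{Global Trends in AI 2022: Food for thought from GAIEI experts}},
  url = {https://globalethics.ai/global-trends-in-ai-2022-food-for-thought-from-gaiei-experts/},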

75 month = jul,

76 publisher = {Springer Science and Business Media {LLC}},

77 volume = {15},

78 number = {2},

79 pages = {521--538},

80 author = {Cameron Allen and Michael Reid and John Thwaites and Rod Glover and Tahl Kestin},

81 title = {Assessing national progress and priorities for the Sustainable Development Goals ({SDGs}): experience from Australia},

82 journal = {Sustainability Science}

83}

84

85@article{Vinuesa2020,

86 doi = {10.1038/s41467-019-14108-y},

87 url = {https://doi.org/10.1038/s41467-019-14108-y},

88 year = {2020},

89 month = jan,

90 publisher = {Springer Science and Business Media {LLC}},

91 volume = {11},

92 number = {1},

93 author = {Ricardo Vinuesa and Hossein Azizpour and Iolanda Leite and Madeline Balaam and Virginia Dignum and Sami Domisch and Anna Fell\"{a}nder and Simone Daniela Langhans and Max Tegmark and Francesco Fuso Nerini},

94 title = {The role of artificial intelligence in achieving the Sustainable Development Goals},

95 journal = {Nature Communications}

96}

97

98@misc{stanfordAndrewElectricity,

99 author = {Shana Lynch},

100 title = {{A}ndrew {N}g: {W}hy {A}{I} {I}s the {N}ew {E}lectricity},

101 howpublished = {\url{https://www.gsb.stanford.edu/insights/andrew-ng-why-ai-new-electricity}},

102 year = {2017},

103 month = {march},

104}

105

106@article{Abram2016,

107 doi = {10.1038/nature19082},

108 url = {https://doi.org/10.1038/nature19082},

109 year = {2016},

110 month = aug,

111 publisher = {Springer Science and Business Media {LLC}},

112 volume = {536},

113 number = {7617},

114 pages = {411--418},

115 author = {Nerilie J. Abram and and Helen V. McGregor and Jessica E. Tierney and Michael N. Evans and Nicholas P. McKay and Darrell S. Kaufman},

116 title = {Early onset of industrial-era warming across the oceans and continents},

117 journal = {Nature}

118}

119

120@article{Burke1999,

121 author={Burke,Lisa A. and Miller,Monica K.},

122 year={1999},

123 month={11},

124 title={Taking the mystery out of intuitive decision making},

125 journal={The Academy of Management Executive},

126 volume={13},

127 number={4},

128 pages={91-99},

129 isbn={10795545},

130 url={http://ezproxy.lib.uts.edu.au/login?url=https://www.proquest.com/scholarly-journals/taking-mystery-out-intuitive-decision-making/docview/210531328/se-2?accountid=17095},

131}

132

133@article{hammond1998hidden,

134 title={The hidden traps in decision making},

135 author={Hammond, John S and Keeney, Ralph L and Raiffa, Howard},

136 journal={Harvard business review},

137 volume={76},

138 number={5},

139 pages={47--58},

140 year={1998},

141 publisher={Boston}

142}

143

144@misc{UN_SDG_URL,

145 title = {{THE} 17 {GOALS} {\textbar} {Sustainable} {Development}},

146 url = {https://sdgs.un.org/goals},

147}

148

149@ARTICLE{ArtoLaitinen2021,

150AUTHOR={Laitinen, Arto and Sahlgren, Otto},

151TITLE={AI Systems and Respect for Human Autonomy},

152JOURNAL={Frontiers in Artificial Intelligence},

153VOLUME={4},

154YEAR={2021},

155URL={https://www.frontiersin.org/article/10.3389/frai.2021.705164},

156DOI={10.3389/frai.2021.705164},

157ISSN={2624-8212},

158}

159

160@article{Zardiashvili2020,

161 doi = {10.1007/s11023-019-09514-6},

162 url = {https://doi.org/10.1007/s11023-019-09514-6},

163 year = {2020},

164 month = jan,

165 publisher = {Springer Science and Business Media {LLC}},

166 volume = {30},

167 number = {1},

168 pages = {121--143},

169 author = {Lexo Zardiashvili and Eduard Fosch-Villaronga},

170 title = {{\textquotedblleft}Oh, Dignity too?{\textquotedblright} Said the Robot: Human Dignity as the Basis for the Governance of Robotics},

171 journal = {Minds and Machines}

172}

173

174@article{Boni2021,

175 doi = {10.1177/17816858211059249},

176 url = {https://doi.org/10.1177/17816858211059249},

177 year = {2021},

178 month = oct,

179 publisher = {{SAGE} Publications},

180 volume = {20},

181 number = {2},

182 pages = {182--190},

183 author = {Micha{\l} Boni},

184 title = {The ethical dimension of human{\textendash}artificial intelligence collaboration},

185 journal = {European View}

186}

187

188@article{cf2015transforming,

189 title={{A/RES/70/1} Transforming our world: the 2030 Agenda for Sustainable Development},

190 author={{UN General Assembly (UNGA)}},

191 journal={Resolut 25},

192 publisher={{United Nations}},

193 pages = {1--35},

194 url = {https://www.un.org/en/development/desa/population/migration/generalassembly/docs/globalcompact/A_RES_70_1_E.pdf},

195 year={2015}

196}

197

198@incollection{Stahl2021,

199 doi = {10.1007/978-3-030-69978-9_3},

200 url = {https://doi.org/10.1007/978-3-030-69978-9_3},

201 year = {2021},

202 publisher = {Springer International Publishing},

203 pages = {19--33},

204 author = {Bernd Carsten Stahl},

205 title = {Concepts of Ethics and Their Application to {AI}},

206 booktitle = {{SpringerBriefs} in Research and Innovation Governance}

207}

208

209@misc{au-anti-discrimination,

210author = {},

211title = {Australia’s anti-discrimination law | Attorney-General's Department},

212howpublished = {\url{https://www.ag.gov.au/rights-and-protections/human-rights-and-anti-discrimination/australias-anti-discrimination-law}},

213month = {},

214year = {},

215note = {(Accessed on 04/12/2022)}

216}

217

218@misc{AHRC-online,

219author = {},

220title = {Face the facts: Cultural Diversity | Australian Human Rights Commission},

221howpublished = {\url{https://humanrights.gov.au/our-work/education/face-facts-cultural-diversity}},

222month = {},

223year = {},

224note = {(Accessed on 04/12/2022)}

225}

226

227@article{SEPage2007,

228 ISSN = {15589080, 19434529},

229 URL = {http://www.jstor.org/stable/27747407},

230 author = {Scott E. Page},

231 journal = {Academy of Management Perspectives},

232 number = {4},

233 pages = {6--20},

234 publisher = {Academy of Management},

235 title = {{Making the Difference: Applying a Logic of Diversity}},

236 urldate = {2022-04-12},

237 volume = {21},

238 year = {2007}

239}

240

241@ARTICLE{Baccarelli2017,

242 author={Baccarelli, Enzo and Naranjo, Paola G. Vinueza and Scarpiniti, Michele and Shojafar, Mohammad and Abawajy, Jemal H.},

243 journal={IEEE Access},

244 title={Fog of Everything: Energy-Efficient Networked Computing Architectures, Research Challenges, and a Case Study},

245 year={2017},

246 volume={5},

247 number={},

248 pages={9882-9910},

249 doi={10.1109/ACCESS.2017.2702013}

250}

251

252@misc{PwC_AI_2017,

253 title={{PWC's Global Artificial Intelligence Study: Sizing the Prize}}, url={https://www.pwc.com/gx/en/issues/data-and-analytics/publications/artificial-intelligence-study.html},

254 author={PwC},

255 year={2017}

256}

257

@misc{Gartner2018,
  title={{Gartner Says Nearly Half of CIOs Are Planning to Deploy Artificial Intelligence}},
  url={https://www.gartner.com/en/newsroom/press-releases/2018-02-13-gartner-says-nearly-half-of-cios-are-planning-to-deploy-artificial-intelligence},
  author={Gartner},
  year={2018}
}

@article{Floridi2019,
  doi = {10.1038/s42256-019-0055-y},
  url = {https://doi.org/10.1038/s42256-019-0055-y},
  year = {2019},
  month = may,
  publisher = {Springer Science and Business Media {LLC}},
  volume = {1},
  number = {6},
  pages = {261--262},
  author = {Luciano Floridi},
  title = {Establishing the rules for building trustworthy {AI}},
  journal = {Nature Machine Intelligence}
}

@article{Perc2019,
  doi = {10.1057/s41599-019-0278-x},
  url = {https://doi.org/10.1057/s41599-019-0278-x},
  year = {2019},
  month = jun,
  publisher = {Springer Science and Business Media {LLC}},
  volume = {5},
  number = {1},
  author = {Matja{\v{z}} Perc and Mahmut Ozer and Janja Hojnik},
  title = {Social and juristic challenges of artificial intelligence},
  journal = {Palgrave Communications}
}

@incollection{Nagabhushan2021,
  doi = {10.1007/978-3-030-93620-4_19},
  url = {https://doi.org/10.1007/978-3-030-93620-4_19},
  year = {2021},
  publisher = {Springer International Publishing},
  pages = {261--275},
  author = {P. Nagabhushan and Sanjay Kumar Sonbhadra and Narinder Singh Punn and Sonali Agarwal},
  title = {Towards Machine Learning to~Machine Wisdom: A Potential Quest},
  booktitle = {Big Data Analytics}
}

@misc{mgsAicd2022,
  title = {{Keeping AI honest}},
  url = {https://aicd.companydirectors.com.au/membership/company-director-magazine/2022-back-editions/march/ai-ethics},
  author = {Samarawickrama, Mahendra},
  year = {2022},
  month = {March},
  pages = {52--53},
  volume = {38},
  number = {2},
  journal = {Company Director},
  publisher = {Australian Institute of Company Directors (AICD)}
}

@misc{mgsGaiei2022,
  title = {{Global Trends in AI 2022: Food for thought from GAIEI experts}},
  url = {https://globalethics.ai/global-trends-in-ai-2022-food-for-thought-from-gaiei-experts/},
  author = {{E. R. Goffi, A. Momcilovic \textit{et al.}}},
  year = {2022},
  publisher = {Global AI Ethics Institute}
}

@misc{mgsAfr2022,
  title = {{Award-winner warns of the failures of artificial intelligence}},
  url = {https://www.afr.com/technology/award-winner-warns-of-the-failures-of-artificial-intelligence-20220313-p5a4b3},
  author = {Mark Eggleton},
  year = {2022},
  journal = {Special Report of Reimagination 2022},
  pages = {S4--S5},
  publisher = {The Australian Financial Review (AFR)}
}

@misc{mgsUn2021,
  title = {{KITE: An Abstraction Framework for Reducing Complexity in AI Governance}},
  url = {https://unstats.un.org/unsd/undataforum/blog/KITE-an-abstraction-framework-for-reducing-complexity-in-ai-governance/},
  author = {Samarawickrama, Mahendra},
  year = {2021},
  month = {October},
  journal = {United Nations World Data Forum},
  publisher = {United Nations}
}

@misc{mgsHyper2021,
  title = {{Social justice and sustainability by leveraging data science and AI: Interview with Dr. Mahendra Samarawickrama}},
  url = {https://hyperight.com/social-justice-and-sustainability-by-leveraging-data-science-and-ai-interview-with-dr-mahendra-samarawickrama/},
  author = {Samarawickrama, Mahendra},
  year = {2021},
  publisher = {Hyperight}
}

Copyright Notice

Copyright © 2022 Mahendra Samarawickrama

Copyright © 2023 AIModels.org (Remix)

ISBN: 978-0-6454693-0-1

This report was submitted to the Australian Department of the Prime Minister and Cabinet as part of its consultation process on the regulation of artificial intelligence (AI) and automated decision making.

Third party copyright

Wherever a third party holds copyright in this material, the copyright remains with that party. Their permission may be required to use the material. Please contact them directly.

Attribution

This publication should be attributed as follows:

M. Samarawickrama, “AI Governance and Ethics Framework for Sustainable AI and Sustainability,” Submission in response to the Department of the Prime Minister and Cabinet issues paper Positioning Australia as a leader in digital economy regulation - Automated Decision Making and AI Regulation, Apr. 2022, ISBN: 978-0-6454693-0-1.

AI Governance and Ethics Framework for Sustainable AI and Sustainability

Dr Mahendra Samarawickrama (GAICD, MBA, SMIEEE, ACS(CP))