
Papers is Alpha. This content is part of an effort to make research more accessible, and (most likely) has lost some details from the original. You can find the original paper here.

Introduction

With the rapid growth of the AI industry, the need for AI and AI ethics expertise has also grown. Companies and governmental organizations are paying more attention to the impact AI can have on society and to how AI systems should be designed and deployed responsibly. From 2015 onward, many international actors have proposed AI ethics principles, in-depth auditing toolkits, checklists, codebases, standards, and regulations. Several communities of research and practice have emerged, such as FATE (Fairness, Accountability, Transparency, and Ethics), responsible AI, AI ethics, AI safety, and AI alignment. This general movement towards the responsible development of AI has created new roles in the industry, which this paper refers to as responsible AI practitioners. The primary mandate of these roles is understanding, analyzing, and addressing the ethical and social implications of AI systems within a business context. The emergence of these roles challenges technology companies to shape the corresponding roles and teams: leaders in AI-related organizations need to identify, recruit, and train appropriate candidates. As the demand to fill such roles continues to increase, educators need effective means to train talent with the right set of skills.

Recently, scholars have examined the common roles that responsible AI practitioners serve, explored the challenges they face, and criticized the problematic nature of the accountability mechanisms associated with these roles. Others highlight the myriad practical challenges of developing a comprehensive training program to fill such roles. However, there is a lack of empirical research on the types of roles, corresponding responsibilities, and qualifications that responsible AI practitioners have in the industry. To address these gaps, we examine the following research questions:

- RQ1: What are the types of roles and responsibilities that responsible AI practitioners hold in the industry?

- RQ2: What are the skills, qualifications, and interpersonal qualities necessary for holding such roles?

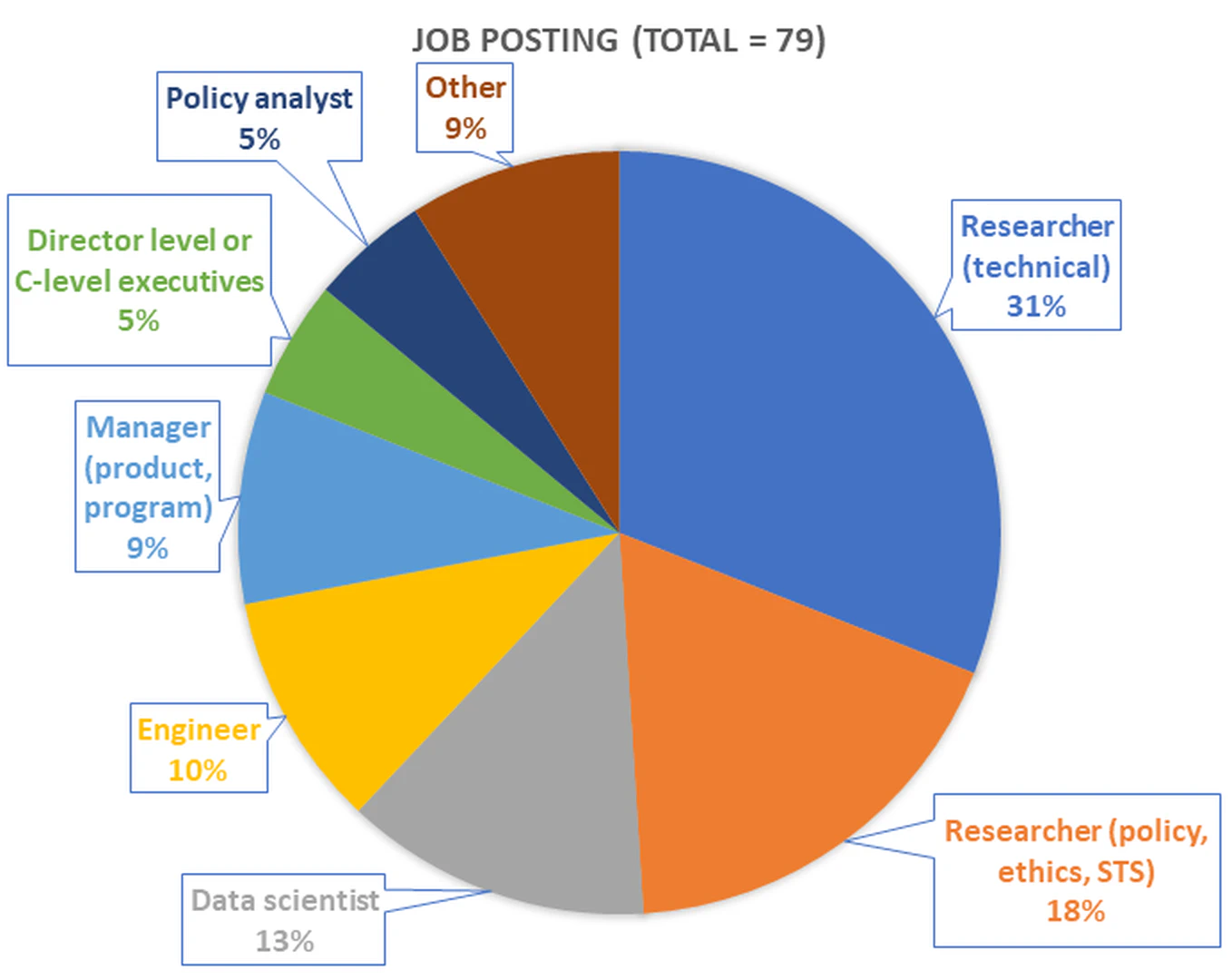

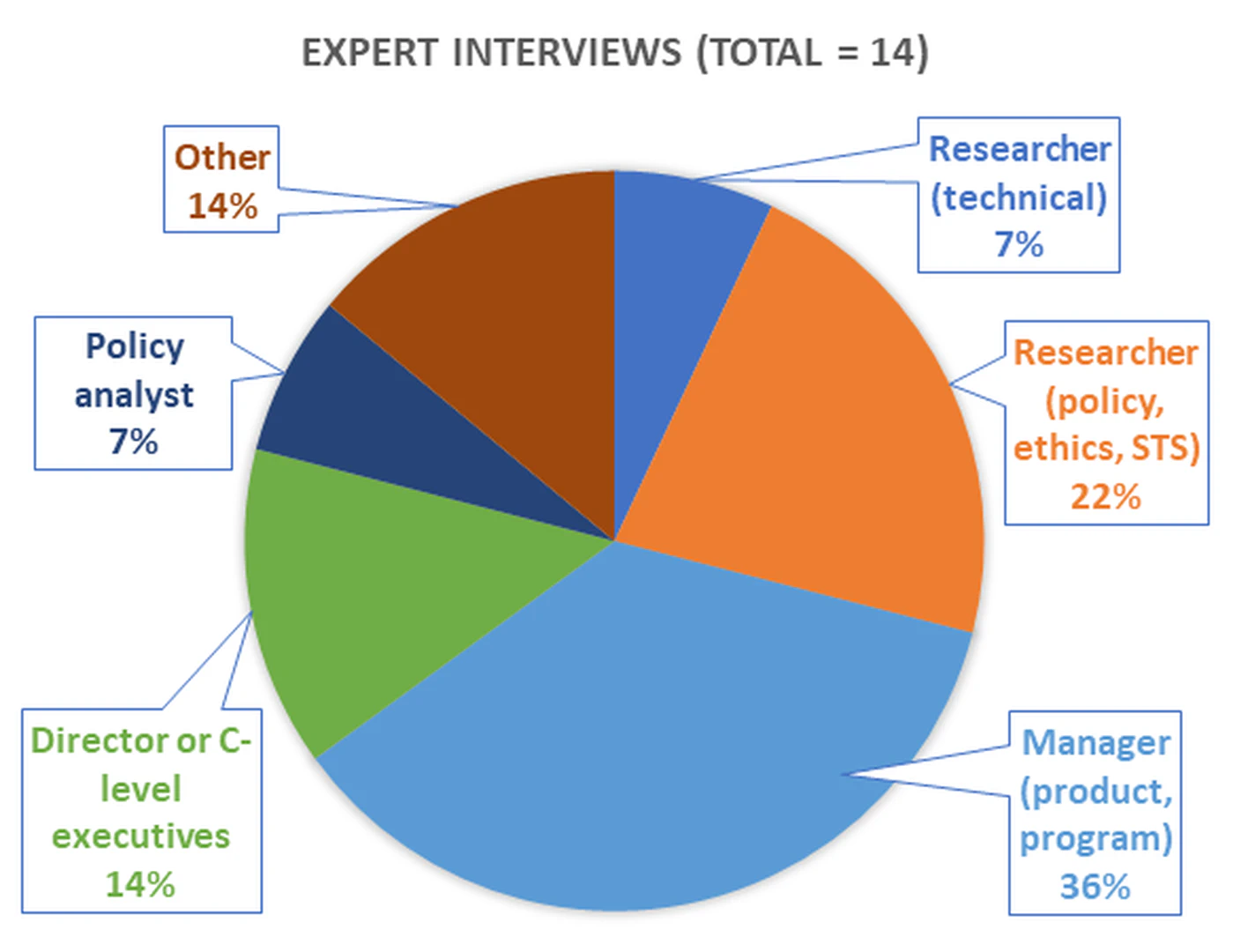

We address these questions through a two-part qualitative study. We examined 79 job postings published between March 2020 and March 2022, and we conducted expert interviews with 14 practitioners who currently hold these roles in the industry. Drawing on the fields of competency-based recruitment and curriculum development, we propose an ontology of occupations and an accompanying list of competencies for each occupation.

As illustrated in the teaser figure, our competency framework outlines seven occupations that responsible AI practitioners hold in the industry: researcher (of two kinds), data scientist, engineer, director/executive, manager, and policy analyst. For each occupation, the ontology includes a list of responsibilities, skills, knowledge, attitudes, and qualifications. We find that the roles and responsibilities held by responsible AI practitioners are wide-ranging. Even so, these practitioners all have interdisciplinary backgrounds and thrive when working with people from different disciplines. We discuss how educators and employers can use this competency framework to develop new curricula and programs and to recruit adequately for the rapidly changing field of responsible AI development.

Background

With increased media reporting and regulatory requirements around the social and ethical issues of AI-based products and services, the responsible AI practitioner has emerged as an in-demand position in the technology industry. In this section, we provide an overview of debates about these roles and of existing educational programs that aim to train future responsible AI practitioners. We discuss how existing competency frameworks treat the role of a responsible AI practitioner and highlight the gaps we address in this work.

Emergence of the responsible AI practitioners

Considering the nascency of AI ethics as a domain, only a few scholars have characterized occupations held by responsible AI practitioners. For instance, Gambelin frames the role of an AI ethicist as “an individual with a robust knowledge of ethics” who has the responsibility and the ability to “apply such abstract concepts (i.e. ethical theories) to concrete situations” for the AI system. According to Gambelin, an AI ethicist in the industry also needs to be aware of existing policy work, have experience in business management, and possess excellent communication skills. Gambelin identifies bravery as the most important characteristic of an AI ethicist as they often need to “shoulder responsibility” for potential negative impacts of AI in the absence of regulation.

Moss and Metcalf investigated the practices and challenges of responsible AI practitioners in Silicon Valley. They described them as “ethics owners” who are responsible for “handling challenging ethical dilemmas with tools of tech management and translating public pressure into new corporate practices”. Echoing Moss and Metcalf’s seminal work on AI industry practices, a growing body of empirical work highlights the challenges responsible AI practitioners face in their day-to-day tasks. These include misaligned incentives, nascent organizational cultures, a shortage of internal skills and capability, and the complexity of AI ethics issues. Furthermore, often only large technology companies have the necessary resources to hire responsible AI practitioners. Small and medium-sized companies struggle to access such expertise and rely on openly available information or hire external consultants or auditors as needed. This has given rise to AI ethics as a consulting and auditing service.

While challenges in operationalizing responsible AI practices are an active area of research, there is a gap in understanding the role and necessary competencies of responsible AI practitioners in the industry.

Qualifications to be a responsible AI practitioner

The emergence of auditors in the field of responsible AI underscores the need for formal training and certification for such roles in the industry. This raises a few practical questions: Who is qualified to take these roles? How should these individuals be trained? Do existing computer science, engineering, and social science curricula prepare individuals for such roles?

Educators have responded to this need by developing a range of educational programs and curricula. In a survey of the curricula of university courses focused on AI ethics, Garrett et al. emphasize that such topics should be formally integrated into the learning objectives of current and new courses. However, as Peterson et al. describe, discussing social and ethical issues in computer science courses remains a challenge. As a solution, they propose pedagogical approaches that foster students’ emotional engagement in the classroom.

Recognizing the importance of interdisciplinary approaches in AI ethics, Raji et al. argue that computer science is currently valued significantly over the liberal arts, even in research on the fairness of machine learning systems. They further state that a culture of perceived superiority in computer science and engineering has created a “new figure of a socio-technical expert”, titled “Ethics Unicorns”: full-stack developers who can solve the challenging problems of integrating technology into society.

This overemphasis on computer science expertise and the trend toward integrating ethics content in existing technical curricula may be problematic if these efforts do not match the skills and disciplinary needs of the industry. It raises questions about whether the educational backgrounds of responsible AI practitioners today are indeed in computer science. In this work, we inform the curriculum development efforts across a diverse range of disciplinary areas by understanding these roles in the industry and outlining the attributes, qualifications, and skills necessary for holding them.

Competency frameworks in AI and AI ethics

Competency frameworks are useful tools for human resource management (e.g., recruitment, performance improvement) and educational development (e.g., new training programs and curriculum development in universities). Competency frameworks highlight the different competencies required for a profession and link these competencies to skills and knowledge. According to Diana Kramer, “competencies are skills, knowledge and behaviours that individuals need to possess to be successful today and in the future”. This definition frames our discussion of competency in this paper.

Competency frameworks help governmental and non-governmental organizations keep track of the types of skills their employees or the general public need in the short and long term. Educators use these frameworks to update existing curricula and develop appropriate learning objectives. Meanwhile, business leaders and human resource professionals use these frameworks in their recruitment practices.

Existing competency frameworks do not sufficiently represent the roles and competencies of a responsible AI practitioner. For example, O*NET is the United States’ national program for collecting and distributing information about occupations. O*NET-SOC is a taxonomy that defines 923 occupations, each linked to a list of competencies. Searching the taxonomy for “ethics”, “machine learning”, “data”, “security”, and “privacy” returns minimal results, such as “information security analysis”, “data scientist”, and “database architect”. The dataset does not include occupation titles such as machine learning engineer/researcher or data/AI ethics manager.

ESCO (European Skills, Competences, Qualifications and Occupations) is the European, multilingual equivalent of the US O*NET. ESCO contains 3,008 occupations and 13,890 skills. Searching for the above terms returns more relevant results, such as computer vision engineer, ICT intelligent system designer, policy manager, corporate social responsibility manager, ethical hacker, data protection officer, chief data officer, and ICT security manager. However, emerging occupations relevant to AI and AI ethics are not well represented in these established, Western competency frameworks.

In response, a number of new AI competency frameworks have recently been developed. One driver of these efforts is the series of projects funded by the Pôle montréalais d’enseignement supérieur en intelligence artificielle (PIA). PIA is a multi-institutional initiative in Montreal, Canada, that aims to align educational programs with the needs of the AI industry. Six projects related to AI competency frameworks were funded, including the work presented in this paper. These projects produced an overarching AI competency framework for postsecondary education that includes ethical competencies. They also produced a competency framework specific to AI ethics skills training. Bruneault et al., in particular, created a list of AI ethics competencies based on interviews with university instructors and professors already teaching courses related to AI ethics across North America.

Our work complements these collective efforts by providing a framework that represents the needs of the industry expressed in recent AI ethics-related job postings and the realities of the jobs AI ethics practitioners hold in nonprofit and for-profit corporations today.

Methodology

Practitioners and scholars in different domains typically create competency frameworks using the process most appropriate for their needs. However, many follow a version of the process outlined by Sanghi, which has five steps: 1) define the purpose and performance objective of a position, 2) identify the competencies and behaviors that predict and describe superior performance in the job, 3) validate the selected competencies, 4) implement/integrate the competencies, and 5) update the competencies.

In this work, we focus on answering the questions raised in the first two steps: the objectives of responsible AI practitioner roles, and the skills and qualities required to perform well in these positions. We take a two-pronged approach to understanding the nature of emerging roles under the broad category of responsible AI practitioners in the industry. Firstly, we reviewed and analyzed job postings related to our working definition of a responsible AI practitioner. Secondly, we interviewed individuals who are responsible AI practitioners in the industry today. We then synthesized the data collected from these two sources through thematic analyses. We present our proposed competency framework in the section “Proposed competency framework for responsible AI practitioners”. This study was approved by the Research Ethics Board of our academic institution.

AI Ethicist Job Postings Review

We collected 94 publicly available job postings over the period of March 2020 to March 2022. Of these, 79 met our inclusion criteria and were analyzed. The postings covered a range of job titles, including researcher, manager, and analyst. The following sections describe how we collected, selected, and analyzed these job postings, which led to the development of the ontology of responsible AI practitioner roles and skills.

Collection of job postings

To collect “AI ethicist” job postings, we searched and scraped three job-search websites (LinkedIn, indeed.com, and SimplyHired) every two months from March 2020 to March 2022. We used the following search terms: “AI ethics lead”, “Responsible AI lead”, “AI ethics researcher”, “data OR AI ethicist”, and “fairness OR transparency researcher/engineer”. Because the searches returned only a few relevant job postings, we also collected job postings that came through referrals. These included mailing lists and sites such as FATML, 80000hours.org, and roboticsworldwide.

After screening all of the resulting job postings against the inclusion criteria, we retained a total of 79 job postings for thematic analysis. We included job postings that met three conditions: they were published within our data collection period, they were situated in the industry (including not-for-profit organizations), and they outlined responsibilities with regard to implementing AI ethics practices in a given sector.[The table outlining the inclusion and exclusion criteria is in the supplemental material.]
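The three inclusion criteria above can be read as a simple screening rule. The sketch below is a minimal, hypothetical illustration of that rule. The `JobPosting` fields, the sector labels, and the exact day boundaries of the collection window are our assumptions. Whether a posting describes AI ethics responsibilities is modeled as a flag set by a human reviewer, because the paper does not describe an automated filter.

```python
from dataclasses import dataclass
from datetime import date

# Assumed day boundaries for "March 2020 to March 2022"; the paper gives only months.
COLLECTION_START = date(2020, 3, 1)
COLLECTION_END = date(2022, 3, 31)

# "Situated in the industry (including not-for-profit organizations)".
INDUSTRY_SECTORS = {"for-profit", "nonprofit"}


@dataclass
class JobPosting:
    # Hypothetical record fields; the paper does not publish a data schema.
    title: str
    published: date
    sector: str  # e.g. "for-profit", "nonprofit", "academic"
    mentions_ai_ethics_practice: bool  # judged by a human reviewer


def meets_inclusion_criteria(p: JobPosting) -> bool:
    """Return True only if a posting satisfies all three inclusion criteria."""
    in_window = COLLECTION_START <= p.published <= COLLECTION_END
    in_industry = p.sector in INDUSTRY_SECTORS
    return in_window and in_industry and p.mentions_ai_ethics_practice


# Invented example postings, for illustration only.
postings = [
    JobPosting("Responsible AI Lead", date(2021, 6, 14), "for-profit", True),
    JobPosting("AI Ethics Researcher", date(2021, 9, 2), "academic", True),
    JobPosting("Data Scientist", date(2019, 11, 5), "for-profit", True),
    JobPosting("Fairness Engineer", date(2022, 1, 20), "nonprofit", True),
    JobPosting("ML Engineer", date(2021, 2, 8), "for-profit", False),
]
included = [p.title for p in postings if meets_inclusion_criteria(p)]
print(included)  # ['Responsible AI Lead', 'Fairness Engineer']
```

Writing the criteria as one conjunction makes explicit that a posting must pass all three checks. In the study itself, the third check is a qualitative judgment rather than a stored field.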

Analysis

Using Braun and Clarke’s thematic analysis methodology, we analyzed the job postings with the coding scheme illustrated in the job-posting coding table. The lead author created this coding scheme after reviewing all of the postings. The scheme was also informed by categories frequently used across the competency frameworks discussed in the Background section.

The codes were generally split into four key elements: the company environment, responsibilities in the given occupation, qualifications, and skills. The codes of “company environment” and “qualifications - interdisciplinarity” are unique to this coding scheme due to their prevalence in the postings’ content.

After the first draft of the coding scheme was developed, a student researcher was trained to use it and coded 10% of the job postings. The student researcher’s coding was consistent with the lead researcher’s coding of the same set of postings. Discussion between the lead and student researchers helped clarify the description and examples for each code, although no new codes were added to the scheme. The lead author then updated the coding scheme and coded the entire set of postings using the revised scheme.
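The text reports only that the two coders’ analyses were “consistent”. It names no selection procedure or agreement statistic. As a hypothetical illustration, the sketch below draws a reproducible 10% subset of posting IDs and computes simple percent agreement over the four top-level code categories named above. The seeded random selection, the function names, and the use of percent agreement are our assumptions, not the authors’ method.

```python
import math
import random

# The four top-level categories named in the text; the real scheme has finer sub-codes
# (e.g. "qualifications - interdisciplinarity").
SCHEME = ["company environment", "responsibilities", "qualifications", "skills"]


def draw_double_coding_subset(posting_ids, fraction=0.10, seed=0):
    """Randomly pick ceil(fraction * N) posting ids for a second coder.

    Seeded random selection is an assumption; the paper does not say how
    its 10% subset was chosen.
    """
    ids = list(posting_ids)
    k = math.ceil(fraction * len(ids))
    rng = random.Random(seed)  # fixed seed so the subset is reproducible
    return sorted(rng.sample(ids, k))


def percent_agreement(codes_a, codes_b, scheme):
    """Fraction of (posting, code) decisions on which both coders agree.

    codes_a and codes_b map a posting id to the set of codes that coder applied.
    A decision agrees when both coders applied the code or both left it out.
    """
    decisions = agreements = 0
    for pid in codes_a:
        for code in scheme:
            decisions += 1
            if (code in codes_a[pid]) == (code in codes_b[pid]):
                agreements += 1
    return agreements / decisions


subset = draw_double_coding_subset(range(1, 80))  # 79 postings -> 8 ids
lead = {1: {"responsibilities", "skills"}, 2: {"qualifications"}}
student = {1: {"responsibilities", "skills"}, 2: {"qualifications", "skills"}}
print(len(subset), percent_agreement(lead, student, SCHEME))  # 8 0.875
```

With 79 postings, a 10% subset rounds up to 8 postings. Percent agreement is easy to read, but it does not correct for agreement expected by chance. Chance-corrected statistics are therefore common when a formal reliability figure is needed.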

Expert interviews

The job postings provide a high-level view of the skills and competencies that recruiters require; however, they may not reflect the reality of these roles. Therefore, we conducted 14 interviews with experts who currently hold responsible AI practitioner positions in the industry. The interviews focused on understanding the responsibilities, qualifications, and skills necessary for these roles. Given this project’s focus on types of roles and skills, we did not collect any demographic information about the participants. Only a limited number of people hold these positions, so this also helped us maintain the participants’ anonymity.

Recruitment

We compiled a list of potential interview candidates through two channels. The first was referrals within the authors’ professional networks. The second was searches for people who currently hold these positions, using terms similar to those used for the job postings. We also considered people from the industry who had papers accepted at relevant conferences such as FAccT and AIES in 2020 and 2021. Eligible participants:

- worked for a minimum of three months in their role;

- held this position in the industry or worked mainly with industrial partners;

- held managerial, research, or technical positions focused on implementing responsible AI practices within the industry.

We did not interview researchers or professors at academic institutions. We only interviewed those holding positions at nonprofit and for-profit companies. For practical reasons, we used only English search terms to find interview participants. However, we did not limit recruitment to a particular geographical region, given the limited number of individuals holding these roles across the industry. We recruited and interviewed participants from June 2021 to February 2022.

Interview protocol

The primary researcher conducted all fourteen interviews. All of the interviews were 45 to 60 minutes in length. The interviewer first described the project and obtained the participant’s consent. The interview was semi-structured with ten questions focused on exploring the following four topics:[The detailed interview protocol is included in the supplementary material.]

- Background and current role

- Situating your work and projects in AI ethics

- Skills, knowledge, values

- Looking into the future

Data Analysis

The interviews were audio-recorded and transcribed, and the primary researcher then manually checked and corrected the transcriptions. The authors analyzed the interviews both deductively and inductively. First, the lead author applied the coding scheme derived from the job postings to the interview transcripts. In addition, the lead author conducted a reflexive thematic analysis to capture rich and nuanced details that the job-posting coding scheme did not represent. The results of the reflexive analysis were used to contextualize and improve the coding scheme. However, given the research objective addressed in this paper, no new codes were added. The lead author presented the coding scheme to the full research team, including a student researcher who had independently reviewed the data to identify general trends. The scheme was then iteratively revised and refined based on the team’s feedback.

Author reflexivity and limitations

We recognize that this research reflects our positionality and biases as academics in North America. Furthermore, all of the data we collected were in English and represented job postings and positions in companies situated in North America and Europe. We were not able to collect data on job postings and candidates representing existing efforts in Asia and the Global South. We also recognize that the roles in this field are continually shifting. This ontology is therefore only a snapshot of the roles and skills that responsible AI practitioners have and are recruited for today, and further iterations of such frameworks will be necessary as these roles evolve. Finally, this study examines the responsibilities, qualifications, and skills required of today’s practitioners independently of demographic factors (e.g., gender, age). We recognize the importance of representing a demographically diverse group of individuals and their experiences in qualitative research such as ours. Once responsible AI practice becomes a common occupation, future studies should include demographic factors as part of similar investigations.

Proposed competency framework for responsible AI practitioners

From our analysis, we developed a preliminary competency framework that captures seven classes of existing occupational roles and several emerging classes of occupations. The accompanying figures show how each occupation type was represented in the job postings and in the interviews. Three of the occupations require technical expertise (technical researcher, data scientist, and engineer). Two require policy expertise (the researcher in law, philosophy, and social sciences, and the policy analyst). The remaining two are managerial (manager and director). In the following sections, we provide a detailed description of the responsibilities, skills, qualifications, and qualities for each of these roles.

Researcher (technical)

The most common class of occupations found in the job postings was that of a researcher focused on technical aspects of fairness, explainability, safety, alignment, privacy and auditability of AI systems (24 job postings, 2 interviews). Employers represented in this dataset were looking to hire researchers at varying levels of seniority (assistant, associate and principal). The main responsibilities of these researchers are split into four main categories: conducting research, communicating their findings, working with other teams (internally and externally), and developing novel solutions for identified problems. As expected, research directions set by these researchers need to support company-specific needs, and there is an emphasis on communication between researchers and product, legal and executive teams.

Skills The researchers in this group need a mix of technical skills (e.g., software engineering and programming languages such as Python), research skills (e.g., analytical thinking and synthesis of complex ideas), and leadership skills (e.g., leading and guiding fellow researchers). The job postings emphasized all of these skills equally, while more senior positions placed additional emphasis on leadership skills. A senior researcher explained that they look for “different research skills” depending on the project. However, they generally look for “some background in machine learning, statistics, computer science or something of that nature” and hire candidates who have some “interdisciplinary background”. Both the postings and the interviews strongly emphasize good verbal and written communication skills. Participants highlighted the ability to publish in academic venues. Some also emphasized the ability to communicate with different audiences internally (e.g., product teams and executives) and externally (e.g., policy-makers and executives). A technical researcher emphasized the importance of “convincing stakeholders” and creating “strategic collaborations” by communicating with practitioners from “diverse” backgrounds.

Qualifications The job postings mainly aim to attract candidates who have a PhD in computer science or a related field. Only a few of the postings accept a master’s degree in these fields, whereas some do not specify a degree and focus mainly on the necessary skills and knowledge. The majority of postings place heavy emphasis on the required experience, and interview participants also emphasized the importance of experience. A research manager said that they are not necessarily looking for a “PhD in computer science”. Instead, they look for candidates with experience in “leading and executing a research agenda”, working with different people and teams, synthesizing and “communicating challenging concepts”, and practicing software engineering. Some postings highlight experience implementing AI ethics-related concepts, but this was often listed as a preferred rather than a required qualification. Similarly, the researchers we interviewed echoed the importance and value of a publication record in “Fairness, Accountability, Transparency, and Ethics (FATE) communities”. Examples include the ACM Conference on Fairness, Accountability, and Transparency (FAccT) and the AAAI/ACM Conference on AI, Ethics, and Society (AIES).

Interpersonal Qualities The most common attitude/value was the aptitude and interest to collaborate and work in an interdisciplinary environment. A researcher emphasized that current conversations are “engineering focused”. That researcher actively incorporates perspectives from social science and philosophy by collaborating with experts in these areas. The most desired value was “curiosity to learn about [responsible AI] problems”. Many participants highlighted other values and attitudes, such as “passion” for building safe and ethical AI systems, willingness to manage uncertainty and challenges, creativity, and resourcefulness.

Data scientist

The data scientist occupation is represented in 10 job postings in our dataset and in none of the interviews. The job postings seek to fill traditional data scientist roles with an added focus on examining responsible AI-related issues. The common responsibilities outlined for these positions are (a) collecting and pre-processing data and (b) developing, analyzing, and testing models, which are typical of existing data science roles. However, the job postings emphasize the position’s responsibility to test machine learning models for AI ethics concerns such as fairness and transparency. Data scientists who work in the responsible AI domain also have additional, non-conventional responsibilities. These include understanding and interpreting existing regulations, policies, and standards on the impact of AI systems, and testing systems for the elements covered in these policies. They also need to work with technical and non-technical stakeholders to communicate findings, build capacity around responsible AI concepts, and engage them as needed.

Skills The job postings put a heavy emphasis on advanced analytical skills and the ability to use programming languages such as R, Python, and SQL for basic data mining. The ability to learn independently in a new domain and master a complex codebase is also listed as a key skill. A few of the postings list project management and organizational skills, but this is not common. When it comes to the knowledge required, the focus shifts from the technical domain to an understanding of fields such as sociology, critical data studies, and AI regulation. Many postings highlight that candidates need to be familiar with concepts such as AI/ML auditing, algorithmic impact assessments, assessment of fairness in predictive models, explainability, robustness, and human-AI interaction. The postings also highlight technical knowledge, such as understanding transformer-based language models and developing logistic regression models. Lastly, the job postings outline the need for strong interpersonal, verbal, and written communication skills. However, experience publishing and presenting at academic venues is not mentioned.

Qualifications The majority of the job postings require a bachelor’s degree in a quantitative field such as data science or computer science and prefer higher degrees (master’s or Ph.D.). Companies are looking for candidates with experience in data science, software engineering, and working with large language models. They are also looking for experience putting responsible AI principles into practice, experience evaluating the ethics of algorithms, and basic familiarity with law and policy research. The ability and experience to translate AI ethics principles into practice are heavily emphasized throughout these job postings.

Interpersonal Qualities The job postings emphasize the ability to work with people from different backgrounds. However, these postings do not include a comprehensive list of values. A few postings mention key attitudes such as being a self-starter, working collaboratively to resolve conflict, and caring deeply about the data used to train ML models. Being flexible, innovative, curious, adaptive, and passionate about tackling real-world challenges are also sought-after values.

Engineer

The engineer occupation is represented in 8 of the job postings, and none of our interview participants belong to this category. The key responsibility of an engineer practicing AI ethics is to help establish a safety culture and system within an organization by developing technical tools. They are tasked with developing a workflow for modeling and testing for issues such as bias, explainability, safety, and alignment of AI systems. As part of this, engineers need to create codebases that can be used across the AI system development pipeline based on existing and evolving best practices.

Skills and Qualifications Job postings for engineers place significant emphasis on experience-based qualifications and skills. The companies represented in this dataset are looking for skills and experience in software development, dataset production pipelines, researching the fairness and safety implications of ML systems, and developing large language models. They are also looking for experience working in a fast-paced technology company. Based on these qualifications, the main skills are programming and AI/ML development. These need to be supported by knowledge of foundational concepts in AI/ML, fairness, explainability, system safety, and safety life cycle management. Lastly, most of the job descriptions do not place heavy emphasis on communication skills; only a few list excellent written and oral communication skills as a requirement.

Interpersonal Qualities In contrast to their lack of emphasis on communication skills, these postings focus on the attitudes and values of ideal candidates more than the postings for any other occupation category. These attitudes include being results-oriented, being willing to contribute as needed (even if not specified), and being keen to learn new concepts. The postings seek people who value working on challenging problems and care about the societal impact of their work.

Researcher (law, philosophy and social sciences)

The second most frequent category of postings belongs to researchers who focus on topics such as policy, sociotechnical issues, and governance (14 job postings, 3 interviews). We created a separate group for these positions because their responsibilities, skills, and qualities differ sufficiently from those of the technical researcher position. Candidates in this category have four main responsibilities. They conduct research and perform ethics or impact assessments of AI systems. They act as a liaison and translator between research, product, policy, and legal teams. They also advise internally and externally on matters related to policy, standards, and regulations. Two research focus areas come up in the job postings: testing and evaluating AI systems to inform policy, and researching existing policies and regulations and translating them into practice.

Skills The job postings highlight two distinct sets of skills for this group of researchers. First, these researchers require basic programming, advanced analytics, and data visualization skills, and a few positions highlighted the need for even more advanced ML and AI skills. Notably, despite these researchers’ focus on policy, governance, and sociotechnical issues, the postings still require them to have some data analytics skills. Second, these researchers need excellent facilitation, community-building, and stakeholder engagement skills. Both sets of skills need to be complemented by strong leadership and management skills. The job postings also heavily emphasize strong communication skills for this group. Beyond the conventional skills of presenting and publishing papers, these researchers need to work effectively across different functions and disciplines. Similarly, they need expertise in a variety of areas. They need a good understanding of “qualitative and quantitative research methods” and reliable knowledge of current and emerging “legal and regulatory frameworks and policies”. They also need to be “familiar with AI technology” and to have good knowledge of the practices, processes, design, and development of AI technology. As our expert interviewees highlighted, this vast range of expertise is often “very difficult to recruit” for.

Qualifications Just over half of the job postings list a Ph.D. in a relevant area as a requirement. The listed areas include human-computer interaction, cognitive psychology, experimental psychology, digital anthropology, law, policy, and quantitative social sciences. Two postings require only a bachelor’s or a master’s in the listed areas. As with the technical researcher occupation, some positions do not specify any educational requirements and focus only on experience and skills. Our expert interviewees in this category come from a range of educational backgrounds, including a master’s in sociotechnical systems, a law degree combined with a background in statistics, and a master’s in cognitive systems.

Besides research experience, companies are looking for experience in translating research into design, technology development, and policy. One researcher explained that they need to do a lot of “translational work” between the academic conversation and product teams in companies. A good candidate for this occupation would have “project management”, “change management”, “stakeholder engagement”, and “applied ethics” experience in a “fast-paced environment”. Not every job posting or interview mentions all four of these. However, some combination of them appears throughout the job posting data and participants’ responses.

Interpersonal Qualities

As strongly emphasized in both datasets, ideal candidates in this category need a “figure-it-out somehow” or “make it happen” attitude, as one participant explained. They are “driven by curiosity and passion towards” issues related to responsible AI development and are excited to engage with product teams. Participants noted that ideal candidates in these roles are “creative problem solvers” who can work in a “fast-changing environment”.

Policy analyst

The policy analyst occupation is the least represented in our data sources (1 expert interview, 4 job postings). However, given the consistent list of competencies, we decided to include it in the proposed framework. The role of a policy analyst is to understand, analyze, and implement a given policy within an organization. Policy analysts also need to engage with policymakers and regulators and provide feedback on existing policies.

Skills and Qualifications A policy analyst needs proven knowledge of the laws, policies, regulations, and precedents that apply to a given technology with respect to AI ethics-related issues. Moreover, all of the job postings highlight the importance of familiarity with AI technology. According to the job postings, a good candidate would have experience in interpreting policy and developing assessments for a given application. They also need to be skilled in management, team building, and mentorship, a finding that echoes remarks from the expert interview. Although none of the job postings specify an educational degree requirement, the expert we interviewed was a lawyer with a master’s in technology law.

Interpersonal Qualities The job postings in this category heavily emphasize values and attitudes. A good analyst needs sound judgment and outstanding personal integrity. They should care about, and be knowledgeable about, the impact of technology on society. They should also enjoy working on complex, multifaceted problems and be passionate about improving the governance of AI systems. The expert interviewee’s perspective closely matches these attributes. Participants elaborated that they needed to be “brave” and “step up to ask questions and challenge status quo consistently over a long time”. As expected, communication skills are considered critical for success. The expert interviewee strongly emphasized the importance of “networking as a key factor” in succeeding in their role.

Manager

We analyzed 7 management-related job postings and interviews with 5 experts in this category, which spans two streams of management. Product managers incorporate responsible AI practices into the product development process. Program managers, in contrast, often lead and launch new programs for establishing AI ethics practices within the organization. These programs often involve building an organization’s capacity to manage responsible AI issues.

Skills For both streams of management, potential candidates need strong business acumen and a vision for the use and development of AI technology within an organization. Key management skills highlighted in the job postings include the ability to manage multiple priorities and to strategically remove potential blockers to success. Another sought-after skill is the ability to engage stakeholders effectively. Expert interviewees also echoed the importance of this skill, as their roles often involve getting people “on board with new ways of thinking and creating”. According to the job postings, good candidates for management need a practical understanding of the AI life cycle and familiarity with integrating responsible AI practices into a program or product. Our interviewees note that they continuously need to “learn and keep up with the fast-paced development of AI”.

Qualifications Few postings highlight educational qualifications; most focus instead on experience. Where education is specified, the main qualification is a bachelor’s degree, with a preference for higher degrees. The postings primarily name a technical field such as computer science or software engineering. Interestingly, the interviews reflect a different mix of educational backgrounds. All of the experts we interviewed had at least a master’s degree, and the majority completed their studies in a non-technical field such as philosophy, media studies, or policy. These individuals had nonetheless acquired a significant level of expertise in AI ethics through “self-studying” and by “engaging with the literature” and the responsible AI “community”. Two of the participants, by contrast, had trained in technical fields and had significant industry experience. They, too, had learned about responsible AI on their own initiative.

On the other hand, the job postings heavily emphasize experience. This includes significant technical know-how, experience in ML development, product and program management, and implementing ethical and social responsibility practices within fast-paced technology companies. The interview participants had been “working in the industry for some time” before taking on these management roles. However, their experience does not cover all of the requirements outlined in the job descriptions. As expected, excellent communication skills are noted in the job descriptions and strongly echoed by the experts. The job postings do not elaborate on the nature of these communication skills. The experts, however, note that the “ability to listen”, to understand, and sometimes to “persuade different stakeholders” is key in such roles.

Interpersonal Qualities A few of the job postings comment on attitudes and values. They highlight that managers need to value designing technology for social good and cooperating with other stakeholders. A good candidate for management should foster a growth mindset and approach their work with agility, creativity, and passion. All of the participants expressed their passion for developing ethical technology. They also indicated that they took a lot of initiative to learn and contribute to the field, both within their company and externally, before taking on their management roles.

Director

The job descriptions dataset has 4 postings for director positions, and 2 of the expert interviewees hold directorship roles. According to the job postings, director responsibilities include three main areas: a) leading the operationalization of AI ethics principles, b) providing strategic direction and a roadmap towards enterprise-wide adoption and application of ethical principles and decision frameworks, and c) building internal capacity for AI ethics practice and governance. The scope of these responsibilities varies with the nature of the organization and its need to incorporate AI ethics practices. For example, a director within a technology start-up will only be able to commit a “limited amount of time to operationalizing AI ethics principles and building internal capacity” compared to a director within a larger technology company.

Skills and qualifications According to the job postings, the key skill for a director is the ability to build strong relationships with a broad community that helps define and promote best-practice standards for AI ethics. An ideal director can effectively pair their technical skills and know-how with management skills and policy/standards knowledge to develop strategic plans for the company. Experience directing and leading teams, particularly in social responsibility practices within technology companies, is highly valued for such positions. Only one job posting specifies an educational requirement (a bachelor’s degree related to policy development and implementation); the others highlight only experience. The two interviewees hold master’s degrees in business and in information systems, respectively. They also had extensive industry experience that was not directly in AI ethics. However, their experience involved “translation of policy within a technology application”.

Interpersonal Qualities As expected, the job postings state that a good candidate for directorship needs exceptional written and verbal communication skills. They must also be able “to articulate complex ideas” to technical and non-technical audiences, “engage and influence stakeholders”, and “collaborate with people from different disciplines, and cultures”. This set of skills was reflected in our expert interviews. Both interviewees emphasized how they maintain a good flow of communication with employees and remain open to conversations as needs arise. This allowed them to build trust within the company and move forward with their strategic plans. The job postings highlight the ability to earn trust in relationships as a sought-after value for a directorship role. A director should also be able to challenge the status quo, be passionate about good technology development, be comfortable with ambiguity, and adapt rapidly to changing environments and demands. Most importantly, a director needs “a strong and clear commitment to the company values”, as they set the tone for others within the organization.

Emerging occupations

Besides the abovementioned classes of occupations, we found a few other positions that do not map easily to any of the existing categories. Given their limited numbers, these positions do not justify categories of their own. However, we note these emerging roles because they may shape the responsible AI profession. These occupation titles include:

- data ethicists (2 in job postings)
- AI ethics consultants (2 in interviews)
- dataset leads (2 in job postings)
- communication specialists (1 in job postings)
- safety specialists (1 in job postings)
- UX designers (1 in job postings)

The main functions of these positions are as follows:

- Data ethicist: manage organizational efforts in operationalizing AI ethics practices through policy and technology development work. This role shares similarities with the policy analyst and data scientist roles.

- AI ethics consultant: apply their expertise in AI ethics to solve pain points for consulting clients.

- Dataset lead: curate datasets while accounting for fairness and bias-related issues.

- Safety specialist: use and test large language model-based systems to identify failures and errors.

- AI ethics communication specialist: write communication pieces that focus on AI ethics issues.

- UX designer: design user interfaces with ethics in mind.

Future of the responsible AI profession

Our interview participants shared a variety of responses to the question “what will the future of their job be like?”. Some participants thought that eventually, “everyone in a company will be responsible” for understanding ethical and social issues of AI as part of their job. In this scenario, everyone would need to have the appropriate knowledge and skillset to apply responsible AI practices in their work or at least know when they need to ask for advice from internal or external experts.

By contrast, many participants argued that companies need to recruit for “dedicated roles”. These participants elaborated that recruitment for these roles is and will “continue to be challenging”, as it is difficult to find people with interdisciplinary backgrounds and established industry work experience. Many of the managers we interviewed have chosen “to build teams that come from different disciplinary backgrounds” and provide “professional development opportunities” on the job. However, they also described hiring people into these roles as challenging, since corporate leaders are not always willing to invest substantial resources in AI ethics. This can often lead to “exhaustion and burn-out” for individuals who currently hold these roles, especially in small and medium-sized technology companies. According to participants, this will likely change as the regulatory landscape progressively shifts.

Discussion

Educators and employers play a pivotal role in shaping a responsible AI culture. We set out to create a competency framework that outlines the range of roles for responsible AI practitioners. We find that such frameworks can not only guide corporate leaders in recruiting talent but also help them grow their responsible AI capacity.

We find that the ability to work in an interdisciplinary environment, communicate and engage with diverse stakeholder groups, and the aptitude for curiosity and self-learning are consistently highlighted for all of the roles. This emphasizes the need to foster an environment where students and existing employees in different roles are encouraged to adopt interdisciplinary approaches/collaboration and explore responsible AI content.

In this section, we articulate how an interdisciplinary environment can be fostered, the importance of organizational support for responsible AI practitioners, and the need to proactively monitor the rapidly changing occupational demand and landscape for these roles.

Being able to work in an interdisciplinary environment is critical

Our results show that many of today’s responsible AI practitioners reached their current positions from non-traditional, non-linear, and interdisciplinary educational and work backgrounds. Their educational and work experiences span a multitude of fields. These experiences have allowed them to develop a strong set of skills in navigating disciplinary boundaries and understanding problems from diverse perspectives. The participants often described their role as that of a translator and facilitator between different groups and disciplines within the organization. For instance, they remarked that concepts such as fairness, transparency, or being ethically safe have completely different meanings depending on the personal and professional backgrounds of their audience. The participants often needed to translate what these concepts mean across disciplinary boundaries (e.g., between statistics and law).

Notably, the job postings asked for a diverse array of skills and qualifications from multiple disciplines, yet those who hold such positions today often specialize in one or two disciplines. However, they had been exposed to and worked across multiple disciplines in their professional careers. The most important asset that our interviewees emphasized was the ability to work across disciplinary boundaries. The candidates who successfully hold such positions are not “ethical unicorn, full stack developers”. Rather, they have honed the skills necessary to translate and create solutions to responsible AI issues across multiple disciplines. Building on existing proposals to improve responsible AI practices and education, we posit that AI team leaders need to pay special attention to hiring individuals with the capability to create, critique, and communicate across multiple disciplines. Furthermore, educators can draw inspiration from education models in highly interdisciplinary fields such as healthcare. They can create curricula and spaces where students work with peers from different academic backgrounds.

Responsible AI practitioners are advocates - but they need organizational support

We find that responsible AI practitioners are often highly driven and motivated to make a positive impact. These individuals often strongly value social justice and want to ensure that AI technology is developed in a way that supports society’s well-being. One of the most consistent themes in the interviews was the participants’ attitude toward their careers. Many of the interview participants took the time to immerse themselves in learning new topics and described themselves as self-motivated to do so. This is especially true for individuals taking some of the first such positions in the industry. The career trajectories of many participants show that they often created their own roles or came into newly created ones. Moreover, these individuals often needed to start their own projects and build relationships with others in the organization to measure their own progress and establish credibility.

As in many emerging professions, many of the participants act as champions for the ethical and safe development of AI. They often work in an environment that questions and challenges the need to consider AI ethics principles. As some participants remarked, they often have to answer questions such as “why do we need to pay for ethics assessments?”, “what is the value of considering AI ethics in a start-up?”, or “why should we put in the time? what is the value added?”. Advocating for AI ethics is even more challenging when existing regulations lack proper enforcement mechanisms for responsible AI practices. Many participants take on the role of advocate and use their excellent communication skills to build relationships and capacity within their organization.

For responsible AI practices to be implemented successfully, business leaders need to pay attention to and support the advocacy efforts of these practitioners. Many of today’s responsible AI practitioners are working with limited resources, have critical responsibilities, and are experiencing burn-out. Whenever possible, leaders in AI companies need to create appropriate incentive structures and provide the necessary resources. They also need to communicate to their employees the value of establishing responsible AI practices, so that these practitioners have the support they need to carry out their responsibilities effectively. Recognizing the nature of these roles, educators can learn from existing methods and integrate leadership training into their curricula when addressing responsible AI-related content.

Educators and employers need to monitor and plan for the rapidly changing landscape of responsible AI roles

Occupations in the AI industry are continually growing and shifting. Rapid technological development, upcoming regulations, and global economic conditions all affect how companies recruit and retain responsible AI expertise. Furthermore, new educational efforts and programs are needed to prepare new graduates to take on responsible AI practices. The proposed ontology provides a synthesis of the roles that have emerged in responsible AI practice, and it can serve as a planning tool for corporate leaders and educators.

Corporate leaders can use this ontology to build internal capacity among individuals who currently hold researcher, data scientist, engineer, policy advisor, manager, and director roles in their institutions. Depending on their responsible AI needs and resources, business executives can create interdisciplinary teams to establish responsible AI practice. They can do this by recruiting individuals with the competencies outlined for each of these roles. Besides recruiting for and fostering responsible AI competencies, these leaders need to communicate the importance of these practices. A good first step is to create the appropriate organizational incentives and resources for adopting responsible AI practices. Government and non-governmental organizations could support such efforts, particularly in small and medium-sized companies. They could formally recognize such roles in their taxonomies of occupations and provide resources.

Current computer science and engineering education focuses primarily on teaching professional ethics. It gives minimal attention and few resources to cultivating the skills and knowledge required for ethics in design. On the other hand, it is unclear how much students in the social and political sciences need to develop their technical acumen to become skilled responsible AI practitioners. Educators could use the list of competencies to develop a set of learning objectives and examine how well different pedagogies support these objectives. Educators can also use the competency framework as a tool for acquiring resources for further curriculum and program development.

Notably, the proposed ontology primarily focuses on types of roles, responsibilities, and skills. It does not address other important factors in recruitment and education efforts, such as the diversity of the individuals who get to learn about responsible AI issues or take on such roles in the industry. It is therefore critical that users of this ontology consider factors that fall outside its scope. Furthermore, the conversation around responsible AI practices is changing rapidly, so the types of roles in this ontology will shift and expand. We invite the community of researchers, practitioners, and educators to reflect on these roles and build on this ontology.

Conclusion

With increasing regulatory activity in the industry, companies have an incentive to ensure responsible AI development. In this work, we identified seven types of roles and the corresponding responsibilities, skills, qualifications, and interpersonal qualities expected of today’s responsible AI practitioners. We propose a preliminary competency framework for responsible AI practitioners. We also highlight the importance of creating interdisciplinary teams and providing adequate organizational support for individuals in these roles.

We thank our study participants for taking the time to share their experiences, expertise, and feedback. We also thank our anonymous reviewers for their deep engagement and valuable feedback on this paper. This work benefitted greatly from the data collection and analysis assistance from our collaborators Sandi Mak, Ivan Ivanov, Aidan Doudeau, and Nandita Jayd at Vanier College, Montreal. We are grateful for their contributions. Finally, this work was financially supported by the Natural Sciences and Engineering Research Council of Canada and Pôle montréalais d’enseignement supérieur en intelligence artificielle.

Supplementary Material

Interview protocol

Consent Process Thank you for reading and signing the Human Subjects Consent Form for this project.

Introduction Thank you for agreeing to take part in this study. My name is [interviewer] and I will be conducting this interview. I am a research assistant working with [advisor]. We have invited you to take part in this study today because of your current role. The purpose of this study is to examine the experiences of professionals, including ethicists, technologists, and business leaders, who deal with the ethical and social implications of particular AI technologies through their development and implementation.

Today I am playing two roles: that of an interviewer and that of a researcher. At this time, I would like to give a brief overview of the project and the consent form. [5min]

Background and current role

- Please tell us about your role at your current company.

- What is your official job title, and what are your main responsibilities?

- Could you please tell me about your background, expertise and experience that led you to take on your current role?

- Who do you work most closely with at your company? Who do you manage? Who do you report to? Who are your clients? Who are your partners?

Situating your work and projects in AI ethics

- How do you situate your work within the broader field of AI ethics? What types of challenges are you working on in this field? Please feel free to share any specific examples from your projects.

- What are the main projects related to AI ethics that you are working on that you can tell us about?

- What types of resources (academic papers, academic experts, standards, guidelines) do you use in your AI ethics practice?

- Do you use the guidelines on AI ethics practice that have been published in this field over the past 5 years? If so, which ones, and what does following the guidelines look like at your company?

- What are the most important challenges to implementing ethics principles at your work?

Skills, knowledge, values

- What are the most important skill sets, knowledge bases, and values that you currently use in your job? Which are you currently developing, and which will you need in the future?

- If you decide to hire someone to replace you in your current role, what would you look for? What skills or background would your ideal candidate have?

Looking into the future

- From your perspective, what roles do you think are necessary in the field of AI ethics in academia, industry, governmental and non-governmental organizations? Please elaborate.

Relevant tables

Inclusion and exclusion criteria for job postings



268 title = {LOGICS of Programs: AXIOMATICS and DESCRIPTIVE POWER},

269 year = {1978},

270 author = {David Harel},

271}

272

273@mastersthesis{anisi03,

274 year = {2003},

275 intitution = {FOI-R-0961-SE, Swedish Defence Research Agency (FOI)},

276 school = {Royal Institute of Technology (KTH), Stockholm, Sweden},

277 title = {Optimal Motion Control of a Ground Vehicle},

278 author = {David A. Anisi},

279}

280

281@phdthesis{Clarkson85,

282 month = {},

283 type = {},

284 note = {UMI Order Number: AAT 8506171},

285 address = {Palo Alto, CA},

286 school = {Stanford University},

287 title = {Algorithms for Closest-Point Problems (Computational Geometry)},

288 year = {1985},

289 author = {Kenneth L. Clarkson},

290}

291

292@misc{Poker06,

293 url = {http://www.poker-edge.com/stats.php},

294 lastaccessed = {June 7, 2006},

295 title = {Stats and Analysis},

296 month = {March},

297 year = {2006},

298 author = {Poker-Edge.Com},

299}

300

301@misc{Obama08,

302 note = {},

303 lastaccessed = {March 21, 2008},

304 month = {March},

305 url = {http://video.google.com/videoplay?docid=6528042696351994555},

306 day = {5},

307 howpublished = {Video},

308 title = {A more perfect union},

309 year = {2008},

310 author = {Barack Obama},

311}

312

313@misc{JoeScientist001,

314 lastaccessed = {},

315 month = {August},

316 howpublished = {},

317 url = {},

318 note = {Patent No. 12345, Filed July 1st., 2008, Issued Aug. 9th., 2009},

319 title = {The fountain of youth},

320 year = {2009},

321 author = {Joseph Scientist},

322}

323

324@inproceedings{Novak03,

325 distincturl = {1},

326 organization = {},

327 series = {},

328 number = {},

329 volume = {},

330 editor = {},

331 howpublished = {Video},

332 note = {},

333 url = {http://video.google.com/videoplay?docid=6528042696351994555},

334 doi = {99.9999/woot07-S422},

335 month = {March 21, 2008},

336 pages = {4},

337 address = {New York, NY},

338 publisher = {ACM Press},

339 year = {2003},

340 booktitle = {ACM SIGGRAPH 2003 Video Review on Animation theater Program: Part I - Vol. 145 (July 27--27, 2003)},

341 title = {Solder man},

342 author = {Dave Novak},

343}

344

345@article{Lee05,

346 note = {},

347 howpublished = {Video},

348 url = {http://doi.acm.org/10.1145/1057270.1057278},

349 doi = {10.1145/1057270.1057278},

350 month = {Jan.-March},

351 number = {1},

352 volume = {3},

353 eid = {4},

354 journal = {Comput. Entertain.},

355 title = {Interview with Bill Kinder: January 13, 2005},

356 year = {2005},

357 author = {Newton Lee},

358}

359

360@article{rous08,

361 note = {To appear},

362 howpublished = {},

363 url = {},

364 doi = {},

365 articleno = {Article~5},

366 month = {July},

367 number = {3},

368 volume = {12},

369 journal = {Digital Libraries},

370 title = {The Enabling of Digital Libraries},

371 year = {2008},

372 author = {Bernard Rous},

373}

374

375@article{384253,

376 address = {New York, NY, USA},

377 publisher = {ACM},

378 doi = {http://doi.acm.org/10.1145/351827.384253},

379 pages = {11},

380 issn = {1084-6654},

381 year = {2000},

382 volume = {5},

383 journal = {J. Exp. Algorithmics},

384 title = {(old) Finding minimum congestion spanning trees},

385 author = {Werneck,, Renato and Setubal,, Jo\~{a}o and da Conceic\~{a}o,, Arlindo},

386}

387

388@article{Werneck:2000:FMC:351827.384253,

389 address = {New York, NY, USA},

390 publisher = {ACM},

391 acmid = {384253},

392 doi = {10.1145/351827.384253},

393 url = {http://portal.acm.org/citation.cfm?id=351827.384253},

394 articleno = {11},

395 issn = {1084-6654},

396 year = {2000},

397 month = {December},

398 volume = {5},

399 journal = {J. Exp. Algorithmics},

400 title = {(new) Finding minimum congestion spanning trees},

401 author = {Werneck, Renato and Setubal, Jo\~{a}o and da Conceic\~{a}o, Arlindo},

402}

403

404@article{1555162,

405 address = {Amsterdam, The Netherlands, The Netherlands},

406 publisher = {Elsevier Science Publishers B. V.},

407 doi = {http://dx.doi.org/10.1016/j.inffus.2009.01.002},

408 pages = {342--353},

409 issn = {1566-2535},

410 year = {2009},

411 number = {4},

412 volume = {10},

413 journal = {Inf. Fusion},

414 title = {(old) Distributed data source verification in wireless sensor networks},

415 author = {Conti, Mauro and Di Pietro, Roberto and Mancini, Luigi V. and Mei, Alessandro},

416}

417

418@article{Conti:2009:DDS:1555009.1555162,

419 keywords = {Clone detection, Distributed protocol, Securing data fusion, Wireless sensor networks},

420 address = {Amsterdam, The Netherlands, The Netherlands},

421 publisher = {Elsevier Science Publishers B. V.},

422 acmid = {1555162},

423 doi = {10.1016/j.inffus.2009.01.002},

424 url = {http://portal.acm.org/citation.cfm?id=1555009.1555162},

425 numpages = {12},

426 pages = {342--353},

427 issn = {1566-2535},

428 year = {2009},

429 month = {October},

430 number = {4},

431 volume = {10},

432 journal = {Inf. Fusion},

433 title = {(new) Distributed data source verification in wireless sensor networks},

434 author = {Conti, Mauro and Di Pietro, Roberto and Mancini, Luigi V. and Mei, Alessandro},

435}

436

437@inproceedings{Li:2008:PUC:1358628.1358946,

438 keywords = {cscw, distributed knowledge acquisition, incentive design, online games, recommender systems, reputation systems, user studies, virtual community},

439 address = {New York, NY, USA},

440 publisher = {ACM},

441 acmid = {1358946},

442 doi = {10.1145/1358628.1358946},

443 url = {http://portal.acm.org/citation.cfm?id=1358628.1358946},

444 numpages = {6},

445 pages = {3873--3878},

446 location = {Florence, Italy},

447 isbn = {978-1-60558-012-X},

448 year = {2008},

449 booktitle = {CHI '08 extended abstracts on Human factors in computing systems},

450 title = {Portalis: using competitive online interactions to support aid initiatives for the homeless},

451 author = {Li, Cheng-Lun and Buyuktur, Ayse G. and Hutchful, David K. and Sant, Natasha B. and Nainwal, Satyendra K.},

452}

453

454@book{Hollis:1999:VBD:519964,

455 address = {Upper Saddle River, NJ, USA},

456 publisher = {Prentice Hall PTR},

457 edition = {1st},

458 isbn = {0130850845},

459 year = {1999},

460 title = {Visual Basic 6: Design, Specification, and Objects with Other},

461 author = {Hollis, Billy S.},

462}

463

464@book{Goossens:1999:LWC:553897,

465 address = {Boston, MA, USA},

466 publisher = {Addison-Wesley Longman Publishing Co., Inc.},

467 edition = {1st},

468 isbn = {0201433117},

469 year = {1999},

470 title = {The Latex Web Companion: Integrating TEX, HTML, and XML},

471 author = {Goossens, Michel and Rahtz, S. P. and Moore, Ross and Sutor, Robert S.},

472}

473

474@techreport{897367,

475 address = {Amherst, MA, USA},

476 publisher = {University of Massachusetts},

477 source = {http://www.ncstrl.org:8900/ncstrl/servlet/search?formname=detail\&id=oai%3Ancstrlh%3Aumass_cs%3Ancstrl.umassa_cs%2F%2FUM-CS-1987-018},

478 year = {1987},

479 title = {Vertex Types in Book-Embeddings},

480 author = {Buss, Jonathan F. and Rosenberg, Arnold L. and Knott, Judson D.},

481}

482

483@techreport{Buss:1987:VTB:897367,

484 address = {Amherst, MA, USA},

485 publisher = {University of Massachusetts},

486 source = {http://www.ncstrl.org:8900/ncstrl/servlet/search?formname=detail\&id=oai%3Ancstrlh%3Aumass_cs%3Ancstrl.umassa_cs%2F%2FUM-CS-1987-018},

487 year = {1987},

488 title = {Vertex Types in Book-Embeddings},

489 author = {Buss, Jonathan F. and Rosenberg, Arnold L. and Knott, Judson D.},

490}

491

492@proceedings{Czerwinski:2008:1358628,

493 address = {New York, NY, USA},

494 publisher = {ACM},

495 order_no = {608085},

496 location = {Florence, Italy},

497 isbn = {978-1-60558-012-X},

498 year = {2008},

499 title = {CHI '08: CHI '08 extended abstracts on Human factors in computing systems},

500 note = {General Chair-Czerwinski, Mary and General Chair-Lund, Arnie and Program Chair-Tan, Desney},

501 author = {},

502}

503

504@phdthesis{Clarkson:1985:ACP:911891,

505 address = {Stanford, CA, USA},

506 school = {Stanford University},

507 note = {AAT 8506171},

508 year = {1985},

509 title = {Algorithms for Closest-Point Problems (Computational Geometry)},

510 advisor = {Yao, Andrew C.},

511 author = {Clarkson, Kenneth Lee},

512}

513

514@article{1984:1040142,

515 address = {New York, NY, USA},

516 publisher = {ACM},

517 issue_date = {January/April 1984},

518 number = {5-1},

519 volume = {13-14},

520 issn = {0146-4833},

521 year = {1984},

522 journal = {SIGCOMM Comput. Commun. Rev.},

523 key = {{$\!\!$}},

524}

525

526@inproceedings{2004:ITE:1009386.1010128,

527 address = {Washington, DC, USA},

528 publisher = {IEEE Computer Society},

529 acmid = {1010128},

530 doi = {http://dx.doi.org/10.1109/ICWS.2004.64},

531 url = {http://dx.doi.org/10.1109/ICWS.2004.64},

532 pages = {21--22},

533 isbn = {0-7695-2167-3},

534 year = {2004},

535 series = {ICWS '04},

536 booktitle = {Proceedings of the IEEE International Conference on Web Services},

537 title = {IEEE TCSC Executive Committee},

538 key = {IEEE},

539}

540

541@book{Mullender:1993:DS:302430,

542 address = {New York, NY, USA},

543 publisher = {ACM Press/Addison-Wesley Publishing Co.},

544 isbn = {0-201-62427-3},

545 year = {1993},

546 title = {Distributed systems (2nd Ed.)},

547 editor = {Mullender, Sape},

548}

549

@techreport{Petrie:1986:NAD:899644,
 address = {Austin, TX, USA},
 publisher = {University of Texas at Austin},
 source = {http://www.ncstrl.org:8900/ncstrl/servlet/search?formname=detail\&id=oai%3Ancstrlh%3Autexas_cs%3AUTEXAS_CS%2F%2FAI86-33},
 year = {1986},
 title = {New Algorithms for Dependency-Directed Backtracking (Master's thesis)},