
Introduction

New pervasive technologies in the workplace have been introduced to enhance productivity (e.g., a tool that provides an aggregated productivity score based on, for example, email use on the move, network connectivity, and exchanged content). Yet, some of them are judged to be so invasive that they make it hard to build a culture of trust at work, and often impact workers’ productivity and well-being in negative ways. While these technologies hold the promise of enabling employees to be productive, report after report has highlighted outcries over AI-based tools being biased and unfair, and lacking transparency and accountability. Systems are now being used to analyze footage from security cameras in the workplace to detect, for example, when employees are not complying with social distancing rules [https://www.ft.com/content/58bdc9cd-18cc-44f6-bc9b-8ca4ac598fc8]; while there are good intentions behind such a technology (e.g., ensuring a safe return to the office after the COVID-19 pandemic), the very same technology could be used to surveil employees’ movements or time away from desk. Companies now hold protected intellectual property on technologies that use ultrasonic sound pulses to detect workers’ locations and monitor their interactions with inventory bins in factories [Wrist band haptic feedback system: https://patents.google.com/patent/WO2017172347A1/].

As we move towards a future likely ruled by big data and powerful AI algorithms, important questions arise relating to the psychological impacts of surveillance, data governance, and compliance with ethical and moral concerns (https://social-dynamics.net/responsibleai ). To make the first steps in answering such questions, we set out to understand how employees judge pervasive technologies in the workplace and, accordingly, determine how desirable technologies are supposed to behave both onsite and remotely. In so doing, we made two sets of contributions: First, we considered 16 pervasive technologies that track workplace productivity based on a variety of inputs, and conducted a study in which 131 US-based crowd-workers judged these technologies along the 5 well-established moral dimensions of harm, fairness, loyalty, authority, and purity. We found that the judgments of a scenario were based on specific heuristics reflecting whether the scenario: was currently supported by existing technologies; interfered with current ways of working or was not fit for purpose; and was considered irresponsible by causing harm or infringing on individual rights. Second, we measured the moral dimensions associated with each scenario by asking crowd-workers to associate words reflecting the five moral dimensions with it. We found that morally right technologies were those that track productivity based on task completion, work emails, and audio and textual conversations during meetings, whereas morally wrong technologies were those that involved some kind of body-tracking such as tracking physical movements and facial expressions.

RELATED WORK

On a pragmatic level, organizations adopted “surveillance” tools mainly to ensure security and boost productivity. In a fully remote work setting, organizations had to adopt new security protocols due to the increased volume of online attacks [https://www.dbxuk.com/statistics/cyber-security-risks-wfh], and they ensured productivity by tracking the efficient use of resources.

However, well-meaning technologies could inadvertently be turned into surveillance tools. For example, a technology that produces an aggregated productivity score [https://www.theguardian.com/technology/2020/nov/26/microsoft-productivity-score-feature-criticised-workplace-surveillance] based on diverse inputs (e.g., email, network connectivity, and exchanged content) can be a double-edged sword. On the one hand, it may give managers and senior leadership visibility into how well an organization is doing. On the other hand, it may well be turned into an evil tool that puts employees under constant surveillance and unnecessary psychological pressure [https://twitter.com/dhh/status/1331266225675137024]. More worryingly, one in two employees in the UK thinks that it is likely that they are being monitored at work, while more than two-thirds are concerned that workplace surveillance could be used in a discriminatory way if left unregulated. Previous studies also found that employees are willing to be ‘monitored’ but only when a company’s motivations for doing so are transparently communicated. Technologies focused on workplace safety typically receive the highest acceptance rates, while technologies for unobtrusive and continuous stress detection receive the lowest, with employees mainly raising concerns about the tracking of privacy-sensitive information.

To take a more responsible approach in designing new technologies, researchers have recently explored which factors affect people’s judgments of these technologies. In his book “How humans judge machines”, Cesar Hidalgo showed that people do not judge humans and machines equally, and that differences were the result of two principles. First, people judge humans by their intentions and machines by their outcomes (e.g., “in natural disasters like the tsunami, fire, or hurricane scenarios, there is evidence that humans are judged more positively when they try to save everyone and fail—a privilege that machines do not enjoy”, p. 157). Second, people assign extreme intentions to humans and narrow intentions to machines, and, surprisingly, they may excuse human actions more than machine actions in accidental scenarios (e.g., “when a car accident is caused by either a falling tree or a person jumping in front of a car, people assign more intention to the machine than to the human behind the wheel”, p. 130).

Previous work has mostly focused on scenarios typically involving aggression, physical, or psychological harm. Here, in the workplace context, we explore scenarios reflecting aspects tailored to the pervasive computing research agenda that transcend harm such as ease of adoption and technological intrusiveness.

ONLINE STUDY

Summary of crowd-workers demographics.

Table Label: tab-crowdworkers-demographics

Scenarios Generation

The Electronic Frontier Foundation, a leading non-profit organization defending digital privacy and free speech, has analyzed employee-monitoring software programs and classified them based on five main aspects that are being tracked: (a) work time on computer (e.g., tracking inactivity), (b) keystroke logs (e.g., typing behavior, text messages being exchanged), (c) websites, apps, social media use, and emails, (d) screenshots to monitor task completion time, and (e) webcams monitoring facial expressions, body postures, or eye movements. Drawing from this analysis, we devised a set of 16 AI-based workplace technologies (Table tab-technologies). As a result of rapid technological advancements, this list might not be exhaustive, but, as we shall see next (sec:analysis), our methodology could be applied to newly introduced technologies, as it is a generalizable way of identifying how individuals tend to make their moral judgments.

Sixteen tracking technologies that were judged along five well-established moral dimensions: harm, fairness, loyalty, authority, and purity.

Table Label: tab-technologies

Having the 16 technologies at hand, we created scenarios involving their use onsite and remotely. Scenarios are short stories that describe an action that can have a positive or negative moral outcome. Here, an action is defined as a technology that tracks productivity through certain types of data. For example, the scenario for tracking productivity through social media use (Technology 14 in Table tab-technologies) when working remotely reads as: All employees are working remotely and, as a new policy, their company is using the latest technologies to keep track of their social media use to monitor productivity. Having 16 technologies and 2 work modes (i.e., onsite or remotely), we ended up with 32 scenarios.
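The pairing of technologies with work modes can be sketched in a few lines of Python. This is only an illustration: the three tracked data types are a hypothetical subset of the 16 technologies, the onsite wording is an assumption (only the remote wording is quoted in the text), and the names `TRACKED`, `WORK_MODES`, and `build_scenarios` are ours.

```python
from itertools import product

# Hypothetical subset of tracked data types; the full list has 16 entries.
TRACKED = ["social media use", "work emails", "task completion"]

# Phrases for the two work modes. The onsite wording is an assumption.
WORK_MODES = {"onsite": "working onsite", "remote": "working remotely"}

# Template taken from the example scenario quoted in the text.
TEMPLATE = (
    "All employees are {mode_phrase} and, as a new policy, their company is "
    "using the latest technologies to keep track of their {tracked} to "
    "monitor productivity."
)


def build_scenarios(tracked, work_modes):
    """Return one scenario text per (tracked data type, work mode) pair."""
    return {
        (item, mode): TEMPLATE.format(mode_phrase=phrase, tracked=item)
        for item, (mode, phrase) in product(tracked, work_modes.items())
    }


scenarios = build_scenarios(TRACKED, WORK_MODES)
print(len(scenarios))  # 3 data types x 2 work modes
print(scenarios[("social media use", "remote")])
```

With the full list of 16 technologies, the same pairing yields the 32 scenarios used in the study.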

Procedure

For each scenario, we used a set of questions probing people’s attitudes toward a technology. We captured these attitudes through three questions (facets) concerning whether a technology is: hard to adopt, intrusive, and harmful. These facets originate from experiments conducted to understand people’s attitudes toward AI more generally (p. 27). For each scenario, we asked three questions, answered on a Likert scale:

- Was the technology hard to adopt? (1: extremely unlikely; 7: extremely likely)

- Was the technology intrusive? (1: extremely unobtrusive; 7: extremely intrusive)

- Was the technology harmful? (1: extremely harmless; 7: extremely harmful)

After responding to these questions, crowd-workers were asked to choose words associated with five moral dimensions that best describe the scenario. In general, morality speaks to what is judged to be “right” or “wrong”, “good” or “bad”. Moral psychologists identified a set of five dimensions that influence individuals’ judgments: harm (which can be both physical or psychological), fairness (which is typically about biases), loyalty (which ranges from supporting a group to betraying a country), authority (which involves disrespecting elders or superiors, or breaking rules), and purity (which involves concepts as varied as the sanctity of religion or personal hygiene).

Each dimension included two positive and two negative words (p. 28). The dimension harm included the words ‘harmful (-)’, ‘violent (-)’, ‘caring (+)’, ‘protective (+)’; the dimension fairness included the words ‘unjust (-)’, ‘discriminatory (-)’, ‘fair (+)’, ‘impartial (+)’; the dimension loyalty included the words ‘disloyal (-)’, ‘traitor (-)’, ‘devoted (+)’, ‘loyal (+)’; the dimension authority included the words ‘disobedient (-)’, ‘defiant (-)’, ‘lawful (+)’, ‘respectful (+)’; the dimension purity included the words ‘indecent (-)’, ‘obscene (-)’, ‘decent (+)’, ‘virtuous (+)’. The (-) and (+) signs indicate whether a word has a negative or positive connotation. In the work environment, some of these terms (e.g., violent, traitor) might not apply, and, as such, we studied all the words aggregated by moral dimension rather than studying them individually. Finally, to place our results into context, we asked crowd-workers to report their basic demographic information (e.g., gender, ethnicity, industry sector, number of years of employment).

Participants and Recruitment

We administered the 32 scenarios through Amazon Mechanical Turk (AMT), which is a popular crowd-sourcing platform for conducting social experiments. We only recruited highly reputable AMT workers by targeting workers with a 95% HIT (Human Intelligence Task) approval rate and at least 100 approved HITs. We applied quality checks using an attention question, which took the form of “Without speculating on possible advances in science, how likely are you to live to 500 years old?” An attention question is a standard proactive measure to ensure data integrity, as it helps to detect and discard responses generated by inattentive respondents. To this end, we rejected those who chose any option other than the least likely one, leaving us with a total of 131 crowd-workers with eligible answers. To ensure that crowd-workers had a common understanding of these technologies, we provided examples of how each technology could work in tracking employees. The scenarios were randomized, ensuring no ordering effect would bias the responses. The task completion time was, on average, 12 minutes, and each crowd-worker received $1 as compensation.
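As an illustration of the eligibility rules above, the following sketch filters a handful of made-up submissions. The thresholds come from the text; treating 95% as a minimum, the numeric coding of the attention answer (`LEAST_LIKELY = 1`), and the `Submission` record are assumptions made for the example, not a description of the AMT platform’s API.

```python
from dataclasses import dataclass

MIN_APPROVAL_RATE = 95.0  # percent, as stated in the text (assumed to be a minimum)
MIN_APPROVED_HITS = 100   # as stated in the text
LEAST_LIKELY = 1          # assumed coding of the least likely answer option


@dataclass(frozen=True)
class Submission:
    worker_id: str
    approval_rate: float   # HIT approval rate in percent
    approved_hits: int     # number of approved HITs
    attention_answer: int  # answer to the attention question


def is_eligible(s: Submission) -> bool:
    """Keep reputable workers who passed the attention question."""
    reputable = (
        s.approval_rate >= MIN_APPROVAL_RATE
        and s.approved_hits >= MIN_APPROVED_HITS
    )
    attentive = s.attention_answer == LEAST_LIKELY
    return reputable and attentive


# Hypothetical submissions, for illustration only.
submissions = [
    Submission("w1", 99.0, 250, 1),
    Submission("w2", 97.5, 120, 4),  # fails the attention question
    Submission("w3", 90.0, 500, 1),  # approval rate too low
    Submission("w4", 95.0, 100, 1),
]
eligible = [s.worker_id for s in submissions if is_eligible(s)]
print(eligible)
```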

In our sample, crowd-workers were from the U.S. (their demographics are summarized in Table tab-crowdworkers-demographics). In total, we received responses from 87 male and 44 female crowd-workers with diverse ethnic backgrounds: White (80%), African-American (13%), Asian (4%), and Hispanic (3%). These crowd-workers also came from diverse work backgrounds, ranging from Information Technology (40%) to Industrials (12%) to Communications Services (2%), and held different roles in their companies such as managerial positions (54%) and software engineers (17%), among others.

Ethical Considerations

The study was approved by Nokia Bell Labs, and the study protocol stated that the collected data would be analyzed for research purposes only. In accordance with GDPR, no researcher involved in the study could have tracked the identities of the crowd-workers (the AMT platform also uses unique identifiers that do not disclose the real identity of the worker), and all anonymous responses were analyzed at an aggregated level.

ANALYSIS

For each technology $tech$ and question $i$, we computed the average rating when $tech$ is deployed onsite, and the average rating when it is deployed remotely:

\begin{align*}
onsite(tech)_i &= \text{$tech$'s average onsite rating for question $i$}, \\
remote(tech)_i &= \text{$tech$'s average remote rating for question $i$}.
\end{align*}

To then ease comparability, we $z$-scored these two values:
\begin{align*}
z_{onsite(tech)_i} &= \frac{onsite(tech)_i - \mu_{onsite(tech)_i}}{\sigma_{onsite(tech)_i}}, \\
z_{remote(tech)_i} &= \frac{remote(tech)_i - \mu_{remote(tech)_i}}{\sigma_{remote(tech)_i}},
\end{align*}
where $i$ is one of the three questions (hard to adopt, intrusive, harmful), $\mu_{onsite(tech)_i}$ and $\sigma_{onsite(tech)_i}$ are the average and standard deviation of the ratings for all technologies deployed onsite, and $\mu_{remote(tech)_i}$ and $\sigma_{remote(tech)_i}$ are the average and standard deviation of the ratings for all technologies deployed remotely.
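A minimal sketch of this standardization for one question and one deployment setting is given below. The ratings are made-up numbers on the 1-7 scale, and the use of the population standard deviation (`pstdev`) is an assumption, as the text does not say whether the population or the sample estimate was used.

```python
from statistics import mean, pstdev


def average_ratings(raw_ratings):
    """Average the individual ratings of each technology."""
    return {tech: mean(values) for tech, values in raw_ratings.items()}


def zscores(avg_ratings):
    """Standardize per-technology averages across all technologies."""
    mu = mean(avg_ratings.values())
    sigma = pstdev(avg_ratings.values())  # assumption: population std. dev.
    if sigma == 0:
        raise ValueError("all technologies have the same average rating")
    return {tech: (avg - mu) / sigma for tech, avg in avg_ratings.items()}


# Hypothetical 'harmful' ratings (1-7) for three technologies deployed onsite.
onsite_harmful_raw = {
    "work emails": [2, 3, 4],
    "task completion": [4, 4, 4],
    "facial expressions": [5, 6, 4],
}
onsite_harmful = average_ratings(onsite_harmful_raw)
z_onsite_harmful = zscores(onsite_harmful)
for tech, z in z_onsite_harmful.items():
    print(f"{tech}: {z:+.2f}")
```

The same two functions would be applied separately to the remote ratings, so that each setting is standardized against its own mean and standard deviation.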

RESULTS

Unacceptable scenarios - those that were judged to be hard to adopt, intrusive, and harmful - include tracking physical movements, especially onsite. This scenario was indeed considered to: be not fully supported by current technologies in use (hard to adopt); interfere with work (intrusive); and infringe on one’s freedom of movement (harmful).

As for all the scenarios, we tested how each of them was judged along multiple dimensions. To that end, we computed the conditional probability that a scenario judged, say, hard to adopt was also judged harmful. This probability is $p(\text{harmful} \mid \text{hard to adopt})$, and is equal to 0.6 (Table tab-probs), meaning that a scenario that is hard to adopt is also likely, though not certain, to be considered harmful, as we shall see next. More generally, the conditional probabilities are computed as: $$ p(i \mid j) = \frac{\#\,\text{cases that are } i \text{ and } j}{\#\,\text{cases that are } j} $$

From these conditional probabilities, we can see that 20% of the technologies that are hard to adopt are also considered intrusive, and 60% are also considered harmful; 25% of the technologies that are intrusive are considered hard to adopt; finally 43% of the harmful technologies are considered hard to adopt.
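The counting behind these conditional probabilities can be sketched as follows. The label sets are hypothetical and do not reproduce the values in Table tab-probs; how a scenario comes to be labelled as hard to adopt, intrusive, or harmful is not specified here and is taken as given.

```python
def conditional_probability(cases, i, j):
    """p(i | j): share of the cases labelled j that are also labelled i."""
    with_j = [labels for labels in cases if j in labels]
    if not with_j:
        raise ValueError(f"no case is labelled {j!r}")
    return sum(1 for labels in with_j if i in labels) / len(with_j)


# Hypothetical judgments: one set of labels per scenario.
cases = [
    {"hard_to_adopt", "harmful"},
    {"hard_to_adopt"},
    {"intrusive", "harmful"},
    {"harmful"},
    set(),
]

p_harmful_given_hard = conditional_probability(cases, "harmful", "hard_to_adopt")
p_hard_given_harmful = conditional_probability(cases, "hard_to_adopt", "harmful")
print(p_harmful_given_hard, p_hard_given_harmful)
```

The two printed values differ because the denominators differ, which is also why, in the figures above, $p(\text{harmful} \mid \text{hard to adopt})$ and $p(\text{hard to adopt} \mid \text{harmful})$ are not equal.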

By qualitatively analyzing the ways our participants motivated their judgments, we found that these judgments followed three main heuristics (i.e., mental shortcuts used to assess the scenarios quickly and efficiently):

- Viability. The first heuristic we identified was whether the scenario can be easily built from existing technologies in a satisfactory manner. This judgment criterion is associated with the moral dimension of fairness (any prototype of a technology that is hard to build would inevitably fall short and would be ridden with inaccuracies and biases). For example, in an online meeting, accurately tracking facial expressions is technically easy to do using webcams. By contrast, tracking body postures is still a hard problem because it requires a combination of wearable sensors such as multiple gyroscopes (e.g., a couple on the earphones, and the other on a smart watch), which ends up producing spurious classifications of body postures.

- Non-intrusiveness. Another heuristic was whether the scenario did not interfere with work or, more generally, was fit for purpose. This judgment criterion was associated with the two moral dimensions of authority (when the technology is invasive and authoritarian) and loyalty (when the technology disrespects one’s way of working and, as often mentioned by our respondents, has been misused). For example, tracking eye movements in online meetings, despite being possible, was considered to be unfit for productivity tracking and to be “on the way” of getting the job done. By contrast, tracking text messages in collaboration tools such as Slack was considered to not interfere with work (unobtrusive) and to be fit for the purpose of tracking productivity (not misused).

- Responsibility. The final heuristic was whether the scenario was considered responsible in that it did not cause any harm or infringe on any individual rights. This was associated with the two moral dimensions of harm (when the technology has negative effects on individuals) and purity (when the technology is seen to disrespect one’s beliefs). For example, tracking audio conversations in online meetings was considered to be possible (viable) and fit for purpose, yet it was considered to be harmful, as it entailed tracking not only whether a meeting took place but also its content.

Onsite vs. Remotely: Clustering how technologies are judged

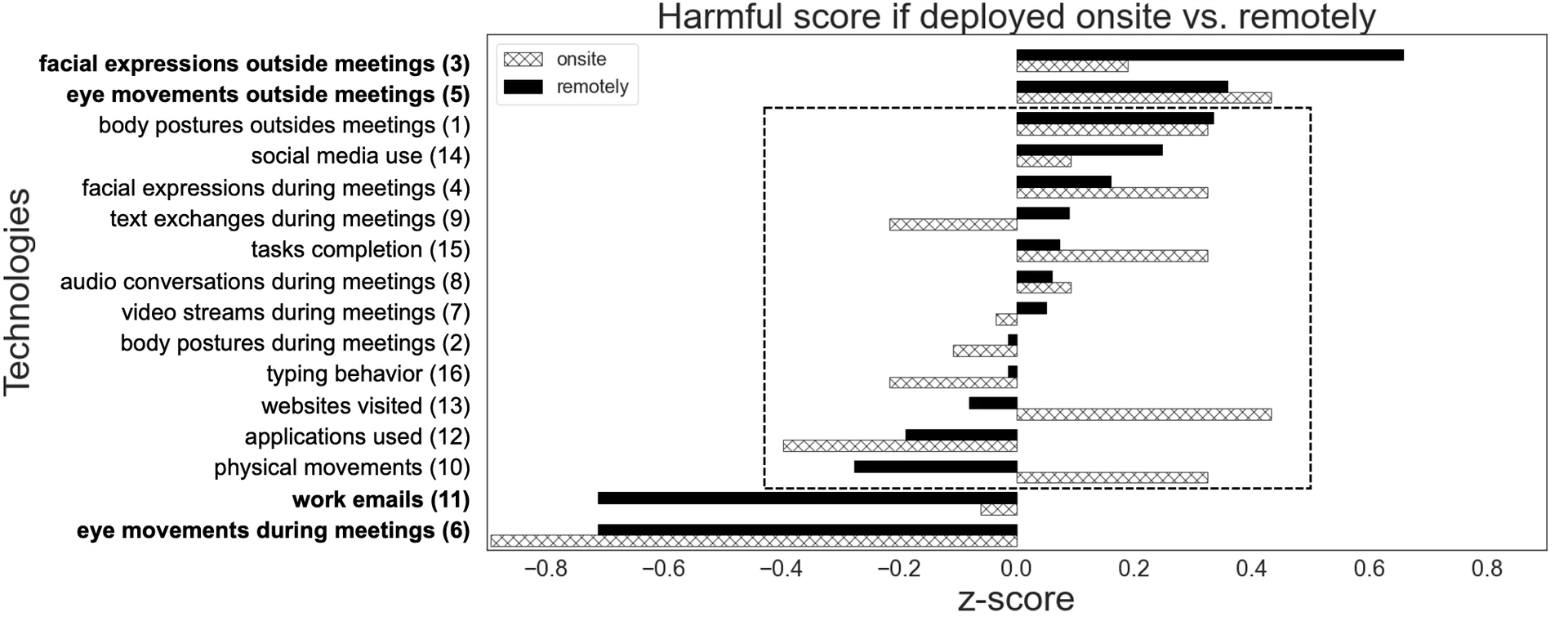

Figure fig-harmful-quadrant shows the harmful score when a technology is deployed onsite versus when it is deployed remotely. Four groups of technologies emerge: a) technologies considered harmful (harmless) both onsite and remotely, b) technologies considered harmful (harmless) onsite but not remotely, or vice-versa, c) technologies considered more harmful remotely, and d) technologies considered less harmful remotely. As for the first two groups of technologies, for example, tracking audio conversations during meetings led to the same judgments irrespective of the deployment setting, while tracking text exchanges during meetings led to opposite judgments. As for the third group, tracking eye movements and facial expressions outside meetings was considered more harmful when deployed remotely, not least because remote work typically happens in a private space such as one’s home, and, as a result, the use of tracking devices should be limited to specific work-related activities and should ideally not go beyond them. As for the fourth group, tracking work emails and tracking eye movements during meetings were considered less harmful when deployed remotely. As it is harder to measure productivity in remote settings, tracking work emails was considered a reasonable proxy for attention levels and a compromise to accept [https://www.gartner.com/smarterwithgartner/9-future-of-work-trends-post-covid-19] [https://www.computerworld.com/article/3586616/the-new-normal-when-work-from-home-means-the-boss-is-watching.html]. Also, tracking eye movements during meetings was seen as a proxy for body cues (e.g., facial expressions), which could reflect attention levels and often go unnoticed in virtual meetings.

Words associated with moral dimensions

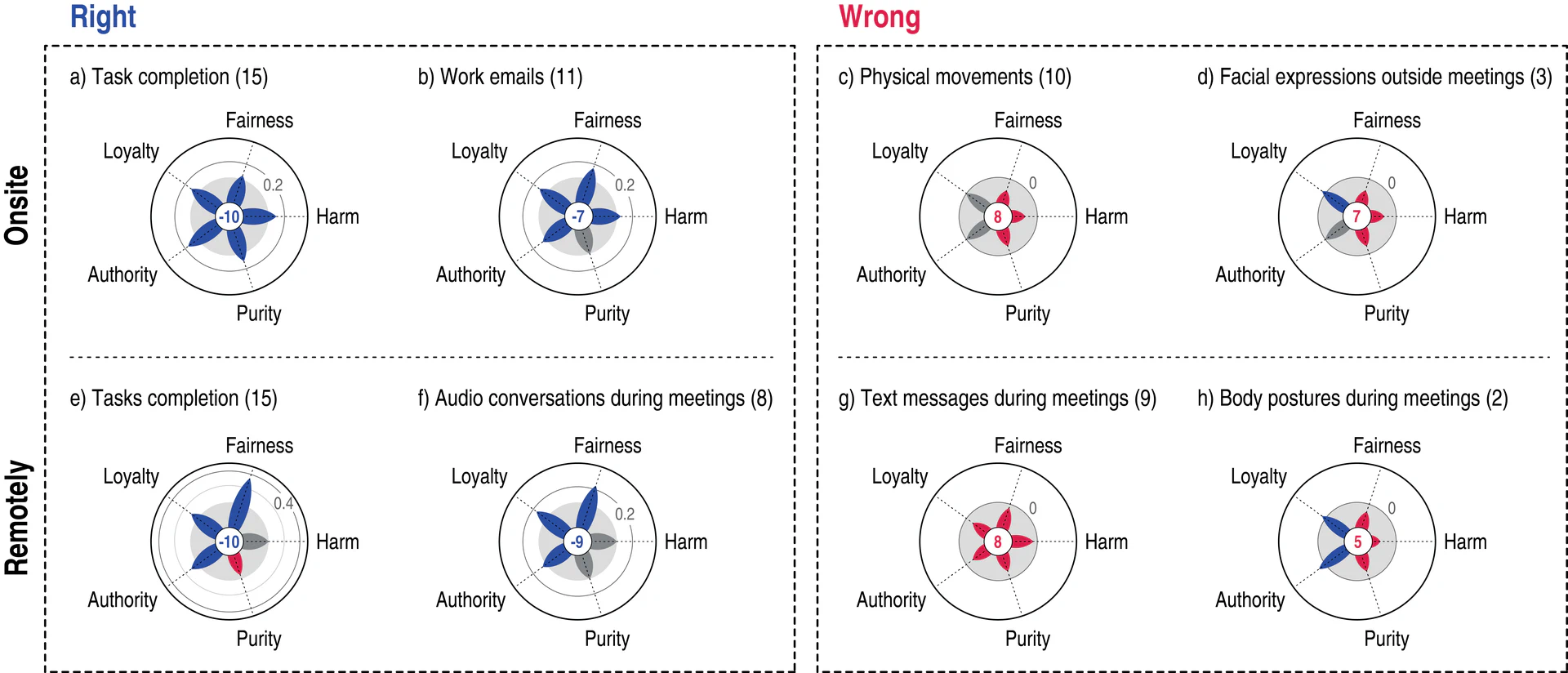

Next, we looked into how crowd-workers associated our technologies with words related to the five moral dimensions of harm, fairness, loyalty, authority, and purity. We computed the fraction of times a word associated with each moral dimension was chosen (Figure fig-radar-plots). For example, tracking task completion onsite (technology 15 in Table tab-technologies) was associated with fairness, loyalty, authority, purity, and lack of harm. The very same technology, when applied remotely, was again associated with fairness, loyalty, and authority, but also with harm and lack of purity.

By aggregating the negative and the positive words of the five moral dimensions (as per the signs in sec:procedure), we computed a scenario’s ‘wrongness’. This score captures how morally wrong or right a technology is. Technologies that were considered to be morally right (blue in Figure fig-radar-plots) were associated with positive words (e.g., fair, impartial), while those considered to be morally wrong (red in Figure fig-radar-plots) were associated with negative words (e.g., unjust, discriminatory). We found that morally right technologies, i.e., those with negative values of wrongness (Figure fig-radar-plots a-b and e-f), were those that track productivity based on task completion, work emails, and audio and textual conversations during meetings, whereas morally wrong technologies (Figure fig-radar-plots c-d and g-h) were those that involved some kind of body-tracking such as tracking physical movements and facial expressions.
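To make the aggregation concrete, the sketch below computes the per-dimension fractions and a wrongness score from a list of chosen words. The word lists and signs are those given in the procedure; the exact aggregation formula is not spelled out in the text, so the normalized difference between negative and positive word counts used here is an assumption, chosen only so that negative values correspond to morally right scenarios.

```python
from collections import Counter

# Words and signs per moral dimension, as listed in the procedure.
MORAL_WORDS = {
    "harm": {"harmful": -1, "violent": -1, "caring": +1, "protective": +1},
    "fairness": {"unjust": -1, "discriminatory": -1, "fair": +1, "impartial": +1},
    "loyalty": {"disloyal": -1, "traitor": -1, "devoted": +1, "loyal": +1},
    "authority": {"disobedient": -1, "defiant": -1, "lawful": +1, "respectful": +1},
    "purity": {"indecent": -1, "obscene": -1, "decent": +1, "virtuous": +1},
}
POLARITY = {w: s for words in MORAL_WORDS.values() for w, s in words.items()}
DIMENSION = {w: d for d, words in MORAL_WORDS.items() for w in words}


def dimension_fractions(chosen_words):
    """Fraction of chosen words that belong to each moral dimension."""
    if not chosen_words:
        raise ValueError("no words were chosen")
    counts = Counter(DIMENSION[w] for w in chosen_words)
    return {dim: counts[dim] / len(chosen_words) for dim in MORAL_WORDS}


def wrongness(chosen_words):
    """Assumed score in [-1, 1]: (negative - positive) / all chosen words."""
    if not chosen_words:
        raise ValueError("no words were chosen")
    negative = sum(1 for w in chosen_words if POLARITY[w] < 0)
    positive = len(chosen_words) - negative
    return (negative - positive) / len(chosen_words)


# Hypothetical words chosen by crowd-workers for one scenario.
chosen = ["fair", "impartial", "loyal", "harmful"]
fractions = dimension_fractions(chosen)
score = wrongness(chosen)
print(fractions, score)
```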

DISCUSSION AND CONCLUSION

Discussion of Results

We know surprisingly little about how people perceive pervasive technologies in the workplace. Yet we need to know more to inform the design of such technologies. This appears of crucial importance, not least because of the two polar opposite views animating today’s debate over technology. On one hand, informed by a widespread algorithmic aversion, we risk rejecting technologies that could make our lives better. On the other hand, informed by technological optimism, we may adopt technologies that could have detrimental impacts. To avoid rejecting good technologies and designing bad ones, we should unpack AI ethics in Pervasive Computing.

The heuristics we have found offer a guide on how technologies are likely to be morally judged. Having a technology that is easy to implement and does not interfere with work is not necessarily a technology that should be deployed. Tracking facial expressions (even beyond the nefarious uses—of dubious effectiveness—of inferring political orientation or sexual preferences from faces) is possible and could be done in seamless ways (e.g., with existing off-the-shelf cameras), yet it would be still considered harmful and unethical. Tracking eye movements, task completion, or typing behavior was considered a proxy for focus (harmless) yet intrusive as it would “get in the way.” Tracking social media use in remote work was considered not only intrusive but also harmful, as it infringes on privacy rights.

Finally, regardless of the work context (on-site vs. remote work), most scenarios are either harmless (e.g., tracking application usage was considered to be a proxy for focus on work) or harmful (e.g., tracking physical movements, body posture, or facial expressions was not). Yet, other scenarios were context dependent (Figure fig-harmful-quadrant). Tracking text messages during meetings was considered less harmful (more fit-for-purpose) onsite than remotely. Text messages in onsite settings were considered “fair game” as they could reflect a meeting’s productivity, while those in a remote setting were usually used beyond the meeting’s purpose (e.g., used to catch up with colleagues), making them a poorer proxy for meeting productivity. Again, the heuristic used remained the same: whether a technology (e.g., inferring productivity from text messages) was fit for purpose. The only difference was that the same technology was fit for purpose in one context (e.g., in the constrained setting of a meeting) but not in the other (e.g., in the wider context of remote work).

Limitations and Future Work

This work has three main limitations that call for future research efforts. The first limitation concerns the generalizability of our findings. While our sample demographics were fairly distributed across industry sectors and ethnic backgrounds, most of the crowd-workers were based in the US. Our findings hold for this specific cohort. The second limitation concerns the negative connotation of the three questions being asked in the crowd-sourcing experiment, which might have biased the responses. Even in such a case, the results would still make sense in a comparative way as the responses would be systematically biased across all scenarios. The third limitation concerns the pervasive technologies under study. Given the rapid technological advancements, the 16 technologies in Table tab-technologies were those considered likely to be adopted at the time of writing. Future studies could apply our methodology to larger and more diverse cohorts, to specific corporate contexts or geographical units, and to emerging technologies such as AR headsets and EMG body-tracking devices.

Theoretical and Practical Implications

From a theoretical standpoint, this work contributes to the ongoing debate on the ethical and fair use of AI, the emergent field of Responsible AI [https://www.bell-labs.com/research-innovation/responsible-ai]. As we showed, one needs to consider whether a technology is irresponsible in the first place, well before its design. While tracking facial expressions is supported by current technology (viable) and can be done in seamless ways (unobtrusive), it was still considered to be irresponsible (causing harm) in the office context. This means that companies should be more thoughtful about the ways they manage their workforce, and should not deploy tools just because the technology allows them to. Determining whether a technology is irresponsible is a complex matter though, not least because it entails ethical concepts that are hard to define. That is why new approaches helping AI developers and industry leaders think about multi-faceted Responsible AI concepts should be developed in the near future. From a practical standpoint, the Ubicomp community currently focuses on how to design better technologies by blending them into the background: monitoring movements in a building through WiFi signals, for example, remains hidden. The problem is that, by blending technologies into the background, individuals are unaware of them and, as a result, their ethical concerns are often overlooked. That is why the Ubicomp community’s aspiration of blending technologies needs to go hand-in-hand with the need to unpack AI ethics.

Marios Constantinides is currently a Senior Research Scientist at Nokia Bell Labs Cambridge (UK). He works in the areas of human-computer interaction and ubiquitous computing. Contact him at [email protected] .

Daniele Quercia is currently the Department Head at Nokia Bell Labs in Cambridge (UK) and Professor of Urban Informatics at King’s College London. He works in the areas of computational social science and urban informatics. Contact him at [email protected] .

Bibliography

@inproceedings{buolamwini2018gender,
  url = {http://proceedings.mlr.press/v81/buolamwini18a.html},
  organization = {PMLR},
  year = {2018},
  pages = {77--91},
  booktitle = {Conference on Fairness, Accountability and Transparency},
  author = {Buolamwini, Joy and Gebru, Timnit},
  title = {Gender Shades: Intersectional Accuracy Disparities in Commercial Gender Classification},
}

@inproceedings{rudnicka2020eworklife,
  organization = {The new future of work online symposium},
  year = {2020},
  booktitle = {Eworklife: developing effective strategies for remote working during the COVID-19 pandemic},
  author = {Rudnicka, Anna and Newbold, Joseph W and Cook, Dave and Cecchinato, Marta E and Gould, Sandy and Cox, Anna L},
  title = {Eworklife: Developing effective strategies for remote working during the COVID-19 pandemic},
}

@misc{tucsurvey,
  year = {2018},
  url = {https://www.tuc.org.uk/research-analysis/reports/ill-be-watching-you},
  author = {{Trades Union Congress}},
  title = {I'll be watching you. A report on workplace monitoring},
}

@article{ball2010workplace,
  doi = {https://doi.org/10.1080/00236561003654776},
  publisher = {Taylor \& Francis},
  year = {2010},
  pages = {87--106},
  number = {1},
  volume = {51},
  journal = {Labor History},
  author = {Ball, Kirstie},
  title = {Workplace Surveillance: An Overview},
}

@misc{eef_analysis,
  year = {2020},
  url = {https://www.eff.org/deeplinks/2020/06/inside-invasive-secretive-bossware-tracking-workers},
  author = {Cyphers, Bennet and Gullo, Karen},
  title = {Inside the Invasive, Secretive “Bossware” Tracking Workers},
}

@book{hidalgo2021humans,
  publisher = {MIT Press},
  year = {2021},
  author = {Hidalgo, C{\'e}sar A and Orghian, Diana and Canals, Jordi Albo and De Almeida, Filipa and Martin, Natalia},
  title = {How humans judge machines},
}

@misc{mckinsey,
  year = {2020},
  url = {https://www.mckinsey.com/~/media/McKinsey/Business\%20Functions/Risk/Our\%20Insights/Cybersecurity\%20tactics\%20for\%20the\%20coronavirus\%20pandemic/Cybersecurity-tactics-for-the-coronavirus-pandemic-vF.ashx},
  author = {Boehm, Jim and Kaplan, James and Sorel, Marc and Sportsman, Nathan and Steen, Trevor},
  title = {Cybersecurity tactics for the coronavirus pandemic},
}

@article{kawsar2018earables,
  publisher = {IEEE},
  year = {2018},
  pages = {83--89},
  number = {3},
  volume = {17},
  journal = {IEEE Pervasive Computing},
  author = {Kawsar, Fahim and Min, Chulhong and Mathur, Akhil and Montanari, Allesandro},
  title = {Earables for personal-scale behavior analytics},
}

@inproceedings{choi2021kairos,
  year = {2021},
  pages = {30--36},
  booktitle = {Proceedings of the 22nd International Workshop on Mobile Computing Systems and Applications},
  author = {Choi, Jun-Ho and Constantinides, Marios and Joglekar, Sagar and Quercia, Daniele},
  title = {KAIROS: Talking heads and moving bodies for successful meetings},
}

@article{wang2018deep,
  publisher = {American Psychological Association},
  year = {2018},
  pages = {246},
  number = {2},
  volume = {114},
  journal = {Journal of personality and social psychology},
  author = {Wang, Yilun and Kosinski, Michal},
  title = {Deep neural networks are more accurate than humans at detecting sexual orientation from facial images.},
}

@article{alge2013workplace,
  publisher = {Routledge},
  year = {2013},
  pages = {233--261},
  journal = {The psychology of workplace technology},
  author = {Alge, Bradley J and Hansen, S Duane},
  title = {Workplace monitoring and surveillance research since “1984”: A review and agenda},
}

@inproceedings{adib2013see,
  year = {2013},
  pages = {75--86},
  booktitle = {Proceedings of the ACM SIGCOMM 2013 conference on SIGCOMM},
  author = {Adib, Fadel and Katabi, Dina},
  title = {See through walls with WiFi!},
}

@article{arrieta2020explainable,
  publisher = {Elsevier},
  year = {2020},
  pages = {82--115},
  volume = {58},
  journal = {Information fusion},
  author = {Arrieta, Alejandro Barredo and others},
  title = {Explainable Artificial Intelligence (XAI): Concepts, taxonomies, opportunities and challenges toward responsible AI},
}

@article{peer2014reputation,
  publisher = {Springer},
  year = {2014},
  pages = {1023--1031},
  number = {4},
  volume = {46},
  journal = {Behavior Research Methods},
  author = {Peer, Eyal and Vosgerau, Joachim and Acquisti, Alessandro},
  title = {Reputation as a sufficient condition for data quality on Amazon Mechanical Turk},
}

@incollection{nippert2010islands,
  publisher = {University of Chicago Press},
  year = {2010},
  booktitle = {Islands of Privacy},
  author = {Nippert-Eng, Christena E},
  title = {Islands of privacy},
}

@article{haidt2007new,
  publisher = {American Association for the Advancement of Science},
  year = {2007},
  pages = {998--1002},
  number = {5827},
  volume = {316},
  journal = {science},
  author = {Haidt, Jonathan},
  title = {The new synthesis in moral psychology},
}

@article{marchant2019best,
  publisher = {American Medical Association},
  year = {2019},
  pages = {356--362},
  number = {4},
  volume = {21},
  journal = {AMA Journal of Ethics},
  author = {Marchant, Gary E},
  title = {What are best practices for ethical use of nanosensors for worker surveillance?},
}

@article{kallio2021unobtrusive,
  publisher = {MDPI},
  year = {2021},
  pages = {2003},
  number = {4},
  volume = {13},
  journal = {Sustainability},
  author = {Kallio, Johanna and Vildjiounaite, Elena and Kantorovitch, Julia and Kinnula, Atte and Bordallo L{\'o}pez, Miguel},
  title = {Unobtrusive Continuous Stress Detection in Knowledge Work---Statistical Analysis on User Acceptance},
}

@article{jacobs2019employee,
  publisher = {Elsevier},
  year = {2019},
  pages = {148--156},
  volume = {78},
  journal = {Applied ergonomics},
  author = {Jacobs, Jesse V and Hettinger, Lawrence J and Huang, Yueng-Hsiang and Jeffries, Susan and Lesch, Mary F and Simmons, Lucinda A and Verma, Santosh K and Willetts, Joanna L},
  title = {Employee acceptance of wearable technology in the workplace},
}

Attribution

arXiv:2210.06381v2

[cs.HC]

License: cc-by-4.0