- Papers

Papers is Alpha. This content is part of an effort to make research more accessible, and (most likely) has lost some details from the original. You can find the original paper here.

Introduction

Millions of people rely on recommender systems daily. Examples of recommendation based activities include listening to music via streaming services, shopping for products and services online, and browsing through social media streams. What we can choose from in the context of these activities is determined by algorithmic decision architectures that are optimised for a specified target such as maximising engagement, maximising the click through rate[https://en.wikipedia.org/wiki/Click-through_rate ], or increasing the sales. To enable these functionalities, recommender systems employ increasingly sophisticated user behaviour models that incorporate information on users’ past behaviours, preferences, interactions and created content.

Machine learning (ML) models that enable recommendations and that are trained on user data may unintentionally leverage information on human characteristics that are considered vulnerabilities (e.g., depression, young age, gambling addiction). In simple terms, an ML model may include a parameterised representation of a person’s vulnerability. Algorithmic decisions that are based on models using such representations could be considered manipulative, because the use of algorithmic decisions based on representations of vulnerabilities might have a deteriorating impact on the vulnerability condition of individuals. Among the prohibited artificial intelligence (AI) applications listed in article 15 of the European Commission’s proposal for a Regulation on Artificial Intelligence, are applications that perform manipulative or exploitative online practices producing physical or psychological harms to individuals or exploit their vulnerability on the basis of age or disability.

The use of ML models that exploit or impact the vulnerability of persons can become an ethical and potentially legal issue, because it could lead to manipulation, reduced agency/autonomy, or altered behaviour of individuals, in a way that is not necessarily in their best interest. Currently it is difficult to know whether a given model’s decisions are based on vulnerability-related information. However, public mandates for AI auditing must address this issue, and explainable AI (XAI) methods may be necessary for detecting vulnerability exploitation by ML based recommendation systems.

In this paper we are concerned with the problem of vulnerability detection by machine learning models, and our specific contributions are the following.

-

We define and describe examples of potential vulnerabilities and how they manifest in behaviour.

-

Weargue, by means of a literature review, that machine learning models can and are being used to detect vulnerabilities.

-

We illustrate cases in which vulnerabilities are likely to play a role in algorithmic decision making based on behavioural and contextual data.

-

To ameliorate the current situation, we further propose a set of requirements that methods must fulfil in order to:

- detect the potential for vulnerability modelling,

- detect whether vulnerable groups are treated differently by the model, and

- detect whether an ML model has created an internal representation of vulnerability.

The paper structure follows the above order of contributions. We conclude with an outlook perspective on the importance to not only regulate applied AI systems on a technical level, but to put the human at the centre of this process.

Vulnerability in the context of machine learning

In the context of algorithmic decisions systems, it is challenging to draw the line between persuasivesystems and manipulation. It is not simple to identify where free will ends and where manipulation startsHence, to understand the possible impact that machine learning systems can have on individuals from vulnerable groups, we need to establish a definition of vulnerabilities and create an understanding of how machine learning with big behavioural data can model these.

Vulnerability The United Nations define vulnerabilities as “The conditions determined by physical, social, economic and environmental factors or processes which increase the susceptibility of an individual, a community, assets or systems to the impacts of hazards.”[https://www.undrr.org/terminology/vulnerability ]. Unlike in physical environments, where physical disabilities and vulnerabilities are impactful (e.g., paraplegia), in digital environments, psychological vulnerabilities (e.g., depression) are more exposed to exploitation and discrimination. Hence, here we are primarily concerned with psychological and social factors which increase the susceptibility of an individual to the impacts of hazards.

When consideringpsychological vulnerabilities, it can be helpful to distinguish between stable (i.e., traits; anxiety disorder) and momentary characteristics (i.e., states; momentary anxiety) of a person. In that regard, we define vulnerabilities for the purposes of this article as follows:

- A person is vulnerable if their permanent or momentary psychological characteristics makes it particularly difficult for them to make autonomous choices and to exert agency over their actions under full consideration of their consequences and outcomes.

It is “the quality or state of being exposed to the possibility of being attacked or harmed, either physically or emotionally.”. For this reason, vulnerable individuals can be exposed to additional forms of harm or an aggravation of their condition (e.g., aggravation from mild depression to major depression) without their awareness and the agency to prevent or counteract it.

For the purpose of this article, we will use symptoms of major depressionas an illustrative example of a relatively stable psychological vulnerability for which first evidence suggests the possibility to model it using machine learning and digital footprints. The identification of people who are suffering the symptoms of major depression can in certain contexts be in violation of several ethical principles.

Exploitable vulnerabilities in algorithmic decision systems

In machine learning systems, vulnerabilities can be automatically exploited when the vulnerable state or condition of an individual becomes entangled with the optimisation criteria of an algorithmic system (i.e., recommender system). For example, depressive users on a social media platform might engage more with content that is emotionally charged with feelings of sadness, depression, and hopelessness. This behavioural tendency or “interest” might be picked up by the optimisation algorithm of that platform that is designed to maximise user engagement based on behaviour. Consequently, the platform could provide an increasing number of similar content to the user. While this optimisation procedure is effective to optimise user-engagement and harmless for non-vulnerable users (e.g., providing increasingly specific content on taco recipes), the increased provision of depression-related content to users with the vulnerable condition might aggravate the depressive symptoms of the user (e.g., reinforcement of perception of worthlessness) while increasing their engagement with the platform.

It is difficult – and outside our aim – to analyse the underlying causal patterns between mental states and compulsive behaviours. There is, however, sufficient reason to consider that emotions of depression, anxiety,individuals having a negative view of themselves, coincide with mental conditions that lead to compulsive behaviours. For example,conclude that “Compulsive buying is frequent among bipolar patients going through a manic phase. In most cases, the behaviour is associated with other impulse control disorders or dependence disorders and a high level of impulsivity”. Similarly,conclude that “Our data emphasises the frequency of association between ICDs (impulse control disorders) and major depression, and 29% of the depressed patients also had an ICD”. Shopping as a coping behaviour for stress also is investigated in, who report that “more stress release was found with larger amounts spent”.state that “Although no studies directly compare a cohort of ICD patients with a cohort of mood disorder patients, available data suggest that ICDs and bipolar disorder share a number of features: (1) phenomenological similarities, including harmful, dangerous, or pleasurable behaviours, impulsivity, and similar affective symptoms and dysregulation …”. One could argue that these are exactly the kind of behaviours social media usage is likely to reflect, probably even before they are discovered clinically or by the individuals themselves.

This insight must be combined with the knowledge that data based models for identifying and predicting mental health conditions are used for commercial purposes. For example, a leaked Facebook document reported by The Australianrevealed that the platform uses data based models to identify young, meaning down to $14$ year old, individuals feeling vulnerable, i.e. “worthless”, “insecure”, “stressed”, “defeated”, “overwhelmed”, “anxious”, “nervous”, “stupid”, “silly”, “useless”, and “a failure”. Furthermore, the document, marked “Confidential: Internal Only”, outlines how Facebook can target “moments when young people need a confidence boost”, and reveals an interest in helping advertisers target moments in which young users desire “looking good and body confidence” or “working out and losing weight”.

As stated by: “A key ethics challenge for using social networking site data (…) will be to ensure that vulnerable individuals have a comprehensive and sustained understanding of what participation involves…”. We fully agree with this conclusion, and argue that most users of social platforms do indeed not have such a comprehensive and sustained understanding. Sadly, this is neither a novelty nor a controversial stance. However, we also argue that this problem exists on two levels, one level being the mere flow of information, or desire of social media platforms to inform users of how their data is being used. The second level is more subtle as well as technical, since “understanding of what participation involves” requires an understanding of the models used to analyse the data of the individual. Such understanding of non-interpretable models is at present often not possible, commonly referred to as the “black box” problem in machine learning and artificial intelligence.

This must be considered in light of the observation that the reviews quoted in the beginning of this section –, reporting the general tendency of machine learning methods replacing traditional forms of data analysis – consequently report an increase in non-interpretable models being proposed to predict psychological constructs and to detect mental health disorders.

All these things can be considered vulnerabilities, and in section sec-digbehdat we summarise research suggesting that AI can be used to detect such vulnerabilities.

Digital behavioural data for assessing psychological constructs

Unlike physical characteristics (e.g., body height), latent psychological characteristics of individuals cannot be measured directly but need to be estimated from reports, observations, or psychometric tests (e.g., cognitive abilities) of peoples thoughts, feelings, and behaviours. For example, most forms of mental health disorders are diagnosed via standardised self-report scales and structured clinical interviews. However, the self-reported information that is collected in this diagnostic process is highly subjective and retrospective. This is problematic because many psycho-pathological conditions impact peoples cognitive abilities (e.g., concentration, memory), hence potentially bias the obtained information. Moreover, self-reports are subject to a myriad of methodological influencesand intentional faking.

New approaches have been developed to assess psychological phenomena and psychopathology combining objective data on people`s digital behavioural and online footprints with machine learning. Text data in particular has long been considered as a valid source of information on psychological processes. As early as 1982reported that patients could be classified into groups suffering from depression and paranoia based on linguistic speech analysis. More recent workhas demonstrated that personal characteristics and traits can be inferred from text and other digital footprints on social media platforms. Others have demonstrated that these data can be used to predict individual well-beingand future mental illness.

Particularly well explored is depression, including comorbidity such as self-harm, and anorexia. Quantifiable signals in Twitter data relevant to bipolar disorder, major depressive disorder, post-traumatic-stress disorder and seasonal affective disorder were demonstrated by, who constructed data-based models capable of separating diagnosed from control users for each disorder. These systematic reviews,, provide an overview of these approaches.

Another growing body of research suggests that more fine-grained behavioural and contextual data that can be collected with of-the-shelf smartphones allow for similarly accurate predictions of psychological phenomena with much smaller samples. Computational inferences from mobile sensing data include a number of psychologically-relevant individual differences including demographics, moral values, and personality traits. Latest work, has started to explore the feasibility to predict clinical depression levels using messaging texts and sensor readings from smartphones. Finally, efforts to recognise unstable psychological states such as affective-emotional statesor cognitionproved more challenging and constitute an area of ongoing research. The algorithmic recognition of these states is highly relevant to the understanding of more complex psychological phenomena such as depression, yet more difficult to achieve.

Extant evidence sufficiently suggests that information about psychological traits and mental health states can be linked to digital behavioural footprints. However, it should be noted that despite a large body of research literature on the subject, obtaining clinically valid diagnostic information on individuals is challenging. This, as discussed in, leads to subsidiary “proxy diagnostic signals”, meaning characteristic online behaviours, being used instead in this kind of research.also report that these diagnostic signals lead to models with poor external validity, cautioning against their use for clinical decision-making.

While data based tools assessing mental health can certainly be used to help vulnerable individuals – suicide prevention tools have for instance been available on Facebook for more than ten years– their existence also raises several concerns: The general desirability of subsequent interventions has not been democratically agreed upon. Whether the use of data for this purpose can really be characterised as voluntary for Facebook users is not clear, as evidenced by e.g. the public dispute of the study `Experimental Evidence of Massive-Scale Emotional Contagion Through Social Networks’, including its editorial expression of concern. Showing that social media data can be used for emotional contagion – “leading people to experience (…) emotions without their awareness” –, this study together with the existing body of literature lead to the conclusion that not only can data and machine learning models be used to detect emotional states and mental health conditions, but that such models are already being successfully developed and used to change them.

Due to this fact caution is warranted in the way how these normally protected information is treated in digital environments.

Towards detecting vulnerability exploitation in machine learning models

As evidenced in the previous section, ML models are being successfully developed with the purpose of modelling vulnerability. Thus, we must consider whether models developed for other purposes – for example targeted advertisement – on data that contains information about vulnerability, are in fact modelling and thus exploiting this information incidentally.

As discussed in , there is no single marker for vulnerability: it is not directly represented by data features, and must be deduced. While age is something that can be a data feature, human beings do not have e.g. written somewhere on their body, and no single behaviour is uniquely linked to . Furthermore, vulnerability is context dependent. Therefore, in order to detect vulnerabilities, one would have to test for specific ones, and given the relevant context. To illustrate, we provide a list of examples of vulnerabilities in their contexts, see . This list is certainly not exhaustive, and merely included for illustration. A complete list of vulnerabilities to test for would have to be created by legislative operators in the respective administrative region (e.g., the European Union) and kept updated by national agencies and government organs charged with data and/or consumer protection.

Non-exhaustive list of potential vulnerabilities and corresponding relevant contexts. Abbreviations: Post traumatic stress disorder (PTSD); Generalized anxiety disorder (GDA).

Table Label: tab-vulnerabilities

Download PDF to view tablePsychological conditions that can form the base for vulnerabilities are ascertained by psychologists who in turn have to use self-reported feelings and behavioural tendencies to find out whether a person has a mental illness or vulnerability. Alternatively, machine learning models can use data containing language or behavioural patterns to construct latent features representing mental illness or vulnerability in a person. However, if a model is not specifically constructed to detect a vulnerability, how can we be sure that it hasn’t constructed latent features representing vulnerabilities, and exploits these to achieve its goal?

In order to understand whether and how a machine learning model incorporates vulnerabilities, we need to be able to answer some questions about this suspected model. It should also be clear who is able to understand the relationship between the predictions of the model and a specified vulnerability. In addition, there needs to be an understanding of how to sufficiently empower concerned stakeholders to enforce necessary changes to the suspected model and to mitigate the creation and the use of ethically undesirable models in general. Namely, not to simply impose restrictive regulations that prohibit certain model use, but rather to create tools to detect and measure what models do wrong and when.

Information contained in data

Is sufficient information about a given vulnerability contained in the training data of the model?

A data based model consisting only of operations on the input data – which is the case for all ML models, including neural networks – cannot add information beyond what is present in the input data. This is formalised in the Data Processing Inequality, stating that post-processing cannot increase information. Therefore, the necessary information for detecting a vulnerability must be present in the data available to the model under scrutiny. If it is, then there is a possibility that the model uses this information, although this might not be the prime objective of the model.

In order to model the manic phase in a bipolar disorder, the data used by the model must contain either direct or indirect information about manic behaviour.

Information about mental states and vulnerability can either be directly available (e.g., questionnaire data, estimates from models), or indirectly through correlation structures in the data. For example, depression symptoms can be characterised by feelings of sadness, fearfulness, emptiness or hopelessness – information that is frequently expressed in self-statements (e.g., text messages, see). Depressive episodes can also be indicated by more basic behavioural patterns such as unusual diurnal activity (i.e., sleep irregularities) or reduced physical activity, which can also be reflected in digital behavioural data. Knowing common symptoms of depression (e.g. from), we can flag data sets containing information about these behaviours as disposed for modelling depression.

Model behaviour

Are vulnerable groups systematically treated differently by the model?

Assuming we know the true vulnerability status of individuals, we can either monitor the predictions of the model under scrutiny, or attempt to directly compare the model to one that is known to incorporate the vulnerability of interest. The former is done by systematically analysing the predictions of the model and investigate whether there is a high correlation with the predictions of vulnerability.

Assume that we have a data set containing true labels of low impulse control in individuals, and want to investigate whether a targeted advertisement recommender model exploits the vulnerability of these individuals to cause compulsive buying behaviour. Then, we could let the recommender model predict on the data describing the low impulse control individuals as well as a control group consisting of individuals not sharing the vulnerability, and compare the scores assigned to all individuals by the model. If a significantly higher number of individuals with low impulse control are identified as potential buyers by the recommender model, then this model is likely to exploit knowledge of the low impulse control in its prediction. This approach is possible in the case of compulsive buying, since we know that it is possible to predict compulsive buying from pathological personality traits, see e.g..

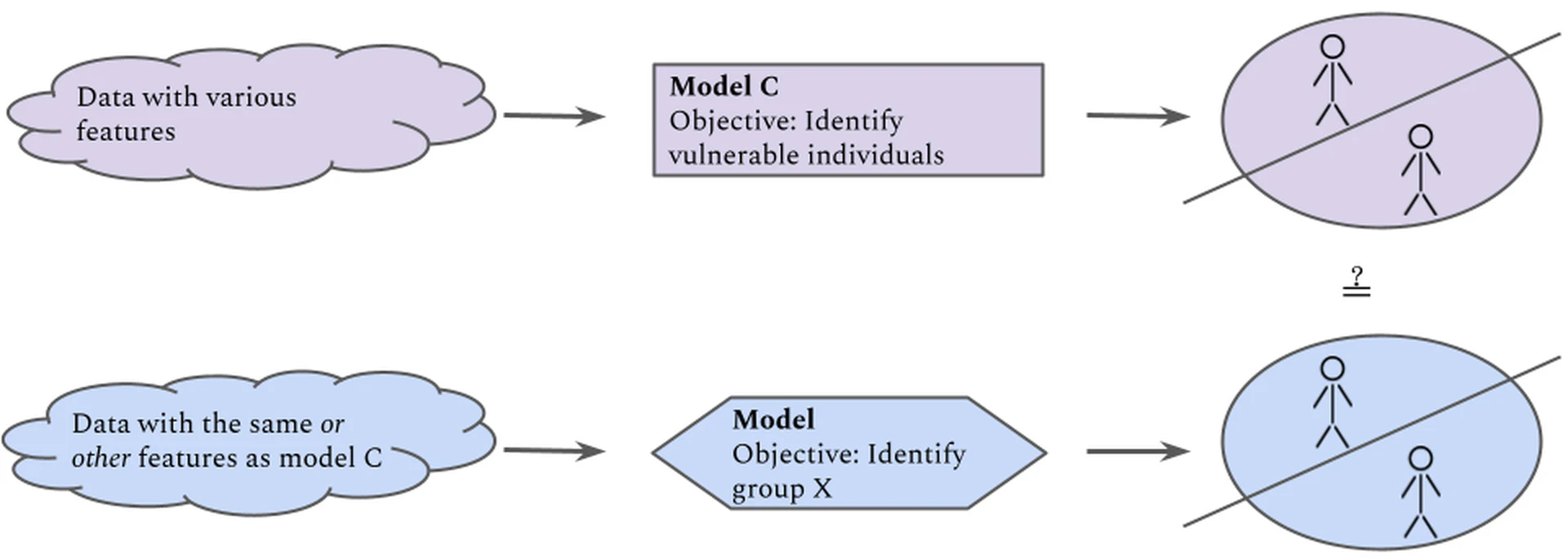

The second approach relies on the availability of a model that intentionally predicts the vulnerability of interest and that could serve as a “ground truth” source. Here, we give an example to illustrate this approach.

We can train a model $C$ to detect in individuals, and construct a test data set consisting of both individuals having as well as non-afflicted individuals. We can then compare directly how many of the individuals assigned the vulnerability label by $C$ are also identified by the model under scrutiny, either by being assigned to the same class (in the case of classification) or given similar scores (in the case of regression). This method is visualised in . Note that the features each model is trained on need not be the same in order to give the two models the same information about an individual’s vulnerability; most behavioural data are proxy variables.

XAI and existing methods

Has the model built an internal representation of vulnerability?

This approach is introspective, i.e. it involves investigating the internal parameters of the model in order to infer whether these contain sufficient information to separate vulnerable from non-vulnerable individuals. Restricting ourselves to neural networks, we can use methods allowing us to probe the internal state of a neural network for concepts, as described in. Interpreting neural networks’ latent features as human concepts has been studied for a variety of data and model types, including for words, images, and chess.

If we regard a specific vulnerability, for instance , as a concept, we can use concept activation methods to find out whether a neural network model has internalised a representation of this vulnerability as follows. A labelled data set consisting of people with and without the affliction is assembled, and used as positive and negative probes for the concept. Providing this data to the neural network, we collect the activations of its internal layers, and investigate whether these activations can be used to separate the afflicted individuals from the non afflicted individuals in the test data set. The accuracy with which this can be done indicates to which degree the neural network has represented the vulnerability concept. If we can identify representation of a concept by a late layer inside the neural network, or throughout the entire model, we can assume that it has found this information useful andmakes use of it for itsits classification.

Does the model assign high importance to features associated with a vulnerability?

Feature importance attribution methods can be used in order to determine which features have a high impact on a model’s prediction, i.e. which features are “perceived as important by the model itself”. Popular libraries for generating feature attributions include SHAP, SAGE, and LIME. Based on the resulting feature importances, a domain expert on human psychology can assess whether the model makes use of features that are informative with respect to a vulnerability.

Fairness and vulnerability

The concern that a decision-making algorithm, regardless of whether it is model-based or rule-based, treats disadvantaged groups of people differently, falls within the domain of fairness in AI. Fairness in AI is concerned with protecting the ethical value of justice. The justice principle is concerned with how people are treated and embodies the idea that decisions about individuals should be based on just arguments, the consequence of which is that similar people should be treated similarly. Group fairness in particular is concerned with ensuring that decisions to recommend an allocation of a resource, such as a job interview, are not directly or indirectly biased with respect to a legally protected feature of the applicant, for example race.

The fundamental question of fairness is “Is a particular group in treated unjustly or harmed?”. The group in question can be defined by protected attributes, such as race, religion, gender, etc. In principle, groups can also be characterised by vulnerability, and group fairness methods can be applied to issues of detecting vulnerability and de-biasing of a model used in a decision-making algorithm. In practice this might be difficult.

In order to use fairness metrics to detect vulnerability bias in decision-making, knowing the exact set of features that characterise that vulnerability is necessary. The set of features that characterise a protected group is always given, typically defined by law. On the other hand,for many mental health conditions, a clear set of diagnostic features is not available.

Most group fairness metrics assume the existence of a desirable model classification and measure the proportionality of two groups being classified. The de-biasing methods for ML models are a collection of methods that deploy different changes in the decision-making pipeline, which result in enforcing proportionality in desirable and undesirable decisions across two groups. At first glance, such methods could be shoehorned to change the proportion of vulnerable group members vs others that are, for example, targeted with a particular link. But these methods are effective in adjusting small differences and trading off model accuracy for group proportionality. If the overlap between vulnerable people and targeted people is large, current de-biasing methods would not be effective.

Challenges and call to action

We argue that methods for determining whether a model is in fact exploiting vulnerabilities must be developed and integrated in auditory frameworks. In this section, we first address challenges associated with developing such methods in , before pointing to the protections for vulnerable groups in specific regulation in .

Data describing vulnerabilities

In order to apply the model monitoring, model comparison or concept detection approaches described in Examples 2, 3 and 4 respectively, labelled data sets describing vulnerable individuals would have to be created. This immediately poses an ethical challenge: we would have to decide whether collecting and labelling data describing vulnerabilities is unethical, although the purpose is to identify and regulate models that do this. As we regard the creation, storage and use of such data sets as too risky to defend the potential gain, we wish to point out a possible alternative venue of research involving synthetic data. It could be possible to generate synthetic data, based on psychological descriptions of vulnerability traits. Similar venues are currently being explored in the context of medical data, to facilitate training ML models for clinical decision support without having to collect and store medical data, although not without challenges of its own.

Intentionally modelling vulnerability

There are also challenges associated with the creation and use of models trained to identify a state that can constitute a vulnerability, i.e. with the approach described in Example 2 and creating “model $C$”. The development of such models requires diagnostic, meaning sensitive and privacy protected, information about individuals. Hence there are many challenges and professional requirements that need to be met for safe and ethical handling and development of such models.

It has been argued[See for examplefor the discussion regarding ML use in detecting sexual orientation.] that models with such capabilities should not be developed due to the risk of abuse. However, we argue that the existence of models accidentally or covertly having the capabilities of identifying vulnerabilities constitutes a far greater risk of abuse – understanding something is often better than dogmatically banning it. This opens up a discussion on proportionality which is common in privacy law, and outside the scope of this paper. Still, we wish to point out the interesting trade-off that arises as developing type $C$ models helps detect accidental vulnerability modelling, while focusing the attention on difficult questions such as “Who should develop type $C$ models? Who should be trusted with using type $C$ models to test models already deployed? Can type $C$ models be distributed, or does their containing latent features describing vulnerability force us to consider them as containing sensitive information?

We most adamantly do not intend to argue that all platforms using models trained on publicly available behavioural data should also ask their users to provide sensitive data about their mental health status, in order to develop type $C$ models for testing.

Microtargeting and regulation

Theapproaches we describe in section sec-approach require access to the models themselves in addition to labelled data containing vulnerable individuals. Researchers and private initiatives typically do not have access to the commonly used recommender systems nor to the necessary data. Since many of the models that should be tested for vulnerability modelling are proprietary, we cannot require or expect that research groups be given access to these. It is only regulatory and supervisory authorities that can require such access in auditory processes. However, an effective regulatory framework for the protection of vulnerable individuals in AI may be hard to attain. The core problem with establishing and enforcing regulation is articulating the concern: what are the issues that we would like to avoid and where do they occur.

One area in which there is an articulated concern, and some legal protection, for the identification andexploitation of vulnerable states is targeted advertising, or rather its specific digital version called `microtargeting'.

Targeted advertising is advertising directed at an audience with certain characteristics. Although this type of marketing has always existed, the availability of data traces and online advertising has created the option to microtarget audiences and customers. Microtargeting is the practice ofusing data mining and AI techniques to infer personal traits of people and use them to adjust the marketing campaign to those traits. Micro-targetinggives advertisers the ability to exploit recipients’ personal characteristics and potential vulnerabilities.

It is not entirely clear how effective micro-targeting is in persuading individuals. What is clear is that micro-targeting at present is not transparent as to what data it uses to tailor exposure to content. If exploitation of vulnerabilities is happening, there are no mechanisms to detect it and mitigate it.

Online targeted advertisement is subject to data related and regulations in the European Union.identifythe following rules on online targeted advertising:

- the ePrivacy Directive (Directive 2002/58/ED, as amended);

- the GDPR (Regulation (EU) 2016/679, as amended);

- the eCommerce Directive (Directive 2000/31/EC);

- the Unfair Commercial Practices Directive (Directive 2005/29/EC, as amended);

- the Directive on Misleading and Comparative Advertising (Directive 2006/114/EC, as amended);

- the Audiovisual Media Services Directive (Directive (EU) 2018/1808);

- the Consumer Rights Directive (Directive 2011/83/EU, as amended);

- The Digital Markets Act (“DMA”); and

- The Digital Services Act (“DSA”)

Most of these regulations are concerned with obtaining the informed consent from a user for the processing of their data, as well as clearly indicating which legal or natural person has commissioned the advertising. The Audiovisual Media Services Directive prohibits the use of surreptitious or subliminal techniques of advertising when those cannot be readily recognised as such, however, micro-targeting is not considered a surreptitious or subliminal technique.

Most regulations are not explicitly concerned with vulnerable individuals. An exception is the recently agreed Digital Services Act (DSA). Article 63 specifies:

The obligations on assessment and mitigation of risks should trigger, on a case-by-case basis, the need for providers of very large online platforms and of very large online search engines to assess and, where necessary, adjust the design of their recommender systems, for example by taking measures to prevent or minimise biases that lead to the discrimination of persons in vulnerable situations, in particular where this is in conformity with data protection law and when the information is personalised on the basis of special categories of personal data, within the meaning of Article 9 of the Regulation (EU) 2016/679. In addition, and complementing the transparency obligations applicable to online platforms as regards their recommender systems, providers of very large online platforms and of very large online search engines should consistently ensure thatrecipients of their service enjoy alternative options which are not based on profiling, within the meaning of Regulation (EU) 2016/679, for the main parameters of their recommender systems. Such choices should be directly accessible from the interface where the recommendations are presented.

Concern and request for protection for vulnerable recipients occurs twice in the DSA, in both case minors are used as an example of such group of recipients.

Conclusion

In the very same way that specific vulnerabilities can lead to a feeling of being overwhelmed in the affected person, it seems that machine learning engineers, policy makers, and ethics specialists shy away from addressing them in the design and audit of autonomous learning systems. Both conditions need to be ameliorated. We are only now beginning to explore the power of behavioural prediction models and their potential for monetising.

To to protect vulnerable individuals and groups, three essential conditions must be met: i) understanding how vulnerable states can be exploited, ii) detecting when information about vulnerability is being used by an algorithm, iii) and creating social and financial incentives for preventing exploitation of vulnerabilities.

The study of the relationships between individuals’ vulnerabilities and their online behaviours, which may be exploited or monetised, falls within the realm of the behavioural sciences. This research can lead to the development of technology and laws that help prevent exploitation, but it also has the potential to aid exploiters in their efforts. One often proposed solution to this dilemma is to prohibit research that aims to understand such correlational patterns. However, this approach also has drawbacks, as it may prevent the advancement of knowledge and the creation of interventions to help vulnerable individuals.

Around the clock observation can be a privilege in monitoring a condition for those who can afford it (e.g., relapse prediction). It can also be a tool for oppression through surveillance. Combined with modern tools offering cheap and consistent unobtrusive observation, ML can help us understand mental health conditions that we otherwise would not be able to afford. Dishonest actors will always be able to identify exploitable vulnerabilities because complete and outright prohibition of methods whose impact we do not fully understand is not feasible.

We propose several approaches to identifying if an ML model uses information related to vulnerability. It is difficult to evaluate the approaches we propose because data sets to do so are not available. In domains in which we can easily suspect information on vulnerabilities is used, such as targeted advertising, the information is on who is being offered which content is understandably hard to come by. One approach would be to consider proxy domains, such as recommendations of movies or songs and look into who is being recommended what type of entertainment. This is what theinvestigation attempted.We would still be left with the problem of having access to the information of the actual mental state of individuals.This is not information that should be publicly available. This is why iii) is not only needed but necessary.

Advertising is an example of a context in which we can clearly see how vulnerabilities can be monetised and exploited. It is not necessarily the only such example. And even in this context, regulation is hard to agree on and enforce in time. In order to make AI safe and trustworthy, it is imperative that regulators engage in a debate and collaborate with experts in AI ethics, XAI and the behavioural sciences, to understand vulnerabilities and to regulate the domain.

Acknowledgement. The contribution of C.S. was partially funded by a research collaboration with armasuisse (contract: 8203004934) and by an internal University of Bergen grant for visiting researchers.

Bibliography

1@misc{pargent2022,

2 month = {Apr},

3 year = {2022},

4 author = {Pargent, Florian and Schoedel, Ramona and Stachl, Clemens},

5 publisher = {PsyArXiv},

6 doi = {10.31234/osf.io/89snd},

7 url = {psyarxiv.com/89snd},

8 title = {Best Practices in Supervised Machine Learning: A Tutorial for Psychologists},

9}

10

11@article{Saeb2016,

12pages = {e2537},

13month = {Sep},

14year = {2016},

15author = {Saeb, Sohrab and Lattie, Emily G and Schueller, Stephen M and Kording, Konrad P and Mohr, David C},

16journal = {PeerJ},

17doi = {10.7717/peerj.2537},

18issn = {2167/8359},

19volume = {4},

20title = {The relationship between mobile phone location sensor data and depressive symptom severity},

21}

22

23@article{Shneiderman2020,

24pages = {495/504},

25month = {Apr},

26year = {2020},

27author = {Shneiderman, Ben},

28publisher = {Taylor {\&} Francis},

29journal = {International Journal of Human-Computer Interaction},

30number = {6},

31doi = {10.1080/10447318.2020.1741118},

32issn = {1044-7318},

33volume = {36},

34title = {Human-Centered Artificial Intelligence: Reliable, Safe {\&} Trustworthy},

35}

36

37@article{Koch2022,

38pages = {106990},

39month = {Jan},

40year = {2022},

41author = {Koch, Timo and Romero, Peter and Stachl, Clemens},

42publisher = {Pergamon},

43journal = {Computers in Human Behavior},

44doi = {10.1016/j.chb.2021.106990},

45issn = {07475632},

46volume = {126},

47title = {Age and gender in language, emoji, and emoticon usage in instant messages},

48}

49

50@article{Malmi2016,

51language = {en},

52pages = {635/638},

53year = {2016},

54author = {Malmi, Eric and Weber, Ingmar},

55journal = {Proceedings of the International AAAI Conference on Web and Social Media},

56number = {11},

57doi = {10.1609/icwsm.v10i1.14776},

58issn = {2334/0770},

59rights = {Copyright (c) 2021 Proceedings of the International AAAI Conference on Web and Social Media},

60volume = {10},

61title = {You Are What Apps You Use: Demographic Prediction Based on User`s Apps},

62}

63

64@article{Sundsoy2016,

65numpages = {6},

66eprint = {1612.03870},

67eprinttype = {arXiv},

68url = {http://arxiv.org/abs/1612.03870},

69year = {2016},

70volume = {abs/1612.03870},

71journal = {CoRR},

72title = {Estimating individual employment status using mobile phone network

73data},

74author = {P{\aa}l Sunds{\o}y and

75Johannes Bjelland and

76Bj{\o}rn{-}Atle Reme and

77Eaman Jahani and

78Erik Wetter and

79Linus Bengtsson},

80}

81

82@article{Insel2017,

83pages = {1215-1216},

84month = {Oct},

85year = {2017},

86author = {Insel, Thomas R.},

87journal = {JAMA},

88number = {13},

89doi = {10.1001/jama.2017.11295},

90issn = {0098-7484},

91volume = {318},

92title = {Digital Phenotyping: Technology for a New Science of Behavior},

93}

94

95@article{Goerigk2020,

96pages = {153/168},

97year = {2020},

98author = {Goerigk, Stephan and Hilbert, Sven and Jobst, Andrea and Falkai, Peter and B{\"u}hner, Markus and Stachl, Clemens and Bischl, Bernd and Coors, Stefan and Ehring, Thomas and Padberg, Frank and Sarubin, Nina},

99journal = {European Archives of Psychiatry and Clinical Neuroscience},

100number = {2},

101doi = {10.1007/s00406/018/0967/2},

102issn = {14338491},

103volume = {270},

104title = {Predicting instructed simulation and dissimulation when screening for depressive symptoms},

105}

106

107@article{Moshe2021,

108language = {English},

109year = {2021},

110author = {Moshe, Isaac and Terhorst, Yannik and Opoku Asare, Kennedy and Sander, Lasse Bosse and Ferreira, Denzil and Baumeister, Harald and Mohr, David C. and Pulkki-Råback, Laura},

111publisher = {Frontiers},

112journal = {Frontiers in Psychiatry},

113doi = {10.3389/fpsyt.2021.625247},

114url = {https://www.frontiersin.org/articles/10.3389/fpsyt.2021.625247/full},

115issn = {1664-0640},

116volume = {0},

117title = {Predicting Symptoms of Depression and Anxiety Using Smartphone and Wearable Data},

118}

119

120@misc{kim_tcav,

121url = {http://proceedings.mlr.press/v80/kim18d/kim18d.pdf},

122year = {2018},

123author = {Been Kim and Martin Wattenberg and Justin Gilmer and Carrie Jun Cai and James Wexler and Fernanda Viegas and Rory Abbott Sayres},

124title = {Interpretability Beyond Feature Attribution: Quantitative Testing with Concept Activation Vectors (TCAV)},

125}

126

127@inbook{Klenk2020,

128language = {en},

129collection = {Philosophical Studies Series},

130pages = {81-100},

131year = {2020},

132author = {Klenk, Michael},

133publisher = {Springer International Publishing},

134booktitle = {Ethics of Digital Well-Being: A Multidisciplinary Approach},

135doi = {10.1007/978-3-030-50585-1_4},

136url = {https://doi.org/10.1007/978-3-030-50585-1_4},

137isbn = {978-3-030-50585-1},

138title = {Digital Well-Being and Manipulation Online},

139series = {Philosophical Studies Series},

140address = {Cham},

141}

142

143@incollection{Dsm5,

144address = {Washington DC},

145publisher = {American Psychiatric Association Publishing},

146language = {en},

147year = {2022},

148author = {{American Psychiatric Association}},

149edition = {5},

150doi = {10.1176/appi.books.9780890425787},

151url = {https://doi.org/10.1176/appi.books.9780890425787},

152booktitle = {Diagnostic and statistical manual of mental disorders},

153title = {Major depressive disorder},

154}

155

156@article{alphazero_concepts,

157eprint = {https://www.pnas.org/doi/pdf/10.1073/pnas.2206625119},

158url = {https://www.pnas.org/doi/abs/10.1073/pnas.2206625119},

159doi = {10.1073/pnas.2206625119},

160year = {2022},

161pages = {e2206625119},

162number = {47},

163volume = {119},

164journal = {Proceedings of the National Academy of Sciences},

165title = {Acquisition of chess knowledge in AlphaZero},

166author = {Thomas McGrath and Andrei Kapishnikov and Nenad Tomašev and Adam Pearce and Martin Wattenberg and Demis Hassabis and Been Kim and Ulrich Paquet and Vladimir Kramnik },

167}

168

169@article{Schoedel2020,

170pages = {733-752},

171month = {May},

172year = {2020},

173editor = {Rauthmann, John},

174author = {Schoedel, Ramona and Pargent, Florian and Au, Quay and V{\"o}lkel, Sarah Theres and Schuwerk, Tobias and B{\"u}hner, Markus and Stachl, Clemens},

175publisher = {John Wiley & Sons, Ltd},

176journal = {European Journal of Personality},

177number = {5},

178note = {Citation Key: Schoedel2020},

179doi = {10.1002/per.2258},

180issn = {10990984},

181volume = {34},

182title = {To Challenge the Morning Lark and the Night Owl: Using Smartphone Sensing Data to Investigate Day-Night Behaviour Patterns},

183}

184

185@inproceedings{NIPS2013_words,

186series = {NIPS'13},

187location = {Lake Tahoe, Nevada},

188numpages = {9},

189pages = {3111-3119},

190booktitle = {Proceedings of the 26th International Conference on Neural Information Processing Systems -- Volume 2},

191abstract = {The recently introduced continuous Skip-gram model is an efficient method for learning high-quality distributed vector representations that capture a large number of precise syntactic and semantic word relationships. In this paper we present several extensions that improve both the quality of the vectors and the training speed. By subsampling of the frequent words we obtain significant speedup and also learn more regular word representations. We also describe a simple alternative to the hierarchical softmax called negative sampling.An inherent limitation of word representations is their indifference to word order and their inability to represent idiomatic phrases. For example, the meanings of "Canada" and "Air" cannot be easily combined to obtain "Air Canada". Motivated by this example, we present a simple method for finding phrases in text, and show that learning good vector representations for millions of phrases is possible.},

192address = {Red Hook, NY, USA},

193publisher = {Curran Associates Inc.},

194year = {2013},

195title = {Distributed Representations of Words and Phrases and Their Compositionality},

196author = {Mikolov, Tomas and Sutskever, Ilya and Chen, Kai and Corrado, Greg and Dean, Jeffrey},

197}

198

199@article{Vaerenbergh2013,

200pages = {195-217},

201year = {2013},

202author = {Vaerenbergh, Yves Van and Thomas, Troy D.},

203journal = {International Journal of Public Opinion Research},

204number = {2},

205note = {Citation Key: Vaerenbergh2013},

206doi = {10.1093/ijpor/eds021},

207issn = {0954-2892},

208volume = {25},

209title = {Response styles in survey research: A literature review of antecedents, consequences, and remedies},

210}

211

212@article{Mueller2021,

213language = {en},

214pages = {14007},

215month = {Jul},

216year = {2021},

217author = {M{\"u}ller, Sandrine R. and Chen, Xi (Leslie) and Peters, Heinrich and Chaintreau, Augustin and Matz, Sandra C.},

218publisher = {Nature Publishing Group},

219journal = {Scientific Reports},

220number = {11},

221doi = {10.1038/s41598-021-93087-x},

222issn = {2045-2322},

223rights = {2021 The Author(s)},

224volume = {11},

225title = {Depression predictions from GPS-based mobility do not generalize well to large demographically heterogeneous samples},

226}

227

228@inproceedings{concepts_gans,

229address = {New York, USA},

230publisher = {IEEE},

231doi = {10.1109/ICCV.2017.244},

232pages = {2242-2251},

233number = {},

234volume = {},

235year = {2017},

236title = {Unpaired Image-to-Image Translation Using Cycle-Consistent Adversarial Networks},

237booktitle = {2017 IEEE International Conference on Computer Vision (ICCV)},

238author = {Zhu, Jun-Yan and Park, Taesung and Isola, Phillip and Efros, Alexei A.},

239}

240

241@article{Panicheva2022,

242language = {en},

243pages = {1-43},

244month = {Dec},

245year = {2022},

246author = {Panicheva, Polina and Mararitsa, Larisa and Sorokin, Semen and Koltsova, Olessia and Rosso, Paolo},

247publisher = {SpringerOpen},

248journal = {EPJ Data Science},

249number = {11},

250doi = {10.1140/epjds/s13688-022-00333-x},

251issn = {2193-1127},

252rights = {2022 The Author(s)},

253volume = {11},

254title = {Predicting subjective well-being in a high-risk sample of Russian mental health app users},

255}

256

257@inproceedings{Lundberg2017AUA,

258series = {NIPS'17},

259location = {Long Beach, California, USA},

260numpages = {10},

261pages = {4768-4777},

262booktitle = {Proceedings of the 31st International Conference on Neural Information Processing Systems},

263abstract = {Understanding why a model makes a certain prediction can be as crucial as the prediction's accuracy in many applications. However, the highest accuracy for large modern datasets is often achieved by complex models that even experts struggle to interpret, such as ensemble or deep learning models, creating a tension between accuracy and interpretability. In response, various methods have recently been proposed to help users interpret the predictions of complex models, but it is often unclear how these methods are related and when one method is preferable over another. To address this problem, we present a unified framework for interpreting predictions, SHAP (SHapley Additive exPlanations). SHAP assigns each feature an importance value for a particular prediction. Its novel components include: (1) the identification of a new class of additive feature importance measures, and (2) theoretical results showing there is a unique solution in this class with a set of desirable properties. The new class unifies six existing methods, notable because several recent methods in the class lack the proposed desirable properties. Based on insights from this unification, we present new methods that show improved computational performance and/or better consistency with human intuition than previous approaches.},

264address = {Red Hook, NY, USA},

265publisher = {Curran Associates Inc.},

266isbn = {9781510860964},

267year = {2017},

268title = {A Unified Approach to Interpreting Model Predictions},

269author = {Lundberg, Scott M. and Lee, Su-In},

270}

271

272@inproceedings{covert_sage,

273year = {2020},

274volume = {33},

275address = {One Broadway 12th Floor Cambridge, MA 02142},

276url = {https://proceedings.neurips.cc/paper/2020/file/c7bf0b7c1a86d5eb3be2c722cf2cf746-Paper.pdf},

277title = {Understanding Global Feature Contributions With Additive Importance Measures},

278publisher = {Curran Associates, Inc.},

279pages = {17212--17223},

280editor = {H. Larochelle and M. Ranzato and R. Hadsell and M.F. Balcan and H. Lin},

281booktitle = {Advances in Neural Information Processing Systems},

282author = {Covert, Ian and Lundberg, Scott M and Lee, Su-In},

283}

284

285@article{Allport1942,

286pages = {71},

287year = {1942},

288author = {Allport, Gordon Willard},

289journal = {Marriage and Family Living},

290number = {3},

291volume = {4},

292title = {The use of personal documents in psychological science.},

293}

294

295@article{Stachl2021PS,

296pages = {1-22},

297month = {Jul},

298year = {2021},

299author = {Stachl, Clemens and Boyd, Ryan L. and Horstmann, Kai T. and Khambatta, Poruz and Matz, Sandra C. and Harari, Gabriella M.},

300journal = {Personality Science},

301doi = {10.5964/PS.6115},

302issn = {2700-0710},

303volume = {2},

304title = {Computational Personality Assessment},

305}

306

307@misc{Rauber2019,

308copyright = {arXiv.org perpetual, non-exclusive license},

309year = {2019},

310publisher = {arXiv},

311title = {Modeling patterns of smartphone usage and their relationship to cognitive health},

312keywords = {Machine Learning (cs.LG), Human-Computer Interaction (cs.HC), Machine Learning (stat.ML), FOS: Computer and information sciences, FOS: Computer and information sciences},

313author = {Rauber, Jonas and Fox, Emily B. and Gatys, Leon A.},

314url = {https://arxiv.org/abs/1911.05683},

315doi = {10.48550/ARXIV.1911.05683},

316}

317

318@inproceedings{lime,

319series = {KDD '16},

320location = {San Francisco, California, USA},

321keywords = {explaining machine learning, interpretable machine learning, black box classifier, interpretability},

322numpages = {10},

323pages = {1135-1144},

324booktitle = {Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining},

325doi = {10.1145/2939672.2939778},

326url = {https://doi.org/10.1145/2939672.2939778},

327address = {New York, NY, USA},

328publisher = {Association for Computing Machinery},

329isbn = {9781450342322},

330year = {2016},

331title = {"Why Should I Trust You?": Explaining the Predictions of Any Classifier},

332author = {Ribeiro, Marco Tulio and Singh, Sameer and Guestrin, Carlos},

333}

334

335@inproceedings{Gordon2019,

336pages = {1-12},

337month = {May},

338year = {2019},

339author = {Gordon, Mitchell L. and Garys, Leon and Guestrin, Carlos and Bigham, Jeffrey P. and Trister, Andrew and Patel, Kayur},

340publisher = {Association for Computing Machinery},

341booktitle = {Conference on Human Factors in Computing Systems - Proceedings},

342doi = {10.1145/3290605.3300398},

343url = {http://dl.acm.org/citation.cfm?doid=3290605.3300398},

344isbn = {978-1-4503-5970-2},

345title = {App usage predicts cognitive ability in older adults},

346address = {New York, New York, USA},

347}

348

349@article{Israel2020,

350pages = {1-19},

351month = {Aug},

352year = {2020},

353volume = {53},

354author = {Israel, Laura S. F. and Sch{\"o}nbrodt, Felix D.},

355publisher = {Springer},

356journal = {Behavior Research Methods},

357doi = {10.3758/s13428-020-01435-y},

358issn = {1554-3528},

359title = {Predicting affective appraisals from facial expressions and physiology using machine learning},

360}

361

362@article{Liu2022,

363pages = {7-14},

364month = {Apr},

365year = {2022},

366author = {Liu, Tony and Meyerhoff, Jonah and Eichstaedt, Johannes C. and Karr, Chris J. and Kaiser, Susan M. and Kording, Konrad P. and Mohr, David C. and Ungar, Lyle H.},

367publisher = {Elsevier},

368journal = {Journal of Affective Disorders},

369doi = {10.1016/J.JAD.2021.12.048},

370issn = {0165-0327},

371volume = {302},

372title = {The relationship between text message sentiment and self-reported depression},

373}

374

375@article{Stachl2020PNAS,

376abstract = {Smartphones enjoy high adoption rates around the globe. Rarely more than an arm’s length away, these sensor-rich devices can easily be repurposed to collect rich and extensive records of their users’ behaviors (e.g., location, communication, media consumption), posing serious threats to individual privacy. Here we examine the extent to which individuals’ Big Five personality dimensions can be predicted on the basis of six different classes of behavioral information collected via sensor and log data harvested from smartphones. Taking a machine-learning approach, we predict personality at broad domain (rmedian = 0.37) and narrow facet levels (rmedian = 0.40) based on behavioral data collected from 624 volunteers over 30 consecutive days (25,347,089 logging events). Our cross-validated results reveal that specific patterns in behaviors in the domains of 1) communication and social behavior, 2) music consumption, 3) app usage, 4) mobility, 5) overall phone activity, and 6) day- and night-time activity are distinctively predictive of the Big Five personality traits. The accuracy of these predictions is similar to that found for predictions based on digital footprints from social media platforms and demonstrates the possibility of obtaining information about individuals’ private traits from behavioral patterns passively collected from their smartphones. Overall, our results point to both the benefits (e.g., in research settings) and dangers (e.g., privacy implications, psychological targeting) presented by the widespread collection and modeling of behavioral data obtained from smartphones.},

377eprint = {https://www.pnas.org/doi/pdf/10.1073/pnas.1920484117},

378url = {https://www.pnas.org/doi/abs/10.1073/pnas.1920484117},

379doi = {10.1073/pnas.1920484117},

380year = {2020},

381pages = {17680-17687},

382number = {30},

383volume = {117},

384journal = {Proceedings of the National Academy of Sciences},

385title = {Predicting personality from patterns of behavior collected with smartphones},

386author = {Clemens Stachl and Quay Au and Ramona Schoedel and Samuel D. Gosling and Gabriella M. Harari and Daniel Buschek and Sarah Theres V{\"o}lkel and Tobias Schuwerk and Michelle Oldemeier and Theresa Ullmann and Heinrich Hussmann and Bernd Bischl and Markus B{\"u}hner },

387}

388

389@article{Azucar2018,

390pages = {150-159},

391month = {Apr},

392year = {2018},

393author = {Azucar, Danny and Marengo, Davide and Settanni, Michele},

394publisher = {Pergamon},

395doi = {10.1016/j.paid.2017.12.018},

396issn = {01918869},

397journal = {Personality and Individual Differences},

398volume = {124},

399title = {Predicting the Big 5 personality traits from digital footprints on social media: A meta-analysis},

400}

401

402@misc{lexico,

403note = {[Online; accessed 18-January-2018]},

404howpublished = {\url{https://en.oxforddictionaries.com/definition/vulnerability}},

405year = {2018},

406author = {{Oxford University Press}},

407title = {English Oxford Living Dictionaries},

408}

409

410@misc{medium_stereotypes,

411note = {[Online; accessed 01-January-2022]},

412year = {2022},

413howpublished = {\url{https://medium.com/@blaisea/do-algorithms-reveal-sexual-orientation-or-just-expose-our-stereotypes-d998fafdf477}},

414title = {Do algorithms reveal sexual orientation or just expose our stereotypes?},

415author = {Ag{\"u}era y Arcas, Blaise and Todorov, Alexander and Mitchell, Margaret},

416}

417

418@misc{who_depression,

419accessed = {14 December 2021},

420url = {http://ghdx.healthdata.org/gbd-results-tool?params=gbd-api-2019-permalink/d780dffbe8a381b25e1416884959e88b},

421institution = {Global Health Data Exchange (GHDx)},

422author = {{Institute of Health Metrics and Evaluation}},

423}

424

425@inproceedings{abbasi2019fairness,

426organization = {SIAM},

427year = {2019},

428pages = {801-809},

429booktitle = {Proceedings of the 2019 SIAM International Conference on Data Mining},

430author = {Abbasi, Mohsen and Friedler, Sorelle A and Scheidegger, Carlos and Venkatasubramanian, Suresh},

431title = {Fairness in representation: quantifying stereotyping as a representational harm},

432}

433

434@incollection{martin1990mental,

435address = {U.S.A},

436publisher = {Butterworth Publishers},

437year = {1990},

438edition = {3},

439booktitle = {Clinical Methods: The History, Physical, and Laboratory Examinations},

440author = {Martin, David C},

441title = {The mental status examination},

442}

443

444@article{facebook_depression2018,

445journal = {Proceedings of the National Academy of Sciences},

446eprint = {https://www.pnas.org/content/115/44/11203.full.pdf},

447url = {https://www.pnas.org/content/115/44/11203},

448issn = {0027-8424},

449publisher = {National Academy of Sciences},

450doi = {10.1073/pnas.1802331115},

451year = {2018},

452pages = {11203-11208},

453number = {44},

454volume = {115},

455title = {Facebook language predicts depression in medical records},

456author = {Eichstaedt, Johannes C. and Smith, Robert J. and Merchant, Raina M. and Ungar, Lyle H. and Crutchley, Patrick and Preo{\c t}iuc-Pietro, Daniel and Asch, David A. and Schwartz, H. Andrew},

457}

458

459@article{thorstad2019predicting,

460publisher = {Springer},

461year = {2019},

462pages = {1586-1600},

463number = {4},

464volume = {51},

465journal = {Behavior research methods},

466author = {Thorstad, Robert and Wolff, Phillip},

467title = {Predicting future mental illness from social media: A big-data approach},

468}

469

470@inproceedings{schwartz2014towards,

471pages = {118--125},

472doi = {10.3115/v1/W14-3214},

473url = {https://aclanthology.org/W14-3214},

474publisher = {Association for Computational Linguistics},

475address = {Baltimore, Maryland, USA},

476year = {2014},

477month = {June},

478booktitle = {Proceedings of the Workshop on Computational Linguistics and Clinical Psychology: From Linguistic Signal to Clinical Reality},

479author = {Schwartz, H. Andrew and

480Eichstaedt, Johannes and

481Kern, Margaret L. and

482Park, Gregory and

483Sap, Maarten and

484Stillwell, David and

485Kosinski, Michal and

486Ungar, Lyle},

487title = {Towards Assessing Changes in Degree of Depression through {F}acebook},

488}

489

490@article{inkster2016decade,

491publisher = {Elsevier},

492year = {2016},

493pages = {1087-1090},

494number = {11},

495volume = {3},

496journal = {The Lancet Psychiatry},

497author = {Inkster, Becky and Stillwell, David and Kosinski, Michal and Jones, Peter},

498title = {A decade into Facebook: where is psychiatry in the digital age?},

499}

500

501@inbook{mental_health_some2019,

502numpages = {16},

503pages = {1-16},

504booktitle = {Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems},

505url = {https://doi.org/10.1145/3290605.3300364},

506address = {New York, NY, USA},

507publisher = {Association for Computing Machinery},

508isbn = {9781450359702},

509year = {2019},

510title = {Methodological Gaps in Predicting Mental Health States from Social Media: Triangulating Diagnostic Signals},

511author = {Ernala, Sindhu Kiranmai and Birnbaum, Michael L. and Candan, Kristin A. and Rizvi, Asra F. and Sterling, William A. and Kane, John M. and De Choudhury, Munmun},

512}

513

514@article{tadesse2019detection,

515publisher = {IEEE},

516year = {2019},

517pages = {44883-44893},

518volume = {7},

519journal = {IEEE Access},

520author = {Tadesse, Michael M and Lin, Hongfei and Xu, Bo and Yang, Liang},

521title = {Detection of depression-related posts in reddit social media forum},

522}

523

524@inproceedings{cohan-etal-2018-smhd,

525pages = {1485-1497},

526url = {https://aclanthology.org/C18-1126},

527publisher = {Association for Computational Linguistics},

528address = {Santa Fe, New Mexico, USA},

529year = {2018},

530month = {August},

531booktitle = {Proceedings of the 27th International Conference on Computational Linguistics},

532author = {Cohan, Arman and

533Desmet, Bart and

534Yates, Andrew and

535Soldaini, Luca and

536MacAvaney, Sean and

537Goharian, Nazli},

538title = {{SMHD}: a Large-Scale Resource for Exploring Online Language Usage for Multiple Mental Health Conditions},

539}

540

541@inproceedings{Yates2017DepressionAS,

542abstract = {Users suffering from mental health conditions often turn to online resources for support, including specialized online support communities or general communities such as Twitter and Reddit. In this work, we present a framework for supporting and studying users in both types of communities. We propose methods for identifying posts in support communities that may indicate a risk of self-harm, and demonstrate that our approach outperforms strong previously proposed methods for identifying such posts. Self-harm is closely related to depression, which makes identifying depressed users on general forums a crucial related task. We introduce a large-scale general forum dataset consisting of users with self-reported depression diagnoses matched with control users. We show how our method can be applied to effectively identify depressed users from their use of language alone. We demonstrate that our method outperforms strong baselines on this general forum dataset.},

543pages = {2968--2978},

544doi = {10.18653/v1/D17-1322},

545url = {https://aclanthology.org/D17-1322},

546publisher = {Association for Computational Linguistics},

547address = {Copenhagen, Denmark},

548year = {2017},

549month = {September},

550booktitle = {Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing},

551author = {Yates, Andrew and

552Cohan, Arman and

553Goharian, Nazli},

554title = {Depression and Self-Harm Risk Assessment in Online Forums},

555}

556

557@article{oxman1982language,

558pages = {88-97},

559number = {3},

560volume = {131},

561publisher = {American Psychiatric Assn},

562year = {1982},

563journal = {The American journal of psychiatry},

564author = {Oxman, Thomas E and Rosenberg, Stanley D and Tucker, Gary J},

565title = {The language of paranoia},

566}

567

568@inproceedings{ramiirez2018upf,

569address = {online},

570publisher = {CEUR Workshop Proceedings},

571year = {2018},

572pages = {1-12},

573booktitle = {Cappellato L, Ferro N, Nie JY, Soulier L, editors. Working Notes of CLEF 2018-Conference and Labs of the Evaluation Forum; 2018 Sep 10-14; Avignon, France.[Avignon]: CEUR Workshop Proceedings},

574author = {Ram{\'\i}{\i}rez-Cifuentes, Diana and Freire, Ana},

575title = {UPF's Participation at the CLEF eRisk 2018: Early Risk Prediction on the Internet},

576}

577

578@inproceedings{coppersmith2014quantifying,

579year = {2014},

580address = {Baltimore, Maryland, USA},

581publisher = {Association for Computational Linguistics},

582pages = {51-60},

583booktitle = {Proceedings of the workshop on computational linguistics and clinical psychology: From linguistic signal to clinical reality},

584author = {Coppersmith, Glen and Dredze, Mark and Harman, Craig},

585title = {Quantifying mental health signals in Twitter},

586}

587

588@misc{facebook_suicide_prevention,

589url = {http://newsroom.fb.com/news/2017/03/building-a-safer-community-with-new-suicide-prevention-tools/},

590title = {Building a safer community with new suicide prevention tools},

591publisher = {Facebook},

592year = {2017},

593author = {Callison-Burch, Vanessa and Guadagno, Jennifer and Davis Antigone},

594}

595

596@article{aboureihanimohammadi2020identification,

597year = {2020},

598pages = {1--12},

599number = {4},

600volume = {7},

601journal = {Journal of Cognitive Psychology},

602author = {Aboureihani Mohammadi, M and Fadaei Moghadam Heydarabadi, M and Zardary, S and Heysieattalab, S},

603title = {Identification Psychological Disorders Based on Data in Virtual Environments Using Machine Learning},

604}

605

606@article{arabic_depression2020,

607doi = {10.1109/ACCESS.2020.2981834},

608pages = {57317-57334},

609number = {},

610volume = {8},

611year = {2020},

612title = {Predicting Depression Symptoms in an Arabic Psychological Forum},

613journal = {IEEE Access},

614author = {Alghamdi, Norah Saleh and Hosni Mahmoud, Hanan A. and Abraham, Ajith and Alanazi, Samar Awadh and García-Hernández, Laura},

615}

616

617@article{de2013predicting,

618pages = {128-137},

619month = {Aug.},

620year = {2021},

621author = {De Choudhury, Munmun and Gamon, Michael and Counts, Scott and Horvitz, Eric},

622journal = {Proceedings of the International AAAI Conference on Web and Social Media},

623number = {1},

624abstractnote = { <p> Major depression constitutes a serious challenge in personal and public health. Tens of millions of people each year suffer from depression and only a fraction receives adequate treatment. We explore the potential to use social media to detect and diagnose major depressive disorder in individuals. We first employ crowdsourcing to compile a set of Twitter users who report being diagnosed with clinical depression, based on a standard psychometric instrument. Through their social media postings over a year preceding the onset of depression, we measure behavioral attributes relating to social engagement, emotion, language and linguistic styles, ego network, and mentions of antidepressant medications. We leverage these behavioral cues, to build a statistical classifier that provides estimates of the risk of depression, before the reported onset. We find that social media contains useful signals for characterizing the onset of depression in individuals, as measured through decrease in social activity, raised negative affect, highly clustered egonetworks, heightened relational and medicinal concerns, and greater expression of religious involvement. We believe our findings and methods may be useful in developing tools for identifying the onset of major depression, for use by healthcare agencies; or on behalf of individuals, enabling those suffering from depression to be more proactive about their mental health.},

625doi = {10.1609/icwsm.v7i1.14432},

626url = {https://ojs.aaai.org/index.php/ICWSM/article/view/14432},

627volume = {7},

628title = {Predicting Depression via Social Media},

629}

630

631@inproceedings{orabi2018deep,

632abstract = {Mental illness detection in social media can be considered a complex task, mainly due to the complicated nature of mental disorders. In recent years, this research area has started to evolve with the continuous increase in popularity of social media platforms that became an integral part of people{'}s life. This close relationship between social media platforms and their users has made these platforms to reflect the users{'} personal life with different limitations. In such an environment, researchers are presented with a wealth of information regarding one{'}s life. In addition to the level of complexity in identifying mental illnesses through social media platforms, adopting supervised machine learning approaches such as deep neural networks have not been widely accepted due to the difficulties in obtaining sufficient amounts of annotated training data. Due to these reasons, we try to identify the most effective deep neural network architecture among a few of selected architectures that were successfully used in natural language processing tasks. The chosen architectures are used to detect users with signs of mental illnesses (depression in our case) given limited unstructured text data extracted from the Twitter social media platform.},

633pages = {88--97},

634doi = {10.18653/v1/W18-0609},

635url = {https://aclanthology.org/W18-0609},

636publisher = {Association for Computational Linguistics},

637address = {New Orleans, LA},

638year = {2018},

639month = {June},

640booktitle = {Proceedings of the Fifth Workshop on Computational Linguistics and Clinical Psychology: From Keyboard to Clinic},

641author = {Husseini Orabi, Ahmed and

642Buddhitha, Prasadith and

643Husseini Orabi, Mahmoud and

644Inkpen, Diana},

645title = {Deep Learning for Depression Detection of {T}witter Users},

646}

647

648@article{lejoyeux2002impulse,

649publisher = {LWW},

650year = {2002},

651pages = {310-314},

652number = {5},

653volume = {190},

654journal = {The Journal of nervous and mental disease},

655author = {Lejoyeux, Michel and Arbaretaz, Marie and McLOUGHLIN, MARY and Ad{\`e}s, Jean},

656title = {Impulse control disorders and depression},

657}

658

659@article{Kramer8788,

660journal = {Proceedings of the National Academy of Sciences},

661eprint = {https://www.pnas.org/content/111/24/8788.full.pdf},

662url = {https://www.pnas.org/content/111/24/8788},

663issn = {0027-8424},

664publisher = {National Academy of Sciences},

665doi = {10.1073/pnas.1320040111},

666year = {2014},

667pages = {8788-8790},

668number = {24},

669volume = {111},

670title = {Experimental evidence of massive-scale emotional contagion through social networks},

671author = {Kramer, Adam D. I. and Guillory, Jamie E. and Hancock, Jeffrey T.},

672}

673

674@article{lejoyeux1997study,

675publisher = {[Memphis, Tenn., Physicians Postgraduate Press]},

676year = {1997},

677pages = {169-173},

678number = {4},

679volume = {58},

680journal = {Journal of Clinical Psychiatry},

681author = {Lejoyeux, Michel and Tassain, Valerie and Solomon, Jacquelyn and Ades, Jean},

682title = {Study of compulsive buying in depressed patients},

683}

684

685@article{mcelroy1996impulse,

686publisher = {Elsevier},

687year = {1996},

688pages = {229-240},

689number = {4},

690volume = {37},

691journal = {Comprehensive psychiatry},

692author = {McElroy, Susan L and Pope Jr, Harrison G and Keck Jr, Paul E and Hudson, James I and Phillips, Katharine A and Strakowski, Stephen M},

693title = {Are impulse-control disorders related to bipolar disorder?},

694}

695

696@article{yasuhisa2001,

697doi = {10.1111/1468-5884.00179},

698journal = {Japanese Psychological Research},

699volume = {43},

700title = {Shopping as a coping behavior for stress},

701pages = {},

702month = {11},

703year = {2001},

704author = {Hama, Yasuhisa},

705}

706

707@misc{arstechnica_teens2017,

708year = {2017},

709author = {ArsTechnica},

710url = {https://arstechnica.com/information-technology/2017/05/facebook-helped-advertisers-target-teens-who-feel-worthless/},

711title = {Report: Facebook helped advertisers target teens who feel ``worthless''},

712}

713

714@article{Bell1985,

715eprint = {https://doi.org/10.1057/jors.1985.106},

716url = {https://doi.org/10.1057/jors.1985.106},

717doi = {10.1057/jors.1985.106},

718publisher = {Taylor & Francis},

719year = {1985},

720pages = {613-619},

721number = {7},

722volume = {36},

723journal = {Journal of the Operational Research Society},

724title = {Why Expert Systems Fail},

725author = {Michael Z. Bell},

726}

727

728@article{Barredo2020,

729author = {Alejandro {Barredo Arrieta} et.\ al.},

730url = {https://www.sciencedirect.com/science/article/pii/S1566253519308103},

731doi = {https://doi.org/10.1016/j.inffus.2019.12.012},

732issn = {1566-2535},

733year = {2020},

734pages = {82-115},

735volume = {58},

736journal = {Information Fusion},

737title = {Explainable Artificial Intelligence ({XAI}): Concepts, taxonomies, opportunities and challenges toward responsible {AI}},

738}

739

740@article{computer_ethics,

741doi = {10.1111/j.1467-9973.1985.tb00173.x},

742journal = {Metaphilosophy},

743volume = {16},

744title = {What Is Computer Ethics?},

745pages = {266-275},

746month = {08},

747year = {2007},

748author = {Moor, James},

749}

750

751@inproceedings{ethics_by_design,

752series = {AIES '18},

753location = {New Orleans, LA, USA},

754keywords = {machine ethics, multi-agent systems, ethics by design},

755numpages = {7},

756pages = {60-66},

757booktitle = {Proceedings of the 2018 AAAI/ACM Conference on AI, Ethics, and Society},

758doi = {10.1145/3278721.3278745},

759url = {https://doi.org/10.1145/3278721.3278745},

760address = {New York, NY, USA},

761publisher = {Association for Computing Machinery},

762isbn = {9781450360128},

763year = {2018},

764title = {Ethics by Design: Necessity or Curse?},

765author = {Dignum, Virginia and Baldoni, Matteo and Baroglio, Cristina and Caon, Maurizio and Chatila, Raja and Dennis, Louise and G\'{e}nova, Gonzalo and Haim, Galit and Klie\ss{}, Malte S. and Lopez-Sanchez, Maite and Micalizio, Roberto and Pav\'{o}n, Juan and Slavkovik, Marija and Smakman, Matthijs and van Steenbergen, Marlies and Tedeschi, Stefano and van der Toree, Leon and Villata, Serena and de Wildt, Tristan},

766}

767

768@article{principles_alone,

769doi = {10.1038/s42256-019-0114-4},