Motivation & Background

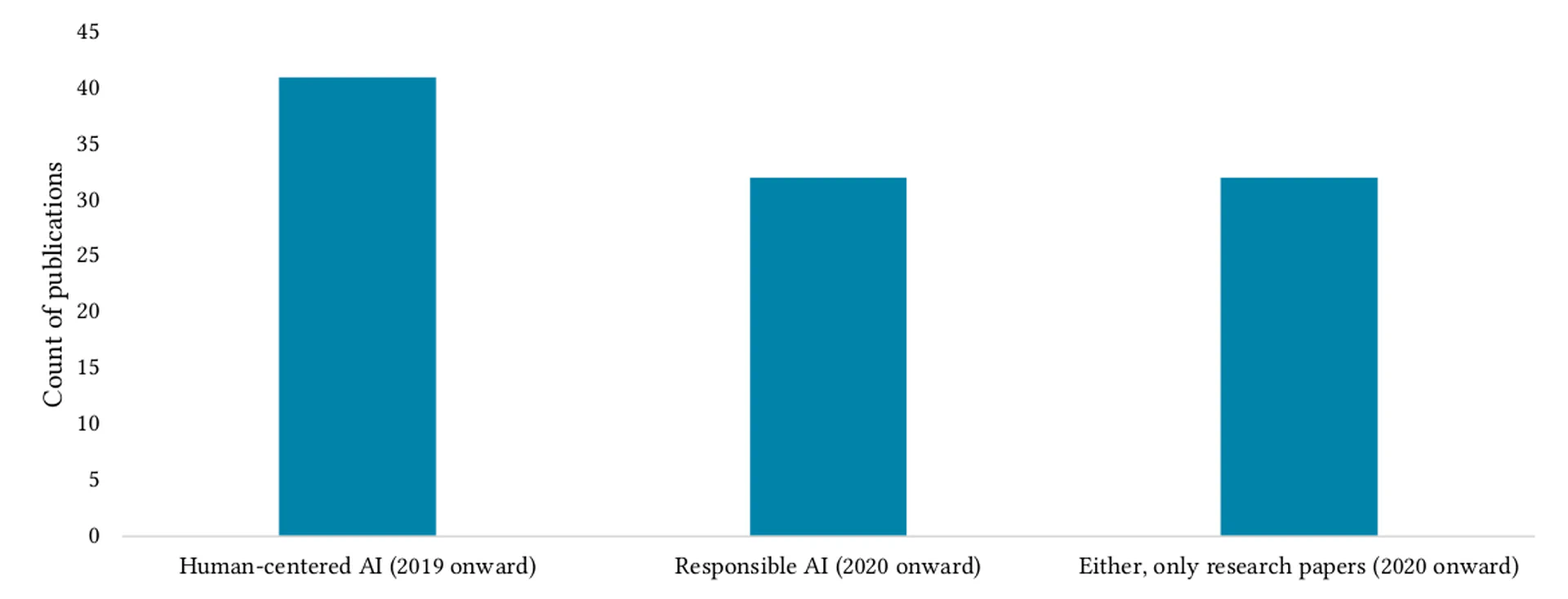

Human-Centered Responsible Artificial Intelligence (HCR-AI) [Different communities have adopted different terminologies to address related topics. We intentionally left the proposal and terminology open without emphasizing specific topics to attract participants from various backgrounds and interests. One reason to propose this SIG is to discuss various aspects of HCR-AI with researchers who can provide diverse perspectives.] aims to bring people and their values into the design and development of AI systems, which can contribute to building systems that benefit people and societies, as well as preventing and mitigating potential harms. Although the human factor has long been recognized as important in AI systems, awareness of its importance has grown within the CHI community in the past few years. Searching the ACM Digital Library within CHI proceedings shows the following results (see the trends figure): [Using search within anywhere on the ACM Digital Library. Results are not mutually exclusive and include all types of materials (e.g., research papers, extended abstracts, panels, and invited talks). Filtering for only research papers results in 32 unique papers since 2020. We acknowledge this is not an exhaustive search and is only to show the growing body of research in CHI.] “human-centered AI” results in 41 records since 2019 and “responsible AI” results in 32 records since 2020. Below, we highlight a few examples of these studies that are relevant to the topic of the Special Interest Group (SIG), noting that this is not an exhaustive list and is only meant to show the breadth and depth of the existing work:

Ethics in AI involves socio-cultural and technical factors, spanning a range of responsible AI values (including but not limited to transparency, fairness, explainability, accountability, autonomy, sustainability, and trust). However, different stakeholders, including the general population and AI practitioners, may perceive and prioritize these values differently. For example, a representative sample of the U.S. population was more likely to value safety, privacy, and performance. In contrast, practitioners were more likely to prioritize fairness, dignity, and inclusiveness. Likewise, certain historically exploited groups may weigh privacy or non-participation more highly than groups with lower risk.

Aligned with responsible AI are calls to make AI more human-centric. In particular, there is an emphasis on the challenges of integrating AI into socio-technical processes while preserving human autonomy and control, as well as on the impacts of AI system deployment and applications on society, organizations, and individuals. In this strand of research, understanding socio-technical and environmental factors can help surface why and how an AI system may become human-centered. For example, even an AI system for which there might be broader consensus on its utility, such as the detection of diabetes using retina scans, may face barriers to becoming useful for its intended users: it may not fit well with the users’ workflows (e.g., those of nurses), or it may require high-quality images that are not easy to produce, especially in locations with low resources where such technology can provide significant support to patients if done right.

Similarly, researchers have looked at individuals’ expectations and understandings of AI. For example, when an ethical decision is being made (e.g., a hypothetical scenario of bringing down a terrorist drone to save lives), people may put more capability trust in an AI decision maker (i.e., capacity trustworthiness, being more capable), whereas they may put more moral trust in a human expert (i.e., being morally trustworthy and able to make decisions that are aligned with moral values). In either case, whether the decision is made by a human or an AI, prior work has found that people often see the human as partly responsible, be it the decision maker or the AI developer, even though the outcomes of the developer may intentionally or unintentionally limit the span of action of the decision-maker. Regarding moral dilemmas between AI and human decisions, people may not judge humans and machines equally. These variations in perceptions may be rooted in (a) people judging humans by their intentions and machines by their outcomes, and (b) people assigning extreme intentions to humans and narrow intentions to machines, while they may excuse human actions more than machine actions in accidental scenarios. Furthermore, people’s perceived fairness and trust in an AI may change with the terminology used to describe it (e.g., an algorithm, computer program, or artificial intelligence), which could eventually impact the system’s success and outcomes, especially when comparative research is done.

Another human aspect of AI systems is the people who work on these systems, such as annotators, engineers, and researchers. Data annotators are part of the workforce that produces the datasets used to train AI models. However, the workforce (sometimes referred to as _AI labor_ or ghostworkers) behind the annotation task may have career aspirations that the current annotation companies do not support, or they may be poorly paid because of the push that comes from the recent development in AI that requires massive annotated datasets at low costs. Other researchers echo similar observations about AI labor by saying that “without the work and labor that were poured into the data annotation process, ML [Machine Learning] efforts are no more than sandcastles,” or “everyone wants to do the model work, not the data work,” a behavior that contributes to the creation of data cascades, which refer to compounding events causing adverse, downstream effects from data issues, resulting in technical debt. [In 1992, Ward Cunningham put forward the metaphor of technical debt to describe the build-up of cruft (deficiencies in internal quality) in software systems as debt accrual, similar to financial or ethical debt (i.e., “AI ethical debt is incurred when an agency opts to design, develop, deploy and use an AI solution without proactively identifying potential ethical concerns”).]

New tools and frameworks are now being proposed to help developers build more responsible AI systems (e.g., IBM’s 360 suites on fairness and explainability, and Fairlearn), in addition to user-led approaches to algorithmic auditing that uncover potential harms of algorithmic systems. Despite the growing interest in HCI research and user experience design for AI, developing responsible AI remains challenging: it is a mission involving cognitive, socio-technical, cultural, and design perspectives.

These are just a few examples from the many studies that cover topics that have emerged within the past few years and are relevant to the SIG’s scope. Besides CHI, the ACM Conference on Fairness, Accountability, and Transparency (ACM FAccT), established in 2018, aims to bring “together researchers and practitioners interested in fairness, accountability, and transparency in socio-technical systems,” highlighting the importance of research in HCR-AI. We aim to bring this community together in a 75-minute discussion and brainstorming session at CHI 2023.

Proposal & SIG’s Goal

The SIG follows similar strands from past workshops at CHI 2020, 2021, and 2022. The topics discussed are evolving and growing (see figure); hence, a SIG at CHI 2023 would be timely. We believe a SIG dedicated to HCR-AI at CHI 2023 will benefit the CHI community, help build and establish a broader network of researchers, and provide a mapping and understanding of current and future trends in this area. Researchers in this area come from industry and academia and from diverse disciplinary backgrounds (e.g., theoretical computer science, social computing, machine learning, human-computer interaction, and social science). Therefore, having them all in one hybrid physical-virtual room for 75 minutes would benefit the community and allow attendees to brainstorm and generate a map of current and future trends in this area (activity diagramming). We propose to use online tools such as Miro and Slack to (a) create a record of the group’s co-constructed knowledge; (b) serve as a persistent channel of communication to others in the CHI community; and (c) enfranchise remote participants.

Attribution

arXiv:2302.08157v1 [cs.HC]

License: cc-by-4.0