- Papers

Papers is Alpha. This content is part of an effort to make research more accessible, and (most likely) has lost some details from the original. You can find the original paper here.

Introduction

In our increasingly data-driven society, artificial intelligence (AI) has become pervasive for decision-making in a wide range of fields. AI has immense potential to make positive change in fields ranging from agricultureto healthcare. However, as with any tool, AI can also cause great harm if developed and used improperly or inappropriately; regardless of whether this is done unintentionally or maliciously. The consequences of bad AI practice can range from bias affecting underprivileged or underrepresented groups, to financial harm, to physical and mental harm.

The need to develop AI in a way that benefits human life and societies has lead to the emergence of responsible AI (RAI). RAI is fundamentally the field of applying ethics to the development and utilization of AI, to ensure that AI systems and used for the good of humanity. As with all moral philosophy, there is no single consensus on what makes AI ethical and therefore responsible. However, efforts have been made to establish RAI characteristics, frameworks, and guidelines in academia, industry, and goverments and political bodies. Frequently identified pillars of RAI across these diverse sources include fairness, robustness, transparency, accountability, privacy, and safety.

Explainability is also considered as a pillar of RAI by many works, often connected to transparency. Explainability is broadly considered by the field of explainable AI (XAI), which is focused on providing humans with insight into the reasoning of AI models during their decision-making processes. Researchers in the XAI domain have developed methods for producing explanations that include text-based, visual, and feature importancemethods.

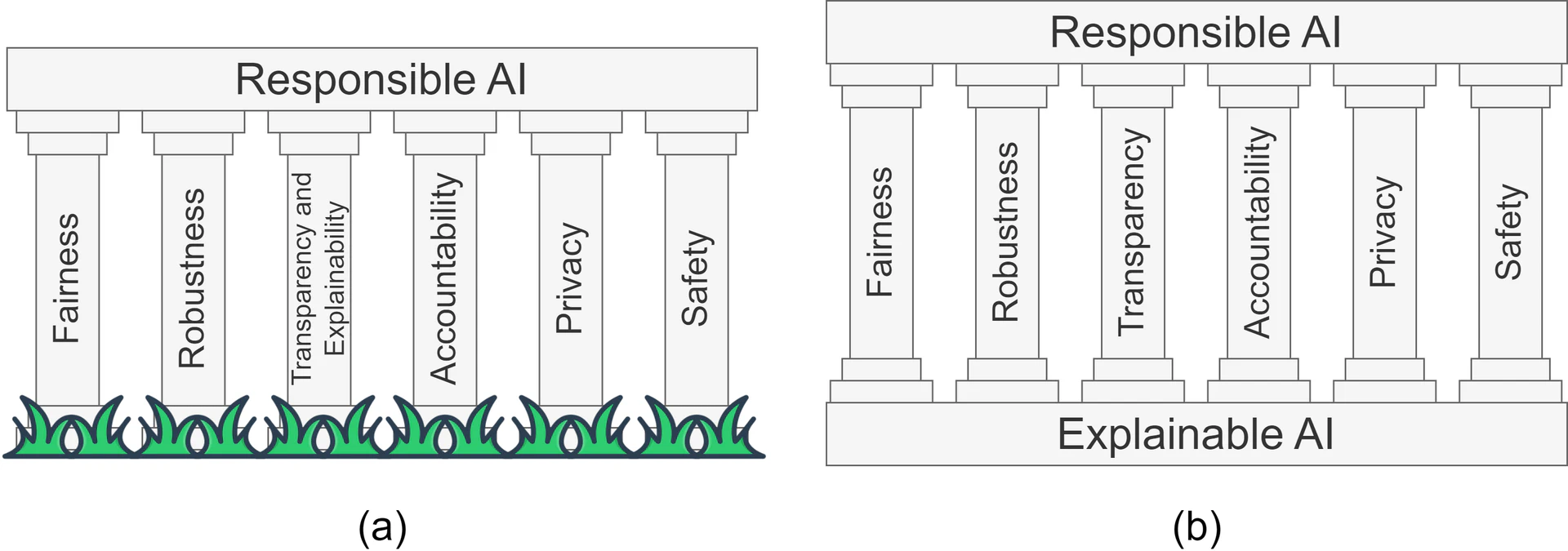

Given that XAI techniques improve transparency, it is understandable that some researchers have classed explainability as a pillar of RAI. However, our review of the literature finds that considering XAI as one discrete pillar of RAI, separate to all others, is insufficient to capture the potential impact of explainability on RAI. In this review, we find substantial evidence that XAI should instead be considered as the foundation of RAI; this key difference is shown in Fig. fig-pillars.

In our review, XAI is shown to support assessment of fairness, robustness, and safety, thus supporting responsible AI operation and enabling improvement as needed. The transparency offered by XAI is shown to improve interpretability and understability of AI through human evaluation in several studies, which in turn supports accountability for decision making in critical sectors such as financeand law. Finally, it is illustrated that XAI can be combined with privacy-preserving techniques to ensure that the requirement of privacy is met without compromising on other RAI requirements.

Original Contributions

The literature contains several high-quality reviews on the topics of XAI and RAI; however, these are typically considered as separate topics. In this work, we fill a key gap in the literature by illustrating that XAI is foundational to RAI. The key contributions of this work are as follows:

- We conduct a broad-scoping review of the literature on both XAI and RAI, highlighting the technologies, principals, and frameworks developed by previous works.

- We propose a novel framework that considers explainable AI as the foundation of responsible AI. This is in contrast to previous works, which have considered XAI as a single pillar of RAI.

- We demonstrate that XAI is foundational to the RAI principles of fairness, robustness, transparency, accountability, privacy and safety through rigorous exploration of the XAI literature.

- Building upon our findings, we illustrate real-world use cases where XAI directly supports RAI in applications within the key fields of generative AI, healthcare, and transportation.

Comparison to Other Works

The field of XAI has been extremely active in recent years, and responsible AI has also become a hot topic. As such, several high-quality surveys have examined these two topics. However, the concept of XAI as the foundational technology of RAI has not been considered in previous works. This is shown in Table tab-literaturecomparison, where we compare several recent surveys in the literature. We exclude surveys that review XAI and/or RAI in a domain-specific context (i.e., healthcare or finance).

Comparison of recent surveys on explainable or responsible AI. Key: The topic is explored in depth. The topic is briefly mentioned. The topic is not discussed.

Table Label: tab-literaturecomparison

Download PDF to view tableResponsible AI has been considered in recent surveys by Kaur _et al._and Li et al.. In both of these works, the literature on RAI was reviewed and findings were used to propose principals and guidelines for the development and deployment of RAI. In each work, explainability was identified as a characteristic of RAI but was not explored in depth. Characteristics of transparency, accountability, fairness, and robustness were identified by each work.

Another work by Ashok _et al._sought to outline broader ethical principles for digital technology development, including but not limited to AI. They ultimately proposed 14 ethical characteristics, which included accountability, fairness, and privacy. Explainability was not discussed.

RAI was explored through the lens of multiple critical use cases in another recent survey by Anagnostou et al.. Their work first explored the problems in critical sectors including healthcare and transportation, before identifying several characteristics required of RAI. These characteristics were similar to other works and included transparency, accountability, and fairness. Explainability was mentioned as a strategy for supporting transparency, but was not explored in depth.

Dwivedi _et al._recently conducted a survey that explored explainability techniques and provides guidance on Python programming packages that allow for implementation. Similarly, a recent review by Minh _et al._explored XAI techniques in depth, with some discussion of applications. Furthermore, a recent survey by Ahmed _et al._discussed AI, XAI, and use cases of XAI in Industry 4.0. However, these three works did not discuss responsible or ethical AI concepts.

Another recent survey conducted by Saeed _et al._explored XAI with a particular focus on future challenges. They also briefly discuss how XAI can be used to create trustworthy AI systems, which supports RAI. Perhaps the survey that most considered the relationship between XAI and RAI was conducted by Barredo Arrieta et al.. They comprehensively reviewed the literature on XAI, before discussing RAI as a separate but related topic. They briefly highlight how XAI could support RAI in terms of transparency and fairness. However, their review overlooked the usefulness of XAI in areas of RAI including robustness.

Overall, the literature is rich with surveys on RAI and XAI separately. However, there is relatively little discussion in the literature of the strong relationship between these two topics. In this review, we fill that gap in the literature by investigating how XAI can be used to create AI systems that align with existing RAI frameworks and characteristics.

Structure of the Paper

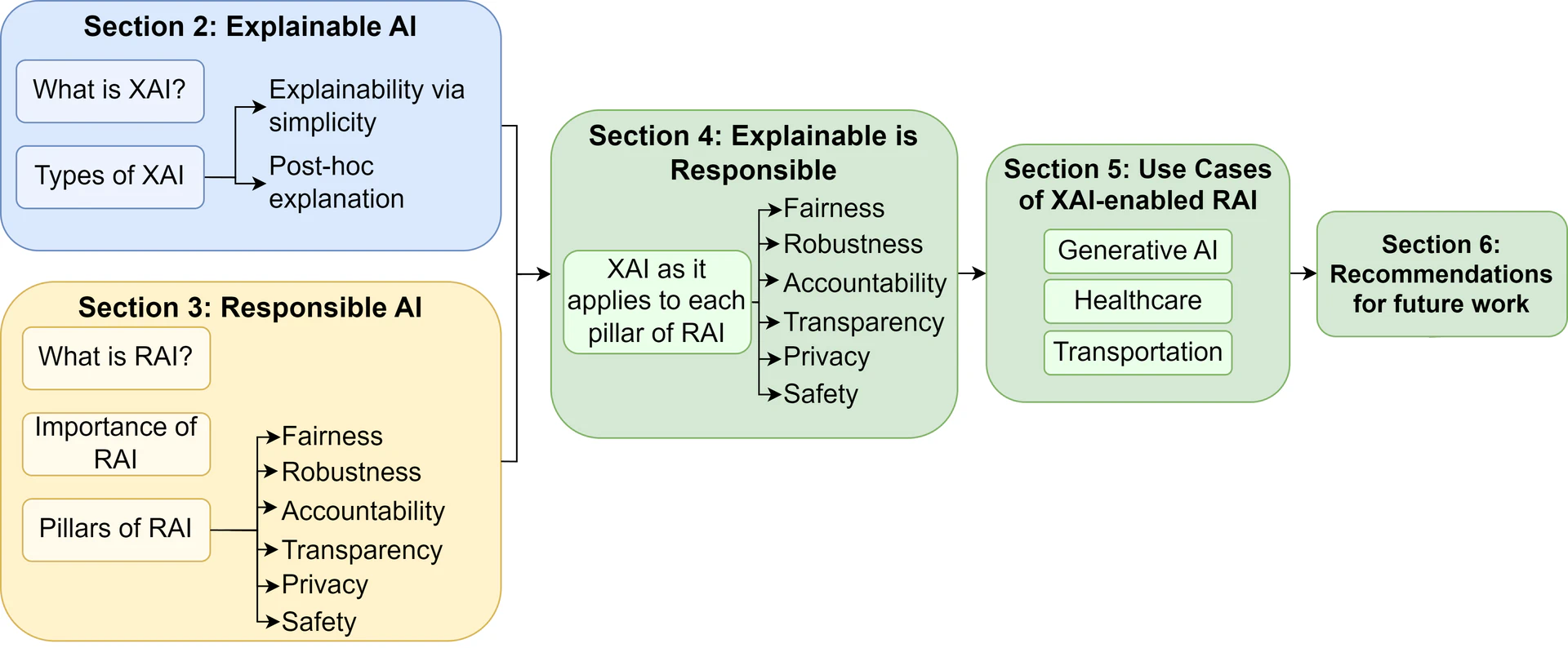

The remainder of this paper is structured as follows: Section 2 addresses the question of what makes AI explainable, and explores the types and applications of explainable AI. Section 3 then discusses responsible AI, including why responsibility is important and the characteristics that make AI responsible. Section 4 then examines how the pillars of responsible AI can be achieved through explainability. In Section 5, we present several real-world use cases to highlight the importance of explainability to produce responsible and trustworthy AI for sectors including healthcare and transportation. We present recommendations for future works in the AI domain in Section 6, before concluding the paper in Section 7. The key contents of this paper are illustrated in Fig. fig-structure.

Explainable AI

What is Explainable AI?

Explainable AI (XAI) describes strategies and processes used to make AI models more understandable and interpretable to their developers and end-users, without significant compromise on performance. Audience is critical to consider; strategies utilised to understand a model as the developer may differ from those needed to explain a model to non-technical end users. The key motivations for explainability are improved transparency and trust in AI models, particularly those used in critical applications.

The need for explainable AI is primarily due to the increasing complexity of AI models. Early AI models, such as linear and logistic regression algorithms, can be considered as explainable AI models due to being understandable by design. In such models, the internal mechanisms linking input to output can be readily visualised and interpreted. However, these models are often limited in terms of performance; their simplicity prevents them from accurately interpreting complex data.

As computing resources have improved, increasingly complex AI algorithms have been developed to support better interpretation of complex data. Advanced AI algorithms that continue to grow in prevalence include deep neural networks (DNNs) and random forest. Such models have demonstrated high performance in many domains, however their complexity limits insight into what happens in between input and output; they are not inherently understandable. The increase of high-performing yet opaque models, often termed black-box models, has lead to the substantially increasing interest in XAI for interpreting complex models.

The following subsections dive deeper into the existing literature on XAI, focusing on both explainability by design, where models are intentionally designed to be inherently interpretable, and post-hoc explainability, whereby the model is retrospectively explained after its development or use. Within both types of explainability, there are methods designed to provide local explanations (i.e., explain a single prediction) or global explanations (i.e., explain the general decision-making process of the model). Additionally, some strategies are model-agnostic (i.e., they can be applied to all or many models), while others are model-specific (they can only be applied to a select model). We introduce these terms here as they are relevant to our discussions in the next two subsections.

Explainability by Design

Several AI models can be considered explainable by design due to their simplicity; their inner workings are transparent and thus inherently interpretable. Models that fall into this category include linear regression, generalised additive models, logistic regression, decision trees, k-nearest neighbour, and low-dimensionality support vector machines.

Linear Regression

Linear regression (LR) seeks to find a line of best fit to continuous data, as illustrated in Fig. fig-linreg. In its simplest form with one independent variable, this type of regression is widely understood and thus inherently explainable. Multivariate LR is more common in the literature as it can consider multiple independent variables, each of which can be explained via individual LR plots. This is still relatively interpretable for lower numbers of variables, however weightings of variables may need to be explained. Despite their advantages in explainability, the performance of LR is limited by its inability to capture non-linear relationships, or complex relationships between variables. Nonetheless, multivariate LR remains in use with recent works utilising it to identify radiomics features associated with clinical outcomes, water and wastewater forecasting, and pandemic preparedness assessment.

Generalised Additive Models

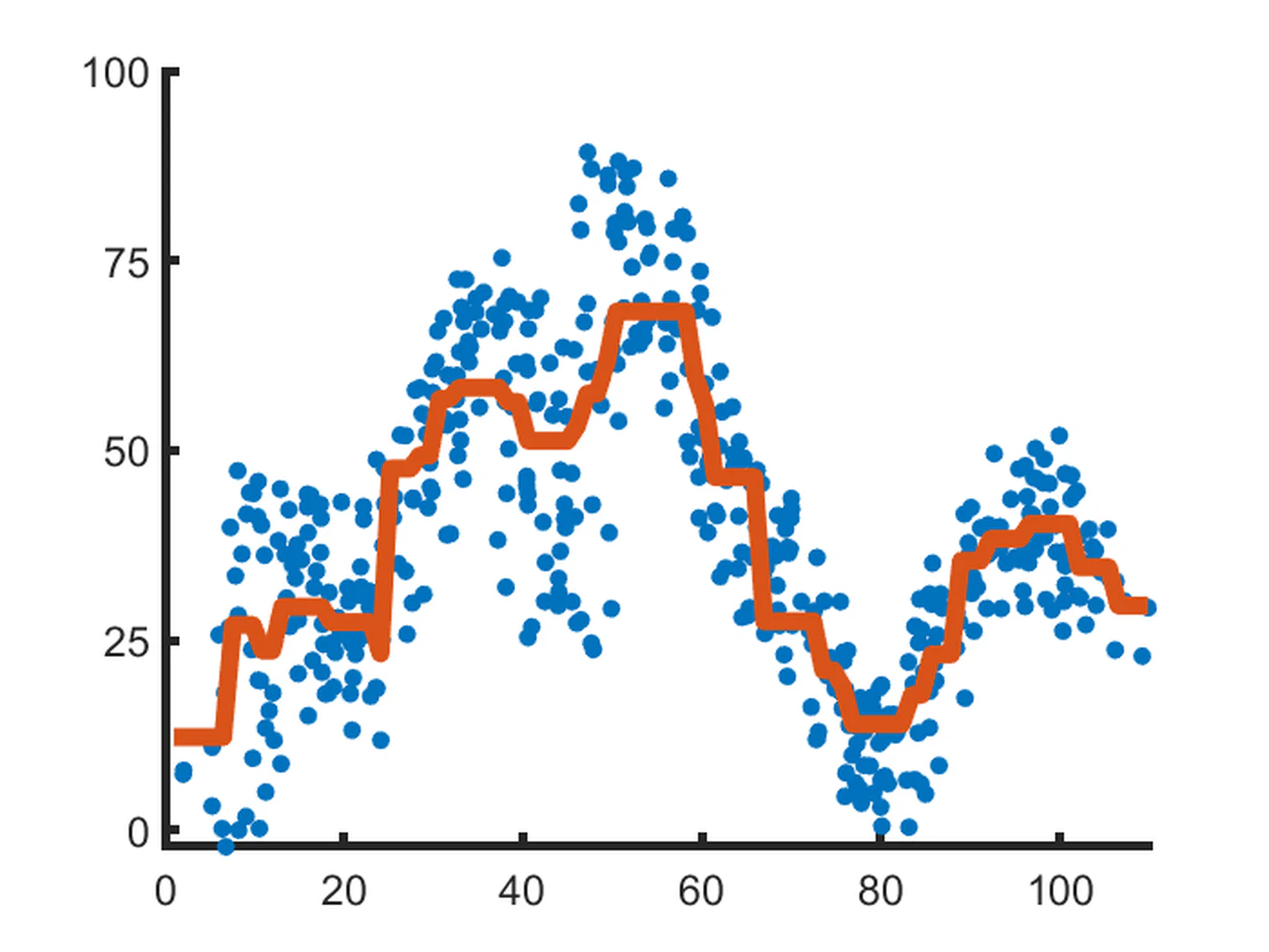

Generalised additive models (GAMs) overlap with linear regression in that they attempt to fit a line to the data. However, they differ in that they are not constrained to linear features. They are comprised of multiple `splines’ - non-linear smooth functions - added together to give an overall line describing the data, as illustrated in Fig. fig-gamreg. While there is some complexity in their development, their decisions can be readily explained to the end-user through illustrations of the fitted GAM lines for each variable considered; as with linear regression, interpretabiilty decreases as the number of variables increases. Due to their ability to capture non-linear relationships, GAMs have recently been used for applications including predicting time to crop maturity, electrical load forecasting, and bushfire prediction.

Logistic Regression

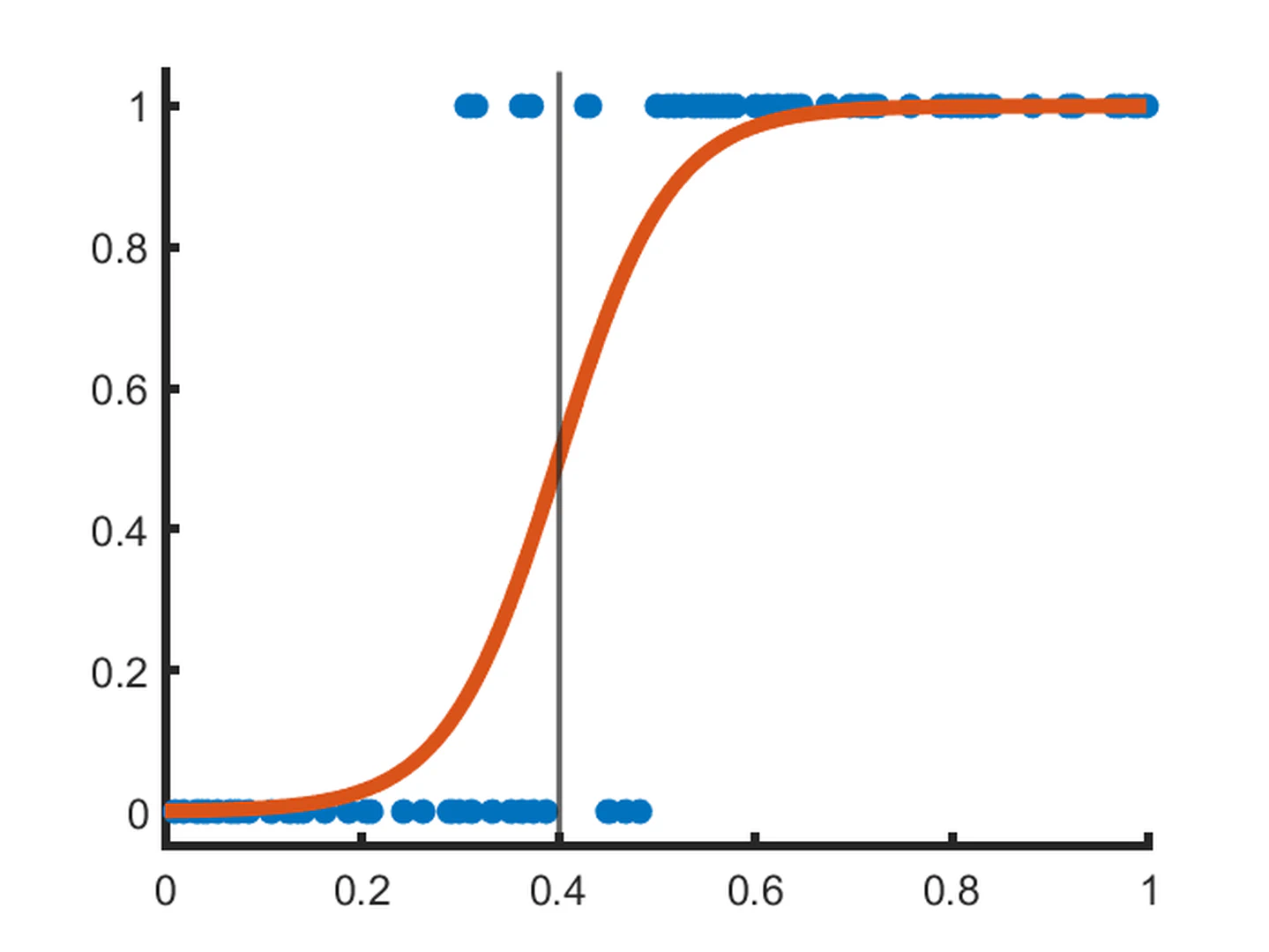

Commonly used for classification problems, logistic regression finds a logistic function that best fits to a given set of data. The binary classification case is shown in Fig. fig-logreg; the threshold (grey) line splits the outputs into the two classes. This can again be clearly illustrated and explained. Multiple logistic regression can be performed where multiple variables exist, remaining explainable for lower numbers of variables. Multiclass logistic regression is more complex, however recent papers have attempted to improve explainability through weight heat-maps. Logistic regression has been relatively popular in XAI literature, used for applications including image recognition, telehealth record sentiment analysis, adverse reaction to medication prediction, and predicting student outcomes. However, logistic regression is often outperformed by more advanced models.

Decision Trees

Decision trees (DTs) utilise multiple input features to make a series of decisions resulting in an output. The features are typically `branching’ points, with the tree splitting into separate paths after each feature is considered. The is illustrated by Fig. fig-dt, which shows a simple DT for choosing a sport to play based on weather conditions. DTs are explainable due to their clear logical flow, resembling flowcharts that are common in many industries. However, they are limited in their implementation and have become less popular in the literature. DT ensemble methods such as random forest (RF) are more common in the literature, however explainability decreases as the number of trees increases; many RF papers explain their models using post-hoc methodsdiscussed in the next section.

k-Nearest Neighbour

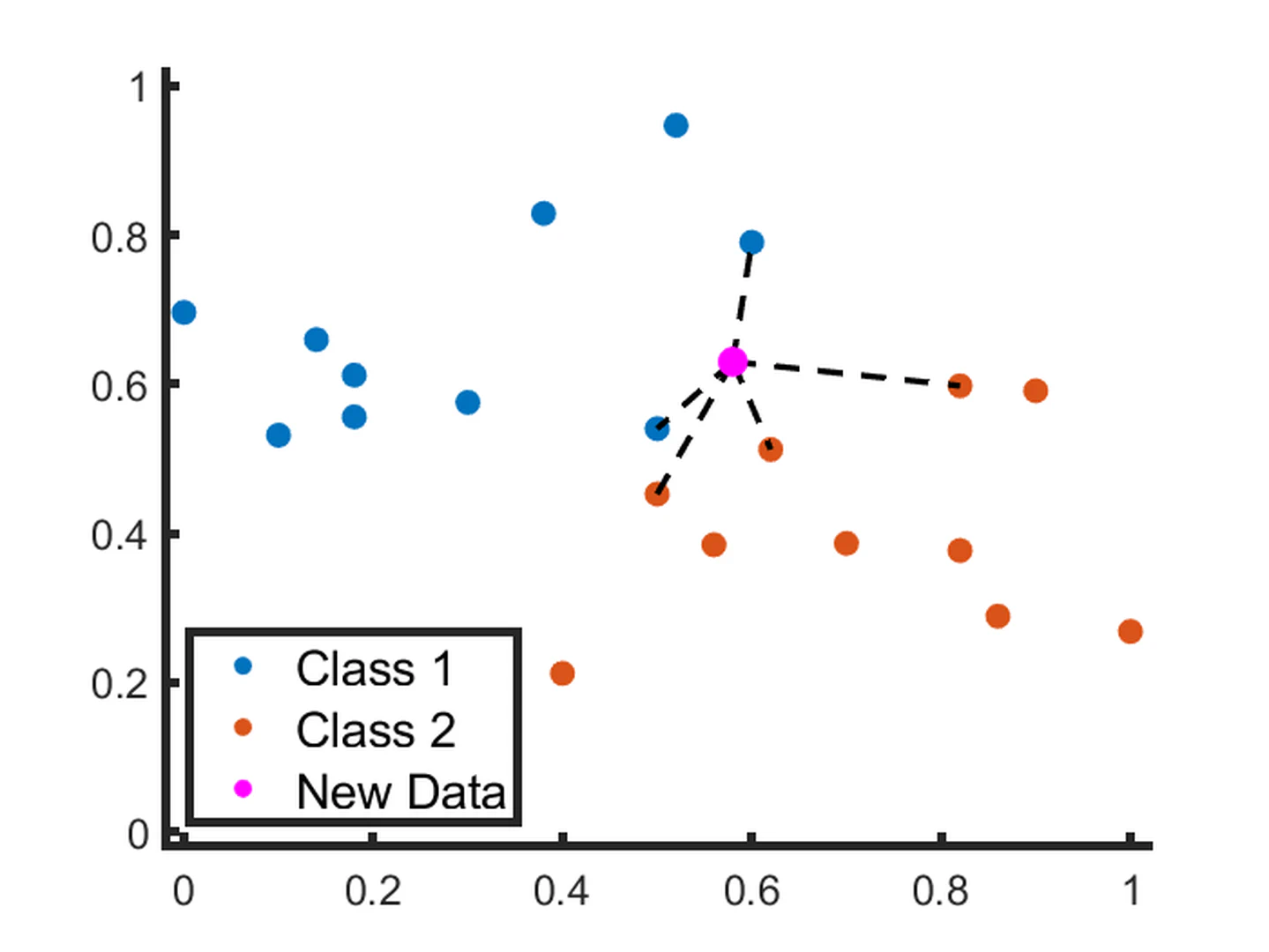

In $k$ -nearest neighbour (KNN) models, a prediction is made by identifying the k closest datapoints to the new datapoint of interest, and using their values to determine what output value should be assigned to a new input. In the classification example illustrated in Fig. fig-simpleknn, this would result in the new data being classified as Class 2. KNN can also be used for regression tasks. The premise of using proximate data to make a decision is intuitive, and can be easily visualised where k is small. However, as the value of k increases, the interpretability of the model decreases. The simplicity of KNN also leads to limitations, including susceptibility to unimportant features and ignoring the distances of each neighbour. Classic KNN is primarily seen in the literature for benchmarking or validating feature selection algorithms, however KNN variants have been recently used in applications including severity assessment for Parkinson’s diseaseand prediction of cardiovascular disease.

Support Vector Machines

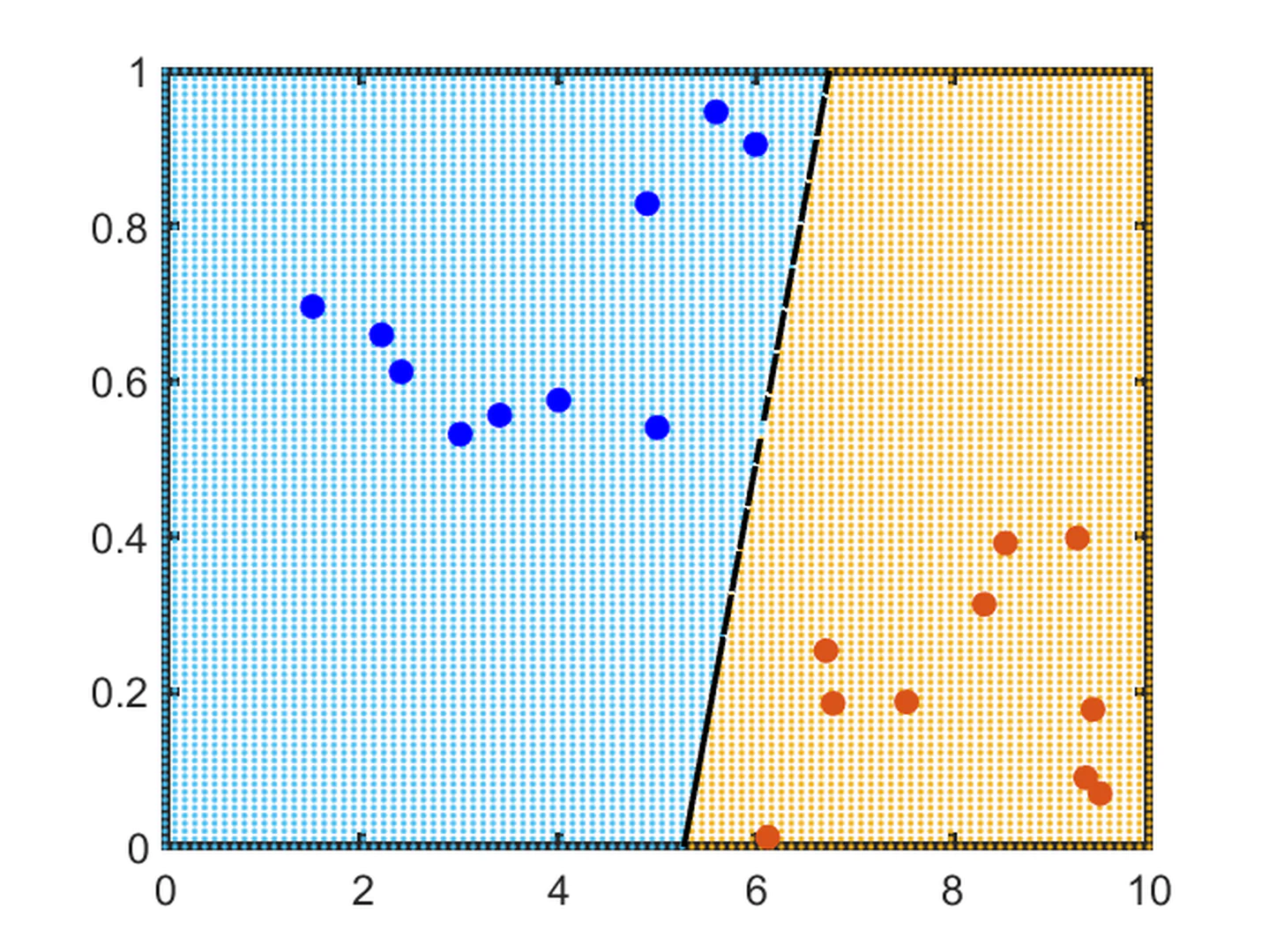

Support vector machines (SVMs) are generally not considered to be explainable, as they are challenging to visualise or explain for higher dimensionality inputs. However, we argue that this is also true of algorithms such as linear regression. Low-dimensionality SVMs split data into classes based on a line or hyperplane. For simple binary classification scenarios with up to three features (i.e., three dimensions), the clusters and separating line or plane can be readily illustrated to the end user; an example for 2D binary classification is given in Fig. fig-simplesvm. Due to the interpretability of low-dimensionality SVM, some early studies have sought to represent complex data in lower-dimensional feature spaces so that explainable 3D SVM can be utilised.

Post-Hoc Explainability

Permutation Importance

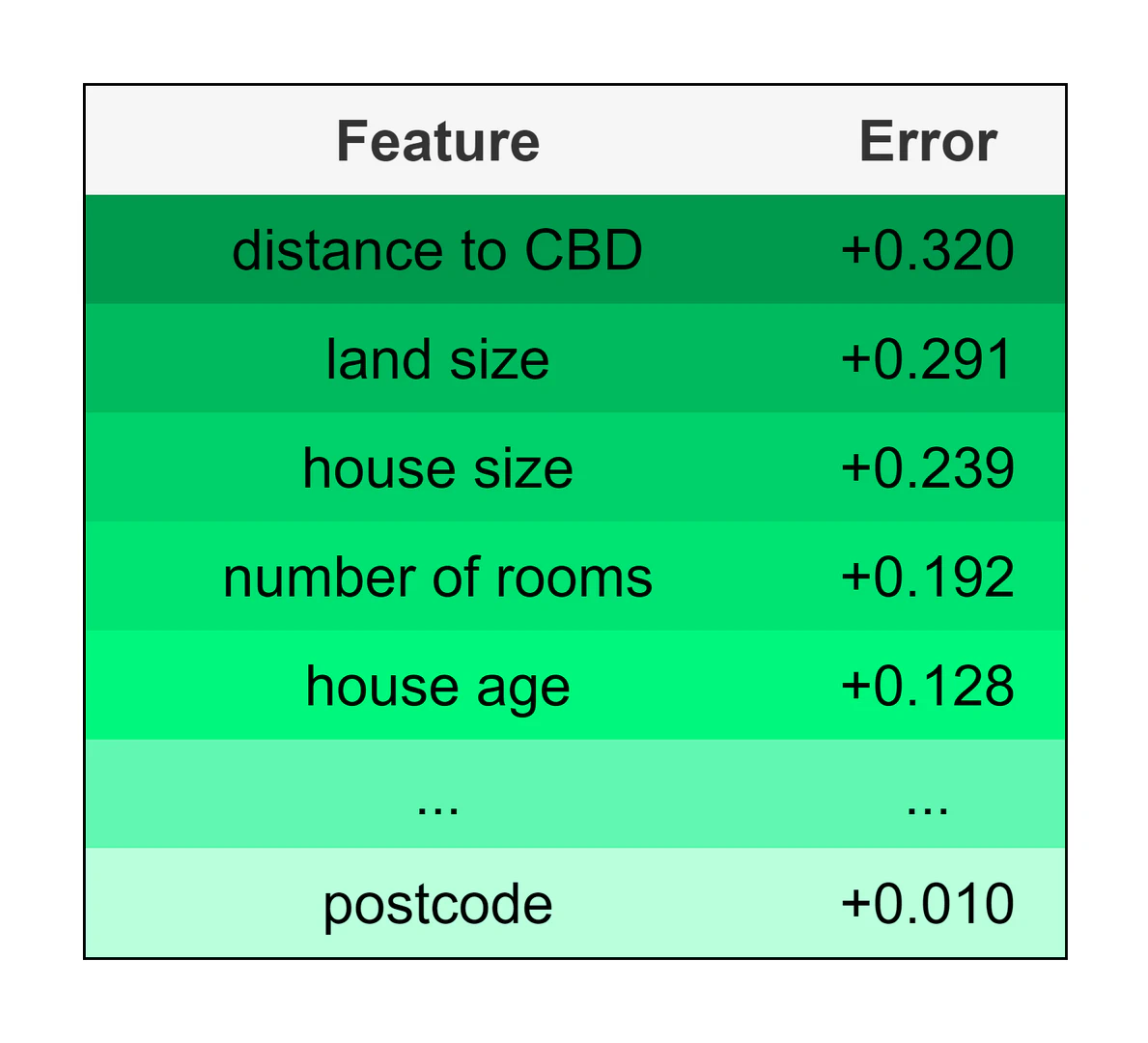

Permutation importance is a model-agnostic explainability technique that seeks to quantify feature importances in a global context, applied after a model is trained. It involves randomly shuffling all values for a particular feature and assessing whether this has an impact on model performance. The output of permutation importance is a list of weights indicating how important each feature is. These weights represent the impact of shuffling the particular feature on a metric of interest. An example output for a house pricing task is shown in Fig. fig-permutation, with features that caused the highest increase in prediction error considered to be the most important. Permutation importance is a computationally expensive technique, however both the output and the method itself are easily understood. This has lead to it being widely used in applications including disease biomarker discovery, digital soil mapping, wildfire susceptibility analysis, and prediction of biohydrogen production efficiency. A key limitation is that its results can be misleading where two variables are highly correlated; shuffling one may have little effect on the output due to the presence of the other.

Partial Dependence Plots and Individual Conditional Expectation

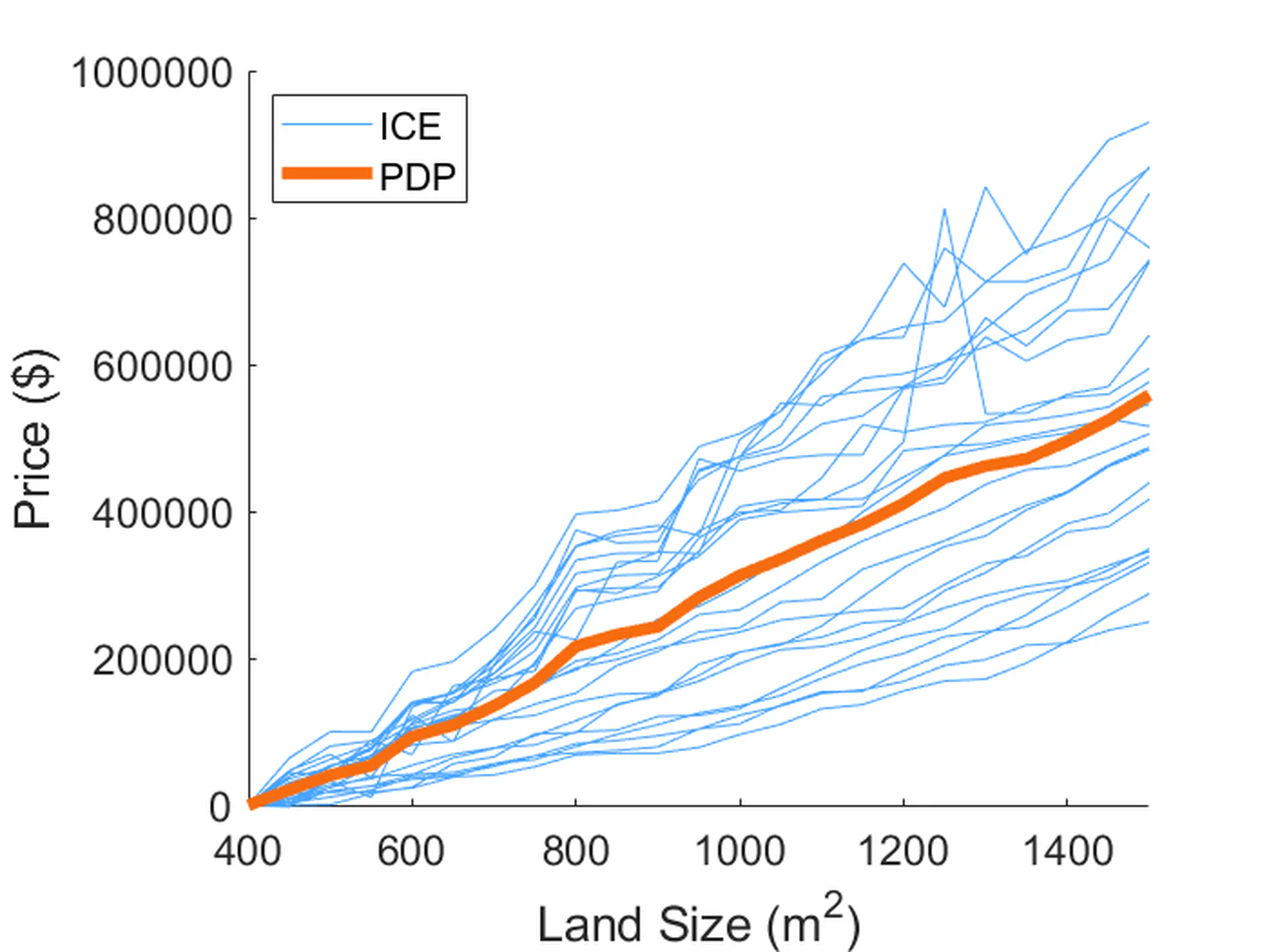

Partial dependence plots (PDPs) and individual conditional expectation (ICE) plots are model-agnostic methods which seek to understand the changes in prediction if a single feature is modified in the global context. After a model is trained, the particular feature is modified to assess the impact on the output. ICE plots illustrate the impact of the feature for every instance in the database; e.g., every instance is plotted as a single line. PDP plots find the average of the impact across all features, and therefore result in a single line. An example for the impact of land size on house pricing is illustrated in Fig. fig-iceandpdp.

ICE and PDP each have advantages and disadvantages. ICE can reveal heterogeneous effects, such as when changing a feature has a negative effect on half of the instances, but a positive effect in the other half. In such a case, PDP would display a flat, horizontal line - a result that is misleading and not useful. However, the interpretability of ICE decreases as the number of instances increases; plots become crowded, and it becomes harder to separate instances or identify atypical instances. PDP avoids this problem by illustrating an average.

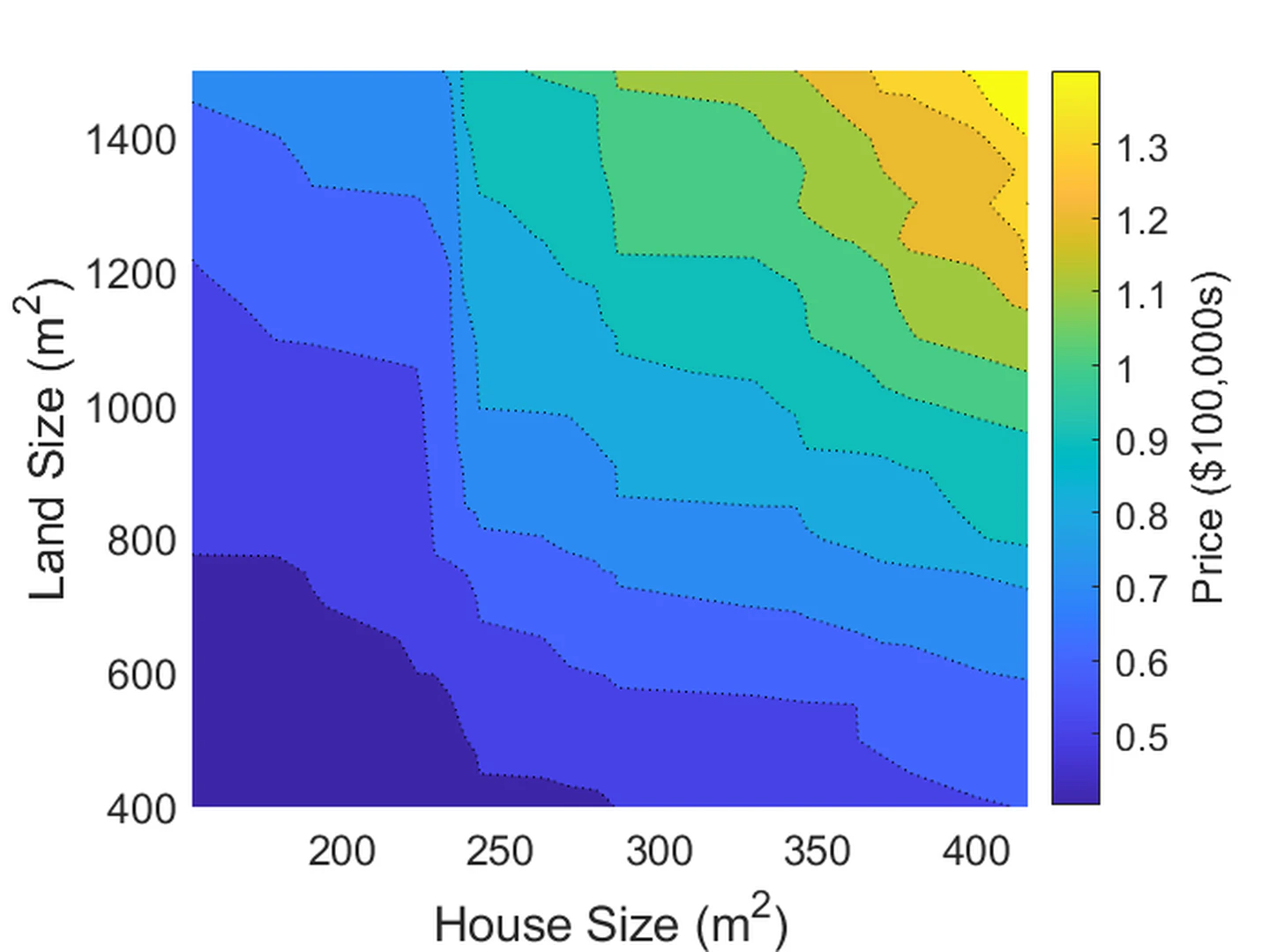

PDP is also useful in in graphing the dependencies of two features. As shown in Fig. fig-2dpdp, PDP can be used to generate contour plots that illustrate the relationship of two features to the output of interest - in our example, the relationship of house and land size to house price. This cannot be achieved with ICE, as the large number of overlapping contours would not be interpretable.

As they each have their respective benefits, ICE and PDP are commonly applied together; they have seen use in applications including acute kidney injury risk assessment, identification of factors in crash severity, water stream degradation assessment, and mortality risk prediction in intensive care settings. PDP has also been commonly applied alone in the literature. Recent works have applied PDP to applications including biochar yield prediction, concrete strength prediction, crop yield assessment, and deterioration in hepatitis patients.

Shapley Additive Explanations

Shapley Additive Explanations (SHAP)is a model-agnostic explainability approach that uses a game theory approach to assess the impact on model performance when different combinations of features (players') participate in the prediction (game’). It assesses all combinations of features and uses an additive approach to summarise the impact of each feature.

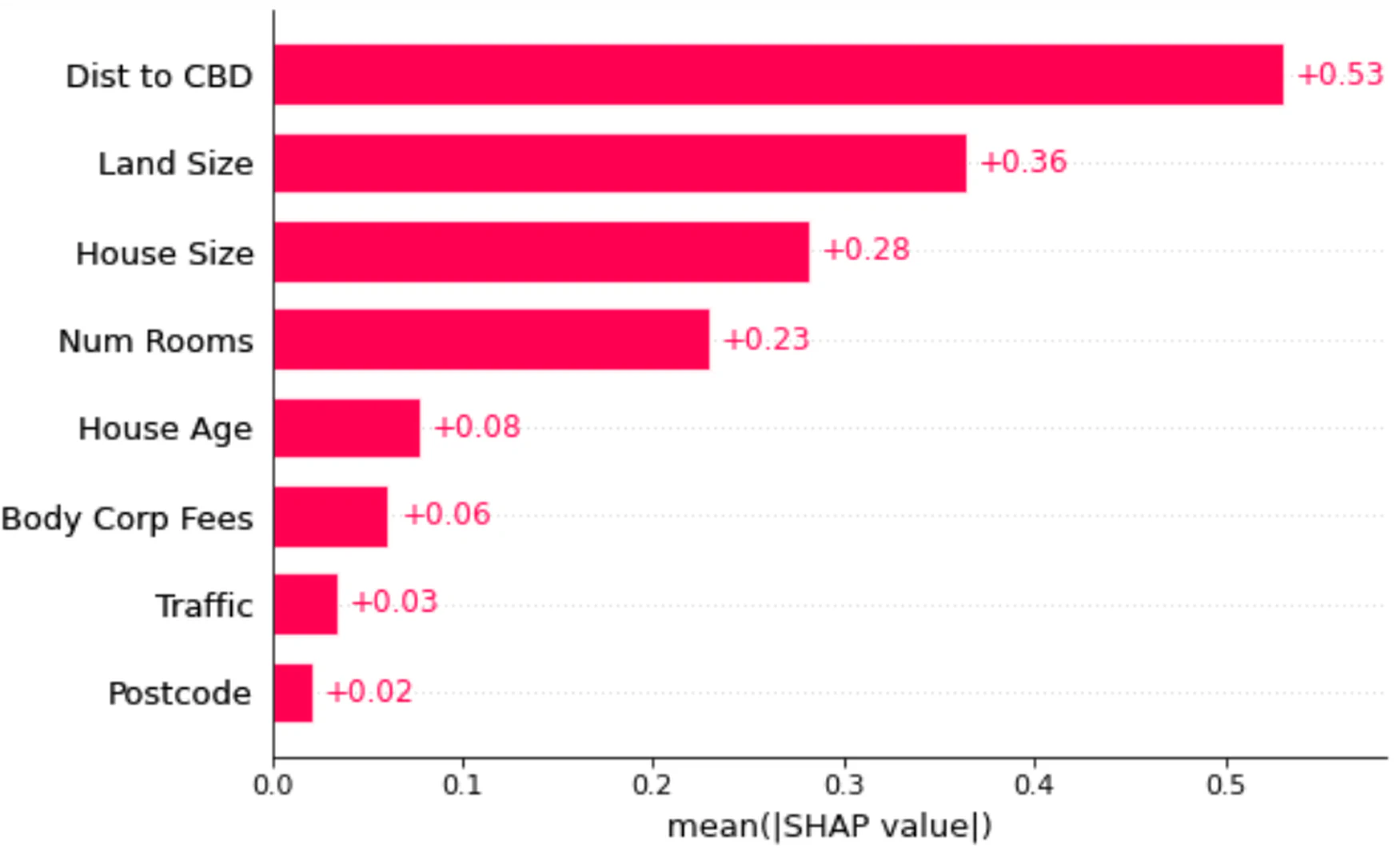

SHAP can be utilised in several different ways. Examples in the global context are illustrated by Figs. fig-shapbar and fig-shapbee. In Fig. fig-shapbar, feature importances as determined by SHAP are simply graphed as bar charts. Meanwhile, Fig. fig-shapbee shows a `beeswarm’ chart. Features are listed in order of determined importance. Each dot represents a single instance, and its colour indicates whether the feature had a low (blue) through to high (red) value; for example, large distances to the CBD are shown to have a negative impact on the output of house prices.

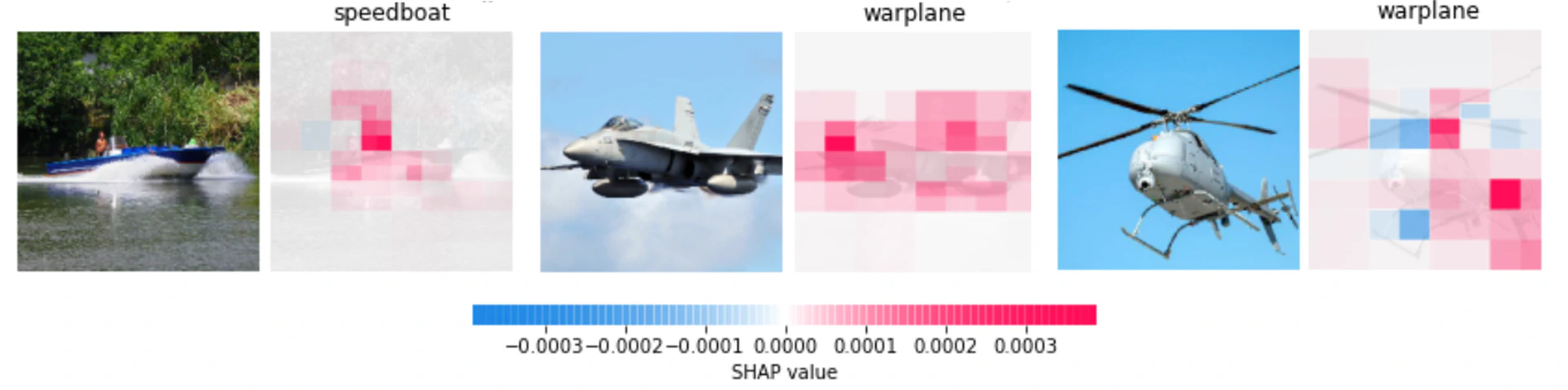

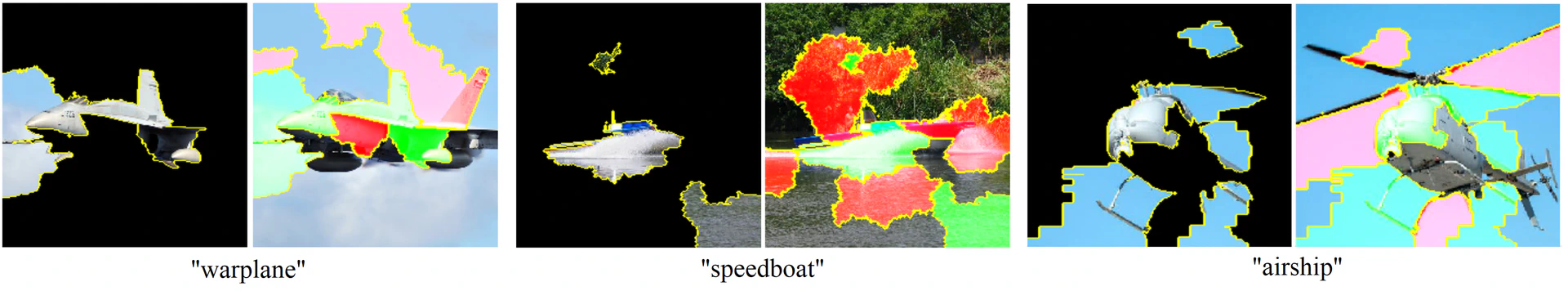

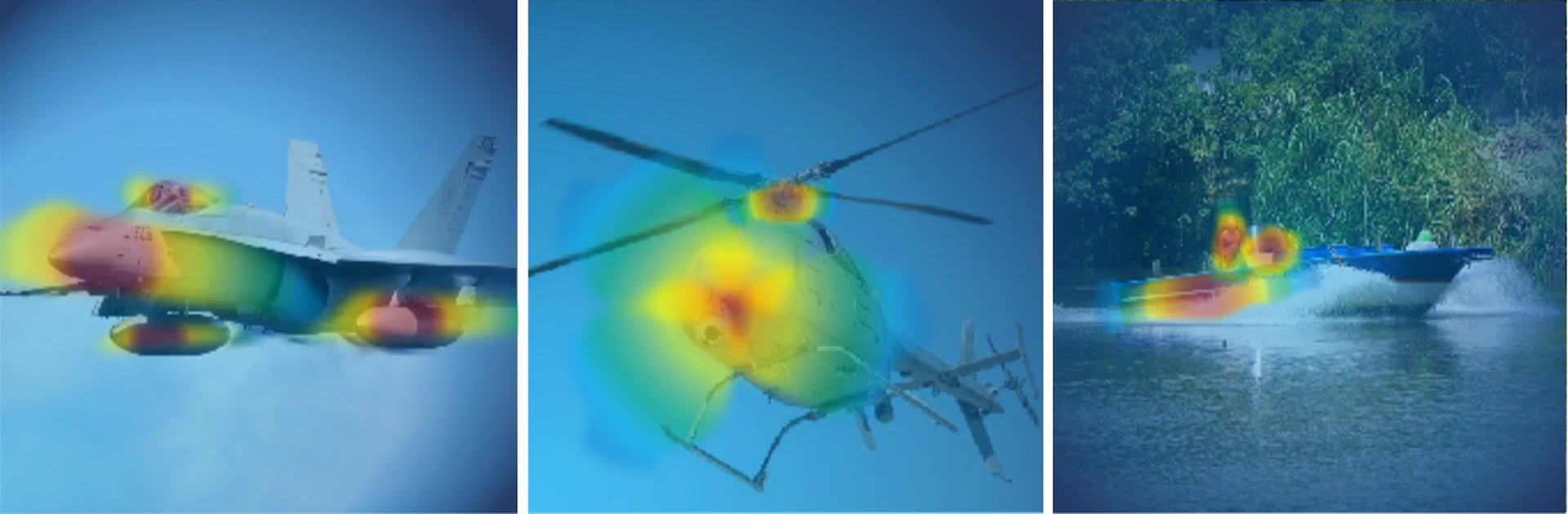

SHAP can also be used in a local context. For an individual prediction using feature-based data, force plots can be generated to explain which features contributes the final output, including the direction in which they contributed - as shown in Fig. fig-shapforce. For image-based data, heatmaps can be generated to illustrate the regions of an image that SHAP considers most important when making a classification. Examples of these are seen in Figs. fig-shapimage; these examples were generated using the pre-trained ResNet modelfor ImageNet data. The helicopter was incorrectly labelled as a warplane, but the presence of blue squares indicates a lower level of confidence. This transparency can help maintain trust even where predictions are wrong.

SHAP has been extremely popular in recent literature due to the range of clearly interpretable graphical methods that can be implemented to understand its results. SHAP has been used to explain feature importances in applications including mortality risk assessment, concrete creep prediction, plastic waste forecasting, wastewater processing analytics, electric vehicle route planning, and fault detection for rotating machinery. SHAP has also been applied for image explanations in a wide range of settings, including brain tumour diagnosis, offshore oil slick identification, and spam image detection.

Local Interpretable Model-Agnostic Explanations

Local Interpretable Model-Agnostic Explanations (LIME)is an explainability tool that does what its name suggests - offers model-agnostic explanations in a local context. It modifies a single data sample by altering features, and then fits a linear model to the pertubed dataset in the vicinity of the original data sample. This is then used to generate explanations that are accurate for the local context, but invalid in the global context.

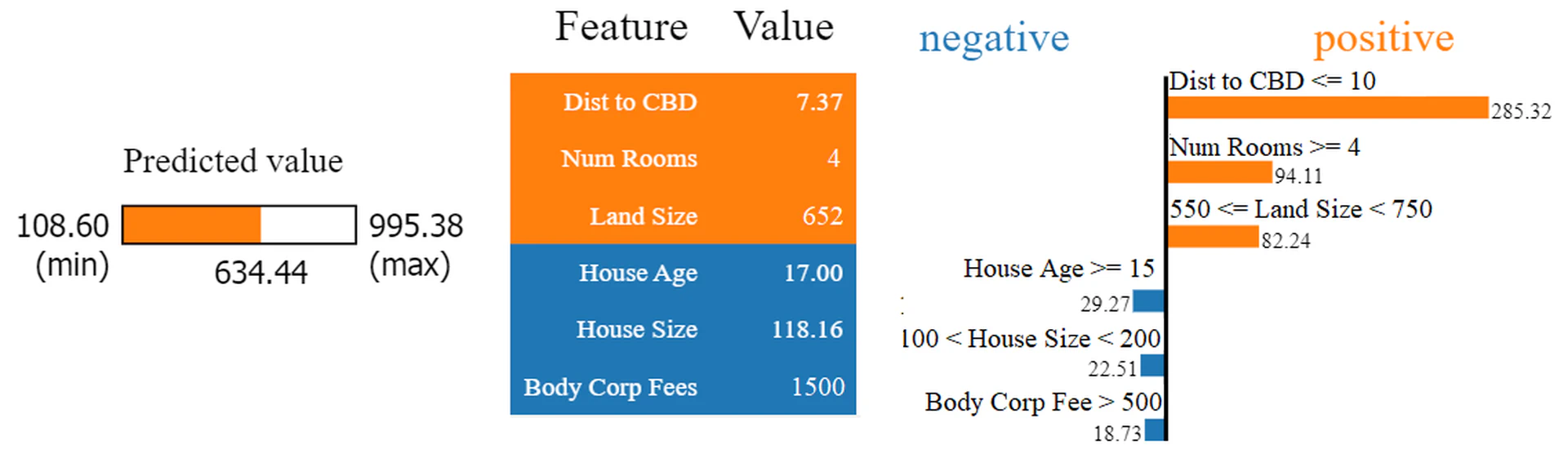

LIME returns scores for feature importances in the local context, and thus many graphical methods for interpretation resemble those seen with previous feature importance methods. Fig. fig-limefeature illustrates LIME’s graphical outputs when assessing a single prediction; it displays the overall prediction, a list of the most important features, and a `waterfall’ graph of the most important features. Features on the right pushed the predicted house price higher, while those on the left pushed the prediction lower. LIME can also be used on image and text data, highlighting regions of an image or words within a document that contributes to an overall output prediction, as shown in Fig. fig-limeimage. For each example in Fig. fig-limeimage, the visible section in the left image indicates the areas that contributed towards the prediction. The green and red areas highlighted in the right image are those that had positive and negative impacts on the prediction, respectively.

Alongside SHAP, LIME is one of the most popular explainability methods in the literature. LIME has been used on feature-based data for applications including activity recognition with wearable sensors, steel beam damage prediction, bankruptcy prediction, and stock market forecasting. It has also been broadly used for image and waveform classification tasks including heartbeat detection from electrocardiogram, COVID-19 detection in chest x-rays, and quality assessment for augmented synthetic aperture radar images. Finally, LIME has been used for text analysis tasks including depression identification from symptom self-reportingand identification of resource needs following natural disaster based on Twitter posts.

Class Activation Mapping

Class activation mapping (CAM) is a model-specific method for providing local explanations of image-based predictions made by CNNs. Classical CAM by inserting a global average pooling (GAP) layer after the final convolutional layer of a convolutional neural network (CNN), and weighting the GAP outputs to generate a heatmap. An example of outputs is illustrated in Fig. fig-camfig, with the red hotter' areas contributing the most to a prediction, through to blue cooler’ areas which contribute the least.

Classical CAM has also inspired a large number of variants in recent years, including Score-CAM, Gradient-weighted CAM (Grad-CAM), and HiResCAM. One recent paper proposed a CAM method using SHAP scores. The key purpose of each variant has been to produce visualisations that more faithfully illustrate a model’s decision making process. However, it is challenging to prove superiority of one method against another without extensive manual comparison of explanations by end-users; this has not been broadly conducted in the literature.

Variants of CAM have been utilised in a wide range of image classification papers, with applications including tree mapping for forest management, Alzheimer’s disease diagnosis from structural magnetic resonance imagery, manufacturing cost prediction from computer-aided designs, and guided rehabilitation training.

Other Techniques

In the above subsections, we have examined some of the most prevalent XAI techniques in the literature. However, there are many additional techniques that are gaining interest in the literature. One example is counterfactuals, a group of model-agnostic local explanation techniques that seek to explain what would need to be different for the outcome to have changed; these are particularly useful for binary classification tasks.

Anchors focus on building rule-based model simplifications that capture the key features and identify if-then rules that explain predictions. Rules are developed in the global context, and can then be used to explain local predictions. Anchors have also inspired related approaches such as the recently proposed CASTLE (cluster-aided space transformation for local explanations), which has been shown to outperform its predecessor on a number of databases.

Emerging AI systems such as generative AI have also posed challenges for explainability, as the underlying models have high complexity. In the natural language processing (NLP) context, one recent work adapted SHAP scores for the language context, highlighting text that indicated COVID-19 misinformation. Another novel method determined layer-wise relevance propagation (LRP) for each component of a transformer model, using this to generate heatmaps with higher accuracy than CAM approaches. This approach was also demonstrated to work in the NLP context, and has been applied to problems such as COVID-19 screening using chest radiography.

Responsible AI

What is Responsible AI?

Responsible AI (RAI) describes the principles by which AI systems are developed, deployed, and utilised in order to comply with legal and ethical standards. As laws and ethics vary from region to region, there is no singular definition of what makes AI responsible; however, many stakeholders have sought to define frameworks to guide responsible AI development.

In much recent literature, RAI has been used synonymously with related terms such as trustworthy AI, ethical AI, and fair or equitable AI. However, we suggest that RAI encompasses these concepts; that truly responsible AI must be trustworthy, fair, and adhere to ethical norms, amongst other characteristics. Based on our review of the literature on RAI and related terms, this section illustrates the importance of responsibility in AI and defines six essential pillars of responsibility.

Importance of Responsibility

AI systems are becoming increasingly prevalent in everyday life, used in applications ranging from media recommendation systems to self-driving vehicles. The dangers of irresponsibly developed AI in safety-critical applications are evident: if autonomous vehicles, weapon systems, or automated medication delivery devices fail to operate as intended, then lives can be lost or irreparably altered. It is essential that AI systems making safety-critical decisions are designed responsibly to ensure that they are reliable, accountable, and free from biases.

In other domains, the dangers of irresponsible AI design are less obvious but can be equally serious. One notable example is facial recognition, which is used by law enforcement, retail stores, and sporting venues. In each of these settings, facial recognition technology is primarily being used to identify perpetrators of crime or misdemeanours. However, researchers have found that commercial facial recognition tools have higher error rates when identifying women and racial or ethnic minorities compared to light-skinned men. Thus, innocent civilians belonging to marginalized groups are more likely to be wrongly criminalized due to mistaken identification. Additionally, predictive policing algorithms trained on images of previously arrested persons have been shown to exhibit racial biases. It is widely theorised that biases in facial recognition algorithms are largely due to data imbalance; minority groups are often underrepresented in data used to train commercial facial recognition models, and overrepresented in data used to train predictive policing models. Thus, irresponsible AI can reproduce and reinforce human biases in justice systems, contributing to a cycle of discriminatory arrest practices.

Facial recognition is just one example of where AI systems can have life-altering consequences. Medical AI diagnosis systems can fail to accurately diagnose conditions in gender, racial, and ethnic minorities, largely due to these groups being underrepresented in medicine and medical data until the last century. Hiring algorithms have also been shown to exhibit biases based on gender, ethnicity, age, disability, and sexuality due to human biases. Even where these characteristics are removed from a resume, NLP approaches can still discriminate against minority groups based on sociolinguistic patterns. Automated lending algorithms are also known to exhibit biases in terms of approval rates and maximum loan sizes for minority groups.

Aside from the potentially life-altering consequences of biased AI, there are also risks of physical, mental and social harm associated directly with some types of AI. Generative AI systems are increasingly popular in a range of fields, ranging from education to psychology. However, there are many recent and historical examples of generative AIs producing biased musings and hate speech, perpetuating misinformation, or responding inappropriately to mental health crises. There is also some evidence that human-chatbot relationships can become dysfunctional and lead to social or mental harm. Even state-of-the-art models such as ChatGPT are already being shown to have dangers; one recent work demonstrated that ChatGPT provided misleading information about mental health disorders and treatments, while another recent work suggests that ChatGPT provides “correct but inadequate” information about medical conditions.

Even seemingly innocuous recommendation systems can have dangers. Some studies have found that recommendation systems on some media platforms can lead to users being guided towards problematic content based on their previous media engagement. Amplification of misinformation and extremism has been found to be particularly prevalent on YouTube, creating `filter bubbles’ where users are predominantly recommended content that confirms their existing views. Research has found that the filter bubble can be easily burst through providing more balanced recommendations, however this is not being implemented by all platforms.

Overall, there are many ways that AI can cause harm - socially, financially, mentally, and physically. The potential of AI to cause harm is the key motivation for RAI. Each of the substantial risks outlined in this section can be mitigated through strategies to open the black box of AI in order to better develop, deploy and use AI responsibly - for the good of society and environments.

Pillars of Responsibility

In this section, we propose a framework comprised of six critical characteristics for responsible AI: fairness, robustness, transparency, accountability, privacy, and safety. Each of these pillars has been identified as critical to RAI based on our review of RAI policies and frameworks recently proposed in academia, industry, and governance, as highlighted in the following subsections. The identification of these key pillars then guides our discussions in Section 4, where we examine how XAI is foundational to all pillars of RAI.

To overview the prevalence of each pillar in the literature, Table tab-raipillars summarises the frequency with which various sources include a particular pillar in their own responsible AI framework or guidelines. In terms of academic sources, we focus on four recent frameworks published in reputable journals. In terms of government and political sources, we have considered AI frameworks and guidelines developed by government and political bodies in eight diverse regions - namely Australia’s Commonwealth Scientific and Industrial Research Organisation (CSIRO), the United States’ (US) National Institute of Standards and Technology (NIST), China’s Ministry of Science and Technology (MOST), India’s INDIAai, Japan’s Ministry of Economy Trade and Industry (METI), Saudi Arabia’s Saudi Authority for Data and Artificial Intelligence (SADAI), the United Kingdom’s (UK) Office for Artificial Intelligence, and the European Union’s (EU) High-Level Expert Group on Artificial Intelligence (AI HLEG). Lastly, we considered RAI principles from six industry sources that are prevalent in the AI space, namely Google, Microsoft, Meta, Samsung, IBM, and Amazon.

Quantifying the number of considered sources that included each pillar in their respective RAI frameworks or guidelines. A total of 4 academic sources, 8 government and political sources, and 6 industry sources were consulted. Academic sources were papers recently published in reputable journals, government and political sources were selected to be representative of a wide range of global regions, and industry sources were selected based on their prevalence in the AI domain.

Table Label: tab-raipillars

Download PDF to view tableFairness

Fairness of an AI system ensures that the model treats people and scenarios equitably, without discrimination. Several recent works on RAI have identified fairness as a key attribute of responsibility. Another work by Mehrabi _et al._conducted a focused survey on bias and fairness in AI, arguing that fairness is the most critical issue facing successful development and deployment of AI systems.

Four of the considered industry sources specifically named fairness as a fundamental principle of RAI. Of the two that didn’t explicitly name fairness, the concept is still present - Google’s related principle is to “avoid creating or reinforcing unfair bias”, while Samsung discusses fairness under their principle of “Diversity & Inclusion”.

Government sources from around the world unanimously agree that fairness and non-discrimination is compulsory for AI systems. However, it is worth noting that not all sources define what fairness means in their regional context.

Overall, the principle of fairness is perhaps the most agreed upon of all the pillars of RAI. Fairness is explicitly mentioned by all consulted sources across academia, governing bodies, and industry.

Robustness

Robustness of an AI system ensures that the model is accurate across all potential use cases, and resilient against malicious attacks. This concept is perhaps the most familiar to AI developers and users; there has long been significant value placed on ensuring that AI systems are robust, reliable, and resilient.

The pillar of robustness has been identified by most academic sources. One work directly names robustness as a key principle of RAI, focusing on robustness against adversarial attacks. Meanwhile, another work identifies the related concept of “technical robustness and safety” as critical; they suggest that a system meets this criteria if it performs as expected, is resilient against attacks, and can recover from failure without causing harm. Additionally, the related concept of reliability is mentioned in one work as a means of ensuring that AI is understandable.

In industry, robustness is widely considered but terminology varies. Meta, IBM, and Amazonall name robustness as a key principal of RAI, while Microsoft uses the terminology of reliability. Googledo not directly discuss reliability or responsibility, but under the principle of ensuring safety they mention the need to “avoid unintended results that create risks of harm”. This is somewhat related to robustness, but does not cover all elements of the concept. Interestingly, Samsungdo not discuss robustness, reliability, or accuracy in their RAI framework.

Most government and political bodies note the need for robustness in RAI systems. Robustness is noted as key for responsibility by government bodies in the US, EU, Japan, and Saudi Arabia. Those that did not discuss robustness instead noted that the strongly related concept of reliability is critical for responsible AI systems.

Overall, robustness and reliability are considered across academia, industry, and governing bodies to be essential for the development of ethical and responsible AI.

Transparency

Transparency is the principle of ensuring that the decisions made by an AI system are able to be described and reproduced. For transparency to be achieved, descriptions should be accessible and understandable to users, developers, and other stakeholders.

The principle of transparency in RAI is largely agreed upon by academic, government, and industry sources. In terms of academic sources, three works name transparency as an essential pillar of RAI. Another work also discussed the need for transparency in the context of the overarching principle of “intelligibility”. Each of these works note that transparency also supports the identification of issues within a model that might cause failures or biases.

In industry, transparency is often mentioned but individual definitions vary. Microsoft, IBM, and Samsungeach note transparency as a key RAI principle in the sense of a model being understandable to users, however Samsung stipulates that this applies only where it does not compromise corporate competitiveness. Amazonstate that models should be transparent to the extent that users can decide whether or how to use them, while Meta’s policyfocuses on transparency around how data is used. Google’s stance on transparency is vague, mentioning that AI systems should feature “relevant explanations” and “provide appropriate transparency and control over the use of data”, but this is in the context of accountability and privacy principles.

Government agencies are in consensus regarding transparency - all eight considered government and political bodies noted transparency as a critical feature of responsible AI. Most noted the need for transparency primarily for supporting auditability and for ensuring that end users know how decisions are being made about them by an AI system.

Accountability

Accountability of an AI system ensures that a model can justify its decisions. An AI system is accountable if its functionality and decisions can be explained to users, governing bodies, and other stakeholders to ensure compliance with laws and ethical standards. Accountability also means that AI should be subject to feedback and appeals from users and governing bodies, with mechanisms for remediation in place where issues arise.

In terms of academic literature, accountability is discussed extensively in all considered works. One work notes that the principle of transparency can support accountability, however does not guarantee it. Academic literature emphasises the need for AI systems to be made accountable to ensure that they can be made liable for their decisions.

Industry sources also commonly include accountability in their principles, but implementations vary. Meta notes accountability and governance as critical for RAI, with internal self-governance measures in place along with processes for user appeals. Google explicitly states that RAI should “be accountable to people”, further clarifying that this includes providing explanations and opportunities for feedback and appeal. Conversely, Microsoft states that people should be accountable for AI systems. Amazon mentions the related principle of governance as key for RAI, but provides no clear guidelines on how this should be implemented. IBM discusses accountability and governance extensively in their stance on AI ethics, noting that governance to ensure accountability should occur both internally and externally; however, accountability is not one of their five primary principles of RAI. Samsung does not include accountability or governance in their RAI framework.

Accountability is also considered as a key principle of RAI by all government and political bodies considered, but descriptions of accountability vary. RAI principles from governing bodies in the US, China, India, Japan and Australia focus on holding people accountable for AI systems. Meanwhile, holding AI itself accountable is the focus of accountability principles proposed by political bodies in the EUand the UK. RAI principles from Saudi Arabia’s SADAI considers accountability of both people and technology, indicating that people across the AI supply chain should be accountable for AI systems, as should the system itself. RAI criteria from the US, UK, and Japanconsider transparency and accountability as a combined principle of RAI, while all other bodies consider accountability as an individual principle.

Overall, accountability is another prevalent pillar of RAI in the literature. It is clear that AI systems must be accountable for their decisions in order for systems and developers to meet legal and ethical requirements.

Privacy

Privacy in AI systems relates to ensuring that sensitive information used in training, validating, testing, or using the model remains private. An AI system should not be able to be `reverse engineered’ to reveal private information about persons in the training data, nor should it be manipulable to reveal sensitive information about a person through malicious inputs.

Privacy is named as a key principle of RAI by all considered academic sources. The definitions of privacy in all instances refer to ensuring that sensitive data are not revealed. Emphasis is broadly placed on ensuring that data is protected at all stages of the AI pipeline.

Similarly, all considered industry sources mention the concepts of privacy, data protection, or security in their RAI principles. Implementations of privacy vary somewhat between companies. Meta specify that their privacy approach requires them “assess privacy risks that involve the collection, use, or sharing of people’s information”, however they do not specify how data sharing or collection will be disclosed. Conversely, Google notes the need for consent and notice of how data is used or shared, and IBM notes the need for at least notice. Policies from Microsoft, Amazon and Samsung focus on preventing data breaches.

All considered government and political sources also noted the need for privacy in RAI. Privacy is directly named as an RAI principle by six bodies, while the US NIST used the terminology `privacy-enhancing’. The UK government emphasised the related concepts of data protection and security. All definitions focused on preserving individual privacy with strong emphasis on data security. The US NIST’s documentationalso notes that “privacy-related risks may overlap with security, bias, and transparency,” highlighting the challenges of ensuring transparency and fairness without compromising privacy.

Overall, the principle of privacy is well agreed upon - all considered academic, government, and industry sources note it as essential. However, it is worth noting that there are varied descriptions of how privacy should be considered amongst industry sources.

Safety

Safety of an AI system is focused on ensuring that it does not harm people, environments, and societies. The need for safety to prevent harm is clear in a range of applications, from mental health chatbots to autonomous driving systems.

All considered literature sources discussed safety, to varying degrees. One workconsidered the principles of robustness and safety together - arguing that a robust system would prevent harm by preventing failures. Other works discussed safety separately, with one noting the need for real-time protectionsto mitigate safety issues should an AI fail.

Industry also broadly agrees that safety is a critical issue. Metaand Microsoftboth group safety with either robustness or reliability in their frameworks. Guidelines presented by Samsung do not explicitly name safety, but do discuss limiting potential harm to humans, environments and ecosystems. Google states that AI should “be built and tested for safety”. Interestingly, neither IBM nor Amazon include safety in their guidelines.

All government and political bodies discuss safety. The US’s NIST names safety as an attribute of RAI, while guidelines from China, Japan, and the UKalso discuss safety or minimisation of harm. Australia’s CSIROaddresses safety under the principle of “Do no harm”, stating that civilian AI systems should be designed to minimise harm and negative outcomes. Government and political bodies from India, the EU, and Saudi Arabiaall group safety with robustness or reliability, again highlighting a link between these two pillars.

Overall, safety is widely agreed to be critical for RAI. Interestingly, many sources grouped safety with robustness or reliability. However, an AI model could be robust without being safe if it is designed to do harm. As such, we argue that safety should be considered as a discrete pillar of RAI.

Summary

Our review of current academic, industrial, and government frameworks and policies on RAI has revealed that there are six critical pillars for RAI: Fairness, Robustness, Transparency, Accountabiilty, Privacy, and Safety.

Interestingly, we found that government and political bodies are leading the push for responsible AI. In all instances, government and political bodies had substantial guidelines in place for the development of responsible and ethical AI. Additionally, government bodies from a diverse group of regions all agreed on these six fundamental principles of RAI.

Overall, this section has highlighted that these six principles are critical in ensuring that AI is developed, deployed, and used responsibly. However, a significant question remains: how do we address these pillars to create responsible AI systems? In the next section, we propose an answer: the use of XAI to underpin truly responsible AI.

Explainable is Responsible

In this section, we investigate the use of explainability to enable responsibility. In particular, we have presented strong evidence illustrating that explainability is foundational for each of the six pillars of responsibility identified in the previous section. Through our analysis, we determine that XAI is foundational for RAI; it would not be possible for RAI to exist without it.

Fairness

In order to achieve fairness in AI systems, developers must first be able to identify when bias or discrimination occur so that the problem can be rectified. Explainability techniques are highly suited to revealing a model’s biases, both at global and local levels. Consider a home loan example: if explainability tools such as SHAP or LIME are applied and reveal that a person’s gender was the largest contributor towards an AI system deciding to decline their home loan application, then this reveals a clear bias that must be corrected. This intuition has led to explainability being broadly considered in the literature for promoting development of fair AI systems.

One recent work examined the fairness of AI models including RF, KNN, and DTs trained to predict recidivism in juveniles convicted of a crime. Their analysis used LIME scoring alongside accuracy and fairness metrics, comparing models trained with only static inputs (those that cannot be changed, such as sex and nationality), only dynamic inputs (those that can be changed, such as social support and school performance), and a combination of both. Using LIME scoring, they identified that AI models made decisions largely based on static variables where these were available; the models were learning to be biased. Further analysis showed that disparity metrics also increased where static variables were included - specifically, the likelihood of someone being falsely identified as recidivist was significantly higher amongst men and foreigners. A trade-off between accuracy and fairness was identified, with the feature encoding strategy of learning fair representations (LFR) identified as a candidate for mitigating bias. In this instance, the explanations provided by LIME were essential in revealing the bias of all developed AI models, and thus enabling bias mitigation strategies to be applied and assessed.

In the medical domain, one recent work proposed a framework based on their custom XAI tool for auditing medical diagnostics systems. Their proposed XAI tool was inspired by both LIME and anchors, generating a local `neighbourhood’ of pertubations, and thereafter extracting rules to provide as output explanations. The overall framework initially calculates disparity scores for different groups (including groups based on sex, ethnicity, and insurance status) based on the Wasserstein distance between predictions and the ground truth. Their custom XAI tool is then utilised to explain system misclassifications. They suggest that this allows for assessment of fairness at two stages: firstly, by visualising the disparity scores and assessing whether any one group suffers from higher disparity than another, and secondly, but inspecting local explanations of misclassifications to assess whether the model demonstrated biases in making those predictions. Overall, their proposed system supports fairness in an auditable way.

In another recent work, an interactive and explainable tool was developed for loan decision making. The prototype was based on a logistic regression model with interfaces that explain feature importances at global and local levels based on model weights, as well as enabling comparison to similar applications. The interactive component of the prototype allows non-technical end users to mark a decision as fair' or not fair’, and adjust weightings on different features to see if a decision changes; these labels and suggested weight changes were then utilised to adjust the model. Prior fair' and not fair’ labels are incorporated into explanations; users can see how many times the model’s predictions were labelled as fair' and not fair’ overall, as well as seeing whether similar applications were deemed to be fairly assessed by the model or not. Additionally, when the suggested weight changes were incorporated into the model, it was found to increase fairness based on disparate impact (DI) assessment. Overall, this is an interesting approach that shows promise in incorporating human feedback from end-users to improve the fairness of models, however the authors did caution that there is always a risk of people introducing their own biases to the model.

Another work that sought to enable human `fairness debugging’ through a proposed system called Gopher, which seeks to quantify and explain model bias, utilising an approach of data subset removal and fairness metrics to analyse which types of data are causing biases and make suggestions on how to correct these biases. Explanations are made in the form of lists of patterns (groups of one or more co-occurring features) responsible for biases, with additional explanations provided as to possible updates or data removals that would reduce the bias of the model. The effectiveness of this approach was demonstrated on multiple well-known databases.

Overall, the use of explainability for the purpose of supporting fairness has become established in the literature. Several works used explanation tools like LIME and SHAP to inspect whether particular features are being unfairly emphasised by a model. Meanwhile, several works propose systems that instead assess and explain fairness to developers and end-users, with the intention of receiving feedback and iterating the model to improve fairness. It is clear from the literature that XAI strongly supports the fairness principle of RAI.

Robustness

Robustness has been a key focus for many works utilising explainable AI. Explainability can help to reveal whether a model behaves consistently with similar inputs, as well as being robust against adversarial attacks.

In one recent work, a novel explainability technique entitled Similarity Difference and Uniqueness (SIDU) was proposed for producing heatmap explanations of image data that are robust against adversarial attacks. SIDU extracts the final layer of a CNN and calculates similarity and difference masks relatingto different feature applications. These are then fused to generate a final heatmap. In their experiments, SIDU produced explanations that were strongly aligned with human-grounded evaluation via eye tracking, outperforming prevalent literature models including Grad-CAM. To assess robustness, noise was introduced to input images, with CNN model predictions then explained using SIDU and competing literature models. SIDU explanations remained consistent as noise levels increased, while Grad-CAM explanations rapidly declined with increased noise. Their work demonstrates that explanations can be used to assess whether a model is robust against noise, and that explainability tools themselves can be enhanced to improve robustness.

Another recent work examined the relationship between robustness and explainability from a different angle, exploring whether robust models are inherently explainable. Their study used clean and adversarial data to train models for improved robustness, as well as training equivalent non-robust models with only clean data. They then examined how closely salience maps produced by the models matched target salience maps. Across two open-access databases, it was shown that salience maps for the robust models were more closely aligned with target salience maps than those of the non-robust models. This indicates that models designed to be robust have more inherently interpretable salience maps, potentially eliminating the need for post-hoc heatmap explanations where robustness is achieved.

Explainability has also been used to quantify robustness, with one study proposing a robustness metric based on counterfactual explanations. Their proposed Counterfactual Explanation-based Robustness Score (CERScore) operates on the principal that when comparing two AI models, the model where counterfactuals are further away on average from the input instances is the more robust model. CERScore is also model-agnostic and can be applied with no knowledge of the underyling architecture, a significant advantage over previous benchmark robustness metrics. Through a series of experiments on established AI models, the CERScore authors demonstrated that their proposed metric produced robustness scores consistent with literature benchmarks, indicating that explainability can be used to quantify robustness.

XAI has also been used to fine-tune models to improve their robustness. In one recent study, a DNN model was trained to identify malware using adversarial training and XAI-based fine tuning. Following initial adversarial training, SHAP scores were calculated for the considered input features. A new database was then developed using input features and their corresponding SHAP scores to create a second adversarial database. This was then utilised to train the final DNN model. Testing showed that the XAI-based fine-tuning approach improved the accuracy of the model on unseen data, compared to using solely traditional adversarial training approaches.

Overall, recent literature has demonstrated that explainability can be utilised both to quantify robustness and to provide robustness. Both of these areas of robustness are critical to RAI. Quantification of robustness is a useful metric for RAI, as it allows comparison between candidate models. Additionally, the use of XAI to make models that are more robust is essential to RAI, as it supports development of models are resilient against adversarial attacks and invalid inputs.

Transparency

Transparency is perhaps the most self-evident characteristic of RAI that XAI can provide; the key purpose of XAI is to open the black-box of AI to improve transparency and comprehensibility. To achieve transparency, models must provide explanations that are interpretable and understandable to end-users. Transparency may include clarifying the inner workings of a black-box model, or explaining how a model made a decision in a way that users find acceptable and meaningful.

Several recent studies have sought to understand whether XAI explanations are transparent, interpretable, or understandable to end-users. In one such study, a counterfactual-based explanation strategy was developed to explain decisions made by a chest X-ray CNN classifier to expert radiologists. To assess the transparency of their approach, they utilised survey questions and free-text responses to assess whether explanations improved the understandability and trust in their proposed XAI system, compared to no explanation, saliency maps, and cycleGAN. Based on a 5-point Likert scale, experts rated the counterfactual explanations the highest for understandability and justifiability. Additionally, all explainability tools trialled were rated significantly higher for understability than the no-explanation case. Several free-text comments also verified that the experts had a better understanding of the AI model following counterfactual explanation.

In another study, text-based explanations were generated by analysing the decision pathways of a DT model trained to assess behavioural anomalies and cognitive status in a smart home residential care setting. Clinicians were asked to complete a Likert scale survey to rate their experience with the provided explanations. Questions about transparency were rated highly, with all clinicians agreeing that explanations were easily understandable and essential to understanding how the model classified anomalies for individual patients. Most clinicians reported that the explanations would assist them in determining whether assessments made by the model were correct.

In a study focusing on the education sector, a RF model was developed to make decisions on admissions to graduate school, with the decisions then explained using LIME, local SHAP, global SHAP, and PDP. University students with a range of AI experience were then surveyed using a Likert scale approach to determine whether explanations improved the understandability of the model. PDP and LIME were both found to significantly improve understandability amongst both AI novices and students with some AI experience. SHAP showed some improvement in understandability, but to a lesser extent. However, it is unclear which graphical format was used to present SHAP scores, so the chosen method may have contributed to the lower ranking.

Another recent study sought to understand what level of transparency gave users the most confidence in an autonomous vehicle’s driving decisions. They developed a confidence metric derived from case-based reasoning, which utilised prior situations to determine an autonomous vehicle’s confidence in a current situation. The model’s confidence in a given situationwas presented to a non-expert cohort, using several different text structures to explain the confidence score. Survey participants were asked to rank which text structure they found most useful. Results of the survey found that the best-perceived explanation included the confidence level along with general information about prior situations. Explanations that provided confidence in addition to current or future situation information were also well received. The worst-ranked explanation was one that provided the confidence level only, with no transparency about how that confidence level was reached. These results indicate that transparency via text explanations of model confidence were critical to improving acceptability of the proposed AI approach.

Overall, recent literature that has sought to quantify transparency, understandability, or interpretability of AI systems have indicated a strong link between explanations and transparency. A wide range of explanation approaches have been examined by the literature, including text-based explanations, visual explanations, and feature-based explanations; each has been shown to improve transparency to some degree. Explanations have also been shown to support transparency for a wide range of users, with varied levels of domain and AI expertise.

Accountability

Accountability requires AI models to justify their decisions to ensure that they can be audited and assessed for compliance with legal and governance requirements. This principle is related to transparency, as models must be able to explain their decisions to be accountable.

One sector where accountability is critical is finance. In one recent study, an auditable pipeline based on various XAI techniques was proposed to provide transparent and accountable credit scoring. The authors note that logistic regression is popular in credit risk scoring due to the inherent explainability of these models that has lead to acceptance amongst regulators, however note that logistic regression does not perform as strongly as advanced AI techniques for this task. To address this issue, they proposed a pipeline that begins with global explanations using feature permutation importance, followed by assessment of the most important features with PDP and ICE plots. From there, local instances are then able to be explained using LIME, SHAP, and another variant of SHAP. They indicate that this pipeline explains advanced AI algorithms in a manner that satisfies prevalent regulatory and legal requirements for transparency, accountability, and privacy.

There is also a need for accountable AI systems to establish liability in legal cases. One recent work identifies XAI as highly suitable for providing a `forensic toolset’ that can help establish liability of AI systems in a range of settings. In particular, they illustrate a case study where an autonomous vehicle changes lanes to avoid a collision with car A, ultimately causing a collision with car B. The authors demonstrate that the use of XAI tools can help establish why the AI model made the decision, thus establishing factual and legal causation as is required by many common law systems. Ultimately, this enables legal systems to hold AI systems accountable for their actions, and thereafter to determine whether the AI system in question or another party is at fault for an accident. It is suggested that LIME, ICE, and PDP are critical XAI tools for understanding the global model, while SHAP is essential to understand individual decisions; this is consistent with suggestions made by the credit scoring study discussed above.

In the medical sector, an auditable pipeline was recently developed for prediction of oxygen requirement in COVID-19 patients, based on GradCAM explanations of features identified in chest x-ray input images. Their technique included the development of model-derived atlases for similar feature patches and similar overall predictions, based on areas that GradCAM highlighted as important. When a prediction is made, the clinician is presented with 8 similar patches from the atlas alongside the test patch, a GradCAM heatmap of the overall x-ray, a table comparing the test x-ray with several similar x-rays both visually and with feature-based similarity scores, and finally a table showing the model’s confidence in each feature it detects. Clinicians can also select feature labels of interest to receive further characterization of the given disease. The use of example-based explanations and numerical scores for confidence makes the decisions made by AI highly auditable and accountable, both to expert clinicians and regulatory bodies.

Overall, it is clear that accountability is critical in a wide range of sectors to ensure that AI models can be held to the same standards as humans. The literature indicates that XAI can provide an appropriate level of accountability and auditability across several key sectors, which contributes significantly towards the development of RAI systems.

Privacy

Privacy is critical for AI systems, as no sensitive information should be accessible to users of an AI system. Achieving privacy while maintaining transparency has previously been identified as a key challenge in developing responsible AI systems.

In standard use, most techniques for global and local explanation are inherently privacy-preserving as they do not explicitly reveal identifiable information about data in the training set. However, limited recent studies have identified that targeted adversarial attacks on CAM-based XAI models have a nominally increased chance of success in breaching privacy through training image reconstructionor model duplicationcompared to equivalent models without explanations.

Critically, these attacks were successful against both explainable and non-explainable models; thus data protection and privacy-preserving techniques are essential for developing responsible AI. However, such techniques make AI more opaque and thus are in conflict with transparency and accountability principles for RAI. This brings us full-circle back to XAI: several recent works have demonstrated that XAI techniques continue to provide robust and meaningful explanations where data protection and privacy-preserving techniques have been applied, thus supporting privacy to be incorporated into RAI systems without compromising on other RAI principles.

In one such work, federated learning was applied to develop a model for heart arrhythmia classification from electrocardiogram (ECG) signals before explainability was applied. Federated learning preserves privacy by enabling individual insitutions to train local models, with these models then shared to a central node for aggregation. The aggregated model is then returned to institutions for use. This enables the development of more robust models without direct data sharing, and thus protects against data inversion attacks. In the ECG study, federated learning was shown to improve accuracy in detecting key ECG features and classifying arrhythmia compared to local models, even where signals were noisy. Explanations were provided using GradCAM, providing heatmaps of the most important regions in a signal. Model duplication is still feasible, however reconstruction of local models is unlikely if weight sharing is protected.

In another work, swarm learning was applied to develop a model for cancer diagnosis from histopathology slides. Swarm learning is similar to federated learning in that models are trained locally first, however aggregation of models occurs by sharing trained local models in a peer-to-peer structure; this distributed and decentralized architecture ensures that the failure of a single node does not lead to failure of the entire network. The cancer histopathology work demonstrated that this enabled the development of swarm models that exceeded the performance of local models across all criteria of interest. To explain model predictions, heatmaps were generated to illustrate regions of interest that lead to a prediction. As with federated learning, this approach is robust against data inversion attacks as the final aggregate model has no knowledge of training data. The decentralized nature of swarm learning also ensures higher robustness in the event of a node failure.

Another approach to privacy preservation is the notion of differential privacy, where data is anonymized by injecting noise. One recent study explored whether this approach had a significant impact on SHAP values, using various open-access databases, data pertubation methods, and AI models. Their findings showed that SHAP values were minimally affected by data protection techniques. Simple linear models were more affected than more advanced models such as support vector regression, however overall still provided meaningful explanations. Based on their findings, the authors concluded that data protection and explanation are not mutually exclusive.

Differential privacy was also considered in an image context by a recent work that used image compression to remove spatial and edge information in order to privatise data prior to training. Their work considered three image-based use cases: chest x-ray classification, cervix type classification, and glaucoma detection. Their work indicated that high accuracy could still be achieved with compressed images. Salience map explanations were also able to provide meaningful information about relevant areas of an image without access to the original uncompressed image. Overall, this approach improves privacy as original images are not used for model training.

Overall, XAI enables privacy in an indirect manner. AI systems are vulnerable to many adversarial attacks, and privacy-preserving techniques can compromise RAI principles such as transparency and accountability. XAI thus supports privacy by enabling privacy-preserving learning and data protection techniques to be implemented without violating the other key requirements of RAI.

Safety

Safety of humans, environments and societies is critical for AI systems, particularly in high-risk settings such as autonomous driving and healthcare. As XAI helps reveal the inner workings of an AI model, it can similarly assist in revealing safety risks. Additionally, XAI systems can be developed to sit above existing AI systems to provide safety in terms of detecting network intrusion or other attacks, protecting the overall pipeline from adversarial attacks and thus supporting RAI.

In one recent work, the need for XAI in ensuring safety in healthcare systems was examined through a case study on predicting when a patient should be extubated from mechanical ventilation in intensive care. In this application, safety is of the utmost importance as early extubation can lead to emergency re-intubation, while late extubation increases risk of complications including pneumonia. Their work identified that XAI techniques are useful for verifying safety in several stages of AI development. In particular, they highlight that feature importance and counterfactual example methods support safety in AI. Feature importances can allow clinicians to assess whether the model utilised features that meet clinical expectations, improving confidence that the model makes decisions that will benefit the patient. Meanwhile, counterfactual explanations can be used to determine how many features would need to change (and by how much) in order to change the decision. This allows clinicians to evaluate the confidence of the model in its decision, which in turn supports patient safety as clinicians can make informed decisions about whether the model can be trusted in a particular instance or whether further investigation is needed.

In the autonomous driving domain, XAI has been applied in one recent work to compare the performance of three AI models classifying traffic signs where camera failures such as broken lens, ice coverage, or dead pixels occur. Their study evaluated the performance decreases for several types of lens failure, identifying that the model based on AlexNetwas the strongest performer across most lens failure modes. To understand this further, LIME heatmaps were applied to traffic sign classification outputs. This illustrated that predictions made by the AlexNet model used features distributed over a larger section of the inputted image compared to the other models. The authors hypothesise that this leads to the higher camera failure tolerance of AlexNet, as the features of interest are less likely to be obscured by the defect if they are widely spread throughout the image. In this context, XAI supports safe autonomous driving by enabling identification of the traffic sign model that performs most reliably under adverse conditions.

Another recent work reviewed the field of goal-driven AI systems (GDAIs), which include robots and other agents operating independently to fulfil their individual goals. In particular, they examined the need for explainable GDAIs in safety-critical applications to ensure that GDAIs do not cause harm to humans or environments. Their work identified that communication of actions via explanations such as CAM, SHAP, and textual description are essential for communicating the decisions of GDAIs. Through their analysis, the authors identify explanations support human-computer interaction, and thus support safety by enabling early detection of problems and thus early intervention to prevent failures, which is particularly crucial for safety-critical applications.

XAI can also be used to create a layer of protection against adversarial attacks on digital systems that employ AI. In one recent study, explainability techniques were utilised as part of an intrusion detection system for recognising attacks on Internet of Things (IoT) networks. Using a pipeline including rule-based explanations, LIME, and SHAP, the proposed intrusion detection system accurately detects intruder or compromised nodes on the IoT network, with global and local explanations provided to show how the decision was made so that human experts can decide on a response path. The use of XAI in this context helps to protect any AI models operating on the IoT network data from attack, as rapid and interpretable detection of intruders greatly reduces the risk of the IoT network being poisoned by malicious data injection.

Overall, explainability strongly supports safety across diverse AI applications. XAI techniques improve safety by enabling human experts to review decisions and decide whether they were made reasonably. Additionally, XAI can be applied on top of other AI systems to detect security risks such as network intrusions, reducing the risk of adversarial attacks. XAI is thus an important tool for ensuring that the RAI principle of safety is met.

Lessons Learned

Through our exploration of the literature, it was found that XAI is foundational to each key pillar of RAI. The relationship of XAI to the principles of transparency and accountability was found to be straightforward. The primary intention of explanation is to make models more interpretable, and several works have established that users perceive XAI to be more transparent, understandable, and trustworthy. Transparency is also the first step towards accountability, where AI models need to be able to explain and justify their decisions to relevant stakeholders. Works to date have established that XAI is essential in making AI accountable and, where necessary, liable for its actions in critical sectors from autonomous driving to healthcare.

Accountability is also connected to the principles of fairness and safety. In our exploration of the literature, it was found that XAI tools can highlight societal biases learned from historic data. Human-in-the-loop fairness debugging was also discussed, with human users able to provide feedback on potential biases based on model explanations, as a form of fairness accountability. Human-in-the-loop debugging was also considered in the safety context, with explanations provided by goal-driven AIs to human safety auditors. In turn, humans could provide feedback to the AI models that would improve the safety and failure tolerance of the systems. Similarly, safety-critical applications in healthand autonomous drivingwere supported by XAI through the provision of explanations to domain experts, who could then assess how and why the model is making a decision and determine whether that decision is appropriate.

Safety is also tied to the principle of robustness; a model that is less likely to fail, is less likely to cause harm in safety-critical applications. XAI was found to support the RAI principle of robustness in several direct and indirect ways. In particular, XAI has been used quantify robustness, enable robustness comparison between models, and support fine-tuning of models to enhance robustness. Interestingly, one study also identified that robust models have a higher degree of inherent explainability, indicating a strong relationship between these principles.

In our analysis of XAI robustness literature, one study highlighted that certain explanations remain accurate in the face of adversarial attacks that utilise perturbed inputs to compromise a model. The resilience of XAI to adversaries was considered by several works seeking to improve privacy. In some works, it was found that XAI could still successfully explain decisions where user privacy was protected by perturbing the dataset such that adversarial attacks would not be able to unveil genuine and identifiable information. Another key approach for preserving privacy was distributed learning, both in federatedand swarm learning structures, where local models are trained on private data, shared securely, and then aggregated into a final model for the end-user. In this context, XAI supported privacy indirectly by enabling models to continue meeting other RAI requirements whilst using an otherwise opaque method of learning.